Lucene2: Adding, Deleting, Updating, and Querying an Index

1. Structure of a full-text search system

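2. How Lucene's inverted index works

Suppose there are two articles, 1 and 2.

Article 1 reads: Tom lives in Guangzhou, I live in Guangzhou too.

Article 2 reads: He once lived in Shanghai.

After analysis (tokenizing, lowercasing, reducing words to their base form, and dropping stop words such as "in", "once", and "too"):

Keywords of article 1: [tom] [live] [guangzhou] [i] [live] [guangzhou]

Keywords of article 2: [he] [live] [shanghai]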

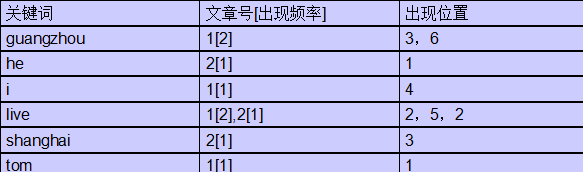

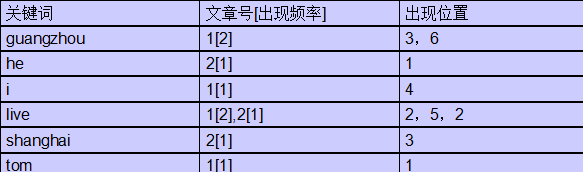

Each keyword's entry in the index is then extended with "frequency" and "position" information for every article it occurs in.
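The structure described above can be sketched in plain Java. This is only an illustration of the idea, not Lucene's actual on-disk format: the class name `InvertedIndexSketch`, the `Posting` type, and the choice of 1-based positions within each keyword list are assumptions made for this example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class InvertedIndexSketch {

    /** One posting: the article a keyword occurs in and its positions there. */
    static final class Posting {
        final int docId;
        final List<Integer> positions = new ArrayList<>();

        Posting(int docId) {
            this.docId = docId;
        }

        /** Frequency is simply the number of recorded positions. */
        int frequency() {
            return positions.size();
        }

        @Override
        public String toString() {
            return "article " + docId + " freq=" + frequency() + " pos=" + positions;
        }
    }

    /** Builds keyword -> postings; a TreeMap keeps the keywords sorted. */
    static Map<String, List<Posting>> build(String[][] docs) {
        Map<String, List<Posting>> index = new TreeMap<>();
        for (int d = 0; d < docs.length; d++) {
            int docId = d + 1; // articles are numbered from 1, as in the text
            for (int p = 0; p < docs[d].length; p++) {
                List<Posting> postings =
                        index.computeIfAbsent(docs[d][p], k -> new ArrayList<>());
                Posting last = postings.isEmpty() ? null : postings.get(postings.size() - 1);
                if (last == null || last.docId != docId) {
                    last = new Posting(docId);
                    postings.add(last);
                }
                last.positions.add(p + 1); // 1-based position in the keyword list
            }
        }
        return index;
    }

    public static void main(String[] args) {
        String[][] docs = {
            { "tom", "live", "guangzhou", "i", "live", "guangzhou" },
            { "he", "live", "shanghai" }
        };
        build(docs).forEach((term, postings) -> System.out.println(term + " -> " + postings));
    }
}
```

Looking up a keyword such as "live" then returns both articles directly, together with how often and where the keyword occurs in each.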


3. Adding, deleting, updating, and querying an index

Core indexing classes:

public class IndexWriter (org.apache.lucene.index.IndexWriter)

public abstract class Directory (org.apache.lucene.store.Directory)

public abstract class Analyzer (org.apache.lucene.analysis.Analyzer)

public final class Document (org.apache.lucene.document.Document)

public final class Field (org.apache.lucene.document.Field)

Sample code:

package cn.yang;

import java.io.File;
import java.io.IOException;
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.HashMap;
import java.util.Map;

import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.NumericField;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.util.Version;

public class IndexUtil {

    // Sample data
    private String[] ids = { "1", "2", "3", "4", "5", "6" };
    private String[] emails = { "[email protected]", "[email protected]", "[email protected]", "[email protected]",
            "[email protected]", "[email protected]" };
    private String[] content = { "welcom to visited", "hello boy",
            "my name is yang", "liu meigui is a dog", "haha fuck",
            "i like fuck liu" };
    private int[] attaches = { 2, 4, 4, 5, 6, 7 };
    private String[] names = { "zhangsan", "lisi", "yuhan", "john", "dav",
            "liu" };
    private Date[] dates = null;

    // Boost value per email domain
    private Map<String,Float> scores = new HashMap<String,Float>();
    private Directory directory = null;

    // Opening an IndexReader is expensive, so a single static instance is shared
    private static IndexReader reader = null;

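    // Initialize the dates
    public void setDates(){
        // The pattern must match the input strings; MM is month (lowercase mm means minutes)
        SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd");
        dates = new Date[ids.length];
        try {
            dates[0] = sdf.parse("2014-03-20");
            dates[1] = sdf.parse("2012-03-22");
            dates[2] = sdf.parse("2013-03-21");
            dates[3] = sdf.parse("2011-03-20");
            dates[4] = sdf.parse("2014-03-23");
            dates[5] = sdf.parse("2014-03-24");
        } catch (ParseException e) {
            e.printStackTrace();
        }
    }

    // The constructor initializes the dates, the boost map, the directory, and the reader
    public IndexUtil() {
        try {
            // index() reads the dates array, so it must be filled before indexing
            setDates();
            scores.put("yang.org", 2.0f);
            scores.put("sina.org", 2.0f);
            directory = FSDirectory.open(new File("d:/lucene/indextest"));
            // Open the shared reader only once, and only if an index already exists;
            // false opens the reader in non-read-only mode
            if (reader == null && IndexReader.indexExists(directory)) {
                reader = IndexReader.open(directory,false);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }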

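    // Get an IndexSearcher backed by the shared reader
    public IndexSearcher getSearcher(){
        try {
            if(null == reader){
                // false opens the reader in non-read-only mode
                reader = IndexReader.open(directory,false);
            } else {
                // Refresh the singleton reader; openIfChanged returns null if the index is unchanged
                IndexReader tr = IndexReader.openIfChanged(reader);
                if (null != tr) {
                    // Swap readers only when a newer one was actually returned
                    reader.close();
                    reader = tr;
                }
            }
            return new IndexSearcher(reader);
        }catch (Exception e) {
            e.printStackTrace();
        }
        return null;
    }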

    // Indexing steps: create a Directory; create an IndexWriter; create a Document and add Fields; add the Document to the IndexWriter
    public void index() {
        IndexWriter writer = null;
        try {
            writer = new IndexWriter(directory, new IndexWriterConfig(
                    Version.LUCENE_35, new StandardAnalyzer(Version.LUCENE_35)));
            Document doc = null;
            for (int i = 0; i < ids.length; i++) {
                doc = new Document();
                // Field.Store.YES or NO (storage option)
                // YES stores the field's full content in the index so the original text can be retrieved
                // NO does not store the content; the field can still be indexed, but its text cannot be retrieved
                // Field.Index (indexing option)
                // Index.ANALYZED: tokenize and index; suits titles, body text, and the like
                // Index.NOT_ANALYZED: index without tokenizing; suits exact-match values such as ID card numbers, names, and IDs
                // Index.ANALYZED_NO_NORMS: tokenize but store no norms; norms hold index-time boost and length-normalization data that affect scoring
                // Index.NOT_ANALYZED_NO_NORMS: neither tokenize nor store norms
                // Index.NO: do not index
                doc.add(new Field("id", ids[i], Field.Store.YES,
                        Field.Index.NOT_ANALYZED_NO_NORMS));
                doc.add(new Field("email", emails[i], Field.Store.YES,
                        Field.Index.ANALYZED));
                doc.add(new Field("content", content[i], Field.Store.NO,
                        Field.Index.ANALYZED));
                doc.add(new Field("name", names[i], Field.Store.YES,
                        Field.Index.NOT_ANALYZED_NO_NORMS));
                // Index a numeric value
                doc.add(new NumericField("attaches", Field.Store.YES,true).setIntValue(attaches[i]));
                // Index a date as its millisecond timestamp
                doc.add(new NumericField("date", Field.Store.YES,true).setLongValue(dates[i].getTime()));
                // Boost the document according to its email domain
                String et = emails[i].substring(emails[i].lastIndexOf("@")+1);
                if(scores.containsKey(et)){
                    doc.setBoost(scores.get(et));
                }else{
                    doc.setBoost(0.5f);
                }
                writer.addDocument(doc);
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            if (writer != null)
                try {
                    writer.close();
                } catch (Exception e) {
                    e.printStackTrace();
                }
        }
    }

    public void seach01(){
        try {
            // Create an IndexReader
            IndexReader reader = IndexReader.open(directory);
            // Create an IndexSearcher from the IndexReader
            IndexSearcher searcher = new IndexSearcher(reader);
            // Create the Query to search with
            TermQuery query = new TermQuery(new Term("content","like"));
            // Search with the searcher and get the TopDocs
            TopDocs tds = searcher.search(query, 10);
            // Get the ScoreDoc array from the TopDocs
            ScoreDoc[] sds = tds.scoreDocs;
            for(ScoreDoc sd: sds){
                // Load the actual Document through the searcher and the ScoreDoc
                Document d = searcher.doc(sd.doc);
                // Read the stored values from the Document
                System.out.println("id:"+d.get("id"));
                System.out.println("name:"+d.get("name"));
                System.out.println("email:"+d.get("email"));
                System.out.println("attach:"+d.get("attaches"));
                System.out.println("date:"+d.get("date"));
            }
            reader.close();
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    public void seach02(){
        try {
            // Get an IndexSearcher from getSearcher(), which reuses the shared reader
            IndexSearcher searcher = getSearcher();
            // Create the Query to search with
            TermQuery query = new TermQuery(new Term("content","like"));
            // Search with the searcher and get the TopDocs
            TopDocs tds = searcher.search(query, 10);
            // Get the ScoreDoc array from the TopDocs
            ScoreDoc[] sds = tds.scoreDocs;
            for(ScoreDoc sd: sds){
                // Load the actual Document through the searcher and the ScoreDoc
                Document d = searcher.doc(sd.doc);
                // Read the stored values from the Document
                System.out.println("id:"+d.get("id"));
                System.out.println("name:"+d.get("name"));
                System.out.println("email:"+d.get("email"));
                System.out.println("attach:"+d.get("attaches"));
                System.out.println("date:"+d.get("date"));
            }
            // Closing a searcher built from a reader does not close that reader
            searcher.close();
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    // Query the document counts
    public void query(){
        IndexReader reader = null;
        try {
            reader = IndexReader.open(directory);
            // numDocs excludes deleted documents; maxDoc still counts them
            System.out.println("numDocs:"+reader.numDocs());
            System.out.println("maxDocs:"+reader.maxDoc() );
        } catch (Exception e) {
            e.printStackTrace();
        }finally{
            if (reader != null)
                try {
                    reader.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
        }
    }

    // Delete documents through the writer
    public void delete(){
        IndexWriter writer = null;
        try {
            writer = new IndexWriter(directory, new IndexWriterConfig(
                    Version.LUCENE_35, new StandardAnalyzer(Version.LUCENE_35)));
            // Delete the document whose id is 1; the argument can be a Query or a Term (an exact value)
            // Deleted documents are not removed right away; they are only marked as deleted,
            // much like being moved to a recycle bin, and can still be restored
            writer.deleteDocuments(new Term("id","1"));
            // Empty the recycle bin; after this the documents cannot be restored
            writer.forceMergeDeletes();
        }catch(Exception e){
            e.printStackTrace();
        }finally {
            if (writer != null)
                try {
                    writer.close();
                } catch (Exception e) {
                    e.printStackTrace();
                }
        }
    }

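    // Delete documents through the reader
    public void delete02(){
        try {
            // The reader must not be read-only; the deletion is saved to disk when the reader is closed
            reader.deleteDocuments(new Term("id","1"));
        }catch(Exception e){
            e.printStackTrace();
        }
    }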

    // Restore deleted documents
    public void undelete(){
        IndexReader reader = null;
        try {
            // Restoring requires a reader opened with readOnly set to false
            reader = IndexReader.open(directory,false);
            reader.undeleteAll();
            System.out.println("numDocs:"+reader.numDocs());
            System.out.println("maxDocs:"+reader.maxDoc() );
            System.out.println("deleteDocs:"+reader.numDeletedDocs() );
        } catch (Exception e) {
            e.printStackTrace();
        } finally{
            if (reader != null)
                try {
                    reader.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
        }
    }

    // Merge (optimize) the index
    public void merge(){
        IndexWriter writer = null;
        try {
            writer = new IndexWriter(directory, new IndexWriterConfig(
                    Version.LUCENE_35, new StandardAnalyzer(Version.LUCENE_35)));
            // Merge the index down to at most two segments; deleted documents in the merged segments are purged
            writer.forceMerge(2);
        }catch(Exception e){
            e.printStackTrace();
        }finally {
            if (writer != null)
                try {
                    writer.close();
                } catch (Exception e) {
                    e.printStackTrace();
                }
        }
    }

    // Update a document
    public void update(){
        IndexWriter writer = null;
        try {
            writer = new IndexWriter(directory, new IndexWriterConfig(
                    Version.LUCENE_35, new StandardAnalyzer(Version.LUCENE_35)));
            // Lucene has no in-place update; updateDocument deletes the documents matching the term and then adds the new one
            Document doc = new Document();
            doc.add(new Field("id", "11", Field.Store.YES,
                    Field.Index.NOT_ANALYZED_NO_NORMS));
            doc.add(new Field("email", emails[0], Field.Store.YES,
                    Field.Index.ANALYZED));
            doc.add(new Field("content", content[0], Field.Store.NO,
                    Field.Index.ANALYZED));
            doc.add(new Field("name", names[0], Field.Store.YES,
                    Field.Index.NOT_ANALYZED_NO_NORMS));
            writer.updateDocument(new Term("id","1"), doc);
        }catch(Exception e){
            e.printStackTrace();
        }finally {
            if (writer != null)
                try {
                    writer.close();
                } catch (Exception e) {
                    e.printStackTrace();
                }
        }
    }
}

package cn.yang;

import org.junit.Test;

public class TestIndex {

    @Test
    public void testIndex(){
        IndexUtil iu = new IndexUtil();
        iu.index();
    }

    @Test
    public void testQuery(){
        IndexUtil iu = new IndexUtil();
        iu.query();
    }

    @Test
    public void testDelete(){
        IndexUtil iu = new IndexUtil();
        iu.delete();
    }

    @Test
    public void testunDelete(){
        IndexUtil iu = new IndexUtil();
        iu.undelete();
    }

    @Test
    public void testSerch01(){
        IndexUtil iu = new IndexUtil();
        iu.seach01();
    }

    @Test
    public void testSerch02(){
        IndexUtil iu = new IndexUtil();
        //iu.seach02();
        for(int i=0;i<5;i++){
            iu.seach02();
            System.out.println("----------");
            iu.seach02();
            try {
                Thread.sleep(10000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }
}
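The relationship between numDocs, maxDoc, and numDeletedDocs in the code above can be easier to see in a small stand-alone model. The sketch below is not Lucene code; `SoftDeleteSketch` and its methods are hypothetical names that only imitate the "recycle bin" behaviour described in the comments: a delete marks a document, undeleteAll clears the marks, and a merge physically drops marked documents.

```java
import java.util.ArrayList;
import java.util.BitSet;
import java.util.List;

public class SoftDeleteSketch {
    private List<String> docs = new ArrayList<>(); // one slot per document ever added
    private BitSet deleted = new BitSet();         // deletion marks, not physical removal

    void add(String id) {
        docs.add(id);
    }

    /** Marks every document with the given id as deleted. */
    void delete(String id) {
        for (int i = 0; i < docs.size(); i++) {
            if (docs.get(i).equals(id)) {
                deleted.set(i);
            }
        }
    }

    /** Clears all deletion marks, restoring documents that were not yet merged away. */
    void undeleteAll() {
        deleted.clear();
    }

    /** Physically drops marked documents, as a merge would. */
    void merge() {
        List<String> live = new ArrayList<>();
        for (int i = 0; i < docs.size(); i++) {
            if (!deleted.get(i)) {
                live.add(docs.get(i));
            }
        }
        docs = live;
        deleted = new BitSet();
    }

    int maxDoc() { return docs.size(); }
    int numDeletedDocs() { return deleted.cardinality(); }
    int numDocs() { return maxDoc() - numDeletedDocs(); }

    public static void main(String[] args) {
        SoftDeleteSketch index = new SoftDeleteSketch();
        for (String id : new String[] { "1", "2", "3", "4", "5", "6" }) {
            index.add(id);
        }
        index.delete("1");
        System.out.println("numDocs:" + index.numDocs() + " maxDoc:" + index.maxDoc());
        index.merge();
        index.undeleteAll(); // nothing left to restore after the merge
        System.out.println("numDocs:" + index.numDocs() + " maxDoc:" + index.maxDoc());
    }
}
```

In this model, calling undeleteAll before the merge would bring document 1 back, which mirrors why emptying the recycle bin right after a delete makes the later restore a no-op.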

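4. Field options:

Field.Index specifies how a Field is indexed. The names listed here are the Lucene 2.x constants; the sample code above uses their Lucene 3.x replacements, given in parentheses.

NO: the Field is not indexed, so users cannot search on its value.

NO_NORMS (3.x: NOT_ANALYZED_NO_NORMS): the Field is indexed without going through the Analyzer and stores no norms, so index-time boosts and length normalization do not affect its score. The main purpose is to reduce memory consumption.

TOKENIZED (3.x: ANALYZED): the Field is tokenized first and then indexed.

UN_TOKENIZED (3.x: NOT_ANALYZED): the Field is indexed as a single term without tokenization. This suits values such as URLs, file system paths, dates and times, personal names, ID card numbers, and phone numbers, which are indexed whole and generally do not need to be split into words.

Field.Store specifies how a Field is stored.

COMPRESS: the original value is stored in compressed form (a Lucene 2.x option that was removed in 3.0).

NO: the original text is not stored in the index. After a search hit, the original is reopened through some other attribute such as the file path or a database primary key. This suits large original content.

YES: the index normally holds only index data; with this option the original content is stored in the index as well, for example a document title.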
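The difference between an analyzed and a non-analyzed field can be shown with a small model. This is a sketch, not Lucene's implementation: `FieldIndexSketch` and `IndexMode` are hypothetical names, and lowercasing plus whitespace splitting stands in for a real Analyzer (StandardAnalyzer does more, such as removing stop words).

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Locale;

public class FieldIndexSketch {

    /** Simplified stand-ins for the indexing options discussed above. */
    enum IndexMode { NO, ANALYZED, NOT_ANALYZED }

    /** Returns the terms that would end up in the index for a field value. */
    static List<String> indexedTerms(String value, IndexMode mode) {
        switch (mode) {
            case ANALYZED:
                // Assumption: a toy analyzer that lowercases and splits on whitespace
                return Arrays.asList(value.trim().toLowerCase(Locale.ROOT).split("\\s+"));
            case NOT_ANALYZED:
                // The whole value becomes one term, so only exact matches hit
                return Collections.singletonList(value);
            default:
                // NO: nothing is indexed, so the field cannot be searched
                return Collections.emptyList();
        }
    }

    /** A term query hits only if the exact term is present in the index. */
    static boolean matches(String value, IndexMode mode, String term) {
        return indexedTerms(value, mode).contains(term);
    }

    public static void main(String[] args) {
        String content = "my name is yang";
        System.out.println(matches(content, IndexMode.ANALYZED, "yang"));
        System.out.println(matches(content, IndexMode.NOT_ANALYZED, "yang"));
        System.out.println(matches(content, IndexMode.NOT_ANALYZED, "my name is yang"));
    }
}
```

This is why the sample code analyzes the content field, which is searched by individual words, while id and name are indexed without analysis and matched exactly.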

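5. Lifecycle of IndexReader and IndexWriter

Opening an IndexReader is expensive, so it should be a singleton.

If an IndexWriter rebuilds or updates the index while the IndexReader is in use, a singleton IndexReader cannot see the IndexWriter's changes in real time.

Solution: call IndexReader.openIfChanged(reader). It returns a new reader when the index has changed and null otherwise; when a new reader is returned, close the old one and switch to the new one.

A project may also keep only one IndexWriter that stays open, so call commit() after each operation to make the changes visible to readers.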
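The interplay of a singleton reader, commit, and openIfChanged can be modelled without Lucene. The sketch below is only an analogy under stated assumptions: `Index`, `Snapshot`, and `SharedReader` are hypothetical classes standing in for the writer side, an IndexReader, and the singleton holder. The only behaviour borrowed from Lucene is the contract that openIfChanged returns null when nothing has changed.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class ReaderRefreshSketch {

    /** Stand-in for IndexReader: an immutable point-in-time view of the index. */
    static final class Snapshot {
        final List<String> docs;
        final long version;

        Snapshot(List<String> docs, long version) {
            this.docs = docs;
            this.version = version;
        }

        int numDocs() { return docs.size(); }
    }

    /** Stand-in for the writer side: only commit() publishes pending changes. */
    static final class Index {
        private final List<String> pending = new ArrayList<>();
        private List<String> committed = Collections.emptyList();
        private long version = 0;

        synchronized void add(String doc) { pending.add(doc); }

        synchronized void commit() {
            if (pending.isEmpty()) {
                return;
            }
            List<String> next = new ArrayList<>(committed);
            next.addAll(pending);
            pending.clear();
            committed = Collections.unmodifiableList(next);
            version++;
        }

        synchronized Snapshot open() { return new Snapshot(committed, version); }

        /** Returns null when nothing was committed since old was opened. */
        synchronized Snapshot openIfChanged(Snapshot old) {
            return old.version == version ? null : open();
        }
    }

    /** Holds the single shared snapshot and refreshes it on demand. */
    static final class SharedReader {
        private final Index index;
        private Snapshot current;

        SharedReader(Index index) { this.index = index; }

        synchronized Snapshot get() {
            if (current == null) {
                current = index.open();
            } else {
                Snapshot newer = index.openIfChanged(current);
                if (newer != null) {
                    current = newer; // a real IndexReader would be closed before the swap
                }
            }
            return current;
        }
    }

    public static void main(String[] args) {
        Index index = new Index();
        SharedReader shared = new SharedReader(index);
        index.add("doc-1");
        index.commit();
        System.out.println(shared.get().numDocs());
        index.add("doc-2"); // added but not committed, so readers cannot see it yet
        System.out.println(shared.get().numDocs());
        index.commit();
        System.out.println(shared.get().numDocs());
    }
}
```

The second lookup reuses the same snapshot because nothing was committed in between; only after the second commit does the holder swap in a fresh view.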