1 How do we detect that new files have been added to a folder?

2 How do we detect that the contents of an existing file have changed?

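3 Why are there two bolts, one for splitting lines and one for counting words, instead of a single combined bolt?

Each component performs a single function, so it runs very fast, and the parallelism of each component can be raised separately to process more data per unit of time.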

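4 Following Flume NG, processed files are modified so they are not picked up again; here this is done by changing the suffix of each processed file.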
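For questions 1 and 2, one option that needs nothing beyond the JDK is to poll the folder and compare each file's size and last-modified time with what was seen on the previous poll. The sketch below is only an illustration of that idea: `FolderSnapshot` and `poll` are hypothetical names, not part of Storm or of the code in this post, and the spout below takes a different route (it renames each file after reading it). The sketch does not track deletions, and an edit that keeps the size identical within the file system's timestamp resolution can be missed.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical helper (not part of Storm): detects new and modified .txt files by polling. */
class FolderSnapshot {
    // file name -> "size:lastModifiedMillis" as seen on the previous poll
    private final Map<String, String> seen = new HashMap<>();

    /** Returns entries such as "NEW a.txt" or "MODIFIED a.txt" for changes since the last poll. */
    List<String> poll(Path dir) throws IOException {
        List<String> changes = new ArrayList<>();
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(dir, "*.txt")) {
            for (Path file : stream) {
                String name = file.getFileName().toString();
                String stamp = Files.size(file) + ":" + Files.getLastModifiedTime(file).toMillis();
                String previous = seen.put(name, stamp); // remember the current state
                if (previous == null) {
                    changes.add("NEW " + name);          // never seen before
                } else if (!previous.equals(stamp)) {
                    changes.add("MODIFIED " + name);     // size or timestamp differs
                }
            }
        }
        return changes;
    }
}
```

Calling `poll` once per `nextTuple()` invocation would be one way to use it. The JDK also ships `java.nio.file.WatchService`, which reports `ENTRY_CREATE` and `ENTRY_MODIFY` events without polling and may be a better fit when the folder is large.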

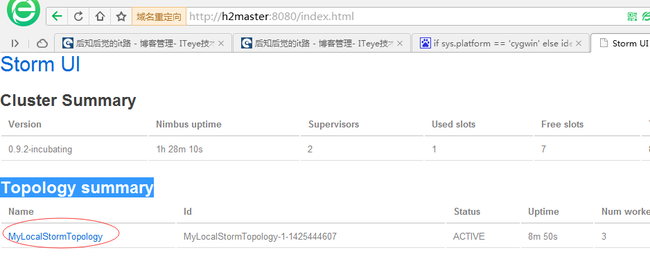

操作图如下:

2 Word count code:

package changping.houzhihoujue.storm;

import java.io.File;

import java.io.IOException;

import java.util.Collection;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.Map.Entry;

import org.apache.commons.io.FileUtils;

import backtype.storm.Config;

import backtype.storm.LocalCluster;

import backtype.storm.spout.SpoutOutputCollector;

import backtype.storm.task.OutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.TopologyBuilder;

import backtype.storm.topology.base.BaseRichBolt;

import backtype.storm.topology.base.BaseRichSpout;

import backtype.storm.tuple.Fields;

import backtype.storm.tuple.Tuple;

import backtype.storm.tuple.Values;

import backtype.storm.utils.Utils;

/**

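* Exercise: implement word count.

* (1) Read all files in a folder and count how often each word occurs across all of them.

* (2) When files are added to the folder, update the word counts across all files in real time.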

*/

public class MyWordCountTopology {

// Assemble the train, build the track, and start the run

public static void main( String[] args ) {

String DATASOURCE_SPOUT = DataSourceSpout.class.getSimpleName();

String SPLIT_BOLT = SplitBolt.class.getSimpleName();

String COUNT_BOLT = CountBolt.class.getSimpleName();

final TopologyBuilder builder = new TopologyBuilder();

builder.setSpout(DATASOURCE_SPOUT, new DataSourceSpout());

builder.setBolt(SPLIT_BOLT, new SplitBolt()).shuffleGrouping(DATASOURCE_SPOUT);

// Group by "word" so every occurrence of a word reaches the same CountBolt task

builder.setBolt(COUNT_BOLT, new CountBolt()).fieldsGrouping(SPLIT_BOLT, new Fields("word"));

final LocalCluster localCluster = new LocalCluster();

final Config config = new Config();

localCluster.submitTopology(MyWordCountTopology.class.getSimpleName(), config, builder.createTopology());

Utils.sleep(9999999);

localCluster.shutdown();

}

}

// Data source

class DataSourceSpout extends BaseRichSpout{

private Map conf;

private TopologyContext context;

private SpoutOutputCollector collector;

public void open(Map conf, TopologyContext context,SpoutOutputCollector collector) {

this.conf = conf;

this.context = context;

this.collector = collector;

}

public void nextTuple() {

// Scan the folder D:/father (recursively) for files ending in .txt

Collection<File> files = FileUtils.listFiles(new File("D:/father"), new String[]{"txt"}, true);

if(files != null && files.size() > 0){

for(File file : files){

try { // emit every line of the file to the bolt

List<String> lines = FileUtils.readLines(file);

for(String line : lines){

collector.emit(new Values(line));

}

// Rename the processed file (append a suffix) so that nextTuple() does not process it again

FileUtils.moveFile(file, new File(file.getAbsolutePath() + "." + System.currentTimeMillis()));

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("line"));

}

}

// Word-splitting logic

class SplitBolt extends BaseRichBolt{

private Map conf;

private TopologyContext context;

private OutputCollector collector;

public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {

this.conf = stormConf;

this.context = context;

this.collector = collector;

}

public void execute(Tuple input) {

String line = input.getStringByField("line");

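String[] words = line.trim().split("\\s+"); // split on runs of whitespace

for(String word : words){ // emit each word

if(word.isEmpty()){ continue; } // a blank line yields one empty string; skip it

System.err.println(word);

collector.emit(new Values(word));

}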

}

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word"));

}

}

// Word-counting logic

class CountBolt extends BaseRichBolt{

private Map stormConf;

private TopologyContext context;

private OutputCollector collector;

public void prepare(Map stormConf, TopologyContext context,OutputCollector collector) {

this.stormConf = stormConf;

this.context = context;

this.collector = collector;

}

private HashMap<String, Integer> map = new HashMap<String, Integer>();

public void execute(Tuple input) {

String word = input.getStringByField("word");

System.err.println(word);

Integer value = map.get(word);

if(value==null){

value = 0;

}

value++;

map.put(word, value);

// Print the results

System.err.println("============================================");

Utils.sleep(2000);

for (Entry<String, Integer> entry : map.entrySet()) {

System.out.println(entry);

}

}

public void declareOutputFields(OutputFieldsDeclarer declarer) {

}

}

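A note on raising the parallelism of CountBolt: every task keeps its own HashMap, so the totals are only right if all occurrences of a word reach the same task. In Storm that is what a fields grouping on "word" is for, while a shuffle grouping spreads tuples across tasks. The following stand-alone simulation illustrates the difference without using Storm at all. `GroupingDemo` is a hypothetical name, routing by `hashCode` modulo the task count is a simplification assumed for illustration, and round-robin stands in for shuffle grouping.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical stand-alone simulation; it does not use Storm. */
class GroupingDemo {
    /** Routes each word to one of `tasks` counters and returns the per-task count maps. */
    static List<Map<String, Integer>> count(String[] words, int tasks, boolean byWord) {
        List<Map<String, Integer>> counters = new ArrayList<>();
        for (int t = 0; t < tasks; t++) {
            counters.add(new HashMap<>());
        }
        int next = 0; // round-robin cursor, standing in for shuffle grouping
        for (String word : words) {
            int task;
            if (byWord) {
                task = Math.floorMod(word.hashCode(), tasks); // same word -> same task
            } else {
                task = next++ % tasks;                        // word may land on any task
            }
            counters.get(task).merge(word, 1, Integer::sum);
        }
        return counters;
    }
}
```

With `byWord` set to `false` and two tasks, the count for a repeated word ends up split between the two maps, so neither task prints the true total. With `byWord` set to `true`, each word appears in exactly one map with its full count.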

3 Running sum, written for cluster execution:

package changping.houzhihoujue.storm;

import java.io.File;

import java.io.IOException;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.concurrent.TimeUnit;

import org.apache.commons.io.FileUtils;

import backtype.storm.Config;

import backtype.storm.LocalCluster;

import backtype.storm.StormSubmitter;

import backtype.storm.spout.SpoutOutputCollector;

import backtype.storm.task.OutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.TopologyBuilder;

import backtype.storm.topology.base.BaseRichBolt;

import backtype.storm.topology.base.BaseRichSpout;

import backtype.storm.tuple.Fields;

import backtype.storm.tuple.Tuple;

import backtype.storm.tuple.Values;

/**

* Running sum.

* main() submits to a Storm cluster; the local-mode variant is kept in a commented-out block.

* @author zm

*

*/

public class MyLocalStormTopology {

/**

* Assemble the train and the track, then run the train on the track.

* @throws Exception if the topology cannot be submitted

*/

public static void main(String[] args) throws Exception {

// Assemble the train

TopologyBuilder topologyBuilder = new TopologyBuilder();

topologyBuilder.setSpout("1", new MySpout2()); // define car no. 1

topologyBuilder.setBolt("2", new MyBolt1()).shuffleGrouping("1"); // define car no. 2 and couple it to car no. 1

// Build the track

/*LocalCluster localCluster = new LocalCluster(); // build the track: run locally

Config config = new Config();

// Run the train on the track; the three arguments are the train's name, the train crew (config), and the train itself

localCluster.submitTopology(MyLocalStormTopology.class.getSimpleName(), config, topologyBuilder.createTopology());

TimeUnit.SECONDS.sleep(99999); // how long the train runs

localCluster.shutdown(); // stop once the run is over; otherwise Storm never stops

*/

// Build the track: run on a Storm cluster

// submitTopology is a static method, so no StormSubmitter instance is needed

Config conf = new Config();

StormSubmitter.submitTopology(MyLocalStormTopology.class.getSimpleName(), conf, topologyBuilder.createTopology());

}

}

// The locomotive (spout)

class MySpout2 extends BaseRichSpout {

private Map conf;

private TopologyContext context;

private SpoutOutputCollector collector;

// Called first; opens the data source that lives outside the Storm system

public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {

this.conf = conf;

this.context = context;

this.collector = collector;

}

private int i = 0;

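// Think of it as a NameNode heartbeat: Storm calls this method in an endless loop, always from the same thread, so it is thread-safe. Each call emits the current i to the bolt and then increments it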

public void nextTuple() {

System.err.println(i);

// Put the data (i++) into a shell (Values) and send it to the bolt

this.collector.emit(new Values(i++));

try {

List linesNum = FileUtils.readLines(new File("D:/father"));

System.err.println("Number of lines in file: " + linesNum.size());

} catch (IOException e1) {

System.err.println("Number of lines in file: 0 (read failed)");

e1.printStackTrace();

}

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

// Declare the output field name as v1; this method only needs a body when the component emits tuples downstream

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("v1"));

}

}

// The car (bolt)

class MyBolt1 extends BaseRichBolt{

private Map stormConf;

private TopologyContext context;

private OutputCollector collector;

// Prepare to receive the data sent by the spout

public void prepare(Map stormConf, TopologyContext context,OutputCollector collector) {

this.stormConf = stormConf;

this.context = context;

this.collector = collector;

}

private int sum = 0;

// Called in a loop to receive the data sent by the spout; each time Storm calls this method it delivers one tuple

public void execute(Tuple input) {

int i = input.getIntegerByField("v1");

sum += i;

System.err.println(sum);

}

// Only needs a body when this bolt emits tuples downstream; otherwise leave it empty

public void declareOutputFields(OutputFieldsDeclarer declarer) {

}

}
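To check that the topology behaves as intended, it helps to know what MyBolt1 should print. MySpout2 emits 0, 1, 2, ... and MyBolt1 adds each value to `sum`, so the printed values are the running totals. The sketch below reproduces that arithmetic in plain Java. `RunningSumDemo` is a hypothetical name, and the sketch assumes a single MyBolt1 task that receives the tuples in the order they were emitted.

```java
/** Hypothetical stand-alone check of the running-sum arithmetic; it does not use Storm. */
class RunningSumDemo {
    /** Returns the values MyBolt1 would print after receiving 0, 1, ..., n-1 in order. */
    static int[] runningSums(int n) {
        int[] printed = new int[n];
        int sum = 0;
        for (int i = 0; i < n; i++) {
            sum += i;         // same update as MyBolt1.execute
            printed[i] = sum; // value written to System.err at this step
        }
        return printed;
    }
}
```

For the first five tuples this gives 0, 1, 3, 6, 10. If the bolt were given more than one task, each task would keep its own `sum` and the printed values would no longer follow this sequence.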