All systems run a minimal installation of CentOS 6.5.

1.2 Software

nginx-0.8.55

pcre-8.13

apache-tomcat-6.0.35

jdk-6u31-linux-x64

nginx-upstream-jvm-route-0.1

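1.3 Layout

Clients reach the back-end web tier (Tomcat) through a load-balancing tier built on nginx, as shown in the figure below:

The principle of session replication is, in brief, as shown in the figure below:

Load-balancing tier: 192.168.254.200

Installed: pcre, nginx, nginx-upstream-jvm-route-0.1

Back-end Tomcat tier: 192.168.254.221, 192.168.254.222

Installed: tomcat, jdk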

Chapter 2 Installation and deployment

2.1 Deploying the load-balancing tier

2.1.1 Installing dependencies

yum install wget make gcc gcc-c++ -y

yum install pcre-devel openssl-devel patch -y

2.1.2 Creating the account nginx runs as

useradd www -s /sbin/nologin -M

2.1.3 Installing PCRE

Unpack the PCRE archive:

tar xvf pcre-8.13.tar.gz

cd pcre-8.13

Configure the build:

./configure --prefix=/usr/local/pcre

Build and install:

make && make install

2.1.4 Installing nginx

Unpack nginx and the nginx-upstream-jvm-route module:

tar xvf nginx-upstream-jvm-route-0.1.tar.gz

tar xvf nginx-0.8.55.tar.gz

cd nginx-0.8.55

Apply the jvm_route patch to the nginx source tree:

patch -p0 < ../nginx_upstream_jvm_route/jvm_route.patch

Configure the nginx build:

./configure \
  --user=www \
  --group=www \
  --prefix=/usr/local/nginx \
  --with-http_stub_status_module \
  --with-http_ssl_module \
  --with-http_flv_module \
  --with-http_gzip_static_module \
  --pid-path=/var/run/nginx.pid \
  --error-log-path=/var/log/nginx/error.log \
  --http-log-path=/var/log/nginx/access.log \
  --http-client-body-temp-path=/var/tmp/nginx/client_body_temp \
  --http-proxy-temp-path=/var/tmp/nginx/proxy_temp \
  --http-fastcgi-temp-path=/var/tmp/nginx/fastcgi_temp \
  --http-uwsgi-temp-path=/var/tmp/nginx/uwsgi_temp \
  --http-scgi-temp-path=/var/tmp/nginx/scgi_temp \
  --add-module=/root/scripts/src/nginx_upstream_jvm_route/

Build and install:

make && make install
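One way to confirm that the module was actually compiled in is to look for it in the configure arguments that nginx -V reports. The sketch below is only illustrative: has_jvm_route is a hypothetical helper name, and the binary path in the usage comment assumes the --prefix=/usr/local/nginx used above.

```shell
# has_jvm_route: reads `nginx -V` output on stdin and succeeds if the
# jvm_route module directory appears in an --add-module argument.
# (Hypothetical helper, not part of nginx.)
has_jvm_route() {
    grep -q -- '--add-module=[^ ]*nginx_upstream_jvm_route'
}

# Usage on the load balancer (nginx -V writes to stderr):
#   /usr/local/nginx/sbin/nginx -V 2>&1 | has_jvm_route && echo "module compiled in"
```

If the check fails, the --add-module path given to ./configure most likely did not point at the unpacked module directory.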

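2.1.5 Editing the nginx configuration file

Configuring nginx as a load balancer is straightforward: set the account it runs as and add the IP address and port of each back-end web server. The configuration used in this test environment is: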

user www www;
worker_processes 8;

#error_log logs/nginx_error.log crit;
#pid /usr/local/nginx/nginx.pid;

#Specifies the value for maximum file descriptors that can be opened by this process.
worker_rlimit_nofile 51200;

events
{
    use epoll;
    worker_connections 2048;
}

http
{
    upstream backend {
        server 192.168.254.221:80 srun_id=real1;
        server 192.168.254.222:80 srun_id=real2;
        jvm_route $cookie_JSESSIONID|sessionid reverse;
    }

    include mime.types;
    default_type application/octet-stream;

    #charset gb2312;
    charset UTF-8;

    server_names_hash_bucket_size 128;
    client_header_buffer_size 32k;
    large_client_header_buffers 4 32k;
    client_max_body_size 20m;
    limit_rate 1024k;

    sendfile on;
    tcp_nopush on;
    keepalive_timeout 60;
    tcp_nodelay on;

    fastcgi_connect_timeout 300;
    fastcgi_send_timeout 300;
    fastcgi_read_timeout 300;
    fastcgi_buffer_size 64k;
    fastcgi_buffers 4 64k;
    fastcgi_busy_buffers_size 128k;
    fastcgi_temp_file_write_size 128k;

    gzip on;
    #gzip_min_length 1k;
    gzip_buffers 4 16k;
    gzip_http_version 1.0;
    gzip_comp_level 2;
    gzip_types text/plain application/x-javascript text/css application/xml;
    gzip_vary on;

    #limit_zone crawler $binary_remote_addr 10m;

    # log_format is only valid in the http context, so it is declared here rather than inside server
    log_format access '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" $http_x_forwarded_for';

    server
    {
        listen 80;
        server_name 192.168.254.250;
        index index.jsp index.htm index.html;
        root /data/www/;

        location / {
            proxy_pass http://backend;
            proxy_redirect off;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header Host $http_host;
        }

        location ~ .*\.(gif|jpg|jpeg|png|bmp|swf)$
        {
            expires 30d;
        }

        location ~ .*\.(js|css)?$
        {
            expires 1h;
        }

        location /Nginxstatus {
            stub_status on;
            access_log off;
        }

        # access_log off;
    }
}
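To make the routing rule concrete: when jvmRoute is set, Tomcat appends the route name to the session ID (for example A1B2C3D4.real1), and the jvm_route directive uses that suffix to pick the server whose srun_id matches. The shell sketch below imitates that lookup. It illustrates the idea and is not the module's actual code; route_of and backend_for are hypothetical names, and the session ID is made up.

```shell
# route_of ID: print the route suffix of a Tomcat session ID,
# e.g. "A1B2C3D4.real1" -> "real1". Prints an empty line if there is no suffix.
route_of() {
    case "$1" in
        *.*) printf '%s\n' "${1##*.}" ;;
        *)   printf '\n' ;;
    esac
}

# backend_for ID: hypothetical mapping that mirrors the srun_id values
# in the upstream block of this guide.
backend_for() {
    case "$(route_of "$1")" in
        real1) echo "192.168.254.221:80" ;;
        real2) echo "192.168.254.222:80" ;;
        *)     echo "any" ;;   # no known route: ordinary load balancing applies
    esac
}

backend_for "A1B2C3D4.real2"
```

A request that carries no session cookie yet has no suffix, so it can go to either back end; once Tomcat issues the cookie, later requests stick to the node named in it.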

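2.2 Deploying the back-end Tomcat tier

2.2.1 Installing the JDK

Create the JDK installation directory:

mkdir /opt/java

Copy the installer into that directory, make it executable, and run it there (the installer unpacks into the current directory):

cp jdk-6u31-linux-x64.bin /opt/java/

cd /opt/java

chmod 755 jdk-6u31-linux-x64.bin

./jdk-6u31-linux-x64.bin

Set the environment variables: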

cat >> /etc/profile.d/java.sh << "EOF"

export JAVA_HOME=/opt/java/jdk1.6.0_31

export CLASSPATH=$CLASSPATH:./:$JAVA_HOME/lib

PATH=$PATH:$HOME/bin:$JAVA_HOME/bin

EOF

Apply the environment variables to the current shell:

source /etc/profile

2.2.2 Installing Tomcat

Unpack the archive and copy Tomcat to /usr/local under the name tomcat:

tar xvf apache-tomcat-6.0.35.tar.gz

cp -r apache-tomcat-6.0.35 /usr/local/tomcat

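2.2.3 Editing the configuration files (for other settings, see the cluster configuration documentation)

2.2.3.1 Editing server.xml

Only the following two changes to server.xml are needed. Both machines use the same configuration, except that jvmRoute must differ per node and match the srun_id assigned to that node in the nginx upstream block (real1 on 192.168.254.221, real2 on 192.168.254.222).

1. Set jvmRoute (the name is your own choice), for example jvmRoute="real1":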

<Engine name="Catalina" defaultHost="localhost" jvmRoute="real1">

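2. Add the cluster configuration. The block below is the default taken from the official Tomcat site; in real use, change address="auto" to the local machine's IP: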

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="8">

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.0.0.4"

port="45564"

frequency="500"

dropTime="3000"/>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="auto"

port="4000"

autoBind="100"

selectorTimeout="5000"

maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=""/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"

tempDir="/tmp/war-temp/"

deployDir="/tmp/war-deploy/"

watchDir="/tmp/war-listen/"

watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>
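Because nginx matches the session suffix against srun_id, each node's jvmRoute has to agree with its entry in the upstream block. A small check along the following lines can catch a mismatch. This is a sketch under assumptions: jvm_route_of is a hypothetical helper, and the sed expression is a plain text match that does not understand XML comments, which is why it takes the last matching line (the stock server.xml carries a commented-out Engine example above the real one).

```shell
# jvm_route_of FILE: print the jvmRoute attribute of the <Engine> element.
# Text-based match only; the last matching line wins.
jvm_route_of() {
    sed -n 's/.*<Engine[^>]*jvmRoute="\([^"]*\)".*/\1/p' "$1" | tail -n 1
}

# Usage on 192.168.254.221, assuming Tomcat lives in /usr/local/tomcat:
#   [ "$(jvm_route_of /usr/local/tomcat/conf/server.xml)" = "real1" ] && echo "jvmRoute ok"
```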

2.2.3.2 Editing web.xml

In web.xml, simply add <distributable/> just before the closing </web-app> tag at the end of the file:

<welcome-file-list>

<welcome-file>index.html</welcome-file>

<welcome-file>index.htm</welcome-file>

<welcome-file>index.jsp</welcome-file>

</welcome-file-list>

<distributable/>

</web-app>

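2.3 Testing session replication

2.3.1 Creating the test page

On each Tomcat, create a test folder under the application deployment directory and create an index.jsp file in it with the following content: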

<%@page language="java"%>

<html>

<body>

<h1><font color="red">Session serviced by tomcat</font></h1>

<table align="center" border="1">

<tr>

<td>Session ID</td>

<td><%=session.getId() %></td>

<% session.setAttribute("abc","abc");%>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

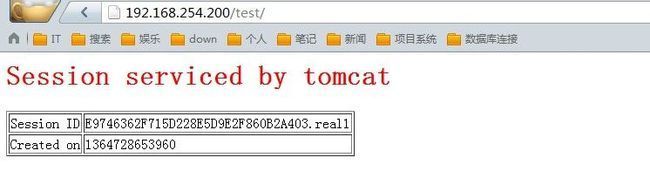

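2.3.2 Testing session replication from a browser

Enter the following address in a browser: http://192.168.254.200/test/

The test page served by the Tomcat currently handling the request is displayed:

Shut down Tomcat on 192.168.254.221 and refresh the page.

If the page is still served and shows the same session, replication is working and the test is complete.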
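When comparing the session ID before and after the failover, bear in mind that the part after the last dot is the jvmRoute and may change: the JvmRouteBinderValve configured above is meant to rewrite that suffix to the surviving node's route. A comparison that ignores the suffix could look like the sketch below; base_id and same_session are hypothetical helper names and the IDs are made up.

```shell
# base_id ID: strip a trailing ".<jvmRoute>" suffix, if there is one.
base_id() {
    printf '%s\n' "${1%.*}"
}

# same_session A B: succeed when both IDs share the same base part.
same_session() {
    [ "$(base_id "$1")" = "$(base_id "$2")" ]
}

# Example with made-up IDs noted before and after stopping one Tomcat:
if same_session "A1B2C3D4.real1" "A1B2C3D4.real2"; then
    echo "session survived failover"
else
    echo "session was lost"
fi
```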

[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[[

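There are several ways to keep sessions in sync across a Tomcat cluster:

- Use Tomcat's built-in cluster mode, in which the Tomcat instances replicate session data to one another automatically in real time. It is simple to configure, but it is relatively inefficient and performs poorly under high concurrency. How it works: http://zyycaesar.iteye.com/blog/296606

- Use nginx's hash routing on the client IP, so that requests from a given IP are always routed to the same Tomcat. This is even simpler to configure, but if a large number of users on one LAN log in at the same time, load balancing does little good.

- Use an nginx plug-in to implement Tomcat clustering and session synchronisation: nginx-upstream-jvm-route-0.1.tar.gz is an nginx extension module that provides cookie-based session stickiness. It is available from http://code.google.com/p/nginx-upstream-jvm-route/downloads/list.

- Use memcached to manage the sessions of all Tomcat instances centrally, with nginx in front for load balancing and for separating static from dynamic resources. This allows the system to scale horizontally while keeping performance high.

The rest of this guide uses the fourth approach. Cluster environment:

1. nginx (latest version at the time of writing): 1.5.7

2. Tomcat: 6.0.37

3. memcached (latest version at the time of writing): 1.4.15

4. Session replication and synchronisation: memcached-session-manager (latest version at the time of writing): 1.6.5

5. Operating system: CentOS 6.3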

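I. Installing nginx

- CentOS 6.3 does not install gcc-c++ by default, so install the compiler first:

yum -y install gcc-c++

Reboot the system once the installation finishes.

- Change to the source directory:

cd /usr/local/src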

- Install the PCRE library:

cd /usr/local/src
wget ftp://ftp.csx.cam.ac.uk/pub/software/programming/pcre/pcre-8.21.tar.gz
tar -zxvf pcre-8.21.tar.gz
cd pcre-8.21
./configure
make
make install

If wget cannot fetch the file, download pcre-8.12.tar.gz from the official site and copy it into the src directory.

- Install the zlib library:

cd /usr/local/src
wget http://zlib.net/zlib-1.2.8.tar.gz
tar -zxvf zlib-1.2.8.tar.gz
cd zlib-1.2.8
./configure
make
make install

- Install OpenSSL (only the sources are unpacked here; nginx builds them itself through the --with-openssl option):

cd /usr/local/src
wget http://www.openssl.org/source/openssl-1.0.1c.tar.gz
tar -zxvf openssl-1.0.1c.tar.gz

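- Install nginx (this assumes the nginx-1.5.7 sources have already been unpacked in /usr/local/src). The --with-pcre path must point at the PCRE source directory that was actually unpacked above: pcre-8.21 if the wget download succeeded, pcre-8.12 if the fallback archive was used.

cd nginx-1.5.7
./configure --prefix=/usr/local/nginx/nginx \
  --with-http_ssl_module \
  --with-pcre=/usr/local/src/pcre-8.12 \
  --with-zlib=/usr/local/src/zlib-1.2.8 \
  --with-openssl=/usr/local/src/openssl-1.0.1c
make
make install

Once the installation succeeds, edit nginx.conf in the conf directory under the install prefix (/usr/local/nginx/nginx/conf/nginx.conf with the --prefix used above):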

http {

...

upstream localhost {

server localhost:8081;

server localhost:8082;

server localhost:8083;

}

...

}

location / {

root html;

index index.html index.htm;

proxy_pass http://localhost;

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

client_max_body_size 10m;

client_body_buffer_size 128k;

proxy_connect_timeout 90;

proxy_send_timeout 90;

proxy_read_timeout 90;

proxy_buffer_size 4k;

proxy_buffers 4 32k;

proxy_busy_buffers_size 64k;

proxy_temp_file_write_size 64k;

}

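II. Installing memcached

- memcached is fairly simple to install; it needs the libevent library first:

sudo yum install libevent libevent-devel
wget http://www.danga.com/memcached/dist/memcached-1.4.15.tar.gz
tar zxf memcached-1.4.15.tar.gz
cd memcached-1.4.15
./configure
make
sudo make install

Once installed (by default into /usr/local/bin), start it:

# -vv  verbose output on the console
# -d   run in the background
/usr/local/bin/memcached -vv

After it has started, you can telnet in and check its status:

telnet 127.0.0.1 11211
stats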
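The stats reply is a list of lines of the form "STAT name value", terminated by END. For scripted checks, a single counter can be pulled out of that output as in the sketch below. stat_value is a hypothetical helper, and the usage line assumes that nc (netcat) is installed, which the original setup does not mention.

```shell
# stat_value NAME: read memcached "stats" output on stdin and print the
# value of one counter. Reply lines end in CRLF, so "\r" is stripped first.
stat_value() {
    tr -d '\r' | awk -v name="$1" '$1 == "STAT" && $2 == name { print $3 }'
}

# Usage, assuming nc is available:
#   printf 'stats\r\nquit\r\n' | nc 127.0.0.1 11211 | stat_value curr_items
```

Watching curr_items before and after loading a page through nginx is one simple way to see whether sessions are reaching memcached.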

III. Configuring Tomcat

1. Edit server.xml

<Engine name="Catalina" defaultHost="localhost" jvmRoute="jvm1">
...
<Context path="" docBase="/demo/appserver/app/cluster" debug="0" reloadable="true" crossContext="true">
  <Manager className="de.javakaffee.web.msm.MemcachedBackupSessionManager"
    memcachedNodes="n1:192.168.2.43:11211"
    requestUriIgnorePattern=".*\.(png|gif|jpg|css|js|ico|jpeg|htm|html)$"
    sessionBackupAsync="false"
    sessionBackupTimeout="1800000"
    copyCollectionsForSerialization="false"
    transcoderFactoryClass="de.javakaffee.web.msm.serializer.kryo.KryoTranscoderFactory" />
</Context>

2. Add the jars that memcached and msm (memcached-session-manager) depend on

couchbase-client-1.2.2.jar

javolution-5.4.3.1.jar

kryo-1.03.jar

kryo-serializers-0.10.jar

memcached-session-manager-1.6.5.jar

memcached-session-manager-tc6-1.6.5.jar

minlog-1.2.jar

msm-kryo-serializer-1.6.5.jar

reflectasm-0.9.jar

spymemcached-2.10.2.jar

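Notes:

- msm 1.6.5 depends on Couchbase, so the couchbase-client jar must be added; otherwise startup fails with: java.lang.NoClassDefFoundError: com/couchbase/client/CouchbaseClient.

- Tomcat 6 and Tomcat 7 use different msm support jars: memcached-session-manager-tc6-1.6.5.jar and memcached-session-manager-tc7-1.6.5.jar. Install only one of them, otherwise startup fails.

- The library versions bundled in the msm source tree are too old. spymemcached-2.7.jar must be replaced with 2.10.2, otherwise Tomcat fails at startup with: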

java.lang.NoSuchMethodError: net.spy.memcached.MemcachedClient.set(Ljava/lang/String;ILjava/lang/Object;)Lnet/spy/memcached/internal/OperationFuture;

at de.javakaffee.web.msm.BackupSessionTask.storeSessionInMemcached(BackupSessionTask.java:227)

kryo-serializers-0.8.jar must be replaced with version 0.10, otherwise the following error is reported:

Caused by: java.lang.ClassNotFoundException: de.javakaffee.kryoserializers.DateSerializer
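The tc6/tc7 conflict described in the notes is easy to overlook when jars are copied by hand. A check like the following can be run against whichever directory the jars were placed in; it is only a sketch, has_jar and check_msm_jars are hypothetical helper names, and the directory is passed in because the original does not say where the jars go.

```shell
# has_jar DIR PATTERN: succeed if at least one file in DIR matches PATTERN.
has_jar() {
    for f in "$1"/$2; do
        [ -e "$f" ] && return 0
    done
    return 1
}

# check_msm_jars DIR: fail if both the Tomcat 6 and Tomcat 7 support jars are present.
check_msm_jars() {
    if has_jar "$1" 'memcached-session-manager-tc6-*.jar' &&
       has_jar "$1" 'memcached-session-manager-tc7-*.jar'; then
        echo "conflict: both tc6 and tc7 support jars found in $1" >&2
        return 1
    fi
}
```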

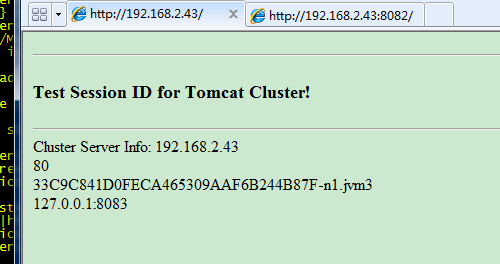

Once Tomcat has started successfully, refresh the page: the IP and port change, but the session does not:

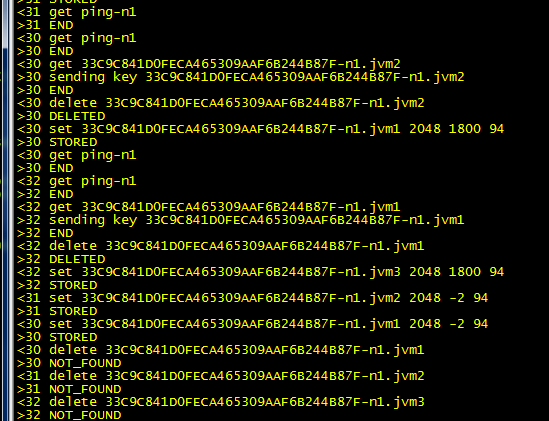

The memcached output shows the log of session synchronisation operations: