Please credit the source when reposting: SpringsSpace, http://springsfeng.iteye.com

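1. Building the HCatalog Distribution Package

(0) JDK: jdk1.6.0_43

(1) Download the source archive hcatalog-trunk.zip from https://github.com/apache/hcatalog and extract it to /home/kevin/Downloads/hcatalog-trunk

(2) Install Ant and set the ANT_HOME and PATH environment variables. For the complete environment variable configuration, see Section 5 of this article (5. HCatalog Environment Variables).

(3) Download Apache Forrest:

apache-forrest-0.9-sources.tar.gz and apache-forrest-0.9-dependencies.tar.gz

and extract both archives into the same directory: /usr/custom/apache-forrest-0.9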

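(4) Build

Note: this test runs HCatalog 0.6.0-SNAPSHOT on YARN (hadoop-2.0.2-alpha), hive-0.11.0-SNAPSHOT and pig-0.11.0, so build with Ant rather than Maven. Before building, modify the following files:

A. build.properties (in particular, mvn.hadoop.profile=hadoop23 selects the Hadoop 0.23/2.x build profile required for YARN):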

mvn.deploy.repo.id=apache.snapshots.https

mvn.deploy.repo.url=https://repository.apache.org/content/repositories/snapshots

maven-ant-tasks.version=2.1.3

mvn.local.repo=${user.home}/.m2/repository

mvn.hadoop.profile=hadoop23
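Before running Ant it can be worth confirming that build.properties really selects the hadoop23 profile. The sketch below assumes the file uses plain key=value lines as shown above and reads it with java.util.Properties; the class name BuildPropertiesCheck is hypothetical and not part of HCatalog.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Properties;

// Hypothetical pre-build check; not part of the HCatalog build.
final class BuildPropertiesCheck {

    /** Returns true if the properties select the hadoop23 (Hadoop 0.23/2.x) profile. */
    static boolean usesHadoop23(Reader source) {
        Properties props = new Properties();
        try {
            props.load(source);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // Key name as it appears in build.properties above.
        return "hadoop23".equals(props.getProperty("mvn.hadoop.profile", "").trim());
    }

    public static void main(String[] args) throws IOException {
        // Assumed default location inside the extracted source tree.
        Path file = Paths.get(args.length > 0 ? args[0]
                : "/home/kevin/Downloads/hcatalog-trunk/build.properties");
        try (Reader in = Files.newBufferedReader(file)) {
            System.out.println(usesHadoop23(in)
                    ? "OK: mvn.hadoop.profile=hadoop23"
                    : "WARNING: mvn.hadoop.profile is not set to hadoop23");
        }
    }
}
```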

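B. build.xml: in the package target, remove docs from the depends attribute so that packaging no longer runs the docs target (after the change it reads depends="jar"):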

<!--

===============================================================================

Distribution Section

===============================================================================

-->

<target name="package" depends="jar" description="Create an HCatalog release">

<mkdir dir="${dist.dir}"/>

<mkdir dir="${dist.dir}/share/${ant.project.name}/lib"/>

<mkdir dir="${dist.dir}/etc/hcatalog"/>

<mkdir dir="${dist.dir}/bin"/>

<mkdir dir="${dist.dir}/sbin"/>

<mkdir dir="${dist.dir}/share/${ant.project.name}/scripts"/>

<mkdir dir="${dist.dir}/share/${ant.project.name}/templates/conf"/>

<mkdir dir="${dist.dir}/share/doc/${ant.project.name}"/>

<mkdir dir="${dist.dir}/share/doc/${ant.project.name}/api"/>

<mkdir dir="${dist.dir}/share/doc/${ant.project.name}/jdiff"/>

<mkdir dir="${dist.dir}/share/doc/${ant.project.name}/license"/>

C. pom.xml:

...

<properties>

<activemq.version>5.5.0</activemq.version>

<commons-exec.version>1.1</commons-exec.version>

<commons-io.version>2.4</commons-io.version>

<guava.version>11.0.2</guava.version>

<hadoop20.version>1.0.3</hadoop20.version>

<hadoop23.version>0.23.3</hadoop23.version>

<hbase.version>0.92.0</hbase.version>

<hcatalog.version>${project.version}</hcatalog.version>

<hive.version>0.11.0</hive.version>

<jackson.version>1.8.3</jackson.version>

<jersey.version>1.14</jersey.version>

<jetty.webhcat.version>7.6.0.v20120127</jetty.webhcat.version>

<jms.version>1.1</jms.version>

<pig.version>0.11.0</pig.version>

<slf4j.version>1.6.1</slf4j.version>

<wadl-resourcedoc-doclet.version>1.4</wadl-resourcedoc-doclet.version>

<zookeeper.version>3.4.3</zookeeper.version>

</properties>

...

Note: for the <hive.version>0.11.0</hive.version> setting, refer to the Hive build notes. Then build HCatalog:

cd /home/kevin/Downloads/hcatalog-trunk

/usr/custom/apache-ant-1.8.4/bin/ant -Dhcatalog.version=0.6.0-SNAPSHOT -Dforrest.home=/usr/custom/apache-forrest-0.9 package

After the build, the following file is generated in /home/kevin/Downloads/hcatalog-trunk/build:

hcatalog-0.6.0-SNAPSHOT.tar.gz

(5) Errors encountered during the build

Error 1:

compile:

[echo] hcatalog-core

[mkdir] Created dir: /home/kevin/Downloads/hcatalog-trunk/core/build/classes

[javac] Compiling 75 source files to /home/kevin/Downloads/hcatalog-trunk/core/build/classes

[javac] /home/kevin/Downloads/hcatalog-trunk/core/src/main/java/org/apache/hcatalog/cli/SemanticAnalysis/CreateDatabaseHook.java:54: cannot access org.antlr.runtime.tree.CommonTree

[javac] class file for org.antlr.runtime.tree.CommonTree not found

[javac] int numCh = ast.getChildCount();

Solution:

Copy antlr-2.7.7.jar and antlr-runtime-3.4.jar from Hive's lib directory into /home/kevin/Downloads/hcatalog-trunk/core/build/lib/compile, then rerun the build.

Error 2:

compile:

[echo] hcatalog-server-extensions

[mkdir] Created dir: /home/kevin/Downloads/hcatalog-trunk/server-extensions/build/classes

[javac] Compiling 19 source files to /home/kevin/Downloads/hcatalog-trunk/server-extensions/build/classes

[javac] /home/kevin/Downloads/hcatalog-trunk/server-extensions/src/main/java/org/apache/hcatalog/listener/NotificationListener.java:96: cannot access com.facebook.fb303.FacebookBase

[javac] class file for com.facebook.fb303.FacebookBase not found

[javac] .get_table(partition.getDbName(), partition.getTableName())

[javac] ^

Solution:

Copy libfb303-0.9.0.jar from Hive's lib directory into /home/kevin/Downloads/hcatalog-trunk/server-extensions/build/lib/compile, then rerun the build.

Error 3:

compile-src:

[javac] Compiling 35 source files to /home/kevin/Downloads/hcatalog-trunk/storage-handlers/hbase/build/classes

[javac] /home/kevin/Downloads/hcatalog-trunk/storage-handlers/hbase/src/java/org/apache/hcatalog/hbase/HBaseBaseOutputFormat.java:27: package org.apache.hadoop.hbase.client does not exist

[javac] import org.apache.hadoop.hbase.client.Put;

[javac] ^

Solution:

Copy hbase-0.92.0.jar from Hive's lib directory into /home/kevin/Downloads/hcatalog-trunk/storage-handlers/hbase/build/lib/compile, then rerun the build.

Error 4:

compile-src:

[javac] Compiling 35 source files to /home/kevin/Downloads/hcatalog-trunk/storage-handlers/hbase/build/classes

[javac] /home/kevin/Downloads/hcatalog-trunk/storage-handlers/hbase/src/java/org/apache/hcatalog/hbase/HBaseHCatStorageHandler.java:73: package com.facebook.fb303 does not exist

[javac] import com.facebook.fb303.FacebookBase;

[javac] ^

Solution:

Copy libfb303-0.9.0.jar from Hive's lib directory into /home/kevin/Downloads/hcatalog-trunk/storage-handlers/hbase/build/lib/compile, then rerun the build.
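The four fixes above follow the same pattern: copy a JAR from Hive's lib directory into a module's build/lib/compile directory and rerun Ant. A minimal Java sketch of that step follows; it assumes Hive is installed at /usr/custom/hive-0.11.0 (the HIVE_HOME used in Section 5) and creates a target directory if it is missing. The class CopyHiveJars is hypothetical.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper automating the manual "copy from Hive's lib" fixes above.
final class CopyHiveJars {

    /** Copies each named JAR from hiveLib into targetDir, creating targetDir if needed. */
    static void copy(Path hiveLib, Path targetDir, List<String> jarNames) {
        try {
            Files.createDirectories(targetDir);
            for (String jar : jarNames) {
                Path src = hiveLib.resolve(jar);
                if (!Files.isRegularFile(src)) {
                    throw new IllegalStateException("Not found in Hive lib: " + src);
                }
                Files.copy(src, targetDir.resolve(jar), StandardCopyOption.REPLACE_EXISTING);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Assumed locations: HIVE_HOME from Section 5, source tree from Section 1.
        Path hiveLib = Paths.get("/usr/custom/hive-0.11.0/lib");
        Path trunk = Paths.get("/home/kevin/Downloads/hcatalog-trunk");
        // Error 1: ANTLR classes missing in hcatalog-core.
        copy(hiveLib, trunk.resolve("core/build/lib/compile"),
                Arrays.asList("antlr-2.7.7.jar", "antlr-runtime-3.4.jar"));
        // Error 2: fb303 classes missing in server-extensions.
        copy(hiveLib, trunk.resolve("server-extensions/build/lib/compile"),
                Arrays.asList("libfb303-0.9.0.jar"));
        // Errors 3 and 4: HBase client and fb303 classes missing in the HBase storage handler.
        copy(hiveLib, trunk.resolve("storage-handlers/hbase/build/lib/compile"),
                Arrays.asList("hbase-0.92.0.jar", "libfb303-0.9.0.jar"));
    }
}
```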

2. Installing Thrift

(1) Download thrift-0.9.0.tar.gz and extract it to /home/kevin/Downloads/Software/thrift-0.9.0

(2) Install the packages Thrift depends on (as root):

yum install automake libtool flex bison gcc-c++ boost-devel libevent-devel zlib-devel python-devel ruby-devel autoconf crypto-utils pkgconfig openssl-devel

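(3) Build and install Thrift (as root):

cd /home/kevin/Downloads/Software/thrift-0.9.0

./configure --with-boost=/usr/local    # location of the Boost installation; Thrift's own install prefix is set with --prefix

make

make check    # optional: runs the test suite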

make install

(4) Verify the Thrift installation

Run thrift in a terminal. If it prints the following usage message, Thrift is installed correctly:

[kevin@linux-fdc ~]$ thrift

Usage: thrift [options] file

Use thrift -help for a list of options

[kevin@linux-fdc ~]$

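3. Installing MySQL and Creating the hive Account

For this part, see sections 1 and 2 of my Hive installation and configuration notes: http://springsfeng.iteye.com/blog/1734517

4. Installing HCatalog

Before installing HCatalog, install Thrift, MySQL, Hive and Pig first. See, respectively: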

http://springsfeng.iteye.com/admin/blogs/1734517

http://springsfeng.iteye.com/admin/blogs/1734556

(1) Extract hcatalog-0.6.0-SNAPSHOT.tar.gz to /home/kevin/Downloads/hcatalog-0.6.0

(2) Install

cd /home/kevin/Downloads/hcatalog-0.6.0/share/hcatalog/scripts

chmod +x *

cd /home/kevin/Downloads/hcatalog-0.6.0

share/hcatalog/scripts/hcat_server_install.sh -r /usr/custom/hcatalog-0.6.0 -d /usr/custom/hive-0.11.0/lib -h /usr/custom/hadoop-2.0.2-alpha -p 8888

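Notes:

Enter the command above on a single line.

-r /usr/custom/hcatalog-0.6.0: the directory HCatalog will be installed into; create it first.

-d /usr/custom/hive-0.11.0/lib: the directory containing mysql-connector-java-5.1.22-bin.jar.

-h /usr/custom/hadoop-2.0.2-alpha: the Hadoop installation (home) directory.

-p 8888: the port the HCatalog server listens on.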
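Since the script expects the -r directory to exist already and the -d directory to contain the MySQL JDBC driver, a quick pre-flight check can catch path mistakes before installing. The sketch below is a hypothetical helper (InstallPreflight is not part of HCatalog) that checks only the conditions listed above.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

// Hypothetical pre-flight check for the hcat_server_install.sh arguments; not part of HCatalog.
final class InstallPreflight {

    /** Returns the problems found; an empty list means the paths look usable. */
    static List<String> check(Path installRoot, Path jdbcDir, Path hadoopHome) {
        List<String> problems = new ArrayList<>();
        if (!Files.isDirectory(installRoot)) {
            problems.add("-r: create " + installRoot + " before installing");
        }
        if (!Files.isRegularFile(jdbcDir.resolve("mysql-connector-java-5.1.22-bin.jar"))) {
            problems.add("-d: mysql-connector-java-5.1.22-bin.jar not found in " + jdbcDir);
        }
        if (!Files.isDirectory(hadoopHome)) {
            problems.add("-h: " + hadoopHome + " is not a directory");
        }
        return problems;
    }

    public static void main(String[] args) {
        // Values taken from the install command above.
        List<String> problems = check(
                Paths.get("/usr/custom/hcatalog-0.6.0"),
                Paths.get("/usr/custom/hive-0.11.0/lib"),
                Paths.get("/usr/custom/hadoop-2.0.2-alpha"));
        problems.forEach(System.out::println);
        System.out.println(problems.isEmpty() ? "Paths look OK" : "Fix the paths above first");
    }
}
```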

(3) Start the server

cd /usr/custom/hcatalog-0.6.0

sbin/hcat_server.sh start
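To confirm that the server actually came up, one option is to check whether anything accepts TCP connections on port 8888 (the -p value above). The sketch assumes the host name linux-fdc.linux.com used in PIG_OPTS in Section 5; PortCheck is a hypothetical class name. An open port only shows that some process is listening; errors still have to be looked up in the log directory.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical check that something is listening on the HCatalog server port.
final class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // connection refused, timed out, or host not resolvable
        }
    }

    public static void main(String[] args) {
        // Host name from PIG_OPTS in Section 5; port from the -p 8888 install option.
        String host = args.length > 0 ? args[0] : "linux-fdc.linux.com";
        System.out.println(isListening(host, 8888, 3000)
                ? "Port 8888 is open"
                : "Nothing is listening on port 8888; check var/log");
    }
}
```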

(4) Stop the server

cd /usr/custom/hcatalog-0.6.0

sbin/hcat_server.sh stop

(5) View the logs

The logs are in /usr/custom/hcatalog-0.6.0/var/log.

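5. HCatalog Environment Variables

These environment variables are set so that the examples in the official documentation can be run:

http://incubator.apache.org/hcatalog/docs/r0.5.0/loadstore.html

http://incubator.apache.org/hcatalog/docs/r0.5.0/inputoutput.html

The complete environment file is provided in the attachment profile.txt.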

JAVA_HOME=/usr/custom/jdk1.6.0_43

ANT_HOME=/usr/custom/apache-ant-1.8.4

M2_HOME=/usr/custom/apache-maven-3.0.5

HADOOP_HOME=/usr/custom/hadoop-2.0.2-alpha

PIG_HOME=/usr/custom/pig-0.11.0

HIVE_HOME=/usr/custom/hive-0.11.0

HCAT_HOME=/usr/custom/hcatalog-0.6.0

HADOOP_MAPRED_HOME=$HADOOP_HOME

HADOOP_COMMON_HOME=$HADOOP_HOME

HADOOP_HDFS_HOME=$HADOOP_HOME

YARN_HOME=$HADOOP_HOME

HADOOP_LIB=$HADOOP_HOME/lib

HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

PATH=$PATH:$M2_HOME/bin:$JAVA_HOME/bin:$PIG_HOME/bin:$ANT_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin:$HCAT_HOME/bin

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/jre/lib/rt.jar

PIG_OPTS=-Dhive.metastore.uris=thrift://linux-fdc.linux.com:8888

LIB_JARS=$HCAT_HOME/share/hcatalog/hcatalog-core-0.6.0-SNAPSHOT.jar,$HCAT_HOME/share/hcatalog/hcatalog-pig-adapter-0.6.0-SNAPSHOT.jar,$HCAT_HOME/share/hcatalog/hcatalog-server-extensions-0.6.0-SNAPSHOT.jar,$HIVE_HOME/lib/hive-metastore-0.11.0-SNAPSHOT.jar,$HIVE_HOME/lib/libthrift-0.9.0.jar,$HIVE_HOME/lib/hive-exec-0.11.0-SNAPSHOT.jar,$HIVE_HOME/lib/libfb303-0.9.0.jar,$HIVE_HOME/lib/jdo2-api-2.3-ec.jar,$HIVE_HOME/lib/slf4j-api-1.6.1.jar,$HIVE_HOME/lib/datanucleus-core-2.0.3.jar,$HIVE_HOME/lib/datanucleus-rdbms-2.0.3.jar,$HIVE_HOME/lib/datanucleus-connectionpool-2.0.3.jar,$HIVE_HOME/lib/commons-collections-3.2.1.jar,$HIVE_HOME/lib/commons-pool-1.5.4.jar,$HIVE_HOME/lib/commons-dbcp-1.4.jar,$HIVE_HOME/lib/mysql-connector-java-5.1.22-bin.jar

HADOOP_CLASSPATH=$HCAT_HOME/share/hcatalog/hcatalog-core-0.6.0-SNAPSHOT.jar:$HCAT_HOME/share/hcatalog/hcatalog-pig-adapter-0.6.0-SNAPSHOT.jar:$HCAT_HOME/share/hcatalog/hcatalog-server-extensions-0.6.0-SNAPSHOT.jar:$HIVE_HOME/lib/hive-metastore-0.11.0-SNAPSHOT.jar:$HIVE_HOME/lib/libthrift-0.9.0.jar:$HIVE_HOME/lib/hive-exec-0.11.0-SNAPSHOT.jar:$HIVE_HOME/lib/libfb303-0.9.0.jar:$HIVE_HOME/lib/jdo2-api-2.3-ec.jar:$HIVE_HOME/lib/slf4j-api-1.6.1.jar:$HIVE_HOME/lib/datanucleus-core-2.0.3.jar:$HIVE_HOME/lib/datanucleus-rdbms-2.0.3.jar:$HIVE_HOME/lib/datanucleus-connectionpool-2.0.3.jar:$HIVE_HOME/lib/commons-collections-3.2.1.jar:$HIVE_HOME/lib/commons-pool-1.5.4.jar:$HIVE_HOME/lib/commons-dbcp-1.4.jar:$HIVE_HOME/lib/mysql-connector-java-5.1.22-bin.jar:$HIVE_HOME/conf:$HADOOP_HOME/etc/hadoop

PIG_CLASSPATH=$HCAT_HOME/share/hcatalog/hcatalog-core-0.6.0-SNAPSHOT.jar:$HCAT_HOME/share/hcatalog/hcatalog-pig-adapter-0.6.0-SNAPSHOT.jar:$HCAT_HOME/share/hcatalog/hcatalog-server-extensions-0.6.0-SNAPSHOT.jar:$HIVE_HOME/lib/hive-metastore-0.11.0-SNAPSHOT.jar:$HIVE_HOME/lib/libthrift-0.9.0.jar:$HIVE_HOME/lib/hive-exec-0.11.0-SNAPSHOT.jar:$HIVE_HOME/lib/libfb303-0.9.0.jar:$HIVE_HOME/lib/jdo2-api-2.3-ec.jar:$HIVE_HOME/lib/slf4j-api-1.6.1.jar:$HIVE_HOME/conf:$HADOOP_HOME/etc/hadoop

export JAVA_HOME M2_HOME PATH CLASSPATH LD_LIBRARY_PATH HADOOP_HOME PIG_HOME HADOOP_MAPRED_HOME HADOOP_COMMON_HOME HADOOP_HDFS_HOME YARN_HOME HADOOP_LIB HADOOP_CONF_DIR YARN_CONF_DIR ANT_HOME HIVE_HOME HCAT_HOME PIG_OPTS LIB_JARS HADOOP_CLASSPATH PIG_CLASSPATH

Note: the list of required JARs in the official documentation is incomplete; use the configuration above instead.
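LIB_JARS, HADOOP_CLASSPATH and PIG_CLASSPATH repeat largely the same JARs with different separators: -libjars takes a comma-separated list, while the classpath variables use the path separator (a colon on Linux). Generating all of them from one list avoids version drift between the copies. The sketch below is a hypothetical helper with an abbreviated JAR list; it only builds the strings and does not set any variables.

```java
import java.io.File;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: one JAR list, rendered with the separator each consumer expects.
final class ClasspathBuilder {

    /** Value for -libjars / LIB_JARS: comma-separated. */
    static String libJars(List<String> jars) {
        return String.join(",", jars);
    }

    /** Value for HADOOP_CLASSPATH / PIG_CLASSPATH: joined with the given path separator. */
    static String classpath(List<String> jars, String separator) {
        return String.join(separator, jars);
    }

    public static void main(String[] args) {
        // Abbreviated list; paths follow the HCAT_HOME and HIVE_HOME values above.
        String hcat = "/usr/custom/hcatalog-0.6.0/share/hcatalog";
        String hiveLib = "/usr/custom/hive-0.11.0/lib";
        List<String> jars = Arrays.asList(
                hcat + "/hcatalog-core-0.6.0-SNAPSHOT.jar",
                hcat + "/hcatalog-pig-adapter-0.6.0-SNAPSHOT.jar",
                hiveLib + "/hive-metastore-0.11.0-SNAPSHOT.jar",
                hiveLib + "/libthrift-0.9.0.jar");
        System.out.println("LIB_JARS=" + libJars(jars));
        // File.pathSeparator is ":" on Linux.
        System.out.println("HADOOP_CLASSPATH=" + classpath(jars, File.pathSeparator));
    }
}
```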

6. Running the Input/Output Interface Example (Hive-based)

(1) Start the following processes:

mysql, hadoop, hive, pig, hcatalog

(2) Create the age and age_out tables in the hive shell:

CREATE TABLE age (name STRING, birthday INT)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

LINES TERMINATED BY '\n'

STORED AS TEXTFILE;

CREATE TABLE age_out (birthday INT, birthday_count INT)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

LINES TERMINATED BY '\n'

STORED AS TEXTFILE;
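Because both tables are declared with ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t', each line of age.txt should look like name<TAB>birthday. The simplified model below (AgeRow is a hypothetical class, not Hive code) mirrors how such a line maps onto the age columns: a missing or non-numeric birthday ends up as null, in line with Hive's default text SerDe reading unparsable INT fields as NULL. A job reading this table should therefore be prepared for null birthday values.

```java
// Simplified, hypothetical model of one row of the age table; not Hive's SerDe.
final class AgeRow {
    final String name;
    final Integer birthday; // null when the field is missing or not a valid INT

    private AgeRow(String name, Integer birthday) {
        this.name = name;
        this.birthday = birthday;
    }

    /** Splits on the tab delimiter declared by FIELDS TERMINATED BY '\t'. */
    static AgeRow parse(String line) {
        String[] fields = line.split("\t", -1);
        Integer birthday = null;
        if (fields.length > 1) {
            try {
                birthday = Integer.valueOf(fields[1]);
            } catch (NumberFormatException e) {
                birthday = null; // Hive would read this column as NULL
            }
        }
        // Any fields beyond the second are ignored, as Hive ignores extra columns.
        return new AgeRow(fields[0], birthday);
    }
}
```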

(3) Load data into the age table

hive> LOAD DATA LOCAL INPATH '/home/kevin/Documents/age.txt' OVERWRITE INTO TABLE age;

(4) Write the MapReduce program

package com.company.example.hcatalog;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.util.GenericOptionsParser;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;
import org.apache.hcatalog.common.HCatConstants;
import org.apache.hcatalog.common.HCatUtil;
import org.apache.hcatalog.data.DefaultHCatRecord;
import org.apache.hcatalog.data.HCatRecord;
import org.apache.hcatalog.data.schema.HCatFieldSchema;
import org.apache.hcatalog.data.schema.HCatSchema;
import org.apache.hcatalog.mapreduce.HCatInputFormat;
import org.apache.hcatalog.mapreduce.HCatOutputFormat;
import org.apache.hcatalog.mapreduce.OutputJobInfo;

// Counts the rows of the input table per birthday and writes
// (birthday, birthday_count) records to the output table through HCatalog.
public class GroupByBirthday extends Configured implements Tool {

    public static class Map extends Mapper<WritableComparable, HCatRecord, IntWritable, IntWritable> {

        private static final IntWritable ONE = new IntWritable(1);
        private HCatSchema schema;

        @Override
        protected void setup(Context context) throws IOException, InterruptedException {
            // Fetch the input table schema once per task rather than once per record.
            schema = HCatInputFormat.getTableSchema(context.getConfiguration());
        }

        @Override
        protected void map(WritableComparable key, HCatRecord value, Context context)
                throws IOException, InterruptedException {
            // Read the birthday column by name.
            Integer birthday = value.getInteger("birthday", schema);
            if (birthday == null) {
                return; // skip rows whose birthday is NULL instead of failing on unboxing
            }
            // Emit (birthday, 1); the reducer counts the ones per birthday.
            context.write(new IntWritable(birthday), ONE);
        }
    }

    public static class Reduce extends Reducer<IntWritable, IntWritable, WritableComparable, HCatRecord> {

        private HCatSchema schema;

        @Override
        protected void setup(Context context) throws IOException, InterruptedException {
            // Schema of the output records: (birthday INT, birthday_count INT), as in age_out.
            List<HCatFieldSchema> columns = new ArrayList<HCatFieldSchema>(2);
            columns.add(new HCatFieldSchema("birthday", HCatFieldSchema.Type.INT, ""));
            columns.add(new HCatFieldSchema("birthday_count", HCatFieldSchema.Type.INT, ""));
            schema = new HCatSchema(columns);
        }

        @Override
        protected void reduce(IntWritable key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // Every value is a 1 from the mapper, so the sum is the number of rows.
            int sum = 0;
            for (IntWritable value : values) {
                sum += value.get();
            }
            HCatRecord record = new DefaultHCatRecord(2);
            record.set("birthday", schema, key.get());
            record.set("birthday_count", schema, sum);
            // HCatOutputFormat does not use the key, so null is passed.
            context.write(null, record);
        }
    }

    public int run(String[] args) throws Exception {
        Configuration conf = getConf();
        args = new GenericOptionsParser(conf, args).getRemainingArgs();
        String inputTableName = args[0];
        String outputTableName = args[1];

        Job job = new Job(conf, "GroupByBirthday");

        // Read the whole inputTableName table from the default database (dbName null).
        HCatInputFormat.setInput(job, null, inputTableName);
        job.setInputFormatClass(HCatInputFormat.class);

        job.setJarByClass(GroupByBirthday.class);
        job.setMapperClass(Map.class);
        job.setReducerClass(Reduce.class);
        job.setMapOutputKeyClass(IntWritable.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(WritableComparable.class);
        job.setOutputValueClass(DefaultHCatRecord.class);

        // Write into outputTableName in the default database; no partition values (unpartitioned table).
        OutputJobInfo outputJobInfo = OutputJobInfo.create(null, outputTableName, null);
        job.getConfiguration().set(HCatConstants.HCAT_KEY_OUTPUT_INFO, HCatUtil.serialize(outputJobInfo));
        HCatOutputFormat.setOutput(job, outputJobInfo);

        // Use the output table's own schema for the records the reducer writes.
        HCatSchema s = HCatOutputFormat.getTableSchema(job.getConfiguration());
        HCatOutputFormat.setSchema(job, s);
        System.out.println("INFO: Output schema explicitly set for writing: " + s);
        job.setOutputFormatClass(HCatOutputFormat.class);

        return job.waitForCompletion(true) ? 0 : 1;
    }

    public static void main(String[] args) throws Exception {
        int exitCode = ToolRunner.run(new GroupByBirthday(), args);
        System.exit(exitCode);
    }
}
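The job's logic can be checked without a cluster. The sketch below models it in plain Java: the map phase emits (birthday, 1) and the reduce phase sums the ones per key, so each birthday ends up with its row count. LocalGroupByBirthday is a hypothetical name, and skipping null birthdays is a choice made in this sketch.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical local model of GroupByBirthday's logic (no Hadoop involved).
final class LocalGroupByBirthday {

    /** Map phase emits (birthday, 1); reduce phase sums the ones per birthday. */
    static Map<Integer, Integer> countByBirthday(List<Integer> birthdays) {
        Map<Integer, Integer> counts = new TreeMap<>(); // sorted by key, like reducer input
        for (Integer birthday : birthdays) {
            if (birthday == null) {
                continue; // rows with a NULL birthday are skipped in this sketch
            }
            counts.merge(birthday, 1, Integer::sum);
        }
        return counts;
    }
}
```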

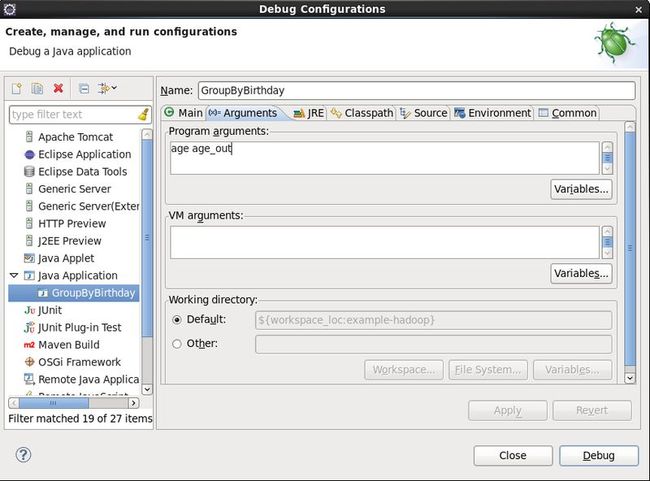

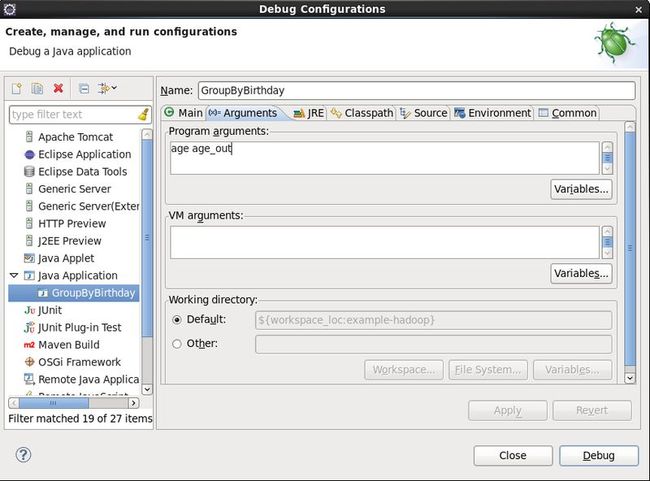

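(5) Run the example

If the JAR is exported from Eclipse:

bin/hadoop --config $HADOOP_HOME/etc/hadoop jar /home/kevin/workspace-eclipse/example-hadoop.jar com.company.example.hcatalog.GroupByBirthday -libjars $LIB_JARS age age_out

If the project is managed with Maven (this form omits the class name, which works only if the JAR's manifest declares GroupByBirthday as its Main-Class; otherwise add the class name after the JAR path as above):

bin/hadoop --config $HADOOP_HOME/etc/hadoop jar /home/kevin/workspace-eclipse/example-hadoop/target/example-hadoop-0.0.1-SNAPSHOT.jar -libjars $LIB_JARS age age_out

example-hadoop.jar is provided as an attachment.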

7. Running the Load/Store Interface Example (Pig-based)

(1) Create the table in the hive shell:

CREATE TABLE student (name STRING, age INT)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

LINES TERMINATED BY '\n'

STORED AS TEXTFILE;

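(2) Prepare the data file student.txt and upload it to HDFS as /input/student.txt. The two fields must be separated by a tab, because LOAD without a USING clause uses PigStorage, whose default delimiter is tab: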

张三 21

张思 22

王五 23

王六 22

李四 22

小三 21

小四 22

(3) Start Pig

bin/pig -Dpig.additional.jars=$HCAT_HOME/share/hcatalog/hcatalog-core-0.6.0-SNAPSHOT.jar:$HCAT_HOME/share/hcatalog/hcatalog-pig-adapter-0.6.0-SNAPSHOT.jar:$HCAT_HOME/share/hcatalog/hcatalog-server-extensions-0.6.0-SNAPSHOT.jar:$HIVE_HOME/lib/hive-metastore-0.11.0-SNAPSHOT.jar:$HIVE_HOME/lib/libthrift-0.9.0.jar:$HIVE_HOME/lib/hive-exec-0.11.0-SNAPSHOT.jar:$HIVE_HOME/lib/libfb303-0.9.0.jar:$HIVE_HOME/lib/jdo2-api-2.3-ec.jar:$HIVE_HOME/lib/slf4j-api-1.6.1.jar

(4) Store the data

grunt> student = LOAD '/input/student.txt' AS (name:chararray, age:int);   -- /input/student.txt is a file in HDFS

grunt> lmt = LIMIT student 4;

grunt> DUMP lmt;

grunt> ILLUSTRATE lmt;   -- keywords are case-insensitive, so illustrate lmt; works too

grunt> STORE student INTO 'student' USING org.apache.hcatalog.pig.HCatStorer();

(5) Load the data

grunt> temp = LOAD 'student' USING org.apache.hcatalog.pig.HCatLoader();

grunt> DUMP temp;