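I. Environment and notes

1. Test environment

Operating system:

CentOS6.5_mini-x64.iso

OpenStack version:

Havana Release 2013.2

2. Deployment architecture

OpenStack + KVM deployment architecture

3. Topology

4. Introduction to OpenStack

OpenStack is an open-source IaaS (Infrastructure as a Service) cloud computing platform that lets anyone build and offer cloud computing services. It is made up of a series of interrelated projects, each providing one component of the cloud infrastructure. The core projects (9 in total) are: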

Compute - Nova;

Networking and address management - Neutron;

Object Storage - Swift;

Block Storage - Cinder;

Identity - Keystone;

Image - Glance;

Dashboard (UI) - Horizon;

Metering - Ceilometer;

Orchestration - Heat;

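5. OpenStack components and how they relate

6. Host allocation:

Hostname | IP (static) | OS | Hardware | Role

openstack | 192.168.10.21 | CentOS-6.4-x86_64-minimal | 4 CPU, 16 GB RAM, 300 GB disk, 2 NICs | Management node / compute node

node01 | 192.168.10.22 | CentOS-6.4-x86_64-minimal | 4 CPU, 16 GB RAM, 300 GB disk, 2 NICs | Compute node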

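II. Management node installation (OpenStack)

1. Basic configuration

The operating system is installed from CentOS-6.4-x86_64-minimal.iso (installation steps omitted); all packages in this guide are installed from yum repositories.

(1). Add the third-party repositories (EPEL, RepoForge, and RDO Havana)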

[root@openstack ~]# rpm -Uvh http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

[root@openstack ~]# rpm -Uvh http://pkgs.repoforge.org/rpmforge-release/rpmforge-release-0.5.3-1.el6.rf.x86_64.rpm

[root@openstack ~]# yum install http://repos.fedorapeople.org/repos/openstack/openstack-havana/rdo-release-havana-7.noarch.rpm

(2). Configure the /etc/hosts file

[root@openstack ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.10.21 openstack

192.168.10.22 node01
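When the same hosts file has to be prepared on several nodes, the edit can be scripted. The sketch below is one way to do it, assuming a POSIX shell with awk; the function name add_host_entry is hypothetical. It only appends an entry that is not already present, so running it twice changes nothing.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless a line whose first two fields are
# exactly IP and NAME is already present, so re-running it is harmless.
add_host_entry() {
    file=$1
    ip=$2
    name=$3
    if [ -f "$file" ] && awk -v ip="$ip" -v name="$name" \
        '$1 == ip && $2 == name { found = 1 } END { exit (found ? 0 : 1) }' "$file"; then
        return 0    # entry already there, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage sketch (run on both the management node and node01):
# add_host_entry /etc/hosts 192.168.10.21 openstack
# add_host_entry /etc/hosts 192.168.10.22 node01
```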

(3). Configure the network interfaces

[root@openstack ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE="eth0"

BOOTPROTO="static"

HWADDR="E4:1F:13:45:AB:C8"

ONBOOT="yes"

IPADDR=192.168.10.21

NETMASK=255.255.255.0

GATEWAY=192.168.10.1

TYPE="Ethernet"

[root@openstack ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE="eth1"

BOOTPROTO="none"

HWADDR="E4:1F:13:45:AB:CA"

ONBOOT="yes"

TYPE="Ethernet"

(4). Disable SELinux:

[root@openstack ~]# more /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - SELinux is fully disabled.

SELINUX=disabled

# SELINUXTYPE= type of policy in use. Possible values are:

# targeted - Only targeted network daemons are protected.

# strict - Full SELinux protection.

SELINUXTYPE=targeted

[root@openstack ~]# setenforce 0
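Editing SELINUX= in the config file only takes effect after a reboot, while setenforce 0 switches the running system to permissive mode (not disabled) immediately. If you would rather script the file edit than use vi, a minimal sketch follows; the function name disable_selinux_config is hypothetical.

```shell
#!/bin/sh
# disable_selinux_config FILE
# Changes the SELINUX= line of FILE (normally /etc/selinux/config) to
# SELINUX=disabled. SELINUXTYPE= is untouched because it does not match ^SELINUX=.
disable_selinux_config() {
    tmp_file=$(mktemp) || return 1
    # Writing back with cat keeps the original file's owner and permissions.
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$1" > "$tmp_file" && cat "$tmp_file" > "$1"
    rc=$?
    rm -f "$tmp_file"
    return $rc
}

# Usage sketch: disable_selinux_config /etc/selinux/config && setenforce 0
```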

(5). Edit /etc/sysctl.conf to enable IP forwarding:

[root@openstack ~]# vi /etc/sysctl.conf

……………………

net.ipv4.ip_forward = 1

……………………

[root@openstack ~]# sysctl -p    # apply the settings in sysctl.conf
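On CentOS 6 the stock sysctl.conf typically already contains net.ipv4.ip_forward = 0, so the value should be changed in place rather than appended as a second line. A sketch of an idempotent edit, assuming a POSIX shell with awk (set_sysctl is a hypothetical helper name):

```shell
#!/bin/sh
# set_sysctl FILE KEY VALUE
# Rewrites every active "KEY = ..." line in FILE as "KEY = VALUE", or appends
# "KEY = VALUE" if no such line exists. Commented-out lines are left alone.
set_sysctl() {
    file=$1
    key=$2
    value=$3
    tmp_file=$(mktemp) || return 1
    awk -v key="$key" -v value="$value" '
        {
            name = $0
            sub(/[ \t]*=.*/, "", name)    # keep only the text before "="
            sub(/^[ \t]+/, "", name)
            if (name == key) { print key " = " value; done = 1; next }
            print
        }
        END { if (!done) print key " = " value }
    ' "$file" > "$tmp_file" && cat "$tmp_file" > "$file"
    rc=$?
    rm -f "$tmp_file"
    return $rc
}

# Usage sketch:
# set_sysctl /etc/sysctl.conf net.ipv4.ip_forward 1 && sysctl -p
```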

2. Install and configure the NTP service

(1). Install NTP:

[root@openstack ~]# yum -y install ntp

(2). Configure NTP:

[root@openstack ~]# vi /etc/ntp.conf

driftfile /var/lib/ntp/drift

restrict default ignore

restrict 127.0.0.1

restrict 192.168.10.0 mask 255.255.255.0 nomodify notrap

server ntp.api.bz

server 127.127.1.0 # local clock

fudge 127.127.1.0 stratum 10

keys /etc/ntp/keys

(3). Start NTP and enable it at boot:

[root@openstack ~]# service ntpd start

[root@openstack ~]# chkconfig ntpd on

3. Install and configure MySQL:

(1). Install MySQL:

[root@openstack ~]# yum -y install mysql mysql-server MySQL-python

(2). Edit the MySQL configuration file:

[root@openstack ~]# vi /etc/my.cnf

[mysqld]

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

user=mysql

# Disabling symbolic-links is recommended to prevent assorted security risks

symbolic-links=0

bind-address = 0.0.0.0    # listen on all IPv4 addresses

[mysqld_safe]

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

(3). Start MySQL and enable it at boot:

[root@openstack ~]# service mysqld start

[root@openstack ~]# chkconfig mysqld on

(4). Set the MySQL root password to passwd (history -c then clears the shell history so the password is not kept in it):

[root@openstack ~]# mysqladmin -uroot password 'passwd'; history -c

4. Install and configure qpid

(1). Install the qpid message broker (memcached is installed in the same step):

[root@openstack ~]# yum -y install qpid-cpp-server memcached

(2). Edit /etc/qpidd.conf and set auth to no:

[root@openstack ~]# vi /etc/qpidd.conf

……………………

auth=no

(3). Start qpid and enable it at boot:

[root@openstack ~]# service qpidd start

[root@openstack ~]# chkconfig qpidd on

(4). Install the OpenStack utilities package (it provides the openstack-db and openstack-config commands used below):

[root@openstack ~]# yum install -y openstack-utils

5. Install and configure Keystone

5.1. Initialize Keystone:

(1). Install Keystone:

[root@openstack ~]# yum -y install openstack-keystone

(2). Create the keystone database:

[root@openstack ~]# openstack-db --init --service keystone

(3). Set the database connection in the configuration file:

[root@openstack ~]# openstack-config --set /etc/keystone/keystone.conf sql connection mysql://keystone:keystone@localhost/keystone
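openstack-db --init creates the service's database and a MySQL account for it. For readers without openstack-utils, the sketch below prints roughly equivalent SQL. It is an approximation, not what openstack-db literally runs: it assumes the account name and password both default to the service name (which matches the mysql://keystone:keystone@localhost/keystone URL above), and it also grants access from any host ('%'), which a compute node connecting remotely as nova would need. print_service_db_sql is a hypothetical name.

```shell
#!/bin/sh
# print_service_db_sql SERVICE [PASSWORD]
# Prints SQL that creates a database and a MySQL account for an OpenStack service.
# Assumption: user name = database name = SERVICE; PASSWORD defaults to SERVICE.
print_service_db_sql() {
    svc=$1
    pw=${2:-$1}
    printf "CREATE DATABASE IF NOT EXISTS %s;\n" "$svc"
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'localhost' IDENTIFIED BY '%s';\n" "$svc" "$svc" "$pw"
    # %% prints a literal %, the MySQL wildcard for "any host"
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" "$svc" "$svc" "$pw"
    printf "FLUSH PRIVILEGES;\n"
}

# Usage sketch: print_service_db_sql keystone | mysql -uroot -ppasswd
```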

(4). Use openssl to generate a random token and store it in the configuration file:

[root@openstack ~]# export SERVICE_TOKEN=$(openssl rand -hex 10)    # randomly generated SERVICE_TOKEN; keep a record of it

[root@openstack ~]# export SERVICE_ENDPOINT=http://127.0.0.1:35357/v2.0

[root@openstack ~]# mkdir /root/config

[root@openstack ~]# echo $SERVICE_TOKEN > /root/config/ks_admin_token.txt

[root@openstack ~]# cat /root/config/ks_admin_token.txt

12dd70ede7c9d9d3ed3c

[root@openstack ~]# openstack-config --set /etc/keystone/keystone.conf DEFAULT admin_token $SERVICE_TOKEN

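*Note: Save the generated SERVICE_TOKEN value to the file for later use; every later reference to SERVICE_TOKEN takes its value from ks_admin_token.txt. Once the value has been written to the file, do not run the token-generation command again; otherwise the values will no longer match and debugging becomes painful.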
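Because the token must not change once it has been saved, the generation step can be guarded so that it only creates a token when the file does not exist yet. A minimal sketch; get_or_create_token is a hypothetical name, and the od fallback is an assumption for hosts without openssl:

```shell
#!/bin/sh
# get_or_create_token FILE
# Prints the token stored in FILE. If FILE is missing or empty, first generates
# a 20-hex-character token (same length as "openssl rand -hex 10") and saves it.
get_or_create_token() {
    file=$1
    if [ ! -s "$file" ]; then
        if command -v openssl >/dev/null 2>&1; then
            token=$(openssl rand -hex 10)
        else
            # Fallback: read 10 random bytes and hex-encode them.
            token=$(od -An -N10 -tx1 /dev/urandom | tr -d ' \n')
        fi
        mkdir -p "$(dirname "$file")"
        printf '%s\n' "$token" > "$file"
    fi
    head -n 1 "$file"
}

# Usage sketch:
# export SERVICE_TOKEN=$(get_or_create_token /root/config/ks_admin_token.txt)
```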

(5). By default Keystone uses PKI tokens. Create the signing keys and certificates:

[root@openstack ~]# keystone-manage pki_setup --keystone-user keystone --keystone-group keystone

[root@openstack ~]# chown -R keystone:keystone /etc/keystone/* /var/log/keystone/keystone.log

(6). Start Keystone and enable it at boot:

[root@openstack ~]# service openstack-keystone start

[root@openstack ~]# chkconfig openstack-keystone on

5.2. Define users, tenants, and roles

(1). Add the following variables to .bash_profile:

[root@openstack ~]# vi .bash_profile

………………

export OS_USERNAME=admin

export OS_TENANT_NAME=admin

export OS_PASSWORD=password

export OS_AUTH_URL=http://127.0.0.1:5000/v2.0

export SERVICE_ENDPOINT=http://127.0.0.1:35357/v2.0

export SERVICE_TOKEN=12dd70ede7c9d9d3ed3c

………………

Run the following command so the variables take effect immediately:

[root@openstack ~]# source .bash_profile
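Before running the keystone commands below, it can help to confirm that every variable from .bash_profile was actually loaded. A small check, with a hypothetical function name:

```shell
#!/bin/sh
# check_os_env
# Reports each credential variable used in this guide that is unset or empty
# (on stderr) and returns non-zero if at least one is missing.
check_os_env() {
    missing=0
    for var in OS_USERNAME OS_TENANT_NAME OS_PASSWORD OS_AUTH_URL SERVICE_ENDPOINT SERVICE_TOKEN; do
        eval "value=\${$var:-}"    # indirect lookup of the variable named in $var
        if [ -z "$value" ]; then
            echo "missing: $var" >&2
            missing=1
        fi
    done
    return $missing
}

# Usage sketch: source .bash_profile && check_os_env
```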

(2). Create a tenant for the administrator and a tenant for the other OpenStack service users:

[root@openstack ~]# keystone tenant-create --name=admin --description='Admin Tenant'

[root@openstack ~]# keystone tenant-create --name=service --description='Service Tenant'

(3). Create an administrator user named admin:

[root@openstack ~]# keystone user-create --name=admin --pass=password --email=keystone@chensh.net

(4). Create an administrator role named admin:

[root@openstack ~]# keystone role-create --name=admin

(5). Grant the role to the user:

[root@openstack ~]# keystone user-role-add --user=admin --tenant=admin --role=admin

5.3. Define services and API endpoints

(1). Create a service for Keystone:

[root@openstack ~]# keystone service-create --name=keystone --type=identity --description="Keystone Identity Service"

(2). Create an endpoint using the service ID:

[root@openstack ~]# vi /root/config/keystone.sh

#!/bin/bash

my_ip=192.168.10.21

service=$(keystone service-list | awk '/keystone/ {print $2}')

keystone endpoint-create --service-id=$service --publicurl=http://$my_ip:5000/v2.0 --internalurl=http://$my_ip:5000/v2.0 --adminurl=http://$my_ip:35357/v2.0

[root@openstack ~]# sh /root/config/keystone.sh
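The awk '/keystone/ {print $2}' filter in keystone.sh matches the pattern anywhere on a line, so a description or another service containing the same word would also match and $service would hold more than one ID. A stricter sketch that compares only the name column, assuming the | id | name | type | description | layout of keystone service-list (service_id_by_name is hypothetical):

```shell
#!/bin/sh
# service_id_by_name NAME
# Reads "keystone service-list" table output on stdin and prints the id of
# every row whose name column is exactly NAME. Border lines have no "|" and are skipped.
service_id_by_name() {
    awk -F'|' -v name="$1" '
        {
            id = $2; n = $3
            gsub(/ /, "", id); gsub(/ /, "", n)
            if (n == name) print id
        }'
}

# Usage sketch inside keystone.sh:
# service=$(keystone service-list | service_id_by_name keystone)
```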

6. Install and configure Glance

6.1. Initialize Glance

(1). Install Glance:

[root@openstack ~]# yum -y install openstack-glance

(2). Create the Glance database:

[root@openstack ~]# openstack-db --init --service glance

(3). Set the database connection in the configuration files:

[root@openstack ~]# openstack-config --set /etc/glance/glance-api.conf DEFAULT sql_connection mysql://glance:glance@localhost/glance

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry.conf DEFAULT sql_connection mysql://glance:glance@localhost/glance

6.2. Create the user and define services and API endpoints

(1). Create a glance user for the Glance service:

[root@openstack ~]# keystone user-create --name=glance --pass=service

[root@openstack ~]# keystone user-role-add --user=glance --tenant=service --role=admin

(2). Create a service for Glance:

[root@openstack ~]# keystone service-create --name=glance --type=image --description="Glance Image Service"

(3). Create an endpoint using the service ID:

[root@openstack ~]# vi /root/config/glance.sh

#!/bin/bash

my_ip=192.168.10.21

service=$(keystone service-list | awk '/glance/ {print $2}')

keystone endpoint-create --service-id=$service --publicurl=http://$my_ip:9292 --internalurl=http://$my_ip:9292 --adminurl=http://$my_ip:9292

[root@openstack ~]# sh /root/config/glance.sh

6.3. Configure Glance

(1). Add the Keystone authentication settings to the Glance configuration files:

[root@openstack ~]# openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_host 127.0.0.1

[root@openstack ~]# openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_port 35357

[root@openstack ~]# openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_protocol http

[root@openstack ~]# openstack-config --set /etc/glance/glance-api.conf keystone_authtoken admin_tenant_name service

[root@openstack ~]# openstack-config --set /etc/glance/glance-api.conf keystone_authtoken admin_user glance

[root@openstack ~]# openstack-config --set /etc/glance/glance-api.conf keystone_authtoken admin_password service

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_host 127.0.0.1

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_port 35357

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_protocol http

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken admin_tenant_name service

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken admin_user glance

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken admin_password service
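The twelve commands above differ only in file name, key, and value, so they can also be generated from a loop. This sketch prints the same commands instead of running them (print_glance_authtoken_cmds is a hypothetical name), so the output can be reviewed before being piped to sh:

```shell
#!/bin/sh
# print_glance_authtoken_cmds
# Prints one openstack-config command per (file, key, value) combination,
# covering both glance-api.conf and glance-registry.conf.
print_glance_authtoken_cmds() {
    for conf in /etc/glance/glance-api.conf /etc/glance/glance-registry.conf; do
        for kv in auth_host=127.0.0.1 auth_port=35357 auth_protocol=http \
                  admin_tenant_name=service admin_user=glance admin_password=service; do
            key=${kv%%=*}      # text before the first "="
            value=${kv#*=}     # text after the first "="
            echo "openstack-config --set $conf keystone_authtoken $key $value"
        done
    done
}

# Review the output first, then apply it with: print_glance_authtoken_cmds | sh
```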

(2). Point Glance at its paste .ini files and add the Keystone authentication settings to them:

[root@openstack ~]# openstack-config --set /etc/glance/glance-api.conf paste_deploy config_file /etc/glance/glance-api-paste.ini

[root@openstack ~]# openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry.conf paste_deploy config_file /etc/glance/glance-registry-paste.ini

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone

[root@openstack ~]# cp /usr/share/glance/glance-api-dist-paste.ini /etc/glance/glance-api-paste.ini

[root@openstack ~]# cp /usr/share/glance/glance-registry-dist-paste.ini /etc/glance/glance-registry-paste.ini

[root@openstack ~]# chown -R root:glance /etc/glance/glance-api-paste.ini

[root@openstack ~]# chown -R root:glance /etc/glance/glance-registry-paste.ini

[root@openstack ~]# openstack-config --set /etc/glance/glance-api-paste.ini filter:authtoken auth_host 127.0.0.1

[root@openstack ~]# openstack-config --set /etc/glance/glance-api-paste.ini filter:authtoken admin_tenant_name service

[root@openstack ~]# openstack-config --set /etc/glance/glance-api-paste.ini filter:authtoken admin_user glance

[root@openstack ~]# openstack-config --set /etc/glance/glance-api-paste.ini filter:authtoken admin_password service

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry-paste.ini filter:authtoken auth_host 127.0.0.1

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry-paste.ini filter:authtoken admin_tenant_name service

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry-paste.ini filter:authtoken admin_user glance

[root@openstack ~]# openstack-config --set /etc/glance/glance-registry-paste.ini filter:authtoken admin_password service

(3). Change the image storage paths (images are stored under /var/lib/glance by default; skip this step if you do not need to change it):

[root@openstack ~]# openstack-config --set /etc/glance/glance-api.conf DEFAULT filesystem_store_datadir /openstack/lib/glance/images/

[root@openstack ~]# openstack-config --set /etc/glance/glance-api.conf DEFAULT scrubber_datadir /openstack/lib/glance/scrubber

[root@openstack ~]# openstack-config --set /etc/glance/glance-api.conf DEFAULT image_cache_dir /openstack/lib/glance/image-cache/

[root@openstack ~]# mkdir -p /openstack/lib

[root@openstack ~]# cp -r /var/lib/glance/ /openstack/lib/

[root@openstack ~]# chown -R glance:glance /openstack/lib/glance/

(4). Start the Glance services and enable them at boot:

[root@openstack ~]# service openstack-glance-api start

[root@openstack ~]# service openstack-glance-registry start

[root@openstack ~]# chkconfig openstack-glance-api on

[root@openstack ~]# chkconfig openstack-glance-registry on

6.4. Test Glance

(1). Upload an image:

[root@openstack ~]# glance image-create --name=centos6.4 --disk-format=qcow2 --container-format=ovf --is-public=true < /root/centos6.4-mini_x64.qcow2

+------------------+--------------------------------------+

| Property | Value |

+------------------+--------------------------------------+

| checksum | 4b16b4bcfd7f4fe7f0f2fdf8919048b4 |

| container_format | ovf |

| created_at | 2014-03-31T06:26:26 |

| deleted | False |

| deleted_at | None |

| disk_format | qcow2 |

| id | 45456157-9b46-4e40-8ee3-fbb2e40f227b |

| is_public | True |

| min_disk | 0 |

| min_ram | 0 |

| name | centos6.4 |

| owner | 446893f3733b4294a7080f3b0bf1ba61 |

| protected | False |

| size | 698023936 |

| status | active |

| updated_at | 2014-03-31T06:26:30 |

+------------------+--------------------------------------+
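--disk-format=qcow2 only labels the image in Glance; it does not convert or check the file. A qcow2 file starts with the four bytes Q, F, I, 0xfb, so a quick sanity check before uploading might look like this (is_qcow2 is a hypothetical helper; qemu-img info, where installed, gives a fuller report):

```shell
#!/bin/sh
# is_qcow2 FILE
# Succeeds if FILE begins with the qcow2 magic bytes 0x51 0x46 0x49 0xfb ("QFI\373").
is_qcow2() {
    magic=$(od -An -N4 -tx1 "$1" 2>/dev/null | tr -d ' \n')
    [ "$magic" = "514649fb" ]
}

# Usage sketch:
# is_qcow2 /root/centos6.4-mini_x64.qcow2 && echo "looks like qcow2"
```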

(2). List the images:

[root@openstack ~]# glance image-list

+--------------------------------------+-----------+-------------+------------------+-----------+--------+

| ID | Name | Disk Format | Container Format | Size | Status |

+--------------------------------------+-----------+-------------+------------------+-----------+--------+

| 45456157-9b46-4e40-8ee3-fbb2e40f227b | centos6.4 | qcow2 | ovf | 698023936 | active |

+--------------------------------------+-----------+-------------+------------------+-----------+--------+

7. Install and configure Nova

7.1. Initialize Nova

(1). Install Nova:

[root@openstack ~]# yum -y install openstack-nova

(2). Create the nova database:

[root@openstack ~]# openstack-db --init --service nova

7.2. Create the user and define services and API endpoints

(1). Write the script:

[root@openstack ~]# vi /root/config/nova-user.sh

#!/bin/sh

my_ip=192.168.10.21

keystone user-create --name=nova --pass=service

keystone user-role-add --user=nova --tenant=service --role=admin

keystone service-create --name=nova --type=compute --description="Nova Compute Service"

service=$(keystone service-list | awk '/nova/ {print $2}')

keystone endpoint-create --service-id=$service --publicurl=http://$my_ip:8774/v2/%\(tenant_id\)s --internalurl=http://$my_ip:8774/v2/%\(tenant_id\)s --adminurl=http://$my_ip:8774/v2/%\(tenant_id\)s

(2). Run the script to create the user, service, and API endpoint:

[root@openstack ~]# sh /root/config/nova-user.sh
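In nova-user.sh the backslashes in %\(tenant_id\)s are there only because ( and ) are special to the shell; the argument Keystone stores is the literal template %(tenant_id)s, which Keystone fills in with the tenant ID when it returns the service catalog. This sketch builds the same URL with printf (nova_endpoint_url is a hypothetical name):

```shell
#!/bin/sh
# nova_endpoint_url IP
# Prints the compute endpoint URL template for the given IP.
# "%%" in the printf format produces a single literal "%".
nova_endpoint_url() {
    printf 'http://%s:8774/v2/%%(tenant_id)s\n' "$1"
}

# Both of these print http://192.168.10.21:8774/v2/%(tenant_id)s
# nova_endpoint_url 192.168.10.21
# echo http://192.168.10.21:8774/v2/%\(tenant_id\)s
```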

7.3. Configure Nova:

(1). Edit /etc/nova/nova.conf:

[root@openstack ~]# vi /etc/nova/nova.conf

[DEFAULT]

my_ip = 192.168.10.21

auth_strategy = keystone

state_path = /openstack/lib/nova

verbose=True

allow_resize_to_same_host = true

rpc_backend = nova.openstack.common.rpc.impl_qpid

qpid_hostname = 192.168.10.21

libvirt_type = kvm

glance_api_servers = 192.168.10.21:9292

novncproxy_base_url = http://192.168.10.21:6080/vnc_auto.html

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

vnc_enabled = true

vnc_keymap = en-us

network_manager = nova.network.manager.FlatDHCPManager

firewall_driver = nova.virt.firewall.NoopFirewallDriver

multi_host = True

flat_interface = eth1

flat_network_bridge = br1

public_interface = eth0

instance_usage_audit = True

instance_usage_audit_period = hour

notify_on_state_change = vm_and_task_state

notification_driver = nova.openstack.common.notifier.rpc_notifier

compute_scheduler_driver=nova.scheduler.simple.SimpleScheduler

[hyperv]

[zookeeper]

[osapi_v3]

[conductor]

[keymgr]

[cells]

[database]

[image_file_url]

[baremetal]

[rpc_notifier2]

[matchmaker_redis]

[ssl]

[trusted_computing]

[upgrade_levels]

[matchmaker_ring]

[vmware]

[spice]

[keystone_authtoken]

auth_host = 127.0.0.1

auth_port = 35357

auth_protocol = http

admin_user = nova

admin_tenant_name = service

admin_password = service
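To double-check a value after editing nova.conf by hand, a small ini reader is handy. This sketch assumes awk and the plain key = value layout shown above; ini_get is a hypothetical name, and it prints the value of one key from one section (nothing if the key is absent).

```shell
#!/bin/sh
# ini_get FILE SECTION KEY
# Prints the value of KEY in [SECTION] of an ini-style file such as nova.conf,
# trimming blanks around the key and value. Comment lines (# or ;) are skipped.
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^[ \t]*\[/ {
            s = $0
            sub(/^[ \t]*\[/, "", s)
            e = index(s, "]")
            if (e > 0) s = substr(s, 1, e - 1)
            in_section = (s == section)
            next
        }
        in_section {
            line = $0
            if (line ~ /^[ \t]*[#;]/) next
            eq = index(line, "=")
            if (eq == 0) next
            k = substr(line, 1, eq - 1)
            v = substr(line, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { print v; exit }
        }' "$1"
}

# Usage sketch: ini_get /etc/nova/nova.conf DEFAULT state_path
```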

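Because state_path in the configuration above moves where instances are stored, one more step is needed:

Copy the nova data directory to the new path and set its ownership: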

[root@openstack ~]# cp -r /var/lib/nova/ /openstack/lib/

[root@openstack ~]# chown -R nova:nova /openstack/lib/nova/

(2). Configure libvirtd and remove virbr0

Start libvirtd:

[root@openstack ~]# service libvirtd start

List the networks; the default network is present:

[root@openstack ~]# virsh net-list

Name State Autostart Persistent

--------------------------------------------------

default active yes yes

Remove the default network (virbr0):

[root@openstack ~]# virsh net-destroy default

Network default destroyed

[root@openstack ~]# virsh net-undefine default

Network default has been undefined

Restart libvirtd and enable it at boot:

[root@openstack ~]# service libvirtd restart

[root@openstack ~]# chkconfig libvirtd on

(3). Start the Nova-related services and enable them at boot

[root@openstack ~]# service messagebus start

[root@openstack ~]# chkconfig messagebus on

Start the Nova services, using nova-network for networking:

[root@openstack ~]# service openstack-nova-api start

[root@openstack ~]# service openstack-nova-cert start

[root@openstack ~]# service openstack-nova-consoleauth start

[root@openstack ~]# service openstack-nova-scheduler start

[root@openstack ~]# service openstack-nova-conductor start

[root@openstack ~]# service openstack-nova-novncproxy start

[root@openstack ~]# service openstack-nova-compute start

[root@openstack ~]# service openstack-nova-network start

[root@openstack ~]# chkconfig openstack-nova-api on

[root@openstack ~]# chkconfig openstack-nova-cert on

[root@openstack ~]# chkconfig openstack-nova-consoleauth on

[root@openstack ~]# chkconfig openstack-nova-scheduler on

[root@openstack ~]# chkconfig openstack-nova-conductor on

[root@openstack ~]# chkconfig openstack-nova-novncproxy on

[root@openstack ~]# chkconfig openstack-nova-compute on

[root@openstack ~]# chkconfig openstack-nova-network on
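Every Nova service above gets the same start and chkconfig pair, so the list can be kept in one variable. A sketch that prints the commands for review (print_start_and_enable and NOVA_CONTROLLER_SERVICES are hypothetical names; unlike the listing above, it interleaves start and chkconfig per service):

```shell
#!/bin/sh
# print_start_and_enable SERVICE...
# For each service name prints "service NAME start" and "chkconfig NAME on".
print_start_and_enable() {
    for svc in "$@"; do
        echo "service $svc start"
        echo "chkconfig $svc on"
    done
}

# Services started on the management node in this guide.
NOVA_CONTROLLER_SERVICES="openstack-nova-api openstack-nova-cert openstack-nova-consoleauth
openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy
openstack-nova-compute openstack-nova-network"

# Review, then apply (the variable is deliberately unquoted so it splits into names):
# print_start_and_enable $NOVA_CONTROLLER_SERVICES | sh
```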

------------------------------------------------------------------------------------------

7.4. Test Nova:

(1). Create a network:

[root@openstack ~]# nova network-create vmnet --fixed-range-v4=10.1.1.0/24 --bridge=br1 --bridge-interface=eth1 --multi-host=T

[root@openstack ~]# nova network-list

+--------------------------------------+-------+-------------+

| ID | Label | Cidr |

+--------------------------------------+-------+-------------+

| d3bc4874-2b4b-4abf-b963-0e5ae69b7b31 | vmnet | 10.1.1.0/24 |

+--------------------------------------+-------+-------------+

[root@openstack ~]# nova-manage network list

id IPv4 IPv6 start address DNS1 DNS2 VlanID project uuid

1 10.1.1.0/24 None 10.1.1.2 8.8.4.4 None None None d3bc4874-2b4b-4abf-b963-0e5ae69b7b31

(2). Configure the security group (allow SSH and ICMP):

[root@openstack ~]# nova secgroup-add-rule default tcp 22 22 0.0.0.0/0

+-------------+-----------+---------+-----------+--------------+

| IP Protocol | From Port | To Port | IP Range | Source Group |

+-------------+-----------+---------+-----------+--------------+

| tcp | 22 | 22 | 0.0.0.0/0 | |

+-------------+-----------+---------+-----------+--------------+

[root@openstack ~]# nova secgroup-add-rule default icmp -1 -1 0.0.0.0/0

+-------------+-----------+---------+-----------+--------------+

| IP Protocol | From Port | To Port | IP Range | Source Group |

+-------------+-----------+---------+-----------+--------------+

| icmp | -1 | -1 | 0.0.0.0/0 | |

+-------------+-----------+---------+-----------+--------------+

(3). Create a virtual machine instance:

List the available images:

[root@openstack ~]# nova image-list

+--------------------------------------+-----------+--------+--------------------------------------+

| ID | Name | Status | Server |

+--------------------------------------+-----------+--------+--------------------------------------+

| 45456157-9b46-4e40-8ee3-fbb2e40f227b | centos6.4 | ACTIVE | |

+--------------------------------------+-----------+--------+--------------------------------------+

Boot the instance:

[root@openstack ~]# nova boot --flavor 1 --image centos6.4 vm01

+--------------------------------------+--------------------------------------------------+

| Property | Value |

+--------------------------------------+--------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-SRV-ATTR:host | - |

| OS-EXT-SRV-ATTR:hypervisor_hostname | - |

| OS-EXT-SRV-ATTR:instance_name | instance-00000001 |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | - |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | hZBG4A7eJMdL |

| config_drive | |

| created | 2014-03-31T07:12:55Z |

| flavor | m1.tiny |

| hostId | |

| id | f754afe1-784f-41d0-9139-a05d25eaca20 |

| image | centos6.4 (45456157-9b46-4e40-8ee3-fbb2e40f227b) |

| key_name | - |

| metadata | {} |

| name | vm01 |

| os-extended-volumes:volumes_attached | [] |

| progress | 0 |

| security_groups | default |

| status | BUILD |

| tenant_id | 446893f3733b4294a7080f3b0bf1ba61 |

| updated | 2014-03-31T07:12:55Z |

| user_id | 2d7f8e7ec15c40cfb4209134cb5b30ba |

+--------------------------------------+--------------------------------------------------+

Check the instance status:

[root@openstack ~]# nova list

+--------------------------------------+-----------------------+--------+------------+-------------+-----------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+-----------------------+--------+------------+-------------+-----------------+

| f754afe1-784f-41d0-9139-a05d25eaca20 | vm01 | ACTIVE | - | Running | vmnet=10.1.1.2 |

+--------------------------------------+-----------------------+--------+------------+-------------+-----------------+
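A new instance reports BUILD before it reaches ACTIVE, so a script should poll rather than ping immediately. A sketch of such a loop, assuming the | ID | Name | Status | ... column layout of nova list shown above (wait_for_status and vm_status are hypothetical helpers):

```shell
#!/bin/sh
# wait_for_status TRIES EXPECTED COMMAND [ARGS...]
# Runs COMMAND up to TRIES times, one second apart, until its output equals
# EXPECTED. Returns 0 on success, 1 on ERROR status or when the tries run out.
wait_for_status() {
    tries=$1
    expected=$2
    shift 2
    i=0
    while :; do
        status=$("$@")
        if [ "$status" = "$expected" ]; then
            return 0
        fi
        if [ "$status" = "ERROR" ]; then
            return 1                # the build failed, stop waiting
        fi
        i=$((i + 1))
        if [ "$i" -ge "$tries" ]; then
            return 1                # gave up
        fi
        sleep 1
    done
}

# Prints the Status column of the instance named $1 from "nova list".
vm_status() {
    nova list | awk -F'|' -v name="$1" \
        '{ n = $3; gsub(/ /, "", n); if (n == name) { s = $4; gsub(/ /, "", s); print s } }'
}

# Usage sketch: wait_for_status 60 ACTIVE vm_status vm01 && ping -c 2 10.1.1.2
```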

Test connectivity to the instance:

[root@openstack ~]# ping 10.1.1.2

PING 10.1.1.2 (10.1.1.2) 56(84) bytes of data.

64 bytes from 10.1.1.2: icmp_seq=1 ttl=64 time=0.057 ms

64 bytes from 10.1.1.2: icmp_seq=2 ttl=64 time=0.037 ms

--- 10.1.1.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1639ms

rtt min/avg/max/mdev = 0.037/0.047/0.057/0.010 ms

8. Install and configure Horizon

(1). Install Horizon:

[root@openstack ~]# yum -y install openstack-dashboard

(2). In local_settings, change DEBUG = False to DEBUG = True:

[root@openstack ~]# vi /etc/openstack-dashboard/local_settings

DEBUG = True

……

(3). In httpd.conf, change #ServerName www.example.com:80 to ServerName 192.168.10.21:80:

[root@openstack ~]# vi /etc/httpd/conf/httpd.conf

#ServerName www.example.com:80

ServerName 192.168.10.21:80

(4). In local_settings.py, change "Member" to "admin":

[root@openstack ~]# find / -name local_settings.py

/usr/share/openstack-dashboard/openstack_dashboard/local/local_settings.py

[root@openstack keystone]# cat /usr/share/openstack-dashboard/openstack_dashboard/local/local_settings.py | grep OPENSTACK_KEYSTONE_DEFAULT_ROLE

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "Member"

[root@openstack keystone]# vi /usr/share/openstack-dashboard/openstack_dashboard/local/local_settings.py

#OPENSTACK_KEYSTONE_DEFAULT_ROLE = "Member"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "admin"

(5). Start httpd and enable it at boot:

[root@openstack ~]# service httpd start

[root@openstack ~]# chkconfig httpd on

(6). Restart nova-api:

[root@openstack ~]# service openstack-nova-api restart

(7). Add firewall rules (HTTP on port 80, VNC on 5900-6000, the noVNC proxy on 6080):

[root@openstack ~]# iptables -I INPUT -p tcp --dport 80 -j ACCEPT

[root@openstack ~]# iptables -I INPUT -p tcp -m multiport --dports 5900:6000 -j ACCEPT

[root@openstack ~]# iptables -I INPUT -p tcp --dport 6080 -j ACCEPT

[root@openstack ~]# service iptables save

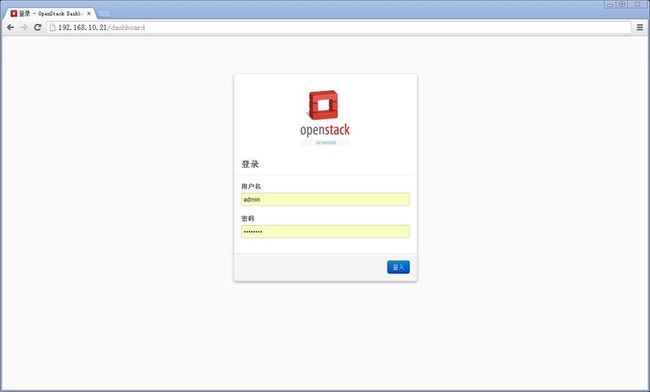

Horizon is now installed. Open http://192.168.10.21/dashboard to reach the OpenStack web interface and log in with:

Username: admin

Password: password

9. Add a new project

(1). Create a tenant for the operator user:

[root@openstack ~]# keystone tenant-create --name=manager --description='Manager Tenant'

(2). Create the operator user:

[root@openstack ~]# keystone user-create --name=manager --pass=password

(3). Create a management role named manager:

[root@openstack ~]# keystone role-create --name=manager

(4). Grant the manager role to the manager user:

[root@openstack ~]# keystone user-role-add --user=manager --tenant=manager --role=manager

(5). Also grant the manager role to the nova user in the service tenant:

[root@openstack ~]# keystone user-role-add --user=nova --tenant=service --role=manager

*******************************************************************************************

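III. Compute node installation (Node01)

1. Basic configuration

The operating system is installed from CentOS-6.4-x86_64-minimal.iso (installation steps omitted); all packages in this guide are installed from yum repositories.

(1). Add the third-party repositories (EPEL, RepoForge, and RDO Havana)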

[root@node01 ~]# rpm -Uvh http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

[root@node01 ~]# rpm -Uvh http://pkgs.repoforge.org/rpmforge-release/rpmforge-release-0.5.3-1.el6.rf.x86_64.rpm

[root@node01 ~]# yum install http://repos.fedorapeople.org/repos/openstack/openstack-havana/rdo-release-havana-7.noarch.rpm

(2). Configure the /etc/hosts file

[root@node01 ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.10.21 openstack

192.168.10.22 node01

(3). Configure the network interfaces

[root@node01 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE="eth0"

BOOTPROTO="static"

HWADDR="E4:1F:13:45:AB:C1"

ONBOOT="yes"

IPADDR=192.168.10.22

NETMASK=255.255.255.0

GATEWAY=192.168.10.1

TYPE="Ethernet"

[root@node01 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE="eth1"

BOOTPROTO="none"

HWADDR="E4:1F:13:45:AB:C3"

ONBOOT="yes"

TYPE="Ethernet"

(4). Disable SELinux:

[root@node01 ~]# more /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - SELinux is fully disabled.

SELINUX=disabled

# SELINUXTYPE= type of policy in use. Possible values are:

# targeted - Only targeted network daemons are protected.

# strict - Full SELinux protection.

SELINUXTYPE=targeted

[root@node01 ~]# setenforce 0

(5). Edit /etc/sysctl.conf to enable IP forwarding:

[root@node01 ~]# vi /etc/sysctl.conf

……………………

net.ipv4.ip_forward = 1

……………………

[root@node01 ~]# sysctl -p    # apply the settings in sysctl.conf

2. Install and configure the NTP client

(1). Install the NTP client:

[root@node01 ~]# yum -y install ntpdate

(2). Synchronize the clock with the management node:

[root@node01 ~]# ntpdate 192.168.10.21

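31 Mar 16:17:03 ntpdate[5848]: the NTP socket is in use, exiting

This message means an ntpd daemon is already running on node01 and holds the NTP port; stop it first (service ntpd stop) and run ntpdate again.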

(3). Add a scheduled job:

[root@node01 ~]# crontab -e

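Add the following line (the full path is used because user crontabs run with a minimal PATH that does not include /usr/sbin):

*/5 * * * * /usr/sbin/ntpdate 192.168.10.21 >> /var/log/ntpdate.log 2>&1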

3. Set environment variables

Add the following variables to .bash_profile:

[root@node01 ~]# vi .bash_profile

………………

export OS_USERNAME=admin

export OS_TENANT_NAME=admin

export OS_PASSWORD=password

export OS_AUTH_URL=http://192.168.10.21:5000/v2.0

export SERVICE_ENDPOINT=http://192.168.10.21:35357/v2.0

export SERVICE_TOKEN=12dd70ede7c9d9d3ed3c

………………

Run the following command so the variables take effect immediately:

[root@node01 ~]# source .bash_profile

4. Install and configure libvirt

(1). Install libvirt:

[root@node01 ~]# yum -y install qemu-kvm libvirt

(2). Start libvirtd:

[root@node01 ~]# service libvirtd start

(3). List the networks; the default network is present:

[root@node01 ~]# virsh net-list

Name State Autostart Persistent

--------------------------------------------------

default active yes yes

(4). Remove the default network (virbr0):

[root@node01 ~]# virsh net-destroy default

Network default destroyed

[root@node01 ~]# virsh net-undefine default

Network default has been undefined

(5). Restart libvirtd and enable it at boot:

[root@node01 ~]# service libvirtd restart

[root@node01 ~]# chkconfig libvirtd on

5. Install the MySQL client

(1). Install the mysql client:

[root@node01 ~]# yum -y install mysql

(2). Check connectivity to the MySQL database on the management node:

[root@node01 ~]# mysql -h 192.168.10.21 -unova -pnova

Welcome to the MySQL monitor. ………………

mysql> quit;

Bye

6. Install and configure Nova

(1). Install nova-compute, nova-network, and nova-scheduler:

[root@node01 ~]# yum -y install openstack-nova-compute openstack-nova-network openstack-nova-scheduler

(2). Edit the nova configuration file:

[root@node01 ~]# vi /etc/nova/nova.conf

[DEFAULT]

my_ip = 192.168.10.22

auth_strategy = keystone

state_path = /openstack/lib/nova

verbose=True

allow_resize_to_same_host = true

rpc_backend = nova.openstack.common.rpc.impl_qpid

qpid_hostname = 192.168.10.21

libvirt_type = kvm

glance_api_servers = 192.168.10.21:9292

novncproxy_base_url = http://192.168.10.21:6080/vnc_auto.html

vncserver_listen = 192.168.10.22

vncserver_proxyclient_address = 192.168.10.22

vnc_enabled = true

vnc_keymap = en-us

network_manager = nova.network.manager.FlatDHCPManager

firewall_driver = nova.virt.firewall.NoopFirewallDriver

multi_host = True

flat_interface = eth1

flat_network_bridge = br1

public_interface = eth0

instance_usage_audit = True

instance_usage_audit_period = hour

notify_on_state_change = vm_and_task_state

notification_driver = nova.openstack.common.notifier.rpc_notifier

compute_scheduler_driver=nova.scheduler.simple.SimpleScheduler

[hyperv]

[zookeeper]

[osapi_v3]

[conductor]

[keymgr]

[cells]

[database]

sql_connection=mysql://nova:nova@192.168.10.21/nova

[image_file_url]

[baremetal]

[rpc_notifier2]

[matchmaker_redis]

[ssl]

[trusted_computing]

[upgrade_levels]

[matchmaker_ring]

[vmware]

[spice]

[keystone_authtoken]

auth_host = 192.168.10.21

auth_port = 35357

auth_protocol = http

admin_user = nova

admin_tenant_name = service

admin_password = service

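As on the management node, state_path in this nova.conf moves where instances are stored, so one more step is needed:

Copy the nova data directory to the new path and set its ownership: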

[root@node01 ~]# cp -r /var/lib/nova/ /openstack/lib/

[root@node01 ~]# chown -R nova:nova /openstack/lib/nova/

(3). Start the Nova-related services and enable them at boot

[root@node01 ~]# service messagebus start

[root@node01 ~]# chkconfig messagebus on

[root@node01 ~]# service openstack-nova-compute start

[root@node01 ~]# service openstack-nova-network start

[root@node01 ~]# chkconfig openstack-nova-compute on

[root@node01 ~]# chkconfig openstack-nova-network on

*******************************************************************************************

Other common OpenStack commands:

1. List the nova services on both the management node and the compute node:

[root@openstack ~]# nova service-list

+------------------+-------------+----------+---------+-------+----------------------------+-----------------+

| Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |

+------------------+-------------+----------+---------+-------+----------------------------+-----------------+

| nova-cert | openstack | internal | enabled | up | 2014-03-31T08:59:50.000000 | - |

| nova-consoleauth | openstack | internal | enabled | up | 2014-03-31T08:59:54.000000 | - |

| nova-scheduler | openstack | internal | enabled | up | 2014-03-31T08:59:48.000000 | - |

| nova-conductor | openstack | internal | enabled | up | 2014-03-31T08:59:52.000000 | - |

| nova-compute | openstack | nova | enabled | up | 2014-03-31T08:59:56.000000 | - |

| nova-compute | node01 | nova | enabled | up | 2014-03-31T08:59:53.000000 | - |

| nova-network | openstack | internal | enabled | up | 2014-03-31T08:59:50.000000 | - |

| nova-network | node01 | internal | enabled | up | 2014-03-31T08:59:56.000000 | - |

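| nova-scheduler | node01 | internal | enabled | up | 2014-03-31T08:59:52.000000 | - |

+------------------+-------------+----------+---------+-------+----------------------------+-----------------+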
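In a larger deployment it is easier to list only the problem rows. A sketch that prints the binary and host of every service whose State is not up, assuming the column layout of the nova service-list output above (down_services is a hypothetical name):

```shell
#!/bin/sh
# down_services
# Reads "nova service-list" table output on stdin and prints "BINARY HOST" for
# every data row whose State column is not "up". The header row and the
# "+---+" border lines (which contain no "|") are skipped.
down_services() {
    awk -F'|' '
        NF >= 7 {
            binary = $2; host = $3; state = $6
            gsub(/ /, "", binary); gsub(/ /, "", host); gsub(/ /, "", state)
            if (binary != "Binary" && state != "up") print binary, host
        }'
}

# Usage sketch: nova service-list | down_services
```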

2. List the compute nodes (hypervisors):

[root@node01 ~]# nova hypervisor-list

+----+---------------------+

| ID | Hypervisor hostname |

+----+---------------------+

| 1 | openstack |

| 2 | node01 |

+----+---------------------+

3. List the flavors (instance templates):

[root@node01 ~]# nova flavor-list

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

| ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

| 1 | m1.tiny | 512 | 1 | 0 | | 1 | 1.0 | True |

| 2 | m1.small | 2048 | 20 | 0 | | 1 | 1.0 | True |

| 3 | m1.medium | 4096 | 40 | 0 | | 2 | 1.0 | True |

| 4 | m1.large | 8192 | 80 | 0 | | 4 | 1.0 | True |

| 5 | m1.xlarge | 16384 | 160 | 0 | | 8 | 1.0 | True |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+