2013-07-31: OpenStack installation tutorial, first draft

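Until now my work has been automation and cloud computing built on BMC products. Recently I wanted to learn something about the lower layers and open source, so I picked OpenStack. The learning process was not easy: out of habit I started with Baidu and found a number of articles, but most of them had serious problems. In the end I followed the official documentation for the installation. A few points need explaining first: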

1. OpenStack has had eight releases since its inception. The latest is Havana (the H release). The release history is:

Havana Due

Grizzly 2013.1.2

Folsom 2012.2.4

Essex 2012.1.3

Diablo 2011.3.1

Cactus 2011.2

Bexar 2011.1

Austin 2010.1

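Most tutorials online cover the Essex (E) release. Because everyone's environment differs, following them tends to produce many problems, and troubleshooting those problems is very hard for an OpenStack newcomer. This installation uses the Grizzly (G) release. Beginners are likewise advised to study from the official English documentation.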

2. Network and architecture considerations

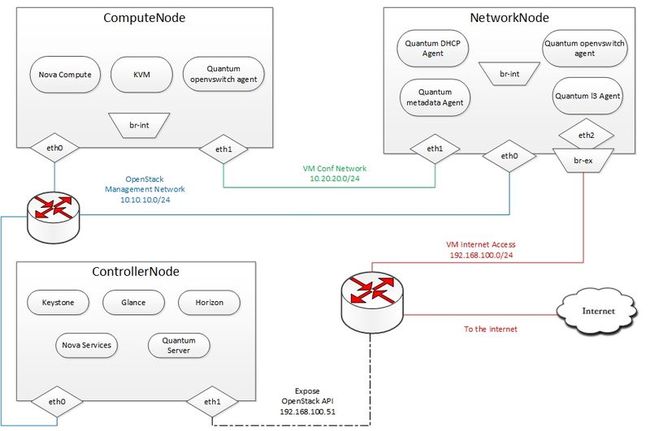

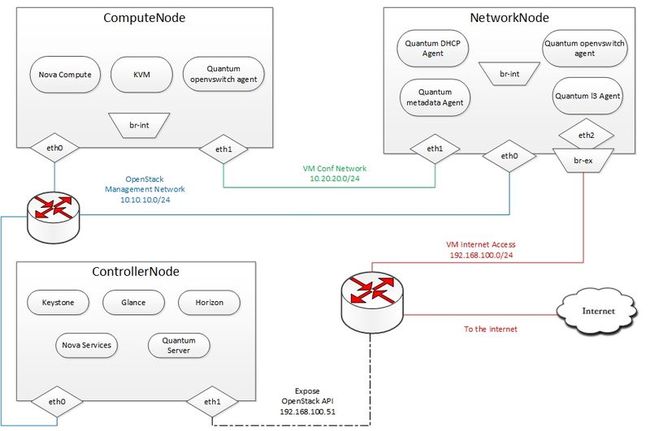

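Plan the network and architecture before installing: one NIC or several, a single node or multiple nodes. For a first installation I recommend the official reference architecture, with multiple NICs and multiple nodes, because it makes the logical relationships between the OpenStack components easier to understand.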

1. Installation architecture diagram (official multi-NIC, multi-node deployment)

2. Environment requirements

VMware Workstation network configuration

controller VM1

eth0 (192.168.70.247)

Network VM2

eth0 (192.168.70.248)

eth1 (192.168.2.2)

eth2 (192.168.3.2)

Compute VM3

eth0 (192.168.70.249)

eth1 (192.168.2.3)

3. Install the Controller Node

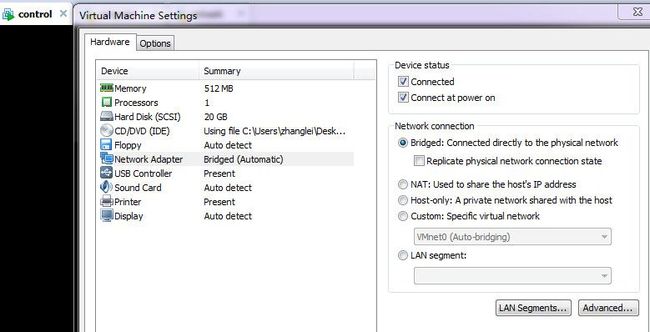

3.1 Network settings for the vm1 virtual machine

The controller's eth1 NIC mainly exposes the API to the public network, so it is not needed here.

3.2 Initialize the Ubuntu environment. The package source must be updated first; otherwise the Folsom (F) release is installed.

apt-get install -y ubuntu-cloud-keyring

echo deb http://ubuntu-cloud.archive.canonical.com/ubuntu precise-updates/grizzly main >> /etc/apt/sources.list.d/grizzly.list

apt-get update -y

apt-get upgrade -y

apt-get -y dist-upgrade
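Because the echo above uses >>, running it a second time appends a duplicate line to grizzly.list. A minimal sketch of an idempotent variant follows. add_line_once is a hypothetical helper name, and the demo writes to a scratch file instead of /etc/apt/sources.list.d/grizzly.list.

```shell
#!/bin/sh
# add_line_once FILE LINE: append LINE to FILE unless an identical line exists.
# (hypothetical helper, not part of apt)
add_line_once() {
    touch "$1"
    grep -qxF -- "$2" "$1" || printf '%s\n' "$2" >> "$1"
}

repo_line='deb http://ubuntu-cloud.archive.canonical.com/ubuntu precise-updates/grizzly main'
demo_list=$(mktemp)   # scratch file standing in for grizzly.list

add_line_once "$demo_list" "$repo_line"
add_line_once "$demo_list" "$repo_line"   # second call changes nothing
grep -c . "$demo_list"                    # number of non-empty lines
```

On a real node the same call would target the grizzly.list path used above, followed by apt-get update.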

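3.3 Network settings. Pay attention to which NIC you configure. Set DNS to 8.8.8.8 or your own server. Setting the hostname is optional but recommended; I set mine to controller.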

root@openstack:~# cat /etc/network/interfaces

# This file describes the network interfaces available on your system

# and how to activate them. For more information, see interfaces(5).

# The loopback network interface

auto lo

iface lo inet loopback

# The primary network interface

auto eth0

iface eth0 inet static

address 192.168.70.247

netmask 255.255.255.0

gateway 192.168.70.1

root@openstack:~# cat /etc/resolv.conf

# Dynamic resolv.conf(5) file for glibc resolver(3) generated by resolvconf(8)

# DO NOT EDIT THIS FILE BY HAND -- YOUR CHANGES WILL BE OVERWRITTEN

nameserver 119.6.6.6

sudo /etc/init.d/networking restart

3.4 Install MySQL. The installer prompts for a root password.

apt-get install -y mysql-server python-mysqldb

Allow MySQL to accept external connections:

sed -i 's/127.0.0.1/0.0.0.0/g' /etc/mysql/my.cnf

service mysql restart
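The sed above rewrites every occurrence of 127.0.0.1 in my.cnf, and its unescaped dots match any character. A narrower sketch that only touches the bind-address line is shown below on a scratch copy. The sample file contents are made up for the demo.

```shell
#!/bin/sh
demo_cnf=$(mktemp)   # scratch file standing in for /etc/mysql/my.cnf
cat > "$demo_cnf" <<'EOF'
[mysqld]
# clients on this host connect via 127.0.0.1
bind-address = 127.0.0.1
EOF

# Only lines that start with bind-address are edited; the dots are escaped.
sed -i '/^bind-address/s/127\.0\.0\.1/0.0.0.0/' "$demo_cnf"
cat "$demo_cnf"
```

The comment line keeps its 127.0.0.1 while the bind-address line changes, which is the difference from the global replacement.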

3.5 Install RabbitMQ

Install RabbitMQ:

apt-get install -y rabbitmq-server

Install the NTP service (the time synchronization component; unsynchronized clocks cause many problems later on):

apt-get install -y ntp

Initialize the MySQL databases. I changed the root password used in the official guide to password throughout:

mysql -uroot -ppassword <<EOF

CREATE DATABASE keystone;

GRANT ALL ON keystone.* TO 'keystoneUser'@'%' IDENTIFIED BY 'keystonePass';

CREATE DATABASE glance;

GRANT ALL ON glance.* TO 'glanceUser'@'%' IDENTIFIED BY 'glancePass';

CREATE DATABASE quantum;

GRANT ALL ON quantum.* TO 'quantumUser'@'%' IDENTIFIED BY 'quantumPass';

CREATE DATABASE nova;

GRANT ALL ON nova.* TO 'novaUser'@'%' IDENTIFIED BY 'novaPass';

CREATE DATABASE cinder;

GRANT ALL ON cinder.* TO 'cinderUser'@'%' IDENTIFIED BY 'cinderPass';

FLUSH PRIVILEGES;

EOF
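The five CREATE/GRANT pairs above follow one naming pattern (database name, nameUser, namePass), so they can be generated from a list of service names. A minimal sketch, where gen_service_sql is a hypothetical helper that only prints SQL and does not touch the database:

```shell
#!/bin/sh
# gen_service_sql NAME...: print CREATE DATABASE and GRANT statements
# using the <name>User / <name>Pass convention from this tutorial.
gen_service_sql() {
    for svc in "$@"; do
        printf 'CREATE DATABASE %s;\n' "$svc"
        printf "GRANT ALL ON %s.* TO '%sUser'@'%%' IDENTIFIED BY '%sPass';\n" \
            "$svc" "$svc" "$svc"
    done
    printf 'FLUSH PRIVILEGES;\n'
}

gen_service_sql keystone glance quantum nova cinder
```

The output could then be piped into mysql -uroot -p in place of the here-document. The passwords are the tutorial's sample values and should be changed for anything beyond a lab setup.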

3.6 Install other components

apt-get install -y vlan bridge-utils

sed -i 's/#net.ipv4.ip_forward=1/net.ipv4.ip_forward=1/' /etc/sysctl.conf

3.7 Install Keystone, the core OpenStack module responsible for centralized authentication

apt-get install -y keystone

In the configuration file /etc/keystone/keystone.conf, change the database connection:

Before: connection = sqlite:////var/lib/keystone/keystone.db

After: connection = mysql://keystoneUser:keystonePass@192.168.70.247/keystone

The change can also be made with the following command:

sed -i '/connection = .*/{s|sqlite:///.*|mysql://'"keystoneUser"':'"keystonePass"'@'"192.168.70.247"'/keystone|g}' /etc/keystone/keystone.conf

service keystone restart

keystone-manage db_sync

Download the scripts that load the initial data:

wget https://raw.github.com/mseknibilel/OpenStack-Grizzly-Install-Guide/OVS_MultiNode/KeystoneScripts/keystone_basic.sh

wget https://raw.github.com/mseknibilel/OpenStack-Grizzly-Install-Guide/OVS_MultiNode/KeystoneScripts/keystone_endpoints_basic.sh

In the downloaded scripts, change **HOST_IP** and **EXT_HOST_IP** to your own IP address:

sed -i -e " s/10.10.10.51/192.168.70.247/g " keystone_basic.sh

sed -i -e " s/10.10.10.51/192.168.70.247/g; s/192.168.100.51/192.168.70.247/g; " keystone_endpoints_basic.sh

chmod +x keystone_basic.sh

chmod +x keystone_endpoints_basic.sh

./keystone_basic.sh

./keystone_endpoints_basic.sh

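Set the environment variables. Note the file path: I run all my operations from a directory I created myself, so /opt/openstack must already exist.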

cat >/opt/openstack/novarc <<EOF

export OS_TENANT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=admin_pass

export OS_AUTH_URL="http://192.168.70.247:5000/v2.0/"

EOF

source /opt/openstack/novarc

Check the Keystone installation. Output like the following means it succeeded:

root@controll:/opt/openstack# keystone user-list

+----------------------------------+---------+---------+--------------------+

| id | name | enabled | email |

+----------------------------------+---------+---------+--------------------+

| 9a3d119d7a314ce59c6f7ce13866dab8 | admin | True | [email protected] |

| 4d6af357450840f5bbd62969d286e509 | cinder | True | [email protected] |

| 33c2eda2f2c84f6391d3bf4ad10312e5 | glance | True | [email protected] |

| 3877d5c411b647d79573673920938929 | nova | True | [email protected] |

| bdca016a814c4065a9c5358a0f5d60bb | quantum | True | [email protected] |

+----------------------------------+---------+---------+--------------------+

root@controll:/opt/openstack#

3.8 Install Glance

Install the package:

apt-get install -y glance

In /etc/glance/glance-api-paste.ini, add the following under [filter:authtoken] and comment out the previous contents. Remember to use your own IP address.

[filter:authtoken]

paste.filter_factory = keystoneclient.middleware.auth_token:filter_factory

delay_auth_decision = true

auth_host = 192.168.70.247

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = glance

admin_password = service_pass

In /etc/glance/glance-registry-paste.ini, add the following under [filter:authtoken] and comment out the previous contents. Remember to use your own IP address.

[filter:authtoken]

paste.filter_factory = keystoneclient.middleware.auth_token:filter_factory

auth_host = 192.168.70.247

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = glance

admin_password = service_pass

In /etc/glance/glance-api.conf, set:

sql_connection = mysql://glanceUser:glancePass@192.168.70.247/glance

[paste_deploy]

flavor = keystone

In /etc/glance/glance-registry.conf, set:

sql_connection = mysql://glanceUser:glancePass@192.168.70.247/glance

[paste_deploy]

flavor = keystone
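Hand-editing the same key in several ini files is easy to get wrong. The sketch below sets one key inside one section. ini_set is a hypothetical helper written for this post (not an OpenStack tool), it assumes simple "key = value" lines, and the demo runs against a scratch file rather than the real glance-api.conf.

```shell
#!/bin/sh
# ini_set FILE SECTION KEY VALUE (hypothetical helper):
# replace KEY inside [SECTION], or append it at the end of that section.
# Does nothing if the section is absent.
ini_set() {
    awk -v sec="[$2]" -v key="$3" -v val="$4" '
        function emit() { if (insec && !done) { print key " = " val; done = 1 } }
        /^\[/ { emit(); insec = ($0 == sec) }
        insec && $0 ~ ("^[ \t]*" key "[ \t]*=") { emit(); next }
        { print }
        END { emit() }
    ' "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

demo_conf=$(mktemp)   # scratch file standing in for glance-api.conf
printf '%s\n' '[DEFAULT]' 'verbose = True' '[paste_deploy]' '#flavor=' > "$demo_conf"

ini_set "$demo_conf" paste_deploy flavor keystone
ini_set "$demo_conf" paste_deploy flavor keystone   # second run changes nothing
cat "$demo_conf"
```

Commented-out keys such as #flavor= are left alone, and the new value is written once at the end of the section.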

service glance-api restart; service glance-registry restart

glance-manage db_sync

Test Glance:

root@controll:/etc/glance# glance index

ID Name Disk Format Container Format Size

------------------------------------ ------------------------------ -------------------- -------------------- --------------

Download an image. I recommend the CirrOS image, which is only about 10 MB:

wget https://launchpad.net/cirros/trunk/0.3.0/+download/cirros-0.3.0-x86_64-disk.img

Upload the image:

glance image-create --name myFirstImage --is-public true --container-format bare --disk-format qcow2 < cirros-0.3.0-x86_64-disk.img

Upload succeeded:

+------------------+--------------------------------------+

| Property | Value |

+------------------+--------------------------------------+

| checksum | 50bdc35edb03a38d91b1b071afb20a3c |

| container_format | bare |

| created_at | 2013-08-01T02:31:24 |

| deleted | False |

| deleted_at | None |

| disk_format | qcow2 |

| id | 7b5a9b07-e21a-49cd-87b6-7d70d3e9f9eb |

| is_public | True |

| min_disk | 0 |

| min_ram | 0 |

| name | myFirstImage |

| owner | b2f9f0cd99c54e008b5ccac2122b5ee5 |

| protected | False |

| size | 9761280 |

| status | active |

| updated_at | 2013-08-01T02:31:25 |

+------------------+--------------------------------------+

List the uploaded image:

root@controll:/opt/openstack# glance image-list

+--------------------------------------+--------------+-------------+------------------+---------+--------+

| ID | Name | Disk Format | Container Format | Size | Status |

+--------------------------------------+--------------+-------------+------------------+---------+--------+

| 7b5a9b07-e21a-49cd-87b6-7d70d3e9f9eb | myFirstImage | qcow2 | bare | 9761280 | active |

+--------------------------------------+--------------+-------------+------------------+---------+--------+

root@controll:/opt/openstack#
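The checksum row in the upload output can be compared with the local file to confirm the image arrived intact. This sketch assumes the checksum field is the MD5 of the image data. verify_md5 is a hypothetical helper, and the demo uses a tiny scratch file instead of the real image.

```shell
#!/bin/sh
# verify_md5 FILE EXPECTED: succeed only if FILE's MD5 equals EXPECTED.
# (hypothetical helper; md5sum comes from GNU coreutils)
verify_md5() {
    actual=$(md5sum "$1" | awk '{ print $1 }')
    [ "$actual" = "$2" ]
}

demo_img=$(mktemp)             # stand-in for the downloaded image
printf 'hello' > "$demo_img"   # the MD5 of "hello" is a well-known value

if verify_md5 "$demo_img" 5d41402abc4b2a76b9719d911017c592; then
    echo "checksum matches"
else
    echo "checksum differs"
fi
```

For the real image the call would be verify_md5 cirros-0.3.0-x86_64-disk.img 50bdc35edb03a38d91b1b071afb20a3c, using the value from the table above.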

3.9 Install Quantum

apt-get install -y quantum-server

Edit /etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini. tenant_network_type selects the network mode. OpenStack has four network modes; the more complex ones are vlan and gre. gre here is similar to a network container in BMC CLM.

#Under the database section

[DATABASE]

sql_connection = mysql://quantumUser:quantumPass@192.168.70.247/quantum

#Under the OVS section

[OVS]

tenant_network_type = gre

tunnel_id_ranges = 1:1000

enable_tunneling = True

#Firewall driver for realizing quantum security group function

[SECURITYGROUP]

firewall_driver = quantum.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

Edit the configuration file /etc/quantum/api-paste.ini:

[filter:authtoken]

paste.filter_factory = keystoneclient.middleware.auth_token:filter_factory

auth_host = 192.168.70.247

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = quantum

admin_password = service_pass

Edit the configuration file /etc/quantum/quantum.conf:

[keystone_authtoken]

auth_host = 192.168.70.247

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = quantum

admin_password = service_pass

signing_dir = /var/lib/quantum/keystone-signing

Restart the service:

service quantum-server restart

3.10 Install Nova

apt-get install -y nova-api nova-cert novnc nova-consoleauth nova-scheduler nova-novncproxy nova-doc nova-conductor

Edit the configuration file /etc/nova/api-paste.ini:

[filter:authtoken]

paste.filter_factory = keystoneclient.middleware.auth_token:filter_factory

auth_host = 192.168.70.247

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = nova

admin_password = service_pass

signing_dirname = /tmp/keystone-signing-nova

# Workaround for https://bugs.launchpad.net/nova/+bug/1154809

auth_version = v2.0

Edit the configuration file /etc/nova/nova.conf:

[DEFAULT]

logdir=/var/log/nova

state_path=/var/lib/nova

lock_path=/run/lock/nova

verbose=True

api_paste_config=/etc/nova/api-paste.ini

compute_scheduler_driver=nova.scheduler.simple.SimpleScheduler

rabbit_host=192.168.70.247

nova_url=http://192.168.70.247:8774/v1.1/

sql_connection=mysql://novaUser:novaPass@192.168.70.247/nova

root_helper=sudo nova-rootwrap /etc/nova/rootwrap.conf

# Auth

use_deprecated_auth=false

auth_strategy=keystone

# Imaging service

glance_api_servers=192.168.70.247:9292

image_service=nova.image.glance.GlanceImageService

# Vnc configuration

novnc_enabled=true

novncproxy_base_url=http://192.168.70.247:6080/vnc_auto.html

novncproxy_port=6080

vncserver_proxyclient_address=192.168.70.247

vncserver_listen=0.0.0.0

# Network settings

network_api_class=nova.network.quantumv2.api.API

quantum_url=http://192.168.70.247:9696

quantum_auth_strategy=keystone

quantum_admin_tenant_name=service

quantum_admin_username=quantum

quantum_admin_password=service_pass

quantum_admin_auth_url=http://192.168.70.247:35357/v2.0

libvirt_vif_driver=nova.virt.libvirt.vif.LibvirtHybridOVSBridgeDriver

linuxnet_interface_driver=nova.network.linux_net.LinuxOVSInterfaceDriver

#If you want Quantum + Nova Security groups

firewall_driver=nova.virt.firewall.NoopFirewallDriver

security_group_api=quantum

#If you want Nova Security groups only, comment the two lines above and uncomment line -1-.

#-1-firewall_driver=nova.virt.libvirt.firewall.IptablesFirewallDriver

#Metadata

service_quantum_metadata_proxy = True

quantum_metadata_proxy_shared_secret = helloOpenStack

# Compute #

compute_driver=libvirt.LibvirtDriver

# Cinder #

volume_api_class=nova.volume.cinder.API

osapi_volume_listen_port=5900

Sync the database:

nova-manage db sync

Restart the services:

cd /etc/init.d/; for i in $( ls nova-* ); do sudo service $i restart; done

Check the service status. Output like the following is normal:

root@controll:/etc/init.d# nova-manage service list

Binary Host Zone Status State Updated_At

nova-cert controll internal enabled :-) None

nova-consoleauth controll internal enabled :-) None

nova-conductor controll internal enabled :-) None

nova-scheduler controll internal enabled :-) None

3.11 Install Cinder, which provides block storage to virtual machines

Note: before installing, add a disk to the virtual machine and reboot.

apt-get install -y cinder-api cinder-scheduler cinder-volume iscsitarget open-iscsi iscsitarget-dkms

Configure iSCSI:

sed -i 's/false/true/g' /etc/default/iscsitarget

Start the services:

service iscsitarget start

service open-iscsi start

Edit the configuration file /etc/cinder/api-paste.ini:

[filter:authtoken]

paste.filter_factory = keystoneclient.middleware.auth_token:filter_factory

service_protocol = http

service_host = 192.168.70.247

service_port = 5000

auth_host = 192.168.70.247

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = cinder

admin_password = service_pass

signing_dir = /var/lib/cinder

Edit the configuration file /etc/cinder/cinder.conf:

[DEFAULT]

rootwrap_config=/etc/cinder/rootwrap.conf

sql_connection = mysql://cinderUser:cinderPass@192.168.70.247/cinder

api_paste_config = /etc/cinder/api-paste.ini

iscsi_helper=ietadm

volume_name_template = volume-%s

volume_group = cinder-volumes

verbose = True

auth_strategy = keystone

iscsi_ip_address=192.168.70.247

Sync the database. This step prints output:

cinder-manage db sync

Create a volume group named cinder-volumes. This uses the disk added at the start.

Run fdisk -l:

Disk /dev/sda: 21.5 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders, total 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00005f37

Device Boot Start End Blocks Id System

/dev/sda1 * 2048 37750783 18874368 83 Linux

/dev/sda2 37752830 41940991 2094081 5 Extended

/dev/sda5 37752832 41940991 2094080 82 Linux swap / Solaris

Disk /dev/sdb: 21.5 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders, total 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdb doesn't contain a valid partition table

The output shows that /dev/sdb is available.

Run fdisk /dev/sdb

and enter the following at the prompts, in order:

n

p

1

ENTER

ENTER

t

8e

w
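The keystroke sequence above can also be written down as a small function, which makes it easier to review or reuse. fdisk_answers is a hypothetical name. The sketch only prints the answers and never touches a disk.

```shell
#!/bin/sh
# fdisk_answers: print the answers listed above, one per line.
# The two empty lines stand for ENTER, accepting fdisk's default
# first and last sector.
fdisk_answers() {
    printf '%s\n' n p 1 '' '' t 8e w
}

# Show the sequence with the empty lines made visible.
fdisk_answers | sed 's/^$/<ENTER>/'
```

The answers could in principle be fed to fdisk on standard input (fdisk_answers | fdisk /dev/sdb), but prompts differ between fdisk versions and the command is destructive, so check the target device first and verify the result with fdisk -l afterwards.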

Check the partitioning result with fdisk -l:

Disk /dev/sda: 21.5 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders, total 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00005f37

Device Boot Start End Blocks Id System

/dev/sda1 * 2048 37750783 18874368 83 Linux

/dev/sda2 37752830 41940991 2094081 5 Extended

/dev/sda5 37752832 41940991 2094080 82 Linux swap / Solaris

Disk /dev/sdb: 21.5 GB, 21474836480 bytes

213 heads, 34 sectors/track, 5791 cylinders, total 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x14815849

Device Boot Start End Blocks Id System

/dev/sdb1 2048 41943039 20970496 8e Linux LVM

Finally, create the physical volume and the volume group:

root@controll:~# pvcreate /dev/sdb1

Physical volume "/dev/sdb1" successfully created

root@controll:~# vgcreate cinder-volumes /dev/sdb1

Volume group "cinder-volumes" successfully created

root@controll:~#

Restart the services:

cd /etc/init.d/; for i in $( ls cinder-* ); do sudo service $i restart; done

3.12 Install Horizon

apt-get install -y openstack-dashboard memcached

Remove the Ubuntu OpenStack theme (optional):

dpkg --purge openstack-dashboard-ubuntu-theme

Restart the services:

service apache2 restart; service memcached restart

The controller node is now installed and can be reached at the following address:

http://192.168.70.247/horizon

Username: admin

Password: admin_pass

4. Install the Network Node

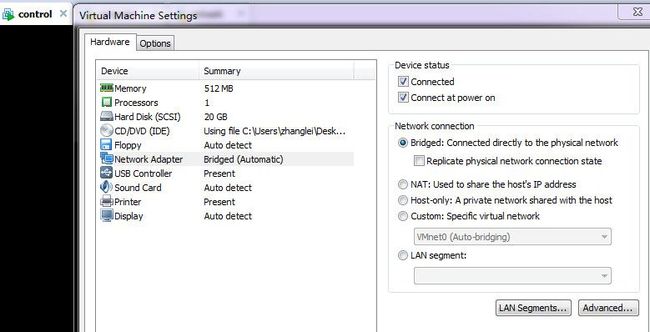

4.1 Initialize the node virtual machine

Configure the network as follows:

eth0 Link encap:Ethernet HWaddr 00:0c:29:08:dc:56

inet addr:192.168.70.248 Bcast:192.168.70.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe08:dc56/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:4175 errors:0 dropped:46 overruns:0 frame:0

TX packets:39 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:417150 (417.1 KB) TX bytes:6215 (6.2 KB)

Interrupt:19 Base address:0x2000

eth1 Link encap:Ethernet HWaddr 00:0c:29:08:dc:60

inet addr:192.168.2.2 Bcast:192.168.2.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe08:dc60/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:5 errors:0 dropped:0 overruns:0 frame:0

TX packets:6 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:2565 (2.5 KB) TX bytes:468 (468.0 B)

Interrupt:16 Base address:0x2080

Add the Grizzly repositories:

apt-get install -y ubuntu-cloud-keyring

echo deb http://ubuntu-cloud.archive.canonical.com/ubuntu precise-updates/grizzly main >> /etc/apt/sources.list.d/grizzly.list

Upgrade the system:

apt-get update -y

apt-get upgrade -y

apt-get dist-upgrade -y

Install the NTP service:

apt-get install -y ntp

Configure the NTP service:

#Comment the ubuntu NTP servers

sed -i 's/server 0.ubuntu.pool.ntp.org/#server 0.ubuntu.pool.ntp.org/g' /etc/ntp.conf

sed -i 's/server 1.ubuntu.pool.ntp.org/#server 1.ubuntu.pool.ntp.org/g' /etc/ntp.conf

sed -i 's/server 2.ubuntu.pool.ntp.org/#server 2.ubuntu.pool.ntp.org/g' /etc/ntp.conf

sed -i 's/server 3.ubuntu.pool.ntp.org/#server 3.ubuntu.pool.ntp.org/g' /etc/ntp.conf

#Set the network node to follow your controller node

sed -i 's/server ntp.ubuntu.com/server 192.168.70.247/g' /etc/ntp.conf

service ntp restart
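The five sed calls above can be folded into one invocation with two expressions. A minimal sketch on a scratch file follows. The sample ntp.conf contents are assumed from the patterns the original commands match, and controller_ip is a variable introduced for the demo.

```shell
#!/bin/sh
controller_ip=192.168.70.247

demo_ntp=$(mktemp)   # scratch file standing in for /etc/ntp.conf
cat > "$demo_ntp" <<'EOF'
server 0.ubuntu.pool.ntp.org
server 1.ubuntu.pool.ntp.org
server 2.ubuntu.pool.ntp.org
server 3.ubuntu.pool.ntp.org
server ntp.ubuntu.com
EOF

# First expression comments out the four pool servers ("&" is the match);
# second expression points the fallback server at the controller.
sed -i -e 's/^server [0-3]\.ubuntu\.pool\.ntp\.org/#&/' \
       -e "s/^server ntp\.ubuntu\.com/server $controller_ip/" "$demo_ntp"
cat "$demo_ntp"
```

Anchoring on ^server also means a second run does not stack another # in front of lines that are already commented out.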

Install other components:

apt-get install -y vlan bridge-utils

Enable IP forwarding:

sed -i 's/#net.ipv4.ip_forward=1/net.ipv4.ip_forward=1/' /etc/sysctl.conf

# To save you from rebooting, perform the following

sysctl net.ipv4.ip_forward=1

4.2安装OpenVSwitch

apt-get install -y openvswitch-switch openvswitch-datapath-dkms

Create the bridges:

#br-int will be used for VM integration

ovs-vsctl add-br br-int

#br-ex is used to make the VMs accessible from the internet

ovs-vsctl add-br br-ex

root@networkvm:/etc# ovs-vsctl show

e9c13238-c723-47f9-8af9-9af75ac8d006

Bridge br-ex

Port br-ex

Interface br-ex

type: internal

Bridge br-int

Port br-int

Interface br-int

type: internal

ovs_version: "1.4.0+build0"

4.3 Install Quantum

apt-get -y install quantum-plugin-openvswitch-agent quantum-dhcp-agent quantum-l3-agent quantum-metadata-agent

Edit /etc/quantum/api-paste.ini. Note that the IP address is that of the Keystone host:

[filter:authtoken]

paste.filter_factory = keystoneclient.middleware.auth_token:filter_factory

auth_host = 192.168.70.247

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = quantum

admin_password = service_pass

Edit /etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini. local_ip is the IP of this machine's eth1 NIC:

#Under the database section

[DATABASE]

sql_connection = mysql://quantumUser:quantumPass@192.168.70.247/quantum

#Under the OVS section

[OVS]

tenant_network_type = gre

tunnel_id_ranges = 1:1000

integration_bridge = br-int

tunnel_bridge = br-tun

local_ip = 192.168.2.2

enable_tunneling = True

#Firewall driver for realizing quantum security group function

[SECURITYGROUP]

firewall_driver = quantum.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

Edit the configuration file /etc/quantum/metadata_agent.ini:

# The Quantum user information for accessing the Quantum API.

auth_url = http://192.168.70.247:35357/v2.0

auth_region = RegionOne

admin_tenant_name = service

admin_user = quantum

admin_password = service_pass

# IP address used by Nova metadata server

nova_metadata_ip = 192.168.70.247

# TCP Port used by Nova metadata server

nova_metadata_port = 8775

metadata_proxy_shared_secret = helloOpenStack

Edit the configuration file /etc/quantum/quantum.conf:

rabbit_host = 192.168.70.247

#And update the keystone_authtoken section

[keystone_authtoken]

auth_host = 192.168.70.247

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = quantum

admin_password = service_pass

signing_dir = /var/lib/quantum/keystone-signing

Edit the configuration file /etc/sudoers.d/quantum_sudoers:

quantum ALL=NOPASSWD: ALL

Restart the services. If you see sudo: unable to resolve host networkvm, add the hostname to the hosts file.

cd /etc/init.d/; for i in $( ls quantum-* ); do sudo service $i restart; done
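The "unable to resolve host" message goes away once the machine's hostname has an entry in /etc/hosts. A sketch of adding that entry only when it is missing is shown below. ensure_host_entry is a hypothetical helper, and the demo edits a scratch file rather than the real /etc/hosts.

```shell
#!/bin/sh
# ensure_host_entry FILE IP NAME (hypothetical helper):
# append "IP NAME" unless some line already lists NAME as a hostname.
ensure_host_entry() {
    if ! awk -v n="$3" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                        END { exit !found }' "$1"; then
        printf '%s %s\n' "$2" "$3" >> "$1"
    fi
}

demo_hosts=$(mktemp)   # scratch file standing in for /etc/hosts
echo '127.0.0.1 localhost' > "$demo_hosts"

ensure_host_entry "$demo_hosts" 192.168.70.248 networkvm
ensure_host_entry "$demo_hosts" 192.168.70.248 networkvm   # no duplicate added
cat "$demo_hosts"
```

The IP and hostname are the network node's values from this tutorial; substitute your own.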

4.4 Configure OpenVSwitch (part 2)

Configure the eth2 NIC in /etc/network/interfaces:

# VM internet Access

auto eth2

iface eth2 inet manual

up ifconfig $IFACE 0.0.0.0 up

up ip link set $IFACE promisc on

down ip link set $IFACE promisc off

down ifconfig $IFACE down

Attach the eth2 NIC to the external bridge:

ovs-vsctl add-port br-ex eth2

root@networkvm:/etc/init.d# ovs-vsctl show

e9c13238-c723-47f9-8af9-9af75ac8d006

Bridge br-ex

Port "eth2"

Interface "eth2"

Port br-ex

Interface br-ex

type: internal

Bridge br-tun

Port br-tun

Interface br-tun

type: internal

Port patch-int

Interface patch-int

type: patch

options: {peer=patch-tun}

Bridge br-int

Port patch-tun

Interface patch-tun

type: patch

options: {peer=patch-int}

Port br-int

Interface br-int

type: internal

ovs_version: "1.4.0+build0"

5. Install the Compute Node

5.1 Initialize the node virtual machine

apt-get install -y ubuntu-cloud-keyring

echo deb http://ubuntu-cloud.archive.canonical.com/ubuntu precise-updates/grizzly main >> /etc/apt/sources.list.d/grizzly.list

apt-get update -y

apt-get upgrade -y

apt-get dist-upgrade -y

# The loopback network interface

auto lo

iface lo inet loopback

# The primary network interface

auto eth0

iface eth0 inet static

address 192.168.70.249

netmask 255.255.255.0

gateway 192.168.70.1

auto eth1

iface eth1 inet static

address 192.168.2.3

netmask 255.255.255.0

Reboot the virtual machine.

Install the NTP service:

apt-get install -y ntp

Configure the NTP service:

#Comment the ubuntu NTP servers

sed -i 's/server 0.ubuntu.pool.ntp.org/#server 0.ubuntu.pool.ntp.org/g' /etc/ntp.conf

sed -i 's/server 1.ubuntu.pool.ntp.org/#server 1.ubuntu.pool.ntp.org/g' /etc/ntp.conf

sed -i 's/server 2.ubuntu.pool.ntp.org/#server 2.ubuntu.pool.ntp.org/g' /etc/ntp.conf

sed -i 's/server 3.ubuntu.pool.ntp.org/#server 3.ubuntu.pool.ntp.org/g' /etc/ntp.conf

#Set the compute node to follow your controller node

sed -i 's/server ntp.ubuntu.com/server 192.168.70.247/g' /etc/ntp.conf

service ntp restart

Install other services:

apt-get install -y vlan bridge-utils

Enable IP forwarding:

sed -i 's/#net.ipv4.ip_forward=1/net.ipv4.ip_forward=1/' /etc/sysctl.conf

# To save you from rebooting, perform the following

sysctl net.ipv4.ip_forward=1

5.2 Install KVM

Check whether the CPU supports hardware virtualization:

apt-get install -y cpu-checker

kvm-ok

It prints the following:

root@computervm:/opt/test# kvm-ok

INFO: Your CPU does not support KVM extensions

KVM acceleration can NOT be used

The virtual machine does not support KVM CPU acceleration, but this does not prevent the installation from working.

Install KVM:

apt-get install -y kvm libvirt-bin pm-utils

Edit /etc/libvirt/qemu.conf:

cgroup_device_acl = [

"/dev/null", "/dev/full", "/dev/zero",

"/dev/random", "/dev/urandom",

"/dev/ptmx", "/dev/kvm", "/dev/kqemu",

"/dev/rtc", "/dev/hpet","/dev/net/tun"

]

Delete the default virtual bridge:

virsh net-destroy default

virsh net-undefine default

Edit /etc/libvirt/libvirtd.conf:

listen_tls = 0

listen_tcp = 1

auth_tcp = "none"

Edit /etc/init/libvirt-bin.conf:

env libvirtd_opts="-d -l"

Edit /etc/default/libvirt-bin:

libvirtd_opts="-d -l"

Restart the services:

service dbus restart && service libvirt-bin restart

5.3 Install OpenVSwitch

apt-get install -y openvswitch-switch openvswitch-datapath-dkms

Create the bridge:

ovs-vsctl add-br br-int

apt-get -y install quantum-plugin-openvswitch-agent

Edit /etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini:

#Under the database section

[DATABASE]

sql_connection = mysql://quantumUser:quantumPass@192.168.70.247/quantum

#Under the OVS section

[OVS]

tenant_network_type = gre

tunnel_id_ranges = 1:1000

integration_bridge = br-int

tunnel_bridge = br-tun

local_ip = 192.168.2.3

enable_tunneling = True

#Firewall driver for realizing quantum security group function

[SECURITYGROUP]

firewall_driver = quantum.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

Edit /etc/quantum/quantum.conf:

rabbit_host = 192.168.70.247

#And update the keystone_authtoken section

[keystone_authtoken]

auth_host = 192.168.70.247

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = quantum

admin_password = service_pass

signing_dir = /var/lib/quantum/keystone-signing

Restart the service:

service quantum-plugin-openvswitch-agent restart

5.4 Install Nova

apt-get install -y nova-compute-kvm

Edit /etc/nova/api-paste.ini:

[filter:authtoken]

paste.filter_factory = keystoneclient.middleware.auth_token:filter_factory

auth_host = 192.168.70.247

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = nova

admin_password = service_pass

signing_dirname = /tmp/keystone-signing-nova

# Workaround for https://bugs.launchpad.net/nova/+bug/1154809

auth_version = v2.0

Edit /etc/nova/nova-compute.conf. Because this is a virtual machine installation, libvirt_type must be changed to qemu; on physical hardware use kvm.

[DEFAULT]

#libvirt_type=kvm

libvirt_type=qemu

libvirt_ovs_bridge=br-int

libvirt_vif_type=ethernet

libvirt_vif_driver=nova.virt.libvirt.vif.LibvirtHybridOVSBridgeDriver

libvirt_use_virtio_for_bridges=True

Edit /etc/nova/nova.conf:

[DEFAULT]

logdir=/var/log/nova

state_path=/var/lib/nova

lock_path=/run/lock/nova

verbose=True

api_paste_config=/etc/nova/api-paste.ini

compute_scheduler_driver=nova.scheduler.simple.SimpleScheduler

rabbit_host=192.168.70.247

nova_url=http://192.168.70.247:8774/v1.1/

sql_connection=mysql://novaUser:novaPass@192.168.70.247/nova

root_helper=sudo nova-rootwrap /etc/nova/rootwrap.conf

# Auth

use_deprecated_auth=false

auth_strategy=keystone

# Imaging service

glance_api_servers=192.168.70.247:9292

image_service=nova.image.glance.GlanceImageService

# Vnc configuration

novnc_enabled=true

novncproxy_base_url=http://192.168.70.247:6080/vnc_auto.html

novncproxy_port=6080

vncserver_proxyclient_address=192.168.70.249

vncserver_listen=0.0.0.0

# Network settings

network_api_class=nova.network.quantumv2.api.API

quantum_url=http://192.168.70.247:9696

quantum_auth_strategy=keystone

quantum_admin_tenant_name=service

quantum_admin_username=quantum

quantum_admin_password=service_pass

quantum_admin_auth_url=http://192.168.70.247:35357/v2.0

libvirt_vif_driver=nova.virt.libvirt.vif.LibvirtHybridOVSBridgeDriver

linuxnet_interface_driver=nova.network.linux_net.LinuxOVSInterfaceDriver

#If you want Quantum + Nova Security groups

firewall_driver=nova.virt.firewall.NoopFirewallDriver

security_group_api=quantum

#If you want Nova Security groups only, comment the two lines above and uncomment line -1-.

#-1-firewall_driver=nova.virt.libvirt.firewall.IptablesFirewallDriver

#Metadata

service_quantum_metadata_proxy = True

quantum_metadata_proxy_shared_secret = helloOpenStack

# Compute #

compute_driver=libvirt.LibvirtDriver

# Cinder #

volume_api_class=nova.volume.cinder.API

osapi_volume_listen_port=5900

cinder_catalog_info=volume:cinder:internalURL

Restart the services:

cd /etc/init.d/; for i in $( ls nova-* ); do sudo service $i restart; done

Verify the installation. All smiley faces means success:

root@computervm:/etc/init.d# nova-manage service list

Binary Host Zone Status State Updated_At

nova-cert controll internal enabled :-) 2013-08-01 14:23:23

nova-consoleauth controll internal enabled :-) 2013-08-01 14:23:20

nova-conductor controll internal enabled :-) 2013-08-01 14:23:19

nova-scheduler controll internal enabled :-) 2013-08-01 14:23:21

nova-compute computervm nova enabled :-) None

root@computervm:/etc/init.d#
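Scanning the listing by eye gets tedious as more nodes are added. The sketch below filters the output for services whose State column is not a smiley. It assumes the column layout shown above and that a dead service shows XXX in that column. dead_services is a hypothetical helper, and the XXX row in the sample is made up for the demo.

```shell
#!/bin/sh
# dead_services: read `nova-manage service list` output on stdin and print
# every service whose State column (field 5) is not ":-)".
# Exit status is 1 if any such service was found, 0 otherwise.
dead_services() {
    awk 'NR > 1 && NF >= 5 && $5 != ":-)" { print $1 " on " $2; bad = 1 }
         END { exit bad + 0 }'
}

# Sample input modelled on the listing above.
dead_services <<'EOF' || echo "some services are down"
Binary           Host        Zone      Status   State Updated_At
nova-scheduler   controll    internal  enabled  :-)   2013-08-01 14:23:21
nova-compute     computervm  nova      enabled  XXX   None
EOF
```

On a live system the same function would be used as nova-manage service list | dead_services.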

Conclusion: the multi-node installation of OpenStack Grizzly is now complete. I will post follow-up tutorials on configuring and using OpenStack.