1. After I alter a table in a database, the Hive CLI and HWI show the altered table information, but clicking the table in Hue Metastore Manager raises an error.

A: I did not find the cause, but restarting hiveserver2 fixed it.

2. When I run a Pig script in Hue that uses HCatLoader, the following error is raised:

ERROR 1070: Could not resolve HCatLoader using imports: [, java.lang., org.apache.pig.builtin., org.apache.pig.impl.builtin.]

The Pig script looks like this:

--extract user basic info from inok_raw.inok_user table

user = load 'inok_raw.inok_user' using HCatLoader();

user1 = foreach user generate user_id, user_name, user_gender, live_city, birth_city;

store user1 into 'inok_datamine.inok_user' using HCatStorer();

see: http://mail-archives.apache.org/mod_mbox/incubator-hcatalog-user/201208.mbox/%3CCAP0y+ToQrexQTd8q7tYSdEJoceE5u-9x60ptR9Z0DRiL9ZVUVw@mail.gmail.com%3E

A:

a. Copy all related jars from hive-0.12.0-bin/hcatalog/share/hcatalog to oozie-4.0.1/share/lib/pig and update the sharelib in HDFS.

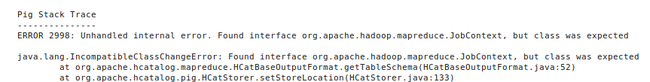

This method solves the first error, but produces another one:

------------------------------

To run Pig with HCatalog in Hue, follow the steps below to prepare the environment.

a. Back up all jars in share/lib/pig.

b. Delete share/lib/pig in HDFS.

c. Compile pig-0.12.0 to support hadoop2.3.0.

d. Copy all jars except hadoop*.jar from pig-0.12.0/build/ivy/lib/Pig to oozie-4.0.1/share/lib/pig.

e. Copy pig-0.12.0.jar and pig-0.12.1-withouthadoop.jar to share/lib/pig.

f. Copy oozie-sharelib-pig-4.0.1.jar from the backed-up jars into share/lib/pig.

g. Copy all jars from hive-0.12.0-bin/hcatalog/share/hcatalog to share/lib/pig.

h. Copy all jars from hive-0.12.0-bin/lib to share/lib/pig.

i. Copy the MySQL JDBC driver to share/lib/pig.

j. Update the sharelib in HDFS.

But errors still occur.

#jar tf share/lib/pig/

hcatalog-core-0.12.0.jar

this jar comes from

hive-0.12.0-bin/hcatalog/share/hcatalog/hcatalog-core-0.12.0.jar

So I guess the hive-0.12.0-bin build does not target hadoop2.3.0 and should be recompiled.

see:

http://community.cloudera.com/t5/Cloudera-Manager-Installation/Upgraded-the-Hive-to-0-12-manually-and-I-can-t-run-the-sample/td-p/7055

http://stackoverflow.com/questions/22630323/hadoop-java-lang-incompatibleclasschangeerror-found-interface-org-apache-hadoo

https://issues.apache.org/jira/browse/HIVE-6729

org.apache.hive.hcatalog.pig.HCatStorer.setStoreLocation

public void setStoreLocation(String location, Job job) throws IOException {

....

Job clone = new Job(job.getConfiguration());

....

HCatSchema hcatTblSchema = HCatOutputFormat.getTableSchema(job);

.....

https://cwiki.apache.org/confluence/display/Hive/GettingStarted#GettingStarted-CompileHivePriorto0.13onHadoop23

https://cwiki.apache.org/confluence/display/Hive/AdminManual+Installation

https://cwiki.apache.org/confluence/display/Hive/HowToContribute

1. Download the source code

svn co http:

svn co http://svn.apache.org/repos/asf/hive/branches/branch-0.12 hive-0.12

svn co http://svn.apache.org/repos/asf/hive/branches/branch-0.13 hive-0.13

2. Compile

hive-0.12 compile

ant clean package -Dhadoop.version=2.0.0-alpha -Dhadoop-0.23.version=2.0.0-alpha -Dhadoop.mr.rev=23

ant clean package -Dhadoop.version=2.3.0 -Dhadoop-0.23.version=2.3.0 -Dhadoop.mr.rev=23

This failed for some reason.

hive-0.13 compile

mvn clean install -DskipTests -Phadoop-2,dist

Success.

The dist tar is in packaging/target.

But there is no hwi.war, and it does not work with hue3.5.0 when creating the Hive metastore database.

I recompiled hive-0.12.0 to support hadoop2.3.0, but the error still exists. When I use the libs from the compiled hive-0.13.0, that error goes away, but another error appears:

---------------

ERROR 2998: Unhandled internal error. java.lang.NoSuchFieldError: METASTORETHRIFTCONNECTIONRETRIES

com.google.common.util.concurrent.ExecutionError: java.lang.NoSuchFieldError: METASTORETHRIFTCONNECTIONRETRIES

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2232)

at com.google.common.cache.LocalCache.get(LocalCache.java:3965)

at com.google.common.cache.LocalCache$LocalManualCache.get(LocalCache.java:4764)

at org.apache.hive.hcatalog.common.HiveClientCache.getOrCreate(HiveClientCache.java:216)

at org.apache.hive.hcatalog.common.HiveClientCache.get(HiveClientCache.java:192)

at org.apache.hive.hcatalog.common.HCatUtil.getHiveClient(HCatUtil.java:569)

at org.apache.hive.hcatalog.pig.PigHCatUtil.getHiveMetaClient(PigHCatUtil.java:159)

at org.apache.hive.hcatalog.pig.PigHCatUtil.getTable(PigHCatUtil.java:195)

at org.apache.hive.hcatalog.pig.HCatLoader.getSchema(HCatLoader.java:210)

I then recreated the Hive metastore with schema version 0.13.0. But Hue does not support hive0.13.0 (it could not delete or create a database in the Hive metastore). So we reverted to hive0.12.0 while keeping schema version 0.13.0, and set the following property in hive-site.xml:

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

and use the related jars from hive-0.13.0 in oozie/share/lib/pig.

The main cause is jar conflicts introduced when copying jars from pig0.12.0/lib/. So the correct approach is to skip step d.
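
A quick way to spot this kind of conflict is to look for artifacts that are present in more than one version in the same directory. The function below is only a minimal sketch: the name find_jar_conflicts is made up for illustration, and it assumes jars follow the usual "<artifact>-<version>.jar" naming with the version starting with a digit. A jar with a classifier (such as pig-0.12.1-withouthadoop.jar next to pig-0.12.0.jar) is reported too, so treat the output as a list of candidates to inspect, not a verdict.

```shell
#!/usr/bin/env bash
# Print artifact names that occur more than once in a directory of jars.
# Assumption: files are named "<artifact>-<version>.jar" and the version
# begins with a digit. Jars without a version are left untouched by sed.
find_jar_conflicts() {
  local dir="$1"
  local jar
  for jar in "$dir"/*.jar; do
    # An unmatched glob stays literal; skip it.
    [ -e "$jar" ] || continue
    basename "$jar"
  done \
    | sed -E 's/-[0-9][0-9A-Za-z._-]*\.jar$//' \
    | sort \
    | uniq -d
}
```

For example, running find_jar_conflicts against a local copy of share/lib/pig prints one line per artifact that is present in several versions.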

*********************************************

To integrate the Hive metastore/HCatalog with Pig, do the following steps:

1. Compile pig0.12.0 to support hadoop2.3.0.

2. Compile hive0.12.0 and hive0.13.0 to support hadoop2.3.0.

3. Use Hive metastore schema version 0.13.0.

4. Use hive0.12.0 with Hue.

5. Use the hive0.13.0 related jars in oozie/share/lib/pig/.

*********************************************
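
The last step can be sketched as collecting the jars in a local staging directory first and uploading that directory afterwards. This is only an illustration, not the exact commands I ran: the function name stage_jars, the staging path, the hive-0.13.0 directory names and the HDFS sharelib path in the usage comment are all assumptions and need to be adjusted to the actual build and Oozie setup.

```shell
#!/usr/bin/env bash
# Copy every *.jar from one or more source directories into a staging
# directory. The first argument is the destination; the rest are sources.
# A later source overwrites a same-named jar from an earlier one.
stage_jars() {
  local dest="$1"
  shift
  mkdir -p "$dest" || return 1
  local src jar
  for src in "$@"; do
    for jar in "$src"/*.jar; do
      # An unmatched glob stays literal; skip it.
      [ -e "$jar" ] || continue
      cp "$jar" "$dest"/ || return 1
    done
  done
}

# Hypothetical usage (all paths are assumptions):
#   stage_jars ./staging/pig \
#       hive-0.13.0/hcatalog/share/hcatalog \
#       hive-0.13.0/lib
#   hdfs dfs -put -f ./staging/pig/*.jar /user/oozie/share/lib/pig/
```

Staging locally first makes it easy to inspect the final set of jars for duplicates before anything in HDFS is touched.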