[NoSQL] MongoDB High-Availability Architecture: Replica Set Cluster in Practice

wget http://fastdl.mongodb.org/linux/mongodb-linux-x86_64-2.0.4.tgz

tar zxf mongodb-linux-x86_64-2.0.4.tgz

mv mongodb-linux-x86_64-2.0.4 /opt/mongodb

echo 'export PATH=$PATH:/opt/mongodb/bin' >> /etc/profile

source /etc/profile

useradd -u 600 -s /bin/false mongodb

30 server:

mkdir -p /data0/mongodb/{db,logs}

mkdir -p /data0/mongodb/db/{shard11,shard21,config}

31 server:

mkdir -p /data0/mongodb/{db,logs}

mkdir -p /data0/mongodb/db/{shard12,shard22,config}

32 server:

mkdir -p /data0/mongodb/{db,logs}

mkdir -p /data0/mongodb/db/{shard13,shard23,config}

true > /etc/hosts

echo -ne "

192.168.8.30 mong01

192.168.8.31 mong02

192.168.8.32 mong03

" >>/etc/hosts

or

cat >> /etc/hosts << EOF

192.168.8.30 mong01

192.168.8.31 mong02

192.168.8.32 mong03

EOF
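Note that truncating /etc/hosts as shown above also drops any entries that were already there (the localhost line, for example). A minimal sketch of an alternative, assuming a hypothetical helper named `add_host` that only appends a name when it is not mapped yet. The demo writes to a scratch file; set `HOSTS_FILE=/etc/hosts` to apply it for real.

```shell
#!/bin/sh
# Hypothetical helper: append "ip name" to a hosts file
# only if that host name is not already present in it.
add_host() {
    file=$1; ip=$2; name=$3
    if ! grep -qE "[[:space:]]$name([[:space:]]|\$)" "$file" 2>/dev/null; then
        echo "$ip $name" >> "$file"
    fi
}

# Demo against a scratch file (assumption: HOSTS_FILE is unset here).
hosts_file=${HOSTS_FILE:-$(mktemp)}
add_host "$hosts_file" 192.168.8.30 mong01
add_host "$hosts_file" 192.168.8.31 mong02
add_host "$hosts_file" 192.168.8.32 mong03
add_host "$hosts_file" 192.168.8.30 mong01   # second call is a no-op
cat "$hosts_file"
```

Because the helper checks before appending, it can be re-run on all three servers without duplicating lines.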

30 server:

/opt/mongodb/bin/mongod -shardsvr -replSet shard1 -port 27021 -dbpath /data0/mongodb/db/shard11 -oplogSize 1000 -logpath /data0/mongodb/logs/shard11.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

sleep 2

/opt/mongodb/bin/mongod -shardsvr -replSet shard2 -port 27022 -dbpath /data0/mongodb/db/shard21 -oplogSize 1000 -logpath /data0/mongodb/logs/shard21.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

sleep 2

echo "all mongo started."

31 server:

/opt/mongodb/bin/mongod -shardsvr -replSet shard1 -port 27021 -dbpath /data0/mongodb/db/shard12 -oplogSize 1000 -logpath /data0/mongodb/logs/shard12.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

sleep 2

numactl --interleave=all /opt/mongodb/bin/mongod -shardsvr -replSet shard2 -port 27022 -dbpath /data0/mongodb/db/shard22 -oplogSize 1000 -logpath /data0/mongodb/logs/shard22.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

sleep 2

echo "all mongo started."

32 server:

numactl --interleave=all /opt/mongodb/bin/mongod -shardsvr -replSet shard1 -port 27021 -dbpath /data0/mongodb/db/shard13 -oplogSize 1000 -logpath /data0/mongodb/logs/shard13.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

sleep 2

numactl --interleave=all /opt/mongodb/bin/mongod -shardsvr -replSet shard2 -port 27022 -dbpath /data0/mongodb/db/shard23 -oplogSize 1000 -logpath /data0/mongodb/logs/shard23.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

sleep 2

echo "all mongo started."
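The start commands on the three servers differ only in the shard number and the server suffix of the data directory (shard11, shard12, shard13 and so on). A small sketch of that naming convention, assuming a hypothetical helper `shard_cmd` that prints the command instead of running it, so the result can be checked before use:

```shell
#!/bin/sh
# Print (do not run) the mongod command for one shard member.
# $1 = shard number (1 or 2)
# $2 = server number (1 for .30, 2 for .31, 3 for .32)
shard_cmd() {
    shard=$1; server=$2
    name="shard${shard}${server}"   # e.g. shard12 = shard1 on server 31
    port=$((27020 + shard))         # 27021 for shard1, 27022 for shard2
    echo "/opt/mongodb/bin/mongod -shardsvr -replSet shard${shard} -port ${port}" \
         "-dbpath /data0/mongodb/db/${name} -oplogSize 1000" \
         "-logpath /data0/mongodb/logs/${name}.log -logappend" \
         "--maxConns 10000 --quiet -fork --directoryperdb"
}

# Both commands for server 31 (server number 2):
for s in 1 2; do
    shard_cmd "$s" 2
done
```

Printing first and executing later keeps copy-and-paste slips (a wrong dbpath on one host, for instance) easy to spot.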

shardsvr = true

replSet = shard1

port = 27021

dbpath = /data0/mongodb/db/shard11

oplogSize = 1000

logpath = /data0/mongodb/logs/shard11.log

logappend = true

maxConns = 10000

quiet = true

profile = 1

slowms = 5

rest = true

fork = true

directoryperdb = true
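The same options can be kept in a config file as listed above and passed to mongod with `-f`. A minimal sketch, assuming a hypothetical helper `write_shard_conf`, that prints one such file per shard member so that only the set name, port and directory name change between hosts:

```shell
#!/bin/sh
# Hypothetical helper: print a key = value mongod config for one shard member.
# $1 = replica set name, $2 = port, $3 = data directory name
write_shard_conf() {
    set_name=$1; port=$2; dir=$3
    cat <<EOF
shardsvr = true
replSet = $set_name
port = $port
dbpath = /data0/mongodb/db/$dir
oplogSize = 1000
logpath = /data0/mongodb/logs/$dir.log
logappend = true
maxConns = 10000
quiet = true
profile = 1
slowms = 5
rest = true
fork = true
directoryperdb = true
EOF
}

# Config for the shard1 member on server 30:
write_shard_conf shard1 27021 shard11
```

Redirect the output to a file of your choice (the path is up to you) and start the instance with `mongod -f <that file>`.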

[root@mon1 sh]# cat start.sh

/opt/mongodb/bin/mongod -shardsvr -replSet shard1 -port 27021 -dbpath /data0/mongodb/db/shard11 -oplogSize 1000 -logpath /data0/mongodb/logs/shard11.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

sleep 2

/opt/mongodb/bin/mongod -shardsvr -replSet shard2 -port 27022 -dbpath /data0/mongodb/db/shard21 -oplogSize 1000 -logpath /data0/mongodb/logs/shard21.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

sleep 2

ps aux |grep mongo

echo "all mongo started."

[root@mon2 sh]# cat start.sh

/opt/mongodb/bin/mongod -shardsvr -replSet shard1 -port 27021 -dbpath /data0/mongodb/db/shard12 -oplogSize 1000 -logpath /data0/mongodb/logs/shard12.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

sleep 2

/opt/mongodb/bin/mongod -shardsvr -replSet shard2 -port 27022 -dbpath /data0/mongodb/db/shard22 -oplogSize 1000 -logpath /data0/mongodb/logs/shard22.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

sleep 2

ps aux |grep mongo

echo "all mongo started."

[root@mongo03 sh]# cat start.sh

/opt/mongodb/bin/mongod -shardsvr -replSet shard1 -port 27021 -dbpath /data0/mongodb/db/shard13 -oplogSize 1000 -logpath /data0/mongodb/logs/shard13.log -logappend --maxConns 10000 --quiet -fork --directoryperdb --keyFile=/opt/mongodb/sh/keyFile

sleep 2

/opt/mongodb/bin/mongod -shardsvr -replSet shard2 -port 27022 -dbpath /data0/mongodb/db/shard23 -oplogSize 1000 -logpath /data0/mongodb/logs/shard23.log -logappend --maxConns 10000 --quiet -fork --directoryperdb --keyFile=/opt/mongodb/sh/keyFile

sleep 2

echo "all mongo started."

[root@mongo01 ~]# mongo 192.168.8.30:27021

> config = {_id: 'shard1', members: [

{_id: 0, host: '192.168.8.30:27021'},

{_id: 1, host: '192.168.8.31:27021'},

{_id: 2, host: '192.168.8.32:27021'}]

}

> config = {_id: 'shard1', members: [

... {_id: 0, host: '192.168.8.30:27021'},

... {_id: 1, host: '192.168.8.31:27021'},

... {_id: 2, host: '192.168.8.32:27021'}]

... }

{

"_id" : "shard1",

"members" : [

{

"_id" : 0,

"host" : "192.168.8.30:27021"

},

{

"_id" : 1,

"host" : "192.168.8.31:27021"

},

{

"_id" : 2,

"host" : "192.168.8.32:27021"

}

]

}

> rs.initiate(config)

{

"info" : "Config now saved locally. Should come online in about a minute.",

"ok" : 1

}

> rs.status()

{

"set" : "shard1",

"date" : ISODate("2012-06-07T11:35:22Z"),

"myState" : 1,

"members" : [

{

"_id" : 0,

"name" : "192.168.8.30:27021",

"health" : 1, # 1 means healthy

"state" : 1, # 1 means primary

"stateStr" : "PRIMARY", # this server is the primary

"optime" : {

"t" : 1339068873000,

"i" : 1

},

"optimeDate" : ISODate("2012-06-07T11:34:33Z"),

"self" : true

},

{

"_id" : 1,

"name" : "192.168.8.31:27021",

"health" : 1, # 1 means healthy

"state" : 2, # 2 means secondary

"stateStr" : "SECONDARY", # this server is a secondary

"uptime" : 41,

"optime" : {

"t" : 1339068873000,

"i" : 1

},

"optimeDate" : ISODate("2012-06-07T11:34:33Z"),

"lastHeartbeat" : ISODate("2012-06-07T11:35:21Z"),

"pingMs" : 7

},

{

"_id" : 2,

"name" : "192.168.8.32:27021",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 36,

"optime" : {

"t" : 1341373105000,

"i" : 1

},

"optimeDate" : ISODate("2012-06-07T11:34:00Z"),

"lastHeartbeat" : ISODate("2012-06-07T11:35:21Z"),

"pingMs" : 3

}

],

"ok" : 1

}

PRIMARY>
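Reading the full rs.status() output by eye gets tedious once there are several sets. A rough sketch, assuming a hypothetical helper `count_states` that reads the status text on stdin and counts members per `stateStr` value (it only recognises single-word states such as PRIMARY, SECONDARY or STARTUP2):

```shell
#!/bin/sh
# Summarise member states from rs.status() text read on stdin.
# Prints one "STATE count" line per state, sorted by state name.
count_states() {
    grep -o '"stateStr" : "[A-Z0-9_]*"' \
        | awk -F'"' '{ print $4 }' \
        | sort | uniq -c \
        | awk '{ print $2, $1 }'
}

# Demo with the three stateStr lines from the output above.
printf '%s\n' \
    '"stateStr" : "PRIMARY",' \
    '"stateStr" : "SECONDARY",' \
    '"stateStr" : "SECONDARY",' | count_states
# PRIMARY 1
# SECONDARY 2
```

On a live system the input could come from something like `mongo --quiet 192.168.8.30:27021 --eval 'printjson(rs.status())' | count_states`; a healthy three-member set should show exactly one PRIMARY.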

[root@mongo02 sh]# mongo 192.168.8.31:27021

MongoDB shell version: 2.0.5

connecting to: 192.168.8.31:27021/test

SECONDARY>

[root@mongo03 sh]# mongo 192.168.8.32:27021

MongoDB shell version: 2.0.5

connecting to: 192.168.8.32:27021/test

SECONDARY>

PRIMARY> rs.conf()

{

"_id" : "shard1",

"version" : 1,

"members" : [

{

"_id" : 0,

"host" : "192.168.8.30:27021"

},

{

"_id" : 1,

"host" : "192.168.8.31:27021"

},

{

"_id" : 2,

"host" : "192.168.8.32:27021",

"shardOnly" : true

}

]

}

[root@mongo02 sh]# mongo 192.168.8.30:27022

MongoDB shell version: 2.0.5

connecting to: 192.168.8.30:27022/test

> config = {_id: 'shard2', members: [

{_id: 0, host: '192.168.8.30:27022'},

{_id: 1, host: '192.168.8.31:27022'},

{_id: 2, host: '192.168.8.32:27022'}]

}

> config = {_id: 'shard2', members: [

... {_id: 0, host: '192.168.8.30:27022'},

... {_id: 1, host: '192.168.8.31:27022'},

... {_id: 2, host: '192.168.8.32:27022'}]

... }

{

"_id" : "shard2",

"members" : [

{

"_id" : 0,

"host" : "192.168.8.30:27022"

},

{

"_id" : 1,

"host" : "192.168.8.31:27022"

},

{

"_id" : 2,

"host" : "192.168.8.32:27022"

}

]

}

> rs.initiate(config)

{

"info" : "Config now saved locally. Should come online in about a minute.",

"ok" : 1

}

> rs.status()

{

"set" : "shard2",

"date" : ISODate("2012-06-07T11:43:47Z"),

"myState" : 2,

"members" : [

{

"_id" : 0,

"name" : "192.168.8.30:27022",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"optime" : {

"t" : 1341367921000,

"i" : 1

},

"optimeDate" : ISODate("2012-06-07T11:43:40Z"),

"self" : true

},

{

"_id" : 1,

"name" : "192.168.8.31:27022",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 50,

"optime" : {

"t" : 1341367921000,

"i" : 1

},

"optimeDate" : ISODate("1970-01-01T00:00:00Z"),

"lastHeartbeat" : ISODate("2012-06-07T11:43:46Z"),

"pingMs" : 0,

},

{

"_id" : 2,

"name" : "192.168.8.32:27022",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 81,

"optime" : {

"t" : 1341373254000,

"i" : 1

},

"optimeDate" : ISODate("2012-06-07T11:41:00Z"),

"lastHeartbeat" : ISODate("2012-06-07T11:43:46Z"),

"pingMs" : 0,

}

],

"ok" : 1

}

PRIMARY>
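The config objects for shard1 and shard2 differ only in the set name and the port. A minimal sketch, assuming a hypothetical helper `rs_initiate_js`, that prints the rs.initiate() call for any set so it does not have to be typed by hand:

```shell
#!/bin/sh
# Print a mongo-shell statement that initiates a replica set.
# $1 = set name, $2 = port, remaining arguments = member IPs
rs_initiate_js() {
    set_name=$1; port=$2; shift 2
    members=""
    id=0
    for ip in "$@"; do
        # Separate members with ", " after the first one.
        members="${members:+$members, }{_id: $id, host: '$ip:$port'}"
        id=$((id + 1))
    done
    echo "rs.initiate({_id: '$set_name', members: [$members]})"
}

rs_initiate_js shard2 27022 192.168.8.30 192.168.8.31 192.168.8.32
```

The printed statement can be pasted into the mongo shell on one member of the set, or passed to the shell's `--eval` option.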

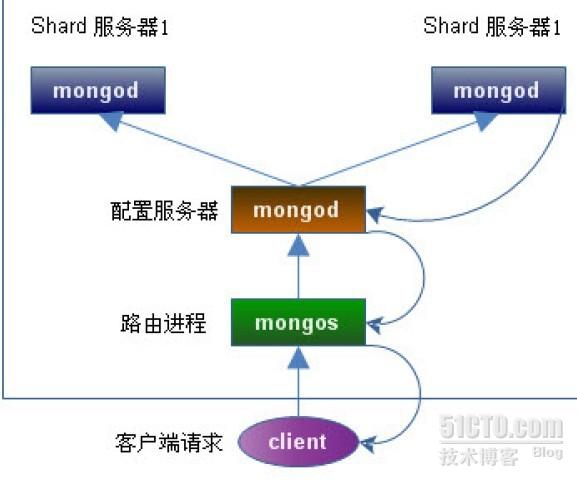

[root@mongo01 sh]# cat config.sh

/opt/mongodb/bin/mongod --configsvr --dbpath /data0/mongodb/db/config --port 20000 --logpath /data0/mongodb/logs/config.log --logappend --fork --directoryperdb

[root@mongo01 sh]# pwd

/opt/mongodb/sh

[root@mongo01 sh]# ./config.sh

[root@mongo01 sh]# ps aux |grep mong

root 25343 0.9 6.8 737596 20036 ? Sl 19:32 0:12 /opt/mongodb/bin/mongod -shardsvr -replSet shard1 -port 27021 -dbpath /data0/mongodb/db/shard1 -oplogSize 100 -logpath /data0/mongodb/logs/shard1.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

root 25351 0.9 7.0 737624 20760 ? Sl 19:32 0:11 /opt/mongodb/bin/mongod -shardsvr -replSet shard2 -port 27022 -dbpath /data0/mongodb/db/shard2 -oplogSize 100 -logpath /data0/mongodb/logs/shard2.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

root 25669 13.0 4.7 118768 13852 ? Sl 19:52 0:07 /opt/mongodb/bin/mongod --configsvr --dbpath /data0/mongodb/db/config --port 20000 --logpath /data0/mongodb/logs/config.log --logappend --fork --directoryperdb

root 25695 0.0 0.2 61220 744 pts/3 R+ 19:53 0:00 grep mong

[root@mongo01 sh]# cat mongos.sh

/opt/mongodb/bin/mongos -configdb 192.168.8.30:20000,192.168.8.31:20000,192.168.8.32:20000 -port 30000 -chunkSize 50 -logpath /data0/mongodb/logs/mongos.log -logappend -fork

[root@mongo01 sh]# pwd

/opt/mongodb/sh

[root@mongo01 sh]# ./mongos.sh

[root@mongo01 sh]# ps aux |grep mong

root 25343 0.8 6.8 737596 20040 ? Sl 19:32 0:13 /opt/mongodb/bin/mongod -shardsvr -replSet shard1 -port 27021 -dbpath /data0/mongodb/db/shard1 -oplogSize 100 -logpath /data0/mongodb/logs/shard1.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

root 25351 0.9 7.0 737624 20768 ? Sl 19:32 0:16 /opt/mongodb/bin/mongod -shardsvr -replSet shard2 -port 27022 -dbpath /data0/mongodb/db/shard2 -oplogSize 100 -logpath /data0/mongodb/logs/shard2.log -logappend --maxConns 10000 --quiet -fork --directoryperdb

root 25669 2.0 8.0 321852 23744 ? Sl 19:52 0:09 /opt/mongodb/bin/mongod --configsvr --dbpath /data0/mongodb/db/config --port 20000 --logpath /data0/mongodb/logs/config.log --logappend --fork --directoryperdb

root 25863 0.5 0.8 90760 2388 ? Sl 20:00 0:00 /opt/mongodb/bin/mongos -configdb 192.168.8.30:20000,192.168.8.31:20000,192.168.8.32:20000 -port 30000 -chunkSize 50 -logpath /data0/mongodb/logs/mongos.log -logappend -fork

root 25896 0.0 0.2 61220 732 pts/3 D+ 20:00 0:00 grep mong

[root@mongo01 sh]# mongo 192.168.8.30:30000/admin

MongoDB shell version: 2.0.5

connecting to: 192.168.8.30:30000/admin

mongos>

mongos> db.runCommand({ addshard : "shard1/192.168.8.30:27021,192.168.8.31:27021,192.168.8.32:27021",name:"shard1",maxSize:20480})

{ "shardAdded" : "shard1", "ok" : 1 }

mongos> db.runCommand({ addshard : "shard2/192.168.8.30:27022,192.168.8.31:27022,192.168.8.32:27022",name:"shard2",maxSize:20480})

{ "shardAdded" : "shard2", "ok" : 1 }

mongos> db.runCommand( { listshards : 1 } );

{

"shards" : [

{

"_id" : "shard1",

"host" : "shard1/192.168.8.30:27021,192.168.8.31:27021,192.168.8.32:27021",

"maxSize" : NumberLong(20480)

},

{

"_id" : "shard2",

"host" : "shard2/192.168.8.30:27022,192.168.8.31:27022,192.168.8.32:27022",

"maxSize" : NumberLong(20480)

}

],

"ok" : 1

}
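The addshard argument has the form setName/host:port,host:port,... and must be run against the admin database through mongos. A small sketch, assuming a hypothetical helper `addshard_js`, that builds the command text for a given set:

```shell
#!/bin/sh
# Print the addshard command for one replica-set shard.
# $1 = shard (and set) name, $2 = port, $3 = maxSize in MB,
# remaining arguments = member IPs
addshard_js() {
    name=$1; port=$2; max_mb=$3; shift 3
    hosts=""
    for ip in "$@"; do
        hosts="${hosts:+$hosts,}$ip:$port"   # comma-separated host:port list
    done
    echo "db.runCommand({addshard: \"$name/$hosts\", name: \"$name\", maxSize: $max_mb})"
}

addshard_js shard1 27021 20480 192.168.8.30 192.168.8.31 192.168.8.32
addshard_js shard2 27022 20480 192.168.8.30 192.168.8.31 192.168.8.32
```

The maxSize value is in megabytes, so 20480 corresponds to 20 GB per shard.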

mongos> db.runCommand({ismaster:1});

{

"ismaster" : true,

"msg" : "isdbgrid",

"maxBsonObjectSize" : 16777216,

"ok" : 1

}

mongos> db.runCommand( { listshards : 1 } );

{ "ok" : 0, "errmsg" : "access denied - use admin db" }

mongos> use admin

switched to db admin

mongos> db.runCommand( { listshards : 1 } );

{

"shards" : [

{

"_id" : "s1",

"host" : "shard1/192.168.8.30:27021,192.168.8.31:27021"

},

{

"_id" : "s2",

"host" : "shard2/192.168.8.30:27022,192.168.8.31:27022"

}

],

"ok" : 1

}

mongos>

mongos> use nosql

switched to db nosql

mongos> for(var i=0;i<100;i++)db.fans.insert({uid:i,uname:'nosqlfans'+i});

mongos> use admin

switched to db admin

mongos> db.runCommand( { enablesharding : "nosql" } );

{ "ok" : 1 }

mongos> use nosql

switched to db nosql

mongos> db.fans.find()

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad081"), "uid" : 0, "uname" : "nosqlfans0" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad082"), "uid" : 1, "uname" : "nosqlfans1" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad083"), "uid" : 2, "uname" : "nosqlfans2" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad084"), "uid" : 3, "uname" : "nosqlfans3" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad085"), "uid" : 4, "uname" : "nosqlfans4" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad086"), "uid" : 5, "uname" : "nosqlfans5" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad087"), "uid" : 6, "uname" : "nosqlfans6" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad088"), "uid" : 7, "uname" : "nosqlfans7" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad089"), "uid" : 8, "uname" : "nosqlfans8" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad08a"), "uid" : 9, "uname" : "nosqlfans9" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad08b"), "uid" : 10, "uname" : "nosqlfans10" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad08c"), "uid" : 11, "uname" : "nosqlfans11" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad08d"), "uid" : 12, "uname" : "nosqlfans12" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad08e"), "uid" : 13, "uname" : "nosqlfans13" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad08f"), "uid" : 14, "uname" : "nosqlfans14" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad090"), "uid" : 15, "uname" : "nosqlfans15" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad091"), "uid" : 16, "uname" : "nosqlfans16" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad092"), "uid" : 17, "uname" : "nosqlfans17" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad093"), "uid" : 18, "uname" : "nosqlfans18" }

{ "_id" : ObjectId("4ff2ae6816df1d1b33bad094"), "uid" : 19, "uname" : "nosqlfans19" }

has more

mongos> db.fans.ensureIndex({"uid":1})

mongos> db.fans.find({uid:10}).explain()

{

"cursor" : "BtreeCursor uid_1",

"nscanned" : 1,

"nscannedObjects" : 1,

"n" : 1,

"millis" : 25,

"nYields" : 0,

"nChunkSkips" : 0,

"isMultiKey" : false,

"indexOnly" : false,

"indexBounds" : {

"uid" : [

[

10,

10

]

]

}

}

mongos> use admin

switched to db admin

mongos> db.runCommand({shardcollection : "nosql.fans",key : {uid:1}})

{ "collectionsharded" : "nosql.fans", "ok" : 1 }

mongos> use nosql

mongos> db.fans.stats()

{

"sharded" : true,

"flags" : 1,

"ns" : "nosql.fans",

"count" : 100,

"numExtents" : 2,

"size" : 5968,

"storageSize" : 20480,

"totalIndexSize" : 24528,

"indexSizes" : {

"_id_" : 8176,

"uid0_1" : 8176,

"uid_1" : 8176

},

"avgObjSize" : 59.68,

"nindexes" : 3,

"nchunks" : 1,

"shards" : {

"shard1" : {

"ns" : "nosql.test",

"count" : 100,

"size" : 5968,

"avgObjSize" : 59.68,

"storageSize" : 20480,

"numExtents" : 2,

"nindexes" : 3,

"lastExtentSize" : 16384,

"paddingFactor" : 1,

"flags" : 1,

"totalIndexSize" : 24528,

"indexSizes" : {

"_id_" : 8176,

"uid0_1" : 8176,

"uid_1" : 8176

},

"ok" : 1

}

},

"ok" : 1

}

mongos>

mongos> use nosql

switched to db nosql

mongos> for(var i=200;i<200003;i++)db.fans.save({uid:i,uname:'nosqlfans'+i});

mongos> db.fans.stats()

{

"sharded" : true,

"flags" : 1,

"ns" : "nosql.fans",

"count" : 200002,

"numExtents" : 12,

"size" : 12760184,

"storageSize" : 22646784,

"totalIndexSize" : 12116832,

"indexSizes" : {

"_id_" : 6508096,

"uid_1" : 5608736

},

"avgObjSize" : 63.80028199718003,

"nindexes" : 2,

"nchunks" : 10,

"shards" : {

"shard1" : {

"ns" : "nosql.fans",

"count" : 9554,

"size" : 573260,

"avgObjSize" : 60.00209336403601,

"storageSize" : 1396736,

"numExtents" : 5,

"nindexes" : 2,

"lastExtentSize" : 1048576,

"paddingFactor" : 1,

"flags" : 1,

"totalIndexSize" : 596848,

"indexSizes" : {

"_id_" : 318864,

"uid_1" : 277984

},

"ok" : 1

},

"shard2" : {

"ns" : "nosql.fans",

"count" : 190448,

"size" : 12186924,

"avgObjSize" : 63.990821641602956,

"storageSize" : 21250048,

"numExtents" : 7,

"nindexes" : 2,

"lastExtentSize" : 10067968,

"paddingFactor" : 1,

"flags" : 1,

"totalIndexSize" : 11519984,

"indexSizes" : {

"_id_" : 6189232,

"uid_1" : 5330752

},

"ok" : 1

}

},

"ok" : 1

}

mongos>
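In the stats above, shard2 holds far more documents than shard1. To put a number on that, here is a quick sketch, assuming a hypothetical helper `shard_share` that takes name=count pairs copied from the "count" fields and prints each shard's percentage:

```shell
#!/bin/sh
# Print each shard's share of the total document count.
# Arguments are name=count pairs, e.g. shard1=9554.
shard_share() {
    for pair in "$@"; do
        echo "${pair%%=*} ${pair#*=}"
    done | awk '{ name[NR] = $1; cnt[NR] = $2; total += $2 }
                END { for (i = 1; i <= NR; i++)
                          printf "%s %d %.2f%%\n", name[i], cnt[i], 100 * cnt[i] / total }'
}

shard_share shard1=9554 shard2=190448
# shard1 9554 4.78%
# shard2 190448 95.22%
```

Running db.fans.stats() again later and feeding in the new counts shows whether the distribution has evened out.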

[root@mon1 sh]# cat /opt/mongodb/sh/stop.sh

#!/bin/sh

check=`ps aux|grep mongo|grep configdb|awk '{print $2;}'|wc -l`

echo $check

while [ $check -gt 0 ]

do

# echo $check

no=`ps aux|grep mongo|grep configdb|awk '{print $2;}'|sed -n '1p'`

kill -2 $no

echo "kill $no mongo daemon is ok."

sleep 2

check=`ps aux|grep mongo|grep configdb|awk '{print $2;}'|wc -l`

echo "stopping mongo,pls waiting..."

done

check=`ps aux|grep mongo|grep configsvr|awk '{print $2;}'|wc -l`

echo $check

while [ $check -gt 0 ]

do

# echo $check

no=`ps aux|grep mongo|grep configsvr|awk '{print $2;}'|sed -n '1p'`

kill -2 $no

echo "kill $no mongo daemon is ok."

sleep 2

check=`ps aux|grep mongo|grep configsvr|awk '{print $2;}'|wc -l`

echo "stopping mongo,pls waiting..."

done

check=`ps aux|grep mongo|grep shardsvr|awk '{print $2;}'|wc -l`

echo $check

while [ $check -gt 0 ]

do

# echo $check

no=`ps aux|grep mongo|grep shardsvr|awk '{print $2;}'|sed -n '1p'`

kill -2 $no

echo "kill $no mongo daemon is ok."

sleep 2

check=`ps aux|grep mongo|grep shardsvr|awk '{print $2;}'|wc -l`

echo "stopping mongo,pls waiting..."

done

echo "all mongodb stopped!"
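The three loops in stop.sh repeat the same ps/grep/awk pipeline with a different keyword each time. A compact sketch of just the PID lookup, assuming a hypothetical helper `pids_for` that reads `ps aux` text on stdin and matches on the command column, so the grep processes themselves are never picked up:

```shell
#!/bin/sh
# Read `ps aux` text on stdin and print the PIDs of mongo processes whose
# command line contains the keyword (configdb, configsvr or shardsvr).
# Column 11 of `ps aux` is the executable, column 2 is the PID.
pids_for() {
    awk -v kw="$1" '$11 ~ /mongo/ && index($0, kw) { print $2 }'
}

# Demo with two lines in the format of the ps output shown earlier.
pids_for shardsvr <<'EOF'
root 25343 0.8 6.8 737596 20040 ? Sl 19:32 0:13 /opt/mongodb/bin/mongod -shardsvr -replSet shard1 -port 27021
root 25896 0.0 0.2 61220 732 pts/3 D+ 20:00 0:00 grep shardsvr
EOF
# 25343
```

On a live host it could be used as `for pid in $(ps aux | pids_for shardsvr); do kill -2 "$pid"; done`, stopping mongos first, then the config server, then the shard members, in the same order as stop.sh.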

>use admin

>db.runCommand({listshards:1})

>use admin

>db.runCommand( { removeshard : "shard1/192.168.8.30:27021,192.168.8.31:27021" } )

>db.runCommand( { removeshard : "shard2/192.168.8.30:27022,192.168.8.31:27022" } )

mongos> use config

switched to db config

mongos> show collections

changelog

chunks

collections

databases

lockpings

locks

mongos

settings

shards

system.indexes

version

mongos> db.shards.find()

{ "_id" : "shard1", "host" : "shard1/192.168.8.30:27021,192.168.8.31:27021,192.168.8.32:27021", "maxSize" : NumberLong(20480) }

{ "_id" : "shard2", "host" : "shard2/192.168.8.30:27022,192.168.8.31:27022,192.168.8.32:27022", "maxSize" : NumberLong(20480) }

High availability for mongos access will be covered in the next installment.

This article comes from the “->” blog; please contact the author before reprinting.