A problem installing Hadoop's Hive component with Ambari

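I have been deploying Hadoop recently and ran into a problem with the way Ambari deploys Hadoop's Hive component. I don't know whether anyone else has hit it.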

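Problem description: I used Ambari to build a fully distributed Hadoop 2.0 cluster. While testing Hive, I followed the official documentation and ran the command below to check the file system root, but it always failed with an error saying it could not connect to MySQL (java.sql.SQLException: Access denied for user 'hive'@'hdb3.yc.com' (using password: YES)).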

[root@hdb3 bin]# /usr/lib/hive/bin/metatool -listFSRoot

Key part of the error output:

14/02/20 13:21:09 WARN bonecp.BoneCPConfig: Max Connections < 1. Setting to 20
14/02/20 13:21:09 ERROR Datastore.Schema: Failed initialising database.
Unable to open a test connection to the given database. JDBC url = jdbc:mysql://hdb3.yc.com/hive?createDatabaseIfNotExist=true, username = hive. Terminating connection pool. Original Exception: ------
java.sql.SQLException: Access denied for user 'hive'@'hdb3.yc.com' (using password: YES)
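The useful facts in this error are the account MySQL rejected and the JDBC URL Hive used. The following is a minimal Python sketch for pulling them out of a log excerpt; the function name `summarize_failure` and the regular expressions are illustrative assumptions, not part of Hive or Ambari. Note that "using password: YES" only says the client sent some password, not that the password was the intended one.

```python
import re

# Matches the MySQL "Access denied" message in the form shown in the log above.
ACCESS_DENIED = re.compile(
    r"Access denied for user '(?P<user>[^']*)'@'(?P<host>[^']*)' "
    r"\(using password: (?P<used_password>YES|NO)\)"
)
# Matches the JDBC URL that the metastore reports just before the exception.
JDBC_URL = re.compile(r"JDBC url = (?P<url>\S+?),")


def summarize_failure(log_text):
    """Return the rejected account and the JDBC URL, or None if no denial is found."""
    denied = ACCESS_DENIED.search(log_text)
    if denied is None:
        return None
    url = JDBC_URL.search(log_text)
    return {
        "user": denied.group("user"),
        "host": denied.group("host"),
        "used_password": denied.group("used_password") == "YES",
        "jdbc_url": url.group("url") if url else None,
    }


log = (
    "Unable to open a test connection to the given database. "
    "JDBC url = jdbc:mysql://hdb3.yc.com/hive?createDatabaseIfNotExist=true, "
    "username = hive. Terminating connection pool. Original Exception: ------ "
    "java.sql.SQLException: Access denied for user 'hive'@'hdb3.yc.com' "
    "(using password: YES)"
)
print(summarize_failure(log))
```

The `user` and `host` values identify which MySQL account to inspect on the database server.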

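The error suggests that the hive user has no permission to access the MySQL database. However, when I tested it, connecting to the MySQL server with the hive user and its password worked fine. I had also granted privileges to the hive user in MySQL before the installation.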

# Create the Hive account and grant privileges:

mysql> CREATE USER 'hive'@'%' IDENTIFIED BY 'hive_passwd';

Query OK, 0 rows affected (0.00 sec)

mysql> GRANT ALL PRIVILEGES ON *.* TO 'hive'@'%';

Query OK, 0 rows affected (0.00 sec)

mysql> CREATE USER 'hive'@'hdb3.yc.com' IDENTIFIED BY 'hive_passwd';

mysql> GRANT ALL PRIVILEGES ON *.* TO 'hive'@'hdb3.yc.com';

Query OK, 0 rows affected (0.00 sec)

The connection test succeeds:

[root@hda3 ~]# mysql -h hdb3.yc.com -u hive -p
Enter password:
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 556
Server version: 5.1.73 Source distribution
Copyright (c) 2000, 2012, Oracle and/or its affiliates. All rights reserved.
Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
mysql>

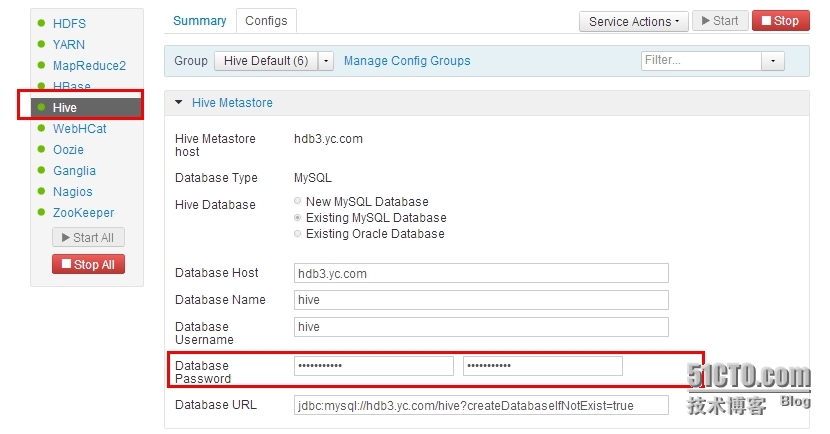

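So I checked the Hive configuration. On the Ambari management page (see the screenshot), the correct password had been entered in the Hive configuration. After restarting the Hive service, the test still failed.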

Later, while checking the configuration files on the server, I found the cause:

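The Hive database connection password was configured on that web page, but the configuration file on the Hive server, /usr/lib/hive/conf/hive-site.xml, actually contained no password setting. That is, the following property was missing: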

<property>
  <name>javax.jdo.option.ConnectionPassword</name>
  <value>hive_passwd</value>
</property>
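A quick way to confirm whether a given hive-site.xml carries the metastore connection settings is to parse it and look for the expected property names. The sketch below uses only the Python standard library; the helper names `load_properties` and `missing_properties` and the inline sample document are assumptions for illustration, and the list of required names is limited to the three JDO connection properties relevant here.

```python
import xml.etree.ElementTree as ET

# Metastore connection properties this check looks for.
REQUIRED = (
    "javax.jdo.option.ConnectionURL",
    "javax.jdo.option.ConnectionUserName",
    "javax.jdo.option.ConnectionPassword",
)


def load_properties(xml_text):
    """Parse Hadoop-style configuration XML into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def missing_properties(xml_text, required=REQUIRED):
    """Return the required property names that are absent from the file."""
    props = load_properties(xml_text)
    return [name for name in required if name not in props]


# Hypothetical excerpt resembling the file before the manual fix.
sample = """<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://hdb3.yc.com/hive?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
  </property>
</configuration>"""

print(missing_properties(sample))
```

To check the real file instead of the sample, `ET.parse("/usr/lib/hive/conf/hive-site.xml").getroot()` could replace the `ET.fromstring` call.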

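I edited the configuration file by hand and added this password property. Without restarting the Hive service, I ran the command below again to check the file system root, and this time there was no error.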

[root@hdb3 bin]# /usr/lib/hive/bin/metatool -listFSRoot
Initializing HiveMetaTool..
14/02/20 14:29:22 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive
14/02/20 14:29:22 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize
14/02/20 14:29:22 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize
14/02/20 14:29:22 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack
14/02/20 14:29:22 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node
14/02/20 14:29:22 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces
14/02/20 14:29:22 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative
14/02/20 14:29:23 INFO metastore.ObjectStore: ObjectStore, initialize called
14/02/20 14:29:23 INFO DataNucleus.Persistence: Property datanucleus.cache.level2 unknown - will be ignored
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/lib/hadoop/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/lib/hive/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
14/02/20 14:29:24 WARN bonecp.BoneCPConfig: Max Connections < 1. Setting to 20
14/02/20 14:29:24 INFO metastore.ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,Database,Type,FieldSchema,Order"
14/02/20 14:29:24 INFO metastore.ObjectStore: Initialized ObjectStore
14/02/20 14:29:25 WARN bonecp.BoneCPConfig: Max Connections < 1. Setting to 20
Listing FS Roots..
hdfs://hda1.yc.com:8020/apps/hive/warehouse

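However, after the Hive service is restarted from the Ambari management interface, the property is automatically removed from the file again.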
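One way to see exactly what a restart strips out is to keep a copy of the hand-edited file and compare its property names with the regenerated one. The sketch below assumes such a backup was saved before the restart; the function names and the two inline sample documents are hypothetical stand-ins for the real files.

```python
import xml.etree.ElementTree as ET


def property_names(xml_text):
    """Return the set of property names in a Hadoop-style configuration XML."""
    root = ET.fromstring(xml_text)
    names = set()
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            names.add(name.strip())
    return names


def dropped_properties(before_xml, after_xml):
    """List properties present before the restart but absent afterwards."""
    return sorted(property_names(before_xml) - property_names(after_xml))


# Hypothetical contents: the hand-edited file, then the file after the restart.
before = """<configuration>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>hive_passwd</value>
  </property>
</configuration>"""

after = """<configuration>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
  </property>
</configuration>"""

print(dropped_properties(before, after))
```

If only the password property shows up in the result, the restart is rewriting the file without it rather than changing other settings.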

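As a result, running /usr/lib/hive/bin/metatool -listFSRoot fails to connect to the MySQL database. I don't know whether something in my configuration is wrong or whether this is a bug in Ambari.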