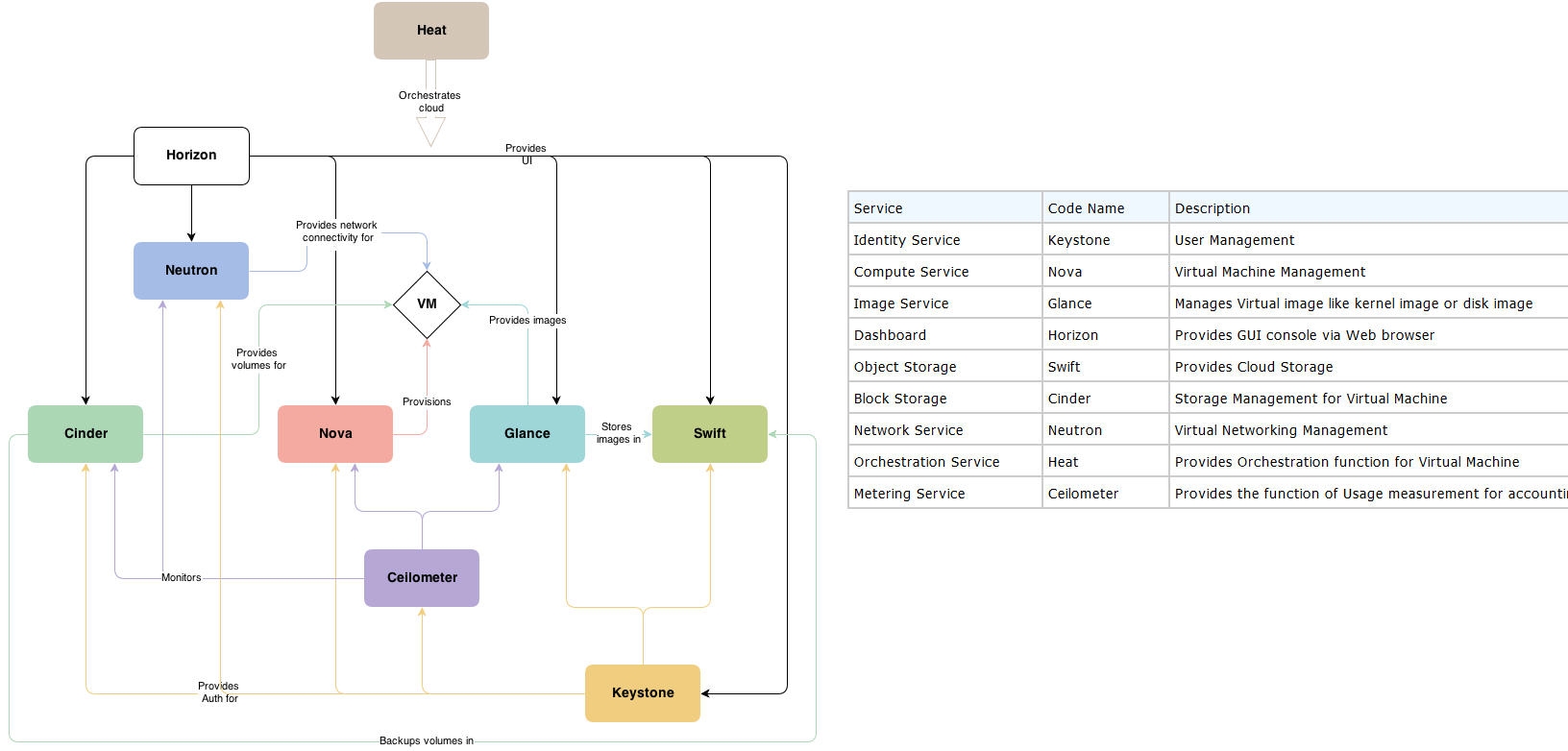

OpenStack conceptual architecture

Based on OpenStack Icehouse release

calculate instances for openstack

cpu_allocation_ratio=16.0

ram_allocation_ratio=1.5

nova flavor-list

number of instances = ( cpu_allocation_ratio x total physical cores ) / virtual cores per instance

number of instances = ( ram_allocation_ratio x total physical memory ) / flavor memory size

disk space for instances = number of instances x flavor disk size ( stored under /var/lib/nova/instances )
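A minimal Python sketch of the formulas above. The host figures (12 physical cores, 64 GB of RAM) and the flavor (1 vCPU, 2048 MB RAM, 20 GB disk) are illustrative assumptions, not values from these notes. Taking the smaller of the CPU-bound and RAM-bound results is also an assumption; the notes list the two formulas separately.

```python
def max_instances(physical_cores, physical_ram_mb, flavor_vcpus, flavor_ram_mb,
                  cpu_allocation_ratio=16.0, ram_allocation_ratio=1.5):
    """Return how many instances of one flavor fit on a host.

    The two ratios default to the nova.conf values shown in the notes.
    """
    # CPU-bound limit: (cpu_allocation_ratio x physical cores) / vCPUs per instance
    by_cpu = int(cpu_allocation_ratio * physical_cores // flavor_vcpus)
    # RAM-bound limit: (ram_allocation_ratio x physical memory) / flavor memory
    by_ram = int(ram_allocation_ratio * physical_ram_mb // flavor_ram_mb)
    # Assumption: the tighter of the two limits is the practical ceiling.
    return min(by_cpu, by_ram)


def instances_disk_gb(number_of_instances, flavor_disk_gb):
    """Disk space needed under /var/lib/nova/instances, in GB."""
    return number_of_instances * flavor_disk_gb


# Hypothetical host: 12 cores, 65536 MB RAM; hypothetical flavor: 1 vCPU, 2048 MB, 20 GB
count = max_instances(12, 65536, 1, 2048)
print(count)                         # RAM is the limiting resource here
print(instances_disk_gb(count, 20))  # GB of local disk for that many instances
```

The real flavor values come from `nova flavor-list`; the real ratios come from nova.conf.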

Load environment variables

unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT

vi ~/adminrc

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN-USER-PASSWORD

export OS_TENANT_NAME=admin

export OS_AUTH_URL=http://controller:35357/v2.0

chmod 600 ~/adminrc ; source ~/adminrc

echo "source ~/adminrc " >> ~/.bash_profile

Get user-role-list script

vi get-user-role-list.py

#!/usr/bin/python
# List the roles each user holds in each tenant (Keystone v2.0 API).
# Usage: get-user-role-list.py [-u USER_FILTER] [-t TENANT_FILTER]
import os
import sys

import prettytable
from keystoneclient.v2_0 import client

# Credentials come from the environment set up by ~/adminrc.
keystone = client.Client(username=os.environ['OS_USERNAME'],
                         password=os.environ['OS_PASSWORD'],
                         tenant_name=os.environ['OS_TENANT_NAME'],
                         auth_url=os.environ['OS_AUTH_URL'])

# Optional substring filters; an empty string matches every name.
f_user = f_tenant = ""
if "-u" in sys.argv:
    f_user = sys.argv[sys.argv.index("-u") + 1]
if "-t" in sys.argv:
    f_tenant = sys.argv[sys.argv.index("-t") + 1]

tenants = [t for t in keystone.tenants.list() if f_tenant in t.name]
users = [u for u in keystone.users.list() if f_user in u.name]

# One row per user, one column per tenant; each cell lists the role names.
pt = prettytable.PrettyTable(["name"] + [t.name for t in tenants])
for user in users:
    row = [user.name]
    for tenant in tenants:
        row.append("\n".join([r.name for r in user.list_roles(tenant.id)]))
    pt.add_row(row)

print(pt.get_string(sortby="name"))

chmod +x get-user-role-list.py

Notes:

Install OpenStack Clients

yum -y install python-ceilometerclient python-cinderclient python-glanceclient python-heatclient python-keystoneclient python-neutronclient python-novaclient python-swiftclient python-troveclient

Using standalone mysql server:

Hosted MySQL Server

yum -y install mysql mysql-server MySQL-python

all other openstack nodes

yum -y install mysql MySQL-python openstack-utils

1. The public_url parameter is the URL that end users connect to. In a public cloud environment, this is a public URL that resolves to a public IP address.

2. The admin_url parameter is a restricted address for administration. In a public deployment, keep it separate from the public_url by presenting the service on a different, restricted URL. Some services also use a different URI for their admin service, which is set with this attribute.

3. The internal_url parameter is the IP address or URL that exists only within the private local area network. It lets you reach services from inside your cloud environment without going over public IP address space, which could incur data charges for traversing the Internet. It is also potentially more secure and less complex.
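To make the three URL types concrete, here is a small Python sketch that builds them for the Identity service. The hostnames `cloud.example.com` and `controller-admin` are hypothetical. The ports are Keystone's defaults (5000 for the public API, 35357 for the admin API). The dictionary keys mirror the `--publicurl`, `--adminurl` and `--internalurl` options of `keystone endpoint-create`.

```python
def keystone_endpoint_urls(public_host, internal_host, admin_host,
                           public_port=5000, admin_port=35357, version="v2.0"):
    """Build the three endpoint URLs for the Identity service.

    All host names passed in are the caller's own; nothing is looked up.
    """
    return {
        # Reachable by end users, resolves to a public IP address.
        "publicurl": "http://%s:%d/%s" % (public_host, public_port, version),
        # Restricted address used only for administration.
        "adminurl": "http://%s:%d/%s" % (admin_host, admin_port, version),
        # Exists only on the private LAN between the cloud's own nodes.
        "internalurl": "http://%s:%d/%s" % (internal_host, public_port, version),
    }


# Hypothetical hosts for illustration only.
urls = keystone_endpoint_urls("cloud.example.com", "controller", "controller-admin")
for key in sorted(urls):
    print("--%s=%s" % (key, urls[key]))
```

In a small lab all three hosts are often the same controller node; the split matters once the public address differs from the management network.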

KVM

yum -y install qemu-kvm libvirt python-virtinst bridge-utils

yum -y install httpd

ln -s /media /var/www/html/centos6

service httpd start

for using virt-manager

qemu-img create -f qcow2 /var/lib/libvirt/images/centos6.img 10G

virt-install -n centos6 -r 1024 --disk path=/var/lib/libvirt/images/centos6.img,format=qcow2 --vcpus=1 --os-type linux --os-variant=rhel6 --graphics none --location=http://192.168.1.12/centos6 --extra-args='console=tty0 console=ttyS0,115200n8 serial'

sohu epel:

[epel]

name=epel

baseurl=http://mirrors.sohu.com/fedora-epel/6Server/x86_64/

enabled=1

gpgcheck=0

my notes:

vi /root/checkiso.sh

#!/bin/bash

DIR=/icehouse

if [ "$(ls -A $DIR 2> /dev/null)" == '' ]; then

mount -o loop /tmp/OpenStackIcehouse.iso /icehouse/

fi

chmod +x /root/checkiso.sh

echo "/root/checkiso.sh" >> /root/.bash_profile

nova live migration libvirt related configuration:

for CentOS 6.5

vi /etc/libvirt/libvirtd.conf

listen_tls = 0

listen_tcp = 1

auth_tcp = "none"

vi /etc/sysconfig/libvirtd

LIBVIRTD_ARGS="--listen"

service libvirtd restart

for Ubuntu 14.04

vi /etc/libvirt/libvirtd.conf

listen_tls = 0

listen_tcp = 1

auth_tcp = "none"

vi /etc/init/libvirt-bin.conf

Before: exec /usr/sbin/libvirtd $libvirtd_opts

After: exec /usr/sbin/libvirtd -d -l

vi /etc/default/libvirt-bin

Before: libvirtd_opts=" -d"

After: libvirtd_opts=" -d -l"

service libvirt-bin restart
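After restarting libvirtd, it can help to confirm that the daemon is actually listening on TCP before trying a live migration. The sketch below only tests whether a TCP connection can be opened; it does not speak the libvirt protocol. Port 16509 is libvirt's default for unencrypted TCP, and the host name `compute2` in the usage comment is hypothetical.

```python
import socket


def libvirt_tcp_reachable(host, port=16509, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        sock = socket.create_connection((host, port), timeout=timeout)
    except (socket.error, socket.timeout):
        # Connection refused, host unreachable, or timed out.
        return False
    sock.close()
    return True


# Usage, run from one compute node against the other (hypothetical host name):
#   libvirt_tcp_reachable("compute2")
```

A False result usually means libvirtd was not started with `--listen` (or `-l`), or a firewall is blocking the port.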

my notes:

controller node:

[ml2]

type_drivers=vlan

tenant_network_types=vlan

mechanism_drivers=openvswitch

[ml2_type_vlan]

network_vlan_ranges = physnet1:1:4094

network node:

[ml2]

type_drivers=vlan

tenant_network_types=vlan

mechanism_drivers=openvswitch

[ml2_type_vlan]

network_vlan_ranges = physnet1:1:4094

[ovs]

bridge_mappings = physnet1:br-eth1

vi /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

ONBOOT=yes

BOOTPROTO=none

ovs-vsctl add-br br-eth1

ovs-vsctl add-port br-eth1 eth1

compute node:

[ml2]

type_drivers=vlan

tenant_network_types=vlan

mechanism_drivers=openvswitch

[ml2_type_vlan]

network_vlan_ranges = physnet1:1:4094

[ovs]

bridge_mappings = physnet1:br-eth1

vi /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

ONBOOT=yes

BOOTPROTO=none

ovs-vsctl add-br br-eth1

ovs-vsctl add-port br-eth1 eth1
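Every physical network named in network_vlan_ranges needs a matching entry in bridge_mappings on the network and compute nodes. The Python sketch below checks that for the values used in these notes. It is a simplified, hypothetical parser that mimics the `physnet:min:max` and `physnet:bridge` formats; it is not Neutron's own parsing code.

```python
def parse_vlan_ranges(value):
    """'physnet1:1:4094' -> {'physnet1': [(1, 4094)]}"""
    ranges = {}
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        parts = entry.split(":")
        physnet = parts[0]
        ranges.setdefault(physnet, [])
        if len(parts) == 3:
            low, high = int(parts[1]), int(parts[2])
            # Valid 802.1Q VLAN IDs are 1 through 4094.
            if not 1 <= low <= high <= 4094:
                raise ValueError("bad VLAN range: %s" % entry)
            ranges[physnet].append((low, high))
        elif len(parts) != 1:
            raise ValueError("expected physnet or physnet:min:max: %s" % entry)
    return ranges


def parse_bridge_mappings(value):
    """'physnet1:br-eth1' -> {'physnet1': 'br-eth1'}"""
    mappings = {}
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        physnet, bridge = entry.split(":")
        mappings[physnet] = bridge
    return mappings


def unmapped_physnets(vlan_ranges, bridge_mappings):
    """Physical networks that have VLAN ranges but no OVS bridge mapping."""
    return sorted(set(parse_vlan_ranges(vlan_ranges)) -
                  set(parse_bridge_mappings(bridge_mappings)))


# Values taken from the configuration above.
print(unmapped_physnets("physnet1:1:4094", "physnet1:br-eth1"))
```

An empty list means each physical network has a bridge; any name that is printed still needs an `ovs-vsctl add-br` and a bridge_mappings entry on that node.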