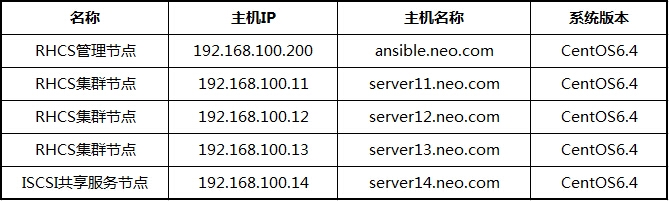

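RHCS High-Availability Deployment and GFS2/CLVM Cluster File System Usage

Note: because there are quite a few nodes to configure, some operations are run as simple ad-hoc ansible commands. To keep each step easy to follow, they are not written as a playbook;

Topology diagram:

1. Make sure the clocks on all nodes are synchronized;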

[root@ansible ~]# ansible group1 -a 'date'

server13.neo.com | success | rc=0 >>

Sat Jul 26 10:31:49 CST 2014

server11.neo.com | success | rc=0 >>

Sat Jul 26 10:31:50 CST 2014

server12.neo.com | success | rc=0 >>

Sat Jul 26 10:31:50 CST 2014
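Comparing three `date` lines by eye works for a handful of nodes. As a minimal sketch of how the check could be automated, the helper below takes epoch timestamps (for example collected with `date +%s` on each node) and prints the spread between the earliest and latest one. The function name `max_skew` is hypothetical, not part of ansible or RHCS, and what counts as an acceptable spread is left to the reader.

```shell
#!/bin/sh
# max_skew (hypothetical helper): print the difference in seconds between
# the largest and smallest epoch timestamp passed as arguments.
max_skew() {
    min=$1
    max=$1
    for t in "$@"; do
        if [ "$t" -lt "$min" ]; then min=$t; fi
        if [ "$t" -gt "$max" ]; then max=$t; fi
    done
    echo $((max - min))
}
```

Called as `max_skew 1406341909 1406341910 1406341910` (illustrative values one second apart), it prints `1`.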

2. Make sure NetworkManager is disabled;

[root@ansible ~]# ansible group1 -a 'chkconfig --list NetworkManager'

server11.neo.com | success | rc=0 >>

NetworkManager 0:off 1:off 2:off 3:off 4:off 5:off 6:off

server13.neo.com | success | rc=0 >>

NetworkManager 0:off 1:off 2:off 3:off 4:off 5:off 6:off

server12.neo.com | success | rc=0 >>

NetworkManager 0:off 1:off 2:off 3:off 4:off 5:off 6:off
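The same check can be scripted. The sketch below assumes the `chkconfig --list` line format shown above (`name 0:off 1:off ...`) and reports success only when no runlevel is `on`. The function name `all_runlevels_off` is hypothetical.

```shell
#!/bin/sh
# all_runlevels_off (hypothetical helper): succeed if a single
# `chkconfig --list <service>` line shows the service off in every runlevel.
all_runlevels_off() {
    case "$1" in
        *:on*)  return 1 ;;   # at least one runlevel is enabled
        *:off*) return 0 ;;   # only "off" entries were found
        *)      return 1 ;;   # unrecognized line, treat as a failure
    esac
}
```

For example, `all_runlevels_off "NetworkManager 0:off 1:off 2:off 3:off 4:off 5:off 6:off"` returns success, while a line containing `3:on` does not.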

I. RHCS high-availability deployment

1. Install the luci component on the RHCS management node

[root@ansible ~]# yum install -y luci --disablerepo=epel

[root@ansible ~]# service luci start

[root@ansible ~]# netstat -tunlp |grep 8084

2. Install the ricci component on the RHCS cluster nodes

[root@ansible ~]# ansible group1 -m yum -a 'name=ricci state=present disablerepo=epel'

[root@ansible ~]# ansible group1 -m service -a 'name=ricci state=started enabled=yes'

[root@ansible ~]# ansible group1 -m shell -a 'netstat -tunlp |grep ricci'

3. Add a web service to every RHCS cluster node, provide a home page, and set a password for the ricci user

[root@ansible ~]# ansible group1 -m yum -a 'name=httpd state=present'

[root@ansible ~]# ansible group1 -m shell -a 'echo "<h1>`uname -n`</h1>" >/var/www/html/index.html'

[root@ansible ~]# ansible group1 -m service -a 'name=httpd state=started enabled=yes'

[root@ansible ~]# ansible group1 -m shell -a 'echo asdasd |passwd --stdin ricci'

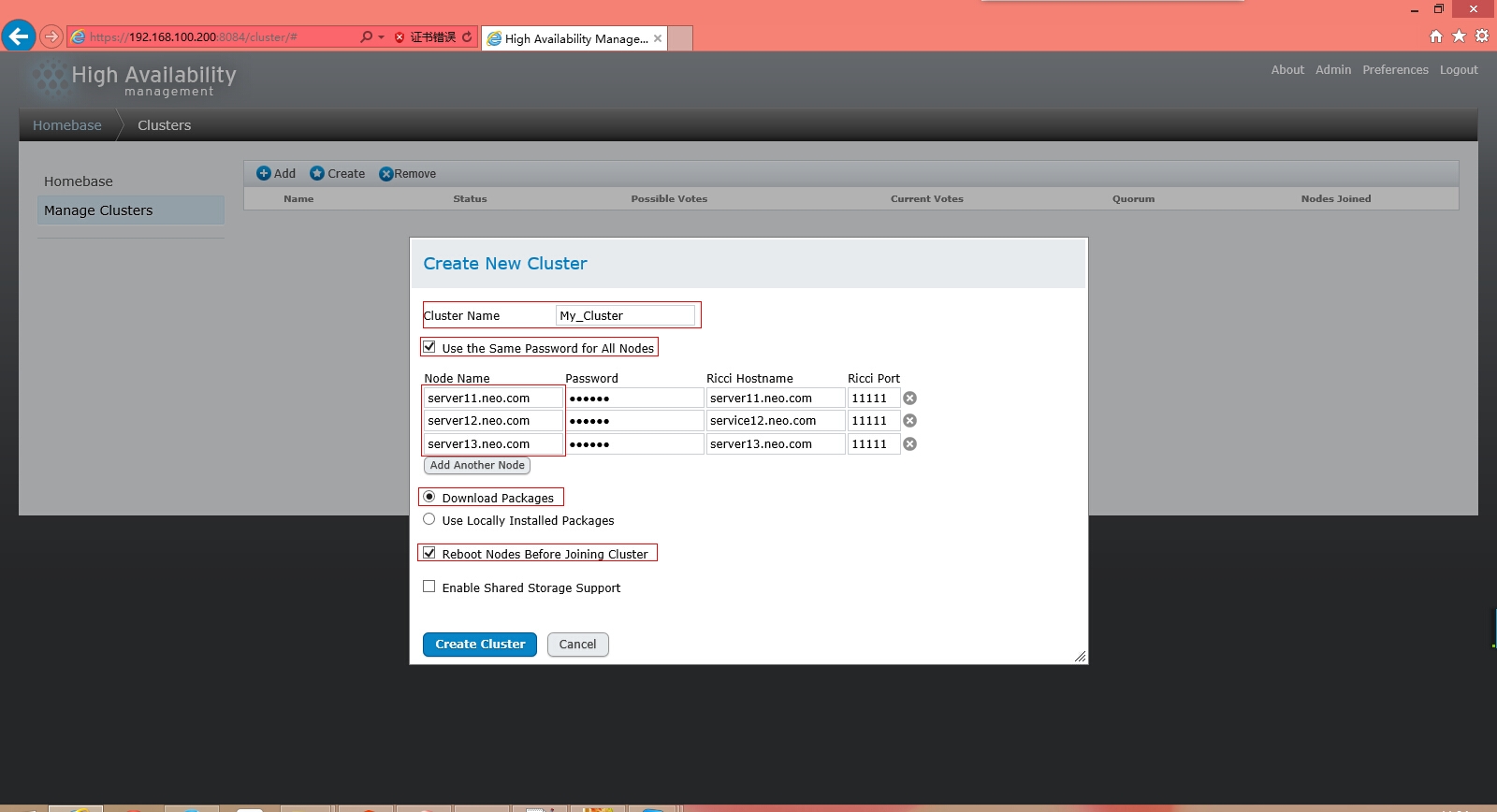

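4. Configure the high-availability service through the web GUI

1) Open https://192.168.100.200:8084 in a browser;

2) Log in with the root account and password;

3) Create the cluster;

Note that the Password next to each Node Name is the password we set for the ricci user;

4) Define a failover domain under "FailOver Domains" and set the "Priority" values;

5) Add resources under "Resources". LSB-style services must be added as type Script. Add two resources: the VIP and httpd;

6) Define a group ("Group") and add the VIP and httpd to it;

7) Start the group;

8) Simulate a failure by moving the group's resources between nodes in the web GUI;

We can also check node status from the shell:

[root@server11 ~]# clustat

Cluster Status for My_Cluster @ Sat Jul 26 11:28:36 2014

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server11.neo.com 1 Online, Local, rgmanager

server12.neo.com 2 Online, rgmanager

server13.neo.com 3 Online, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:webserver server13.neo.com started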
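When testing failover it helps to pull just the current owner of a service out of the `clustat` output. The following is a minimal sketch that assumes the service line has the three-column layout shown above (`service:NAME OWNER STATE`). The function name `service_owner` is hypothetical.

```shell
#!/bin/sh
# service_owner (hypothetical helper): read clustat output on stdin and
# print the node that currently owns the service named in $1.
service_owner() {
    awk -v svc="service:$1" '$1 == svc { print $2 }'
}
```

A possible use is `clustat | service_owner webserver`, which for the output above would print `server13.neo.com`. After relocating the group, running it again shows whether the owner changed.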

At this point a simple highly available web cluster service has been deployed.

Related command-line management tools:

clusvcadm

cman_tool

ccs_tool

The cman configuration file is /etc/cluster/cluster.conf

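II. Deploying the GFS2/CLVM cluster file system

The RHCS deployment above used the ricci and luci suite together with the Conga graphical interface. Deploying the cluster file system below does not need the graphical interface, so we simply install the components by hand;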

1. Install cman, corosync and rgmanager on the cluster nodes

# ansible group1 -m yum -a 'name=cman state=present'

# ansible group1 -m yum -a 'name=corosync state=present'

# ansible group1 -m yum -a 'name=rgmanager state=present'

2. Create the cluster on any one cluster node and add the cluster node information;

This has to be done by hand: a fresh installation has no configuration file, and CMAN cannot start without one;

[root@server11 ~]# ccs_tool create My_Cluster

[root@server11 ~]# ccs_tool addnode server11.neo.com -n 1 -v 1

[root@server11 ~]# ccs_tool addnode server12.neo.com -n 2 -v 1

[root@server11 ~]# ccs_tool addnode server13.neo.com -n 3 -v 1

[root@server11 ~]# ccs_tool lsnode //list the cluster nodes;

Cluster name: My_Cluster, config_version: 4

Nodename Votes Nodeid Fencetype

server11.neo.com 1 1

server12.neo.com 1 2

server13.neo.com 1 3

[root@server11 ~]# cat /etc/cluster/cluster.conf //view the CMAN configuration file;

<?xml version="1.0"?>

<cluster name="My_Cluster" config_version="4">

<clusternodes>

<clusternode name="server11.neo.com" votes="1" nodeid="1"/>

<clusternode name="server12.neo.com" votes="1" nodeid="2"/>

<clusternode name="server13.neo.com" votes="1" nodeid="3"/>

</clusternodes>

<fencedevices>

</fencedevices>

<rm>

<failoverdomains/>

<resources/>

</rm>

</cluster>

Copy cluster.conf from server11 to the other cluster nodes. As I recall, on RHEL5 the file was propagated to the other nodes automatically, but for some reason that does not happen on RHEL6, so the next two steps copy it by hand;

[root@server11 ~]# scp -p /etc/cluster/cluster.conf server12:/etc/cluster/

[root@server11 ~]# scp -p /etc/cluster/cluster.conf server13:/etc/cluster/
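The `-v 1` arguments above give each node one vote. As a rough sketch, assuming the usual majority rule (more than half of the total votes), the number of votes the cluster needs to stay quorate can be worked out as follows. The function name `quorum_votes` is hypothetical and is not a cman tool.

```shell
#!/bin/sh
# quorum_votes (hypothetical helper): given the vote count of each node,
# print the number of votes needed for a majority: floor(total / 2) + 1.
quorum_votes() {
    total=0
    for v in "$@"; do
        total=$((total + v))
    done
    echo $((total / 2 + 1))
}
```

With the three one-vote nodes defined above, `quorum_votes 1 1 1` prints `2`, so under this rule the cluster stays quorate with one node down but not with two.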

3. Start the service

[root@ansible ~]# ansible group1 -m service -a 'name=cman state=started enabled=yes' //start the CMAN service and enable it at boot;

4. Install and configure the iSCSI target and initiators

[root@ansible ~]# ansible group1 -m yum -a 'name=iscsi-initiator-utils state=present' //install the iSCSI initiator software on every cluster node;

[root@server14 ~]# yum install scsi-target-utils -y //install the iSCSI target software on the iSCSI server

[root@server14 ~]# cat /etc/tgt/targets.conf //configuration file;

default-driver iscsi

<target iqn.2014-07.com.neo:server14.target2>

backing-store /dev/sdb1

backing-store /dev/sdb2

initiator-address 192.168.100.0/24

</target>

[root@server14 ~]# service tgtd start //start the iSCSI target;

Starting SCSI target daemon: [ OK ]

[root@server14 ~]# chkconfig tgtd on //enable it at boot;

[root@server14 ~]# tgtadm -L iscsi -m target -o show //show status information;

Target 1: iqn.2014-07.com.neo:server14.target2

System information:

Driver: iscsi

State: ready

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

Backing store type: null

Backing store path: None

Backing store flags:

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 10742 MB, Block size: 512

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

Backing store type: rdwr

Backing store path: /dev/sdb1

Backing store flags:

LUN: 2

Type: disk

SCSI ID: IET 00010002

SCSI SN: beaf12

Size: 10742 MB, Block size: 512

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

Backing store type: rdwr

Backing store path: /dev/sdb2

Backing store flags:

Account information:

ACL information:

192.168.100.0/24

Give every cluster node its own unique iSCSI initiator name;

[root@ansible ~]# ansible group1 -m shell -a 'echo "InitiatorName=`iscsi-iname -p iqn.2014-07.com.neo`" >/etc/iscsi/initiatorname.iscsi'

Check that the names are correct;

[root@ansible ~]# ansible group1 -m shell -a 'cat /etc/iscsi/initiatorname.iscsi'

server11.neo.com | success | rc=0 >>

InitiatorName=iqn.2014-07.com.neo:bb39eb84c2

server12.neo.com | success | rc=0 >>

InitiatorName=iqn.2014-07.com.neo:3032a51c13da

server13.neo.com | success | rc=0 >>

InitiatorName=iqn.2014-07.com.neo:c82593bb8d

[root@ansible ~]# ansible group1 -m shell -a 'iscsiadm -m discovery -p 192.168.100.14 -t sendtargets' //every cluster node runs discovery against the target host once;

[root@ansible ~]# ansible group1 -m shell -a 'iscsiadm -m node -T iqn.2014-07.com.neo:server14.target2 -p 192.168.100.14 -l' //every cluster node logs in and connects to the target;

[root@ansible ~]# ansible group1 -m yum -a 'name=gfs2-utils state=present' //install the GFS2 tools;

The following steps are run on any one cluster node:

[root@server11 ~]# fdisk /dev/sdb

[root@server11 ~]# partx -a /dev/sdb

[root@server11 ~]# mkfs.gfs2 -h

Usage:

mkfs.gfs2 [options] <device> [ block-count ]

Options:

-b <bytes> Filesystem block size

-c <MB> Size of quota change file

-D Enable debugging code

-h Print this help, then exit

-J <MB> Size of journals

-j <num> Number of journals //number of journals; create one for each cluster node;

-K Don't try to discard unused blocks

-O Don't ask for confirmation

-p <name> Name of the locking protocol //locking protocol; for a cluster file system we use lock_dlm;

-q Don't print anything

-r <MB> Resource Group Size

-t <name> Name of the lock table //lock table name, in the form ClusterName:string

-u <MB> Size of unlinked file

-V Print program version information, then exit

[root@server11 ~]# mkfs.gfs2 -j 3 -t My_Cluster:myweb -p lock_dlm /dev/sdb1 //create the cluster file system;

This will destroy any data on /dev/sdb1.

It appears to contain: data

Are you sure you want to proceed? [y/n] y

Device: /dev/sdb1

Blocksize: 4096

Device Size 10.00 GB (2622460 blocks)

Filesystem Size: 10.00 GB (2622458 blocks)

Journals: 3

Resource Groups: 41

Locking Protocol: "lock_dlm"

Lock Table: "My_Cluster:myweb"

UUID: c6d9c780-9a94-e245-0471-a16c55e6b1c1

[root@server11 ~]# ansible group1 -m shell -a 'mount /dev/sdb1 /root/gfs2' //all nodes mount this cluster file system and can read files on it at the same time;
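The `-t` value has to be in the form `ClusterName:string`, and the cluster part must match the cluster name in cluster.conf (here `My_Cluster`), otherwise the nodes will not be able to mount the file system. As a minimal sketch, the helper below checks a lock table name before it is passed to `mkfs.gfs2`. The function name `valid_lock_table` is hypothetical, and the 16-character limit on the file system part is an assumption taken from my reading of the mkfs.gfs2 man page, so verify it against the version installed.

```shell
#!/bin/sh
# valid_lock_table (hypothetical helper): check a GFS2 lock table name.
#   $1 = lock table name, e.g. My_Cluster:myweb
#   $2 = cluster name from /etc/cluster/cluster.conf
valid_lock_table() {
    case "$1" in
        *:*) ;;              # must contain a colon separator
        *)   return 1 ;;
    esac
    cluster=${1%%:*}         # text before the first colon
    fsname=${1#*:}           # text after the first colon
    if [ -z "$cluster" ] || [ -z "$fsname" ]; then return 1; fi
    if [ "$cluster" != "$2" ]; then return 1; fi
    # Assumption: the file system name may be at most 16 characters long.
    [ "${#fsname}" -le 16 ]
}
```

For example, `valid_lock_table My_Cluster:myweb My_Cluster` succeeds, while `valid_lock_table Other:myweb My_Cluster` fails because the cluster name does not match.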

CLVM clustered logical volumes

Here we continue in the GFS2 environment set up above;

Install lvm2-cluster on every cluster node

[root@ansible ~]# ansible group1 -m yum -a 'name=lvm2-cluster state=present disablerepo=epel'

[root@ansible ~]# ansible group1 -m shell -a 'lvmconf --enable-cluster' //enable LVM cluster locking, which is off by default; alternatively, set "locking_type = 3" directly in /etc/lvm/lvm.conf;

[root@ansible ~]# ansible group1 -m service -a 'name=clvmd state=started enabled=yes' //start clvmd and enable it at boot;
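To confirm that `lvmconf --enable-cluster` took effect, the active `locking_type` setting can be read back from the configuration file. The sketch below assumes the usual `locking_type = N` syntax of lvm.conf and ignores commented-out lines. The function name `locking_type` is hypothetical.

```shell
#!/bin/sh
# locking_type (hypothetical helper): print the value of the last
# uncommented "locking_type = N" line in the file given as $1.
locking_type() {
    sed -n 's/^[[:space:]]*locking_type[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$1" | tail -n 1
}
```

Running `locking_type /etc/lvm/lvm.conf` on a node should print `3` once cluster locking is enabled.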

As before, create new partitions on any one cluster node, then create the PV, VG and LV;

[root@server11 ~]# fdisk /dev/sdc

Command (m for help): n

p

Partition number (1-4): 1

First cylinder (1-1020, default 1):

Using default value 1

Last cylinder, +cylinders or +size{K,M,G} (1-1020, default 1020): +1G

Command (m for help): n

p

Partition number (1-4): 2

Last cylinder, +cylinders or +size{K,M,G} (206-1020, default 1020): +1G

Command (m for help): w

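Make the kernel on every node re-read the partitions (the iSCSI disk was already connected and logged in on every node, so when one node partitions it, the kernels on the other nodes do not see the new partitions);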

[root@ansible ~]# ansible group1 -m shell -a 'partx -a /dev/sdc'

[root@server11 ~]# pvcreate /dev/sdc1 //create the PV;

Physical volume "/dev/sdc1" successfully created

[root@server11 ~]# vgcreate vg_clvm /dev/sdc1 //create the VG;

Clustered volume group "vg_clvm" successfully created

[root@server11 ~]# lvcreate -L 1G -n lv_clvm vg_clvm //create the LV;

Logical volume "lv_clvm" created

Now check that the other cluster nodes can all see the result of the operations performed on server11;

[root@ansible ~]# ansible group1 -m shell -a 'vgs'

server11.neo.com | success | rc=0 >>

VG #PV #LV #SN Attr VSize VFree

vg_clvm 1 1 0 wz--nc 1.00g 4.00m

vg_server 1 2 0 wz--n- 19.51g 0

server12.neo.com | success | rc=0 >>

VG #PV #LV #SN Attr VSize VFree

vg_clvm 1 1 0 wz--nc 1.00g 4.00m

vg_server 1 2 0 wz--n- 19.51g 0

server13.neo.com | success | rc=0 >>

VG #PV #LV #SN Attr VSize VFree

vg_clvm 1 1 0 wz--nc 1.00g 4.00m

vg_server 1 2 0 wz--n- 19.51g 0
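In the `vgs` output above, the sixth character of the Attr column is `c` for vg_clvm and `-` for the local vg_server, which is how LVM marks a clustered volume group. A small sketch for testing that flag in a script follows. The function name `is_clustered_vg` is hypothetical.

```shell
#!/bin/sh
# is_clustered_vg (hypothetical helper): succeed if the sixth character
# of a vgs Attr string (for example "wz--nc") is "c", meaning clustered.
is_clustered_vg() {
    case "$1" in
        ?????c*) return 0 ;;
        *)       return 1 ;;
    esac
}
```

For the values shown above, `is_clustered_vg wz--nc` succeeds and `is_clustered_vg wz--n-` fails.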

[root@ansible ~]# ansible group1 -m shell -a 'lvs'

server12.neo.com | success | rc=0 >>

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

lv_clvm vg_clvm -wi-a----- 1.00g

lv_root vg_server -wi-ao---- 17.57g

lv_swap vg_server -wi-ao---- 1.94g

server13.neo.com | success | rc=0 >>

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

lv_clvm vg_clvm -wi-a----- 1.00g

lv_root vg_server -wi-ao---- 17.57g

lv_swap vg_server -wi-ao---- 1.94g

server11.neo.com | success | rc=0 >>

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

lv_clvm vg_clvm -wi-a----- 1.00g

lv_root vg_server -wi-ao---- 17.57g

lv_swap vg_server -wi-ao---- 1.94g

Create the GFS2 file system;

[root@server11 ~]# mkfs.gfs2 -j 3 -t My_Cluster:clvm -p lock_dlm /dev/mapper/vg_clvm-lv_clvm

This will destroy any data on /dev/mapper/vg_clvm-lv_clvm.

It appears to contain: symbolic link to `../dm-2'

Are you sure you want to proceed? [y/n] y

Device: /dev/mapper/vg_clvm-lv_clvm

Blocksize: 4096

Device Size 1.00 GB (262144 blocks)

Filesystem Size: 1.00 GB (262142 blocks)

Journals: 3

Resource Groups: 4

Locking Protocol: "lock_dlm"

Lock Table: "My_Cluster:clvm"

UUID: dfcd6df8-c309-9fba-c19f-3f8d174096d9
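Each journal takes space away from the file system, which matters on a small volume like this 1 GB LV. As a rough estimate only, assuming a default journal size of 128 MB when `-J` is not given (an assumption to check against the installed mkfs.gfs2 man page), the space reserved for journals can be sketched as below. The function name `journal_space_mb` is hypothetical.

```shell
#!/bin/sh
# journal_space_mb (hypothetical helper): estimate the space used by
# GFS2 journals in MB.
#   $1 = number of journals (the -j value)
#   $2 = journal size in MB (the -J value); defaults to 128, which is an
#        assumed default and should be verified for your mkfs.gfs2 version
journal_space_mb() {
    echo $(( $1 * ${2:-128} ))
}
```

With `-j 3` and the assumed default, `journal_space_mb 3` prints `384`. Passing a smaller size, for example `journal_space_mb 3 32`, shows how `-J` would reduce the overhead.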