Install Hadoop with HDP 2.2 on CentOS 6.5

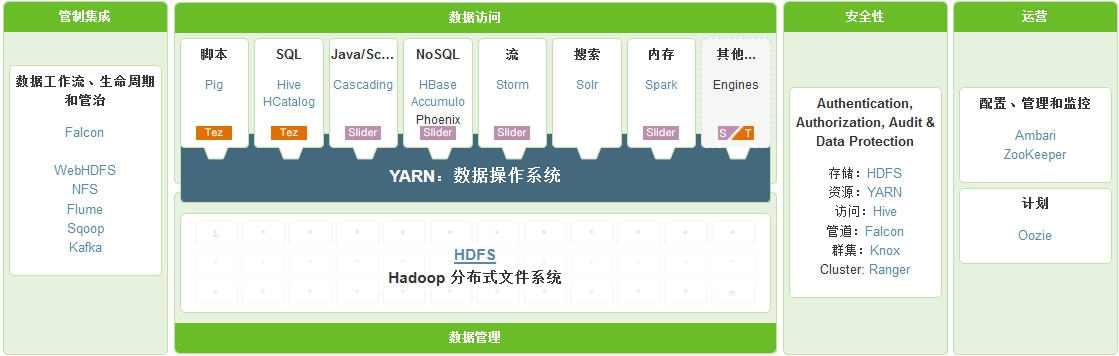

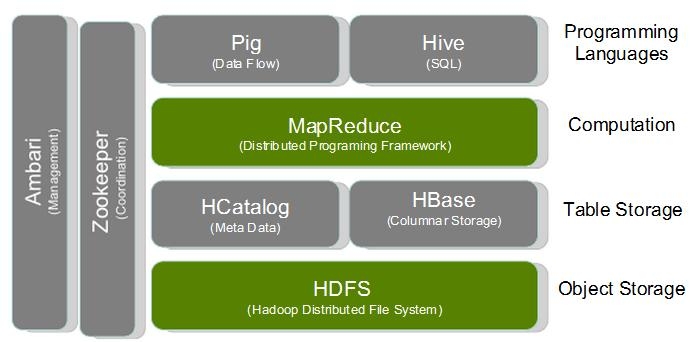

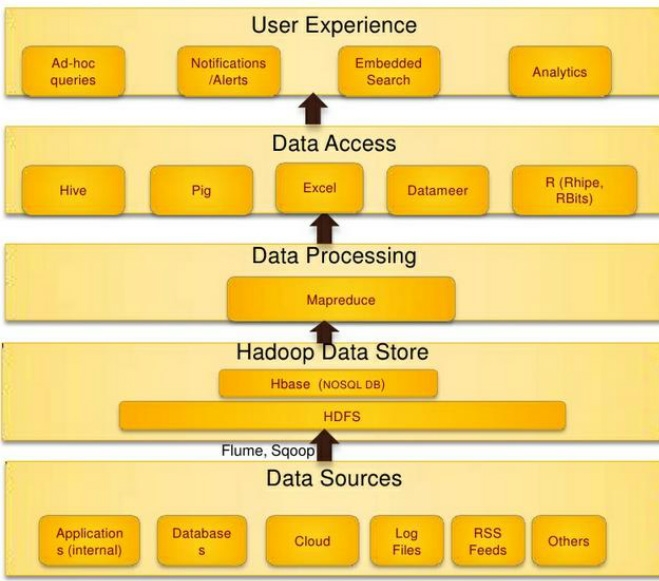

Hadoop Hortonworks

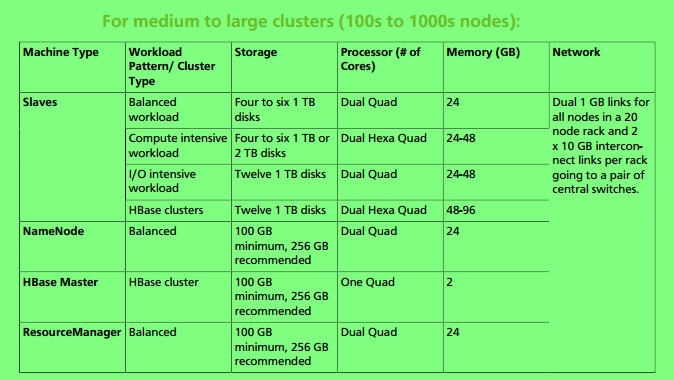

Masters -- HDFS NameNode, YARN ResourceManager (ApplicationsManager + Scheduler), and HBase Master

Slaves -- HDFS DataNodes, YARN NodeManagers, and HBase RegionServers (worker nodes, co-deployed)

ZooKeeper -- manages the HBase cluster

HA -- NameNode + Secondary NameNode, or NameNode HA (Active + Standby); the latter is recommended

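Partitioning considerations (do not use LVM):

/ (root) -- more than 100 GB

/tmp -- more than 100 GB (if a job fails, check the free space in this directory)

swap -- twice the system memory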

Master node:

RAID 10, dual Ethernet cards, dual power supplies, etc.

Slave node:

1. RAID is not necessary

2. HDFS partitions: do not use LVM

/etc/fstab -- ext3/ext4 defaults,noatime

Mount at /grid/[0-n] (one partition per disk)

HDP 2.2 and Ambari 1.7 repo

http://public-repo-1.hortonworks.com/HDP/centos6/2.x/GA/2.2.0.0/hdp.repo

http://public-repo-1.hortonworks.com/ambari/centos6/1.x/updates/1.7.0/ambari.repo

yum -y install yum-utils

yum repolist

reposync -r HDP-2.2.0.0

reposync -r HDP-UTILS-1.1.0.20

reposync -r Updates-ambari-1.7.0
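
The reposync calls above only work once yum knows about the two .repo files. A minimal sketch of the whole step, assuming the files belong in /etc/yum.repos.d. The helper names run and mirror_hdp_repos, the DRY_RUN switch, and the destination directory argument are hypothetical and not part of HDP or Ambari:

```shell
# Sketch: fetch the two .repo files, then mirror each repo ID with reposync.
# DRY_RUN and the destination directory are illustrative assumptions.
HDP_REPO_URL="http://public-repo-1.hortonworks.com/HDP/centos6/2.x/GA/2.2.0.0/hdp.repo"
AMBARI_REPO_URL="http://public-repo-1.hortonworks.com/ambari/centos6/1.x/updates/1.7.0/ambari.repo"
REPO_IDS="HDP-2.2.0.0 HDP-UTILS-1.1.0.20 Updates-ambari-1.7.0"

run() {
    # Print the command when DRY_RUN=1 (the default); execute it otherwise.
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

mirror_hdp_repos() {
    dest="$1"   # directory that will hold the mirrored packages
    run wget -q -O /etc/yum.repos.d/hdp.repo "$HDP_REPO_URL"
    run wget -q -O /etc/yum.repos.d/ambari.repo "$AMBARI_REPO_URL"
    for id in $REPO_IDS; do
        run reposync -r "$id" -p "$dest"
    done
}
```

Calling mirror_hdp_repos with a target directory prints the commands first; setting DRY_RUN=0 beforehand runs them for real.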

On all cluster nodes and the Ambari server:

cat << EOF > /etc/yum.repos.d/iso.repo

[iso]

name=iso

baseurl=http://mirrors.aliyun.com/centos/6.5/os/x86_64/

enabled=1

gpgcheck=0

EOF

Edit /etc/hosts and add the FQDN of every host, for example:

192.168.1.19 ambari.local

192.168.1.20 master1.local # HDFS NameNode

192.168.1.21 master2.local # YARN ResourceManager

192.168.1.22 slave1.local

192.168.1.23 slave2.local

192.168.1.24 slave3.local

192.168.1.25 client.local
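
Since the same entries have to be added on every machine, a small helper can keep the edit repeatable. This is only a sketch: the function name add_host and its optional file argument are hypothetical, and it adds a line only when the FQDN is not already present:

```shell
# Sketch: append "IP FQDN" to a hosts file only if the FQDN is not already listed.
add_host() {
    ip="$1"; fqdn="$2"; file="${3:-/etc/hosts}"
    # -w matches the name as a whole word; -F treats the dots literally.
    if ! grep -qwF "$fqdn" "$file" 2>/dev/null; then
        echo "$ip $fqdn" >> "$file"
    fi
}
```

For example, add_host 192.168.1.20 master1.local can be run any number of times and leaves a single entry behind.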

Edit /etc/sysconfig/network and set HOSTNAME to the host's FQDN

yum -y install ntp

service ntpd start; chkconfig ntpd on

Disable the firewall and SELinux:

service iptables stop

chkconfig iptables off; chkconfig ip6tables off

setenforce 0

sed -i 's,SELINUX=enforcing,SELINUX=disabled,g' /etc/selinux/config

yum -y install openssh-clients wget unzip

rpm -Uvh http://mirrors.aliyun.com/centos/6.5/updates/x86_64/Packages/openssl-1.0.1e-16.el6_5.15.x86_64.rpm

rpm -ivh jdk-7u67-linux-x64.rpm

echo 'export JAVA_HOME=/usr/java/default' >> /etc/profile

echo 'export PATH=$JAVA_HOME/bin:$PATH' >> /etc/profile

source /etc/profile

On the cluster nodes only:

Disable IPv6 and tune kernel parameters:

echo "net.ipv6.conf.all.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "vm.swappiness = 0" >> /etc/sysctl.conf

echo 'net.ipv4.tcp_retries2 = 2' >> /etc/sysctl.conf

echo 'vm.overcommit_memory = 1' >> /etc/sysctl.conf

echo "fs.file-max = 6815744" >> /etc/sysctl.conf

echo "fs.aio-max-nr = 1048576" >> /etc/sysctl.conf

echo "net.core.rmem_default = 262144" >> /etc/sysctl.conf

echo "net.core.wmem_default = 262144" >> /etc/sysctl.conf

echo "net.core.rmem_max = 16777216" >> /etc/sysctl.conf

echo "net.core.wmem_max = 16777216" >> /etc/sysctl.conf

echo "net.ipv4.tcp_rmem = 4096 262144 16777216" >> /etc/sysctl.conf

echo "net.ipv4.tcp_wmem = 4096 262144 16777216" >> /etc/sysctl.conf

Only on the ResourceManager and JobHistory Server hosts:

echo "net.core.somaxconn = 1000" >> /etc/sysctl.conf

sysctl -p
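
Appending with echo leaves duplicate keys in /etc/sysctl.conf if the steps are run twice. One possible way around that, as a sketch: the set_sysctl helper below is hypothetical and relies on GNU sed's -i option, which CentOS provides. It replaces an existing entry for the key and appends only when the key is missing:

```shell
# Sketch: set "key = value" in a sysctl-style file, replacing an existing entry
# for the same key instead of appending a duplicate.
set_sysctl() {
    key="$1"; value="$2"; file="${3:-/etc/sysctl.conf}"
    touch "$file"
    # Escape dots so the key is matched literally in the regular expression.
    pattern="^[[:space:]]*$(printf '%s' "$key" | sed 's/\./\\./g')[[:space:]]*="
    if grep -q "$pattern" "$file"; then
        sed -i "s|${pattern}.*|${key} = ${value}|" "$file"
    else
        echo "${key} = ${value}" >> "$file"
    fi
}
```

For example, set_sysctl vm.swappiness 0 followed by sysctl -p has the same effect as the echo line above, but can be repeated safely.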

vi /etc/security/limits.conf

* soft core unlimited

* hard core unlimited

* soft nofile 65536

* hard nofile 65536

* soft nproc unlimited

* hard nproc unlimited

* soft memlock unlimited

* hard memlock unlimited

echo "echo never > /sys/kernel/mm/redhat_transparent_hugepage/enabled" >> /etc/rc.local

echo "echo never > /sys/kernel/mm/redhat_transparent_hugepage/defrag" >> /etc/rc.local

echo "echo no > /sys/kernel/mm/redhat_transparent_hugepage/khugepaged/defrag" >> /etc/rc.local
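
The kernel marks the active setting in these control files with square brackets, so the change can be checked after the reboot. A minimal sketch, where the helper name thp_active is hypothetical:

```shell
# Sketch: print the active (bracketed) value from a transparent hugepage control file.
thp_active() {
    sed -n 's/.*\[\([^]]*\)].*/\1/p' "$1"
}
```

Running thp_active /sys/kernel/mm/redhat_transparent_hugepage/enabled should print never once the rc.local lines have taken effect.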

vi /etc/grub.conf

Append elevator=deadline (without quotes) to the end of the kernel line

Reboot for the changes to take effect

On the Ambari server only:

ssh-keygen -q -t rsa -f ~/.ssh/id_rsa -C '' -N ''

vi ~/.ssh/config

StrictHostKeyChecking no

ssh-copy-id master1.local

ssh-copy-id master2.local

ssh-copy-id slave1.local

ssh-copy-id slave2.local

ssh-copy-id slave3.local
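
With more nodes the repeated ssh-copy-id lines become a loop. A sketch under the assumption that the host list matches /etc/hosts. The names CLUSTER_HOSTS, copy_keys, and the SSH_COPY_ID override are hypothetical:

```shell
# Sketch: copy the Ambari server's public key to every cluster node.
# SSH_COPY_ID can be overridden (for example with "echo") to preview the calls.
CLUSTER_HOSTS="master1.local master2.local slave1.local slave2.local slave3.local"

copy_keys() {
    # Use the hosts given as the first argument, or fall back to CLUSTER_HOSTS.
    for host in ${1:-$CLUSTER_HOSTS}; do
        "${SSH_COPY_ID:-ssh-copy-id}" "$host" || echo "failed: $host" >&2
    done
}
```

Calling copy_keys without arguments covers the five nodes listed above; a failure on one host is reported and the loop continues.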

yum -y install mysql-server httpd

vi /etc/my.cnf

[mysqld]

default_storage_engine=InnoDB

innodb_file_per_table=1

service mysqld start; chkconfig mysqld on

mysql_secure_installation

vi /etc/yum.repos.d/ambari.repo

[ambari]

name=ambari

baseurl=http://192.168.1.19/ambari

enabled=1

gpgcheck=0

mkdir /hdp; mount /dev/cdrom /hdp

ln -s /hdp/ambari/ /var/www/html/ambari

ln -s /hdp/hdp /var/www/html/hdp

service httpd start; chkconfig httpd on

Create the Ambari, Hive, and Oozie databases:

yum -y install ambari-server

mysql -uroot -p

create database ambari;

GRANT ALL PRIVILEGES ON *.* TO 'ambari'@'localhost' IDENTIFIED BY 'ambari' WITH GRANT OPTION;

GRANT ALL PRIVILEGES ON *.* TO 'ambari'@'%' IDENTIFIED BY 'ambari' WITH GRANT OPTION;

flush privileges;

use ambari;

source /var/lib/ambari-server/resources/Ambari-DDL-MySQL-CREATE.sql;

create database hive;

GRANT ALL PRIVILEGES ON *.* TO 'hive'@'localhost' IDENTIFIED BY 'hive' WITH GRANT OPTION;

GRANT ALL PRIVILEGES ON *.* TO 'hive'@'%' IDENTIFIED BY 'hive' WITH GRANT OPTION;

flush privileges;

create database oozie;

GRANT ALL PRIVILEGES ON *.* TO 'oozie'@'localhost' IDENTIFIED BY 'oozie' WITH GRANT OPTION;

GRANT ALL PRIVILEGES ON *.* TO 'oozie'@'%' IDENTIFIED BY 'oozie' WITH GRANT OPTION;

flush privileges;
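
The three databases follow one pattern, so the statements can be generated instead of typed. A sketch only: the function name service_db_sql is hypothetical, the password defaults to the account name as in the statements above, and IF NOT EXISTS is added so the output can be replayed:

```shell
# Sketch: print the CREATE DATABASE and GRANT statements for one service account.
service_db_sql() {
    name="$1"; password="${2:-$1}"
    cat <<EOF
CREATE DATABASE IF NOT EXISTS ${name};
GRANT ALL PRIVILEGES ON *.* TO '${name}'@'localhost' IDENTIFIED BY '${password}' WITH GRANT OPTION;
GRANT ALL PRIVILEGES ON *.* TO '${name}'@'%' IDENTIFIED BY '${password}' WITH GRANT OPTION;
FLUSH PRIVILEGES;
EOF
}
```

The output can be piped straight into the client, for example service_db_sql oozie | mysql -uroot -p. The Ambari schema still has to be loaded separately with the source command shown above.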

Install the MySQL JDBC connector:

tar zxf mysql-connector-java-5.1.33.tar.gz

mkdir -p /usr/share/java

cp mysql-connector-java-5.1.33/mysql-connector-java-5.1.33-bin.jar /usr/share/java/mysql-connector-java.jar

ambari-server setup --jdbc-db=mysql --jdbc-driver=/usr/share/java/mysql-connector-java.jar

ambari-server setup -j /usr/java/default

Enter advanced database configuration [y/n] (n)? y

Choose MySQL by entering 3

ambari-server start

http://{your.ambari.server}:8080

default user name/password: admin/admin

Verify the installation with the Pi Estimator:

sudo -u hdfs hadoop jar /usr/hdp/version-number/hadoop-mapreduce/hadoop-mapreduce-examples.jar pi 10 1000
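
The version-number part of the path depends on the installed HDP build. A sketch that finds the jar with a glob instead, assuming the layout shown in the command above. The helper name find_examples_jar and its optional root argument are hypothetical:

```shell
# Sketch: locate the MapReduce examples jar without typing the version directory.
find_examples_jar() {
    root="${1:-/usr/hdp}"
    # The glob stands in for the version-number directory; take the first match.
    for jar in "$root"/*/hadoop-mapreduce/hadoop-mapreduce-examples.jar; do
        if [ -f "$jar" ]; then
            echo "$jar"
            return 0
        fi
    done
    echo "no examples jar under $root" >&2
    return 1
}
```

The test job can then be started with sudo -u hdfs hadoop jar "$(find_examples_jar)" pi 10 1000.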

Correct shutdown procedure:

1. Stop all services in the Ambari Web UI (if the stop request gets no response, run service ambari-server restart and try again)

2. Power off the hosts

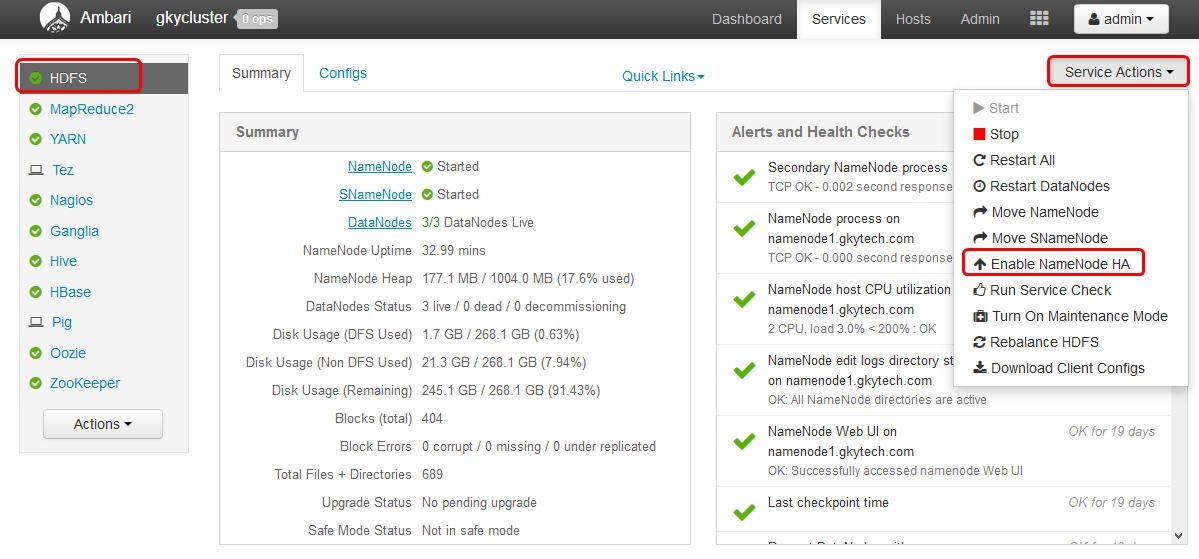

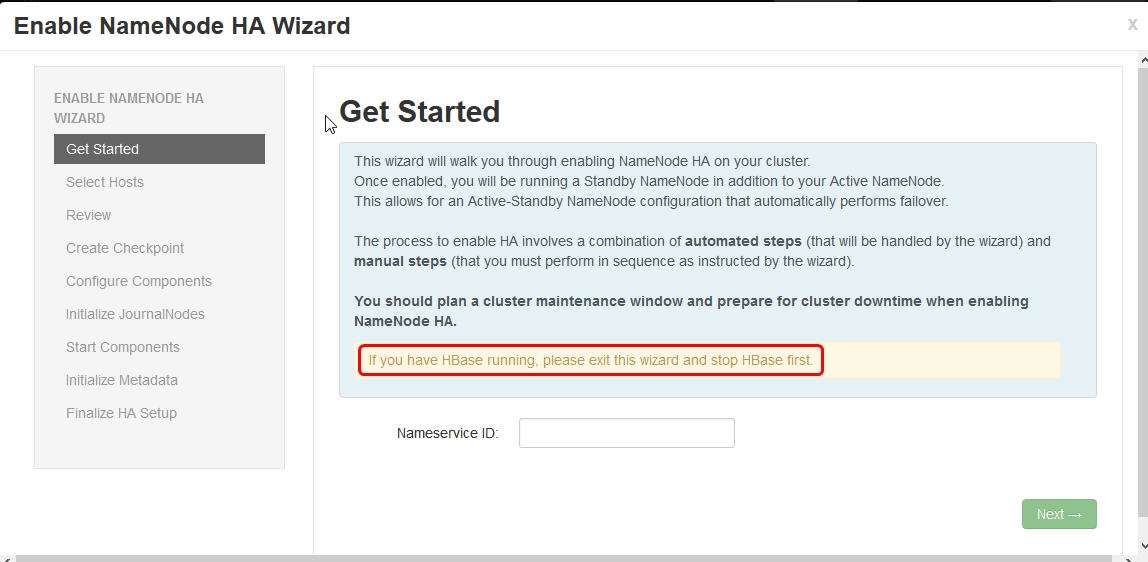

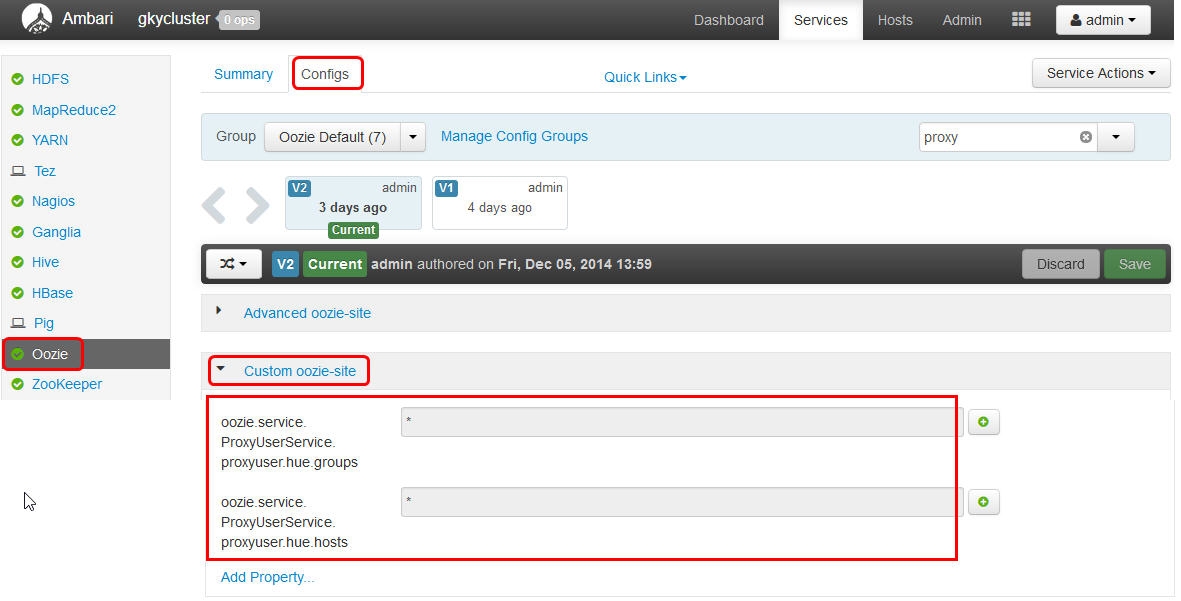

Enable HA for NameNode and ResourceManager

Hue installation:

Hue is installed on the client node:

The Hive client configuration (hive-site.xml file) needs to be populated on the host to run Hue. In the beeswax section of hue.ini configure:

hive_conf_dir=/etc/hive/conf

Configure HDP

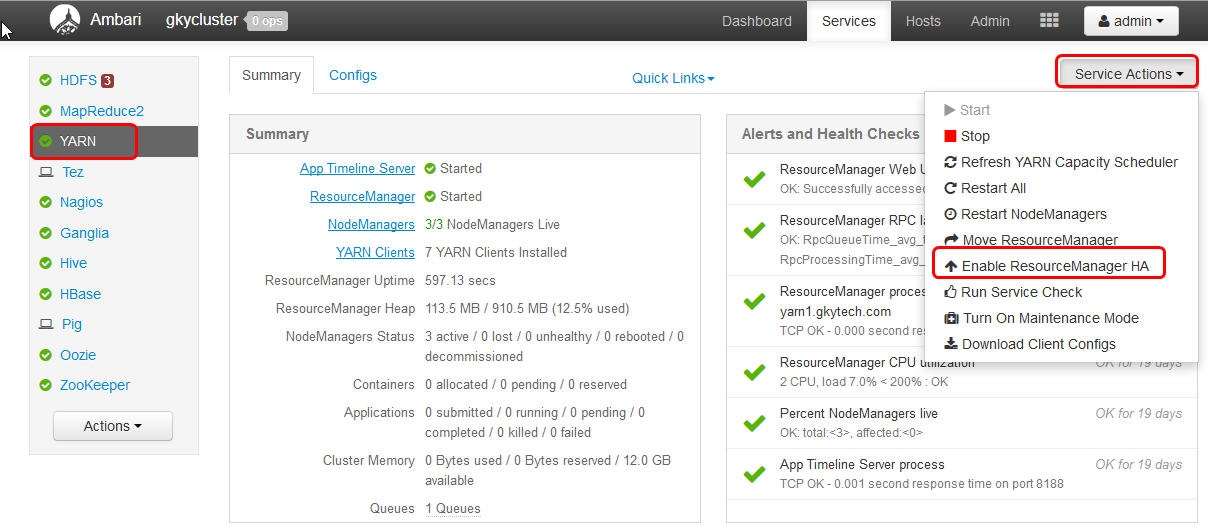

1. Modify the core-site.xml file

2. Modify the webhcat-site.xml file

3. Modify the oozie-site.xml file

yum -y install hue

vi /etc/hue/conf/hue.ini

[desktop]

time_zone=Asia/Shanghai

[hadoop]

[[hdfs_clusters]]

[[[default]]]

fs_defaultfs=hdfs://fqdn.namenode.host:8020

webhdfs_url=http://fqdn.namenode.host:50070/webhdfs/v1/

[[yarn_clusters]]

[[[default]]]

resourcemanager_api_url=http://fqdn.resourcemanager.host:8088

resourcemanager_rpc_url=http://fqdn.resourcemanager.host:8050

proxy_api_url=http://fqdn.resourcemanager.host:8088

history_server_api_url=http://fqdn.historyserver.host:19888

node_manager_api_url=http://fqdn.resourcemanager.host:8042

[liboozie]

oozie_url=http://fqdn.oozieserver.host:11000/oozie

[beeswax]

hive_server_host=fqdn.hiveserver.host

[hcatalog]

templeton_url=http://fqdn.webhcatserver.host:50111/templeton/v1/

service hue start

http://hue.server:8000

Configuring Hue for MySQL Database

mysql -uroot -p

create database hue;

GRANT ALL PRIVILEGES ON *.* TO 'hue'@'localhost' IDENTIFIED BY 'hue' WITH GRANT OPTION;

GRANT ALL PRIVILEGES ON *.* TO 'hue'@'%' IDENTIFIED BY 'hue' WITH GRANT OPTION;

flush privileges;

service hue stop

vi /etc/hue/conf/hue.ini

[[database]]

engine=mysql

host=fqdn.mysqlserver.host

port=3306

user=hue

password=hue

name=hue

/usr/lib/hue/build/env/bin/hue syncdb --noinput

service hue start

The following applies only to an installation from the Hadoop tarball:

Disable the firewall and SELinux

rpm -ivh jdk-7u67-linux-x64.rpm

yum -y install wget rsync unzip openssh-clients

ssh-keygen -q -t rsa -f ~/.ssh/id_rsa -C '' -N ''

vi ~/.ssh/config

StrictHostKeyChecking no

ssh-copy-id localhost

cd /usr/local/

tar xvf /root/hadoop-2.5.1.tar.gz

ln -s hadoop-2.5.1 hadoop

vi ~/.bashrc

export JAVA_HOME=/usr/java/default

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin

. ~/.bashrc

vi $HADOOP_HOME/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/java/default

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/usr/local/hadoop/etc/hadoop"}

Web interface ports:

hadoop NameNode: 50070

hadoop Secondary NameNode: 50090

hadoop yarn ResourceManager: 8088

hadoop yarn NodeManager: 8042

hadoop MapReduce JobHistory Server: 19888
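
For a single-host tarball setup the ports above map directly to URLs. A small sketch that prints them for a given host. The function name ui_urls is hypothetical, and localhost is assumed when no host is given:

```shell
# Sketch: print the web UI URL of each daemon from the default ports listed above.
ui_urls() {
    host="${1:-localhost}"
    for entry in NameNode:50070 SecondaryNameNode:50090 ResourceManager:8088 \
                 NodeManager:8042 JobHistoryServer:19888; do
        # Text before the colon is the daemon name, text after it is the port.
        echo "${entry%%:*} http://${host}:${entry##*:}"
    done
}
```

Each printed URL can be opened in a browser to confirm that the corresponding daemon is up.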