MySQL MMM

本文转载:http://diudiu.blog.51cto.com/6371183/1585907

一、MMM简介:

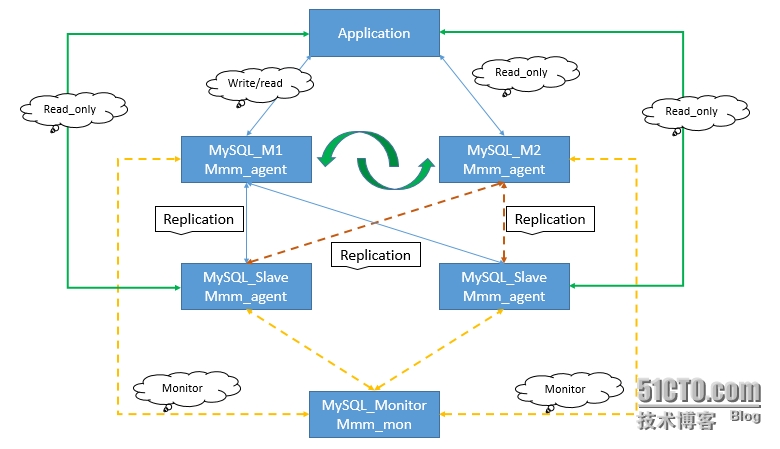

MMM即Master-Master Replication Manager for MySQL(mysql主主复制管理器)关于mysql主主复制配置的监控、故障转移和管理的一套可伸缩的脚本套件(在任何时候只有一个节点可以被写入),这个套件也能对居于标准的主从配置的任意数量的从服务器进行读负载均衡,所以你可以用它来在一组居于复制的服务器启动虚拟ip,除此之外,它还有实现数据备份、节点之间重新同步功能的脚本。

MySQL本身没有提供replication failover的解决方案,通过MMM方案能实现服务器的故障转移,从而实现mysql的高可用。

MMM项目来自 Google:http://code.google.com/p/mysql-master-master

官方网站为:http://mysql-mmm.org

Mmm主要功能由下面三个脚本提供

mmm_mond 负责所有的监控工作的监控守护进程,决定节点的移除等等

mmm_agentd 运行在mysql服务器上的代理守护进程,通过简单远程服务集提供给监控节点

mmm_control 通过命令行管理mmm_mond进程

二、mysql-mmm架构的搭建

| 编号 | 名称 | 主机名 | IP |

| 1 | 监控服务器 | monitor | 172.16.100.129 |

| 2 | MySQL_M1 | M1 | 172.16.100.100 |

| 3 | MySQL_M2 | M2 | 172.16.100.130 |

| 4 | MySQl_Slave | Salve1 | 172.16.100.131 |

| 5 | Vip-write | 172.16.100.252 | |

| 6 | Vip-read | 172.16.100.251 | |

配置集群确保以下信息同步:

双击互信:

Monitor:

|

1

2

|

# sed -i 's@\(HOSTNAME=\).*@\1monitor@g'/etc/sysconfig/network

# hostname monitor

|

M1:

|

1

2

|

# sed -i 's@\(HOSTNAME=\).*@\1M1@g'/etc/sysconfig/network

# hostname M1

|

M2:

|

1

2

|

# sed -i 's@\(HOSTNAME=\).*@\1M2@g'/etc/sysconfig/network

# hostname M2

|

Salve1:

|

1

2

|

# sed -i 's@\(HOSTNAME=\).*@\1Slave1@g'/etc/sysconfig/network

# hostname Slave1

|

Host文件如下:

Monitor:

|

1

2

3

4

5

|

# cat /etc/hosts | grep ^172

172.16.100.129

monitor

172.16.100.100

M1

172.16.100.130

M2

172.16.100.131

Slave

|

|

1

|

# ssh-keygen -t rsa

|

秘钥的拷贝:

|

1

2

3

4

5

6

|

# for

i in M1 M2 Slave1; do

ssh-copy-id -i~/.ssh/id_rsa.pub root@$i;done

# for

i in M1 M2 Slave1; do

ssh $i'date';done

Sun Nov 16

15:36:50

CST 2014

Sun Nov 16

15:36:50

CST 2014

Sun Nov 16

15:36:50

CST 2014

[root@localhost

~]#

|

能显示以上说明双击互信配置成功

|

1

|

# for

i in M1 M2 Slave1; do

scp /etc/hosts root@$i:/etc/;done

|

查看HOSTS文件是否已经同步:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

# for

i in M1 M2 Slave1;do

ssh $i'cat /etc/hosts | grep ^172 ';done

172.16.100.129

monitor

172.16.100.100

M1

172.16.100.130

M2

172.16.100.131

Slave1

172.16.100.129

monitor

172.16.100.100

M1

172.16.100.130

M2

172.16.100.131

Slave1

172.16.100.129

monitor

172.16.100.100

M1

172.16.100.130

M2

172.16.100.131

Slave1

[root@localhost

~]#

|

时间同步:

配置monitor为NTP服务器:

|

1

2

3

|

# yum install ntp

# ntpdate ntp.api.bz

# /etc/init.d/ntpd start

|

分别在MySQL服务器上执行:

|

1

|

# ntpdate 172.16.100.129

|

安装MySQL

配置好M1和M2的主主同步,M1和salve1的主从同步

主主同步:

二进制包安装:

复制二进制的安装包到各个节点:

|

1

2

3

4

5

6

|

# for

i in M1 M2 Slave1;do

scpmysql-5.5.40-linux2.6-x86_64.tar.gz root@$i:/tmp/;done

mysql-5.5.40-linux2.6-x86_64.tar.gz 100% 176MB 8.4MB/s 00:21

mysql-5.5.40-linux2.6-x86_64.tar.gz 100% 176MB 8.8MB/s 00:20

mysql-5.5.40-linux2.6-x86_64.tar.gz 100% 176MB 9.3MB/s 00:19

[root@localhost

~]#

# for

i in M1 M2 Slave1;do

ssh $i 'tar zxvf/tmp/mysql-5.5.40-linux2.6-x86_64.tar.gz -C /usr/local';done

|

创建MySQL账户:

|

1

2

3

4

5

6

7

8

9

10

11

|

# for

i in M1 M2 Slave1;do

ssh $i 'groupadd-g 3306 mysql';done

# for

i in M1 M2 Slave1;do

ssh $i 'useradd-u 3306 -g mysql -s /sbin/nologin -M mysq1';done

# for

i in M1 M2 Slave1;do

ssh $i 'idmysq';done

uid=3306(mysq) gid=3306(mysql)groups=3306(mysql)

uid=3306(mysq) gid=3306(mysql)groups=3306(mysql)

uid=3306(mysq) gid=3306(mysql)groups=3306(mysql)

[root@localhost

~]#

# for

i in M1 M2 Slave1;do

ssh $i 'cd/usr/local/;ln -s mysql-5.5.40-linux2.6-x86_64 mysql';done

# for

i in M1 M2 Slave1;do

ssh $i 'cd/usr/local/mysql;chown root:mysql -R ./';done

# for

i in M1 M2 Slave1;do

ssh $i 'cd/usr/local/mysql;\cp support-files/my-huge.cnf /etc/my.cnf';done

# for

i in M1 M2 Slave1;do

ssh $i 'cd/usr/local/mysql;\cp support-files/mysql.server /etc/init.d/mysqld';done

|

判断脚本的执行权限:

|

1

2

3

4

5

|

# for

i in M1 M2 Slave1;do

ssh $i '[ -x/etc/init.d/mysqld ] && echo "ok" || echo"NO"';done

ok

ok

ok

[root@localhost

~]#

|

创建MySQL存储目录:

|

1

2

3

4

5

6

7

8

9

10

11

|

# for

i in M1 M2 Slave1;do

ssh $i 'mkdir-pv /data/mysqldata;chown -R mysql:mysql /data/';done

[root@localhost

~]#

# for

i in M1 M2 Slave1;do

ssh $i 'yuminstall libaio -y';done

# for

i in M1 M2 Slave1;do

ssh $i 'sed -i"37a\datadir = /data/mysqldata/" /etc/my.cnf ';done

# for

i in M1 M2 Slave1;do

ssh $i 'sed -i"38a\log-error = /data/mysqldata/mysql_error.log" /etc/my.cnf ';done

# for

i in M1 M2 Slave1;do

ssh $i 'sed -i"39a\pid-file = /data/mysqldata/mysql.pid" /etc/my.cnf ';done

# for

i in M1 M2 Slave1;do

ssh $i'chkconfig --add mysqld;chkconfig mysqld on;service mysqld start';done

Starting MySQL......... SUCCESS!

Starting MySQL........ SUCCESS!

Starting MySQL....... SUCCESS!

[root@localhost

~]#

|

MySQL服务已经安装好,下面进行主主同步:

M1:

|

1

2

3

4

|

mysql> GRANT REPLICATION SLAVE,replication client ON *.* TO 'repl'@'%'

IDENTIFIED BY 'repl';

mysql> GRANT REPLICATION CLIENT ON *.*TO 'mmm_monitor'@'%'

IDENTIFIED BY '123456';

mysql> GRANT SUPER,REPLICATIONCLIENT,PROCESS ON *.* TO 'mmm_agent'@'%'

IDENTIFIED BY '123456';

mysql> FLUSH PRIVILEGES;

|

M2:

|

1

2

3

4

|

mysql> GRANT REPLICATION SLAVE,replication client ON *.* TO 'repl'@'%'

IDENTIFIED BY 'repl';

mysql> GRANT REPLICATION CLIENT ON *.*TO 'mmm_monitor'@'%'

IDENTIFIED BY '123456';

mysql> GRANT SUPER,REPLICATIONCLIENT,PROCESS ON *.* TO 'mmm_agent'@'%'

IDENTIFIED BY '123456';

mysql> FLUSH PRIVILEGES;

|

Slave1:

|

1

2

3

|

mysql> GRANT REPLICATION CLIENT ON *.*TO 'mmm_monitor'@'%'

IDENTIFIED BY '123456';

mysql> GRANT SUPER,REPLICATIONCLIENT,PROCESS ON *.* TO 'mmm_agent'@'%'

IDENTIFIED BY '123456';

mysql> FLUSH PRIVILEGES;

|

备注:

由于MMM集群套件对数据库的读写进行了严格的控制,根据MMM管理机制,需要首先在所有的MySQL服务器上,这是read_only参数,也就是在/etc/my.cnf的mysqld组中进行配置:

|

1

|

read_only=1

|

此参数对所有的非临时表进行控制,以下两个例外:

对replication threads例外

对拥有超级权限的账户例外

|

1

2

3

4

5

6

7

8

9

|

# for

i in M1 M2 Slave1;do

ssh $i 'sed -i"40a\read_only = 1" /etc/my.cnf';done

# for

i in M1 M2 Slave1;do

ssh $i 'servicemysqld restart';done

Shutting down MySQL.. SUCCESS!

Starting MySQL...... SUCCESS!

Shutting down MySQL.. SUCCESS!

Starting MySQL....... SUCCESS!

Shutting down MySQL.. SUCCESS!

Starting MySQL....... SUCCESS!

[root@localhost

~]#

|

M1--->M2的复制

M1上的配置如下:

|

1

2

3

4

5

6

7

|

log-bin=mysql-bin

server-id = 1

relay-log = mysql-relay-bin

replicate-wild-ignore-table=mysql.%

replicate-wild-ignore-table=test.%

replicate-wild-ignore-table=information_schema.%

# Service mysqld restart

|

M2配置:

|

1

2

3

4

5

6

7

|

log-bin=mysql-bin

server-id = 2

relay-log = mysql-relay-bin

replicate-wild-ignore-table=mysql.%

replicate-wild-ignore-table=test.%

replicate-wild-ignore-table=information_schema.%

# Service mysqld restart

|

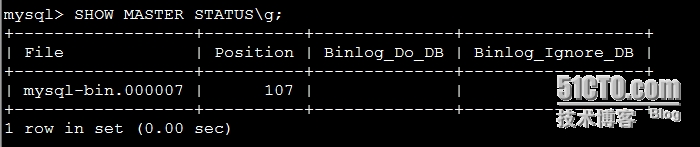

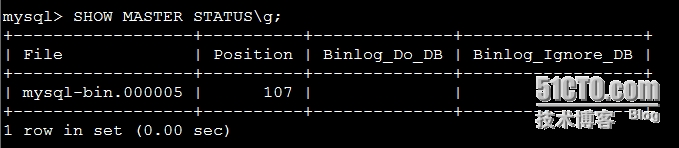

M1信息:

M2上操作:

M2---->M1的复制:

M2的复制信息:

M1上操作:

|

1

2

3

4

5

6

|

mysql> CHANGE MASTER TO\

-> master_host='172.16.100.130',

-> master_user='repl',

-> master_password='repl',

-> master_log_file='mysql-bin.000005',

->master_log_pos=107;

|

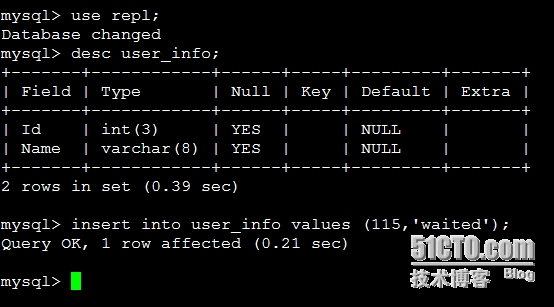

验证主主同步复制:

|

1

2

3

4

5

6

7

8

9

10

|

mysql> CREATE DATABASE repl;

Query OK, 1

row affected (0.02

sec)

mysql> use repl;

Database changed

mysql> create table user_info (Idint(3),Name varchar(8));

Query OK, 0

rows affected (0.23

sec)

mysql> insert into user_info values(100,'James');

Query OK, 1

row affected (0.09

sec)

mysql>

|

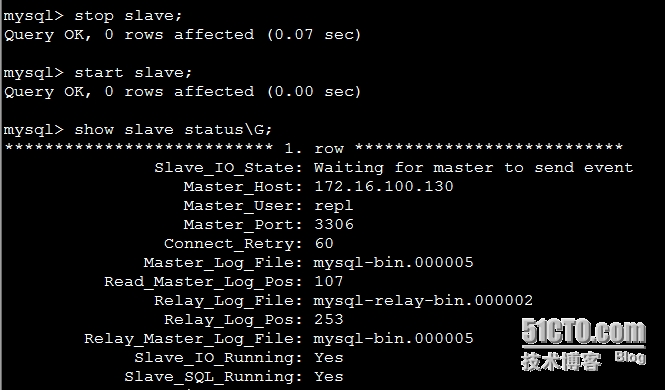

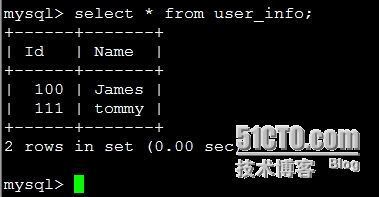

M2上操作:

M1上查看:

已经同步成功

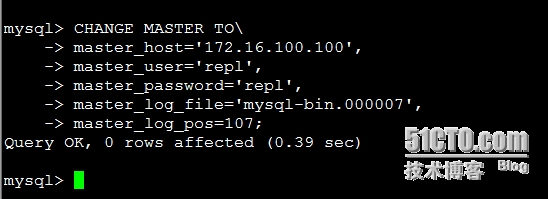

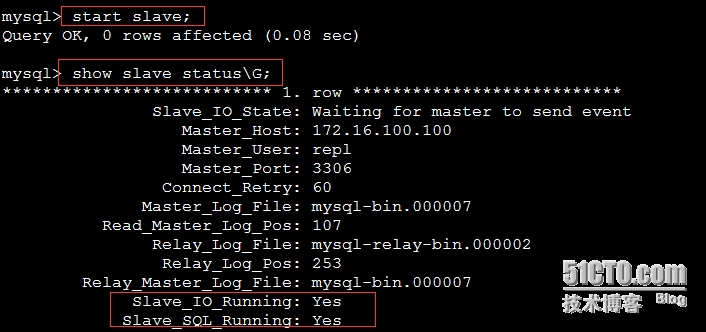

M1-->Slave1的主从同步:

Slave1上的配置:

|

1

2

3

4

5

6

7

|

log-bin=mysql-bin

server-id = 11

relay-log = mysql-relay-bin

replicate-wild-ignore-table=mysql.%

replicate-wild-ignore-table=test.%

replicate-wild-ignore-table=information_schema.%

# service mysqld restart

|

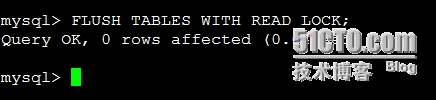

导出M1上的数据到Slave1上

不要退出此终端,开启两外一个终端,进行导出数据

|

1

2

|

# /usr/local/mysql/bin/mysqldump -uroot -prepl > /tmp/repl.sql

# scp /tmp/repl.sql root@Slave1:/tmp/

|

M1上执行

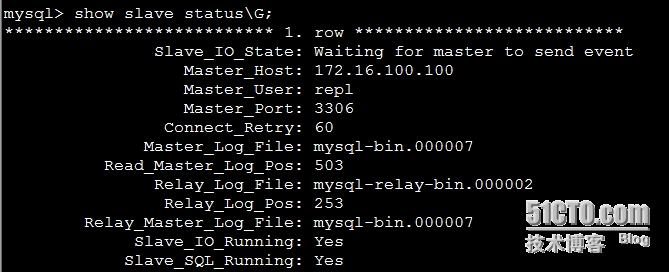

Slave1上进行数据还原和同步:

|

1

2

3

4

5

6

7

8

|

# /usr/local/mysql/bin/mysql -uroot -e"create database repl"

# /usr/local/mysql/bin/mysqldump -uroot -prepl < /tmp/repl.sql

mysql> CHANGE MASTER TO\

-> master_user='repl',

-> master_password='repl',

-> master_log_file='mysql-bin.000007',

-> master_log_pos=503,

->master_host='172.16.100.100';

|

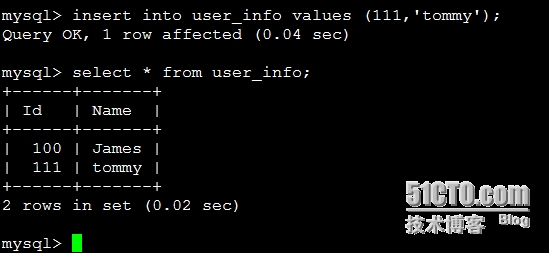

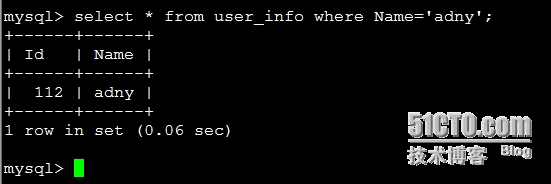

可以看到数据已经同步过来。

M1上插入数据:

|

1

2

|

mysql> insert into user_info values(112,'adny');

Query OK, 1

row affected (0.18

sec)

|

Slave1上进行查看

安装MySQL-MMM集群套件

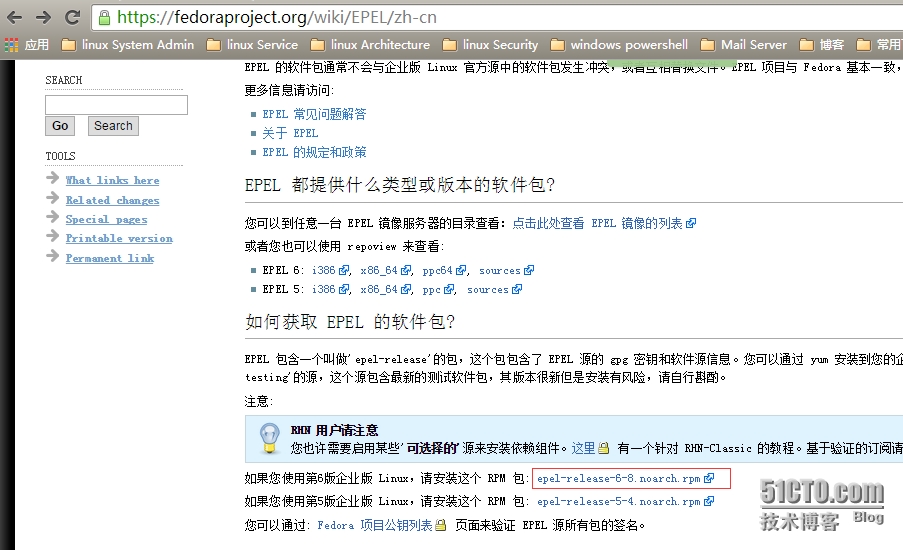

下载yum源:

批量执行下载:

|

1

2

|

# for

i in M1 M2 Slave1;do

ssh $i 'wget http://dl.fedoraproject.org/pub/epel/6/i386/epel-release-6-8.noarch.rpm';done

# for

i in M1 M2 Slave1 monitor;do

ssh $i'rpm -vih epel-release-6-8.noarch.rpm';done

|

所有节点批量安装:

|

1

|

# for

i in M1 M2 Slave1 monitor;do

ssh $i'yum install mysql-mmm* -y';done

|

安装完成之后,查看安装的位置:

|

1

2

3

4

|

# rpm -ql mysql-mmm-2.2.1

/etc/logrotate.d/mysql-mmm

/etc/mysql-mmm

/etc/mysql-mmm/mmm_common.conf

|

配置文件如下:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

|

# cp mmm_common.conf mmm_common.conf.default

# cat mmm_common.conf

active_master_role writer

<host default>

cluster_interface eth0

pid_path /var/run/mysql-mmm/mmm_agentd.pid

bin_path /usr/libexec/mysql-mmm/

replication_user repl #主从复制的账户

replication_password repl #主从复制的密码

agent_user mmm_agent #代理账号

agent_password 123456

#代理账号的密码

</host>

<host M1>

ip 172.16.100.100

mode master

peer M2

</host>

<host M2>

ip 172.16.100.130

mode master

peer M1

</host>

<host Slave1>

ip 172.16.100.131

mode slave

</host>

#<host db3>

# ip192.16.100.51

# modeslav

#</host>

<role writer>

hosts M1, M2 #能够作为写的服务器主机名

ips 172.16.100.252

#写的VIP地址

mode exclusive #排他模式,该模式下只有一个主机拥有

</role>

<role reader>

hosts M1, M2, Slave1 #作为读的服务器主机名

ips 172.16.100.251

#读的VIP地址

mode balanced #平衡模式

</role>

|

此配置文件四个节点的配置相同:

|

1

2

3

4

5

|

# for

i in M1 M2 Slave1;do

scp /etc/mysql-mmm/mmm_common.confroot@$i:/etc/mysql-mmm;done

mmm_common.conf 100% 810

0.8KB/s 00:00

mmm_common.conf 100% 810

0.8KB/s 00:00

mmm_common.conf 100% 810

0.8KB/s 00:00

[root@localhost

mysql-mmm]#

|

M1的mmm_agent.conf配置

|

1

2

3

4

5

6

7

8

9

|

# cat mmm_agent.conf | grep-v ^# | sed -e '/^$/d'

include mmm_common.conf

this

M1

[root@localhost

mysql-mmm]#

M2的mmm_agent.conf配置

# cat mmm_agent.conf | grep-v ^# | sed -e '/^$/d'

include mmm_common.conf

this

M2

[root@localhost

mysql-mmm]#

|

Slave1上的mmm_agent.conf配置

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

|

# cat mmm_agent.conf | grep -v ^# | awk 'NF>0'

include mmm_common.conf

this

Slave1

[root@localhost

mysql-mmm]#

Monitor的mmm_mon.conf配置

# cat mmm_mon.conf | grep -v "#"| awk 'NF>0'

include mmm_common.conf

<monitor>

ip 127.0.0.1

pid_path /var/run/mysql-mmm/mmm_mond.pid

bin_path /usr/libexec/mysql-mmm

status_path /var/lib/mysql-mmm/mmm_mond.status

ping_ips 172.16.100.100,172.16.100.130,172.16.100.131

#ping方式监控

auto_set_online 10

#发现节点丢失10秒钟后进行切换

</monitor>

<host default>

monitor_user mmm_monitor #监控账号

monitor_password 123456

#监控密码

</host>

debug 0

[root@localhost

mysql-mmm]#

|

至此MYSQL_,MMM已经配置完成,下面进行启动:

M1 M2 Slave1上一次启动:

|

1

|

/etc/init.d/mysql-mmm-agent start

|

完成之后在monitor上启动:

|

1

|

/etc/init.d/mysql-mmm-monitor start

|

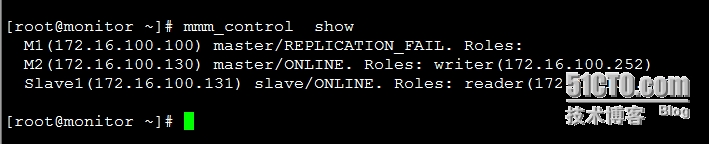

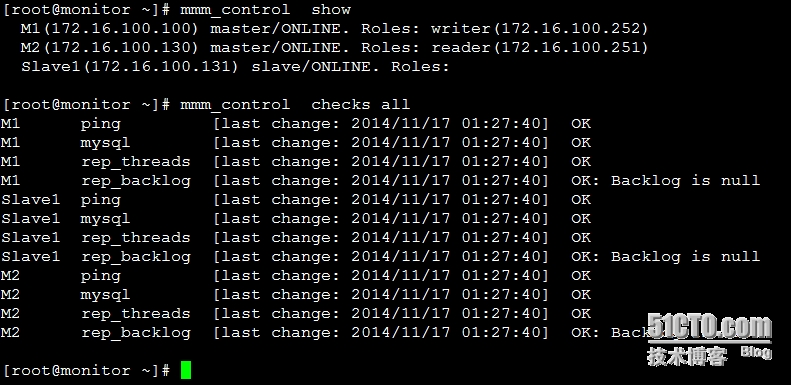

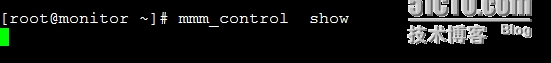

在monitor上查看集群的状态:

其中:M1负责写操作,M2负责读操作

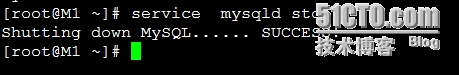

模拟宕机切换测试:

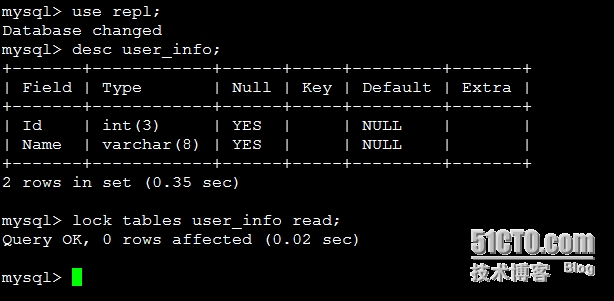

在M2上锁住user_info表然后,在M1上进行更新表操作:

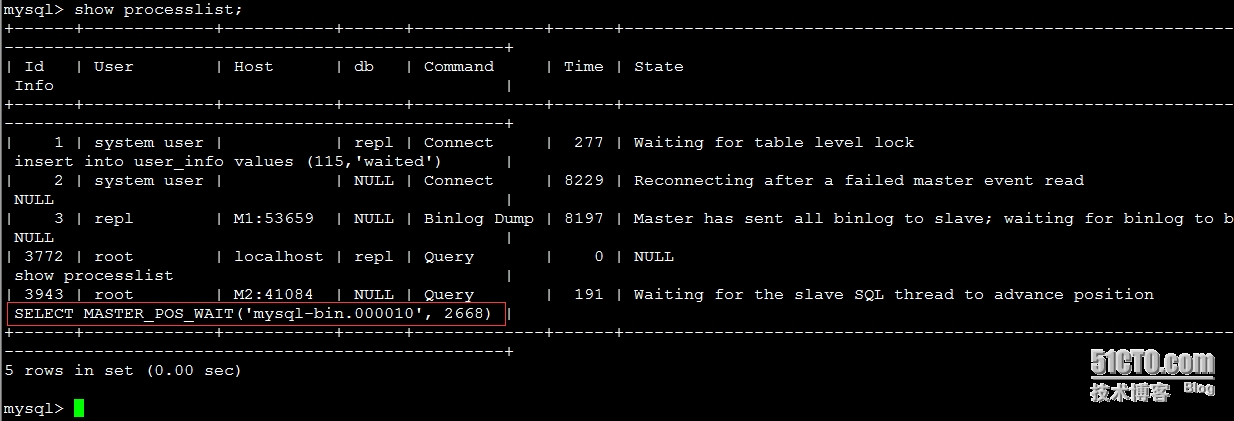

然后关闭M1的MySQL进程,此时VIP漂移到M2上之后,必须等到同步完成才可以切换,否则就在哪里等待,在此过程中是用的是如图所示的函数:

Master_pos_wait必须等到中继日志执行完成后,再释放锁,遇到这种问题,如果延时很大,要切换,只能人工kil掉此进程,此时S1会自动指向M2,自动执行change master命令进行同步复制。

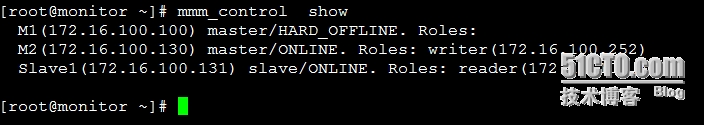

此时monitor的信息无法显示,等待释放锁,然后进行切换:

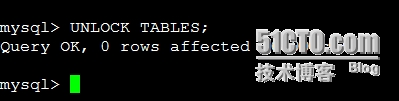

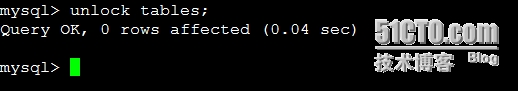

M2解锁:

Monitor:

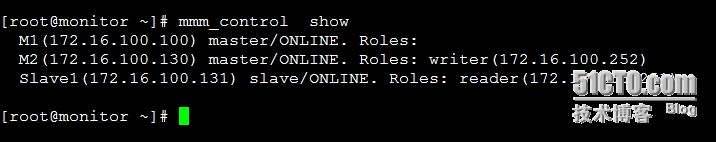

重新启动M1

VIP还在M2上,不会因为M1修复好,切换回去。

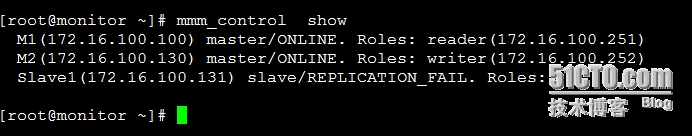

Slave切换:

S1上执行stop slave,VIP就会漂移到M1上

如果在M1上执行stop slave,VIP就会漂移到M2上,之后如果在slave1上执行start slave,此时VIP又会回到slave1上