MongoDB Sharding Study Notes: Hands-on Operations

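This article uses MongoDB 2.4.6. All of the tests below were run on a single machine; in production, each component should be deployed on its own hosts.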

1. Add the host entries

Edit /etc/hosts (`vim /etc/hosts`) and add the following entries:

```
127.0.0.1 mongo-sharding-router1
127.0.0.1 mongo-sharding-router2
127.0.0.1 mongo-sharding-config1
127.0.0.1 mongo-sharding-config2
127.0.0.1 mongo-sharding-config3
127.0.0.1 mongo-sharding-replica1
127.0.0.1 mongo-sharding-replica2
127.0.0.1 mongo-sharding-replica3
127.0.0.1 mongo-sharding-replica4
127.0.0.1 mongo-sharding-replica5
127.0.0.1 mongo-sharding-replica6
```
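If you would rather not type the eleven entries by hand, they can be generated. A minimal Python sketch, assuming (as in this single-machine test) that every hostname resolves to 127.0.0.1; the list and function names are illustrative and not part of any MongoDB tool:

```python
# Hostnames used throughout this walkthrough. All of them point at one IP
# because the test cluster runs on a single machine (assumption of this sketch).
ROUTERS = [f"mongo-sharding-router{i}" for i in (1, 2)]
CONFIG_SERVERS = [f"mongo-sharding-config{i}" for i in (1, 2, 3)]
REPLICAS = [f"mongo-sharding-replica{i}" for i in range(1, 7)]


def hosts_entries(ip="127.0.0.1"):
    """Return one /etc/hosts line per hostname, all pointing at `ip`."""
    return [f"{ip} {name}" for name in ROUTERS + CONFIG_SERVERS + REPLICAS]


if __name__ == "__main__":
    print("\n".join(hosts_entries()))
```

On a real multi-host deployment each name would get its own IP instead; the output of the script (saved under a name of your choice) could then be appended with something like `python3 gen_hosts.py | sudo tee -a /etc/hosts`, where `gen_hosts.py` is a hypothetical file name.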

2. Configure two shards, each a three-member replica set

The corresponding hosts:

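| Component | IP | Hostname | Port | Role |
|---|---|---|---|---|
| shard1 | 127.0.0.1 | mongo-sharding-replica1 | 2811 | Primary |
| shard1 | 127.0.0.1 | mongo-sharding-replica2 | 2822 | Secondary |
| shard1 | 127.0.0.1 | mongo-sharding-replica3 | 2833 | Secondary |
| shard2 | 127.0.0.1 | mongo-sharding-replica4 | 3811 | Primary |
| shard2 | 127.0.0.1 | mongo-sharding-replica5 | 3822 | Secondary |
| shard2 | 127.0.0.1 | mongo-sharding-replica6 | 3833 | Secondary |
| router | 127.0.0.1 | mongo-sharding-router1 | 2911 | |
| router | 127.0.0.1 | mongo-sharding-router2 | 2922 | |
| config server | 127.0.0.1 | mongo-sharding-config1 | 2711 | |
| config server | 127.0.0.1 | mongo-sharding-config2 | 2722 | |
| config server | 127.0.0.1 | mongo-sharding-config3 | 2733 | |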
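The same topology can be captured as data, from which the two connection strings used later follow mechanically: the replica set seed passed to sh.addShard() in step 5 and the configdb value for mongos in step 4. A Python sketch; the dictionary layout and function names are assumptions made for illustration:

```python
# Topology from the table above: (hostname, port) pairs per component.
SHARDS = {
    "test_shard1": [("mongo-sharding-replica1", 2811),
                    ("mongo-sharding-replica2", 2822),
                    ("mongo-sharding-replica3", 2833)],
    "test_shard2": [("mongo-sharding-replica4", 3811),
                    ("mongo-sharding-replica5", 3822),
                    ("mongo-sharding-replica6", 3833)],
}
CONFIG_SERVERS = [("mongo-sharding-config1", 2711),
                  ("mongo-sharding-config2", 2722),
                  ("mongo-sharding-config3", 2733)]


def hostport(pair):
    host, port = pair
    return f"{host}:{port}"


def shard_seed(set_name):
    """'setName/host:port,...' seed string, the form accepted by sh.addShard()."""
    return set_name + "/" + ",".join(hostport(p) for p in SHARDS[set_name])


def configdb():
    """Comma-separated config server list for the mongos configdb option."""
    return ",".join(hostport(p) for p in CONFIG_SERVERS)


if __name__ == "__main__":
    print(configdb())
    for name in SHARDS:
        print(shard_seed(name))
```

In step 5 the article passes only one seed member (`test_shard1/mongo-sharding-replica1:2811`), and the sh.status() output there shows mongos still discovering all three members, so listing every member is optional.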

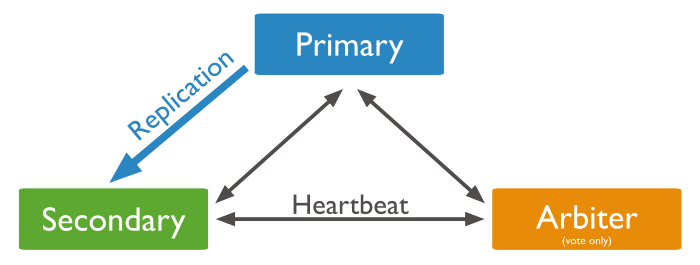

部署架构如下:

A. For each of mongo-sharding-replica1, mongo-sharding-replica2 and mongo-sharding-replica3, create a MongoDB configuration file and an init script, then start MongoDB.

For example, the configuration of mongo-sharding-replica1:

```
# cat /etc/mongod2811.conf
logpath=/data/app_data/mongodb/log2811/mongodb.log
logappend=true
fork=true
port=2811
dbpath=/data/app_data/mongodb/data2811/
pidfilepath=/data/app_data/mongodb/data2811/mongod.pid
maxConns=2048
nohttpinterface=true
directoryperdb=true
replSet=test_shard1
```
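The six shard members and three config servers differ only in port, replica set name and the configsvr flag, so their configuration files can be rendered from one template. A sketch assuming the directory layout used in this article (`log<port>/`, `data<port>/` for shard members, `configdb<port>/` for config servers); `mongod_conf` is a hypothetical helper:

```python
BASE = "/data/app_data/mongodb"  # directory layout assumed from this article


def mongod_conf(port, repl_set=None, configsvr=False, base=BASE):
    """Render an INI-style (MongoDB 2.4) mongod config file as a string."""
    data_dir = f"{base}/{'configdb' if configsvr else 'data'}{port}/"
    lines = [
        f"logpath={base}/log{port}/mongodb.log",
        "logappend=true",
        "fork=true",
        f"port={port}",
        f"dbpath={data_dir}",
        f"pidfilepath={data_dir}mongod.pid",
        "maxConns=2048",
        "nohttpinterface=true",
        "directoryperdb=true",
    ]
    if repl_set:
        lines.append(f"replSet={repl_set}")  # shard members only
    if configsvr:
        lines.append("configsvr=true")  # config servers only
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print shard1's first member; redirect the output to /etc/mongod2811.conf yourself.
    print(mongod_conf(2811, repl_set="test_shard1"), end="")
```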

/etc/init.d/mongod2811

```bash
#!/bin/bash
# mongod2811 - Startup script for the mongod instance on port 2811
# chkconfig: 35 85 15
# description: Mongo is a scalable, document-oriented database.
# processname: mongod
# config: /etc/mongod2811.conf
# pidfile: /data/app_data/mongodb/data2811/mongod.pid

. /etc/rc.d/init.d/functions

# things from mongod.conf get there by mongod reading it
# NOTE: if you change any OPTIONS here, you get what you pay for:
# this script assumes all options are in the config file.
CONFIGFILE="/etc/mongod2811.conf"
OPTIONS=" -f $CONFIGFILE"
SYSCONFIG="/etc/sysconfig/mongod"

# FIXME: 1.9.x has a --shutdown flag that parses the config file and
# shuts down the correct running pid, but that's unavailable in 1.8
# for now. This can go away when this script stops supporting 1.8.
DBPATH=`awk -F= '/^dbpath=/{print $2}' "$CONFIGFILE"`
PIDFILE=`awk -F= '/^pidfilepath=/{print $2}' "$CONFIGFILE"`

mongod=${MONGOD-/data/app_platform/mongodb/bin/mongod}
MONGO_USER=mongod
MONGO_GROUP=mongod
# One lock file per instance: a shared /var/lock/subsys/mongod would be
# removed as soon as any one of the instances on this host is stopped.
LOCKFILE=/var/lock/subsys/mongod2811

if [ -f "$SYSCONFIG" ]; then
    . "$SYSCONFIG"
fi

# Handle NUMA access to CPUs (SERVER-3574)
# This verifies the existence of numactl as well as testing that the command works
NUMACTL_ARGS="--interleave=all"
if which numactl >/dev/null 2>/dev/null && numactl $NUMACTL_ARGS ls / >/dev/null 2>/dev/null
then
    NUMACTL="numactl $NUMACTL_ARGS"
else
    NUMACTL=""
fi

start()
{
    echo -n $"Starting mongod: "
    daemon --user "$MONGO_USER" $NUMACTL $mongod $OPTIONS
    RETVAL=$?
    echo
    [ $RETVAL -eq 0 ] && touch "$LOCKFILE"
}

stop()
{
    echo -n $"Stopping mongod: "
    killproc -p "$PIDFILE" -d 300 $mongod
    RETVAL=$?
    echo
    [ $RETVAL -eq 0 ] && rm -f "$LOCKFILE"
}

restart () {
    stop
    start
}

ulimit -n 12000
RETVAL=0

case "$1" in
  start)
    start
    ;;
  stop)
    stop
    ;;
  restart|reload|force-reload)
    restart
    ;;
  condrestart)
    [ -f "$LOCKFILE" ] && restart || :
    ;;
  status)
    # Check this instance through its pid file, not any process named mongod
    status -p "$PIDFILE" $mongod
    RETVAL=$?
    ;;
  *)
    echo "Usage: $0 {start|stop|status|restart|reload|force-reload|condrestart}"
    RETVAL=1
esac

exit $RETVAL
```

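Note: the /data/app_data/mongodb/ directory must be owned by the user the mongod process runs as (MONGO_USER=mongod in the script above), e.g. `chown -R mongod:mongod /data/app_data/mongodb/`.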

```
# service mongod2811 start
Starting mongod: about to fork child process, waiting until server is ready for connections.
all output going to: /data/app_data/mongodb/log2811/mongodb.log
forked process: 4589
child process started successfully, parent exiting
[ OK ]
# service mongod2822 start
Starting mongod: about to fork child process, waiting until server is ready for connections.
all output going to: /data/app_data/mongodb/log2822/mongodb.log
forked process: 4656
child process started successfully, parent exiting
[ OK ]
# service mongod2833 start
Starting mongod: about to fork child process, waiting until server is ready for connections.
all output going to: /data/app_data/mongodb/log2833/mongodb.log
forked process: 4723
child process started successfully, parent exiting
[ OK ]
```

B. Initialize the replica set

```
# /data/app_platform/mongodb/bin/mongo --port 2811
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2811/test
> rs.status();
{
    "startupStatus" : 3,
    "info" : "run rs.initiate(...) if not yet done for the set",
    "ok" : 0,
    "errmsg" : "can't get local.system.replset config from self or any seed (EMPTYCONFIG)"
}
> rs.initiate("mongo-sharding-replica1:2811");
{
    "info2" : "no configuration explicitly specified -- making one",
    "me" : "mongo-sharding-replica1:2811",
    "info" : "Config now saved locally. Should come online in about a minute.",
    "ok" : 1
}
>
test_shard1:PRIMARY>
test_shard1:PRIMARY> rs.status();
{
    "set" : "test_shard1",
    "date" : ISODate("2015-01-07T10:16:51Z"),
    "myState" : 1,
    "members" : [
        {
            "_id" : 0,
            "name" : "mongo-sharding-replica1:2811",
            "health" : 1,
            "state" : 1,
            "stateStr" : "PRIMARY",
            "uptime" : 569,
            "optime" : Timestamp(1420625715, 1),
            "optimeDate" : ISODate("2015-01-07T10:15:15Z"),
            "self" : true
        }
    ],
    "ok" : 1
}
```

C. Add mongo-sharding-replica2 and mongo-sharding-replica3 to the replica set

```
test_shard1:PRIMARY> rs.add("mongo-sharding-replica2:2822");
{ "ok" : 1 }
test_shard1:PRIMARY> rs.add("mongo-sharding-replica3:2833");
{ "ok" : 1 }
test_shard1:PRIMARY> rs.config();
{
    "_id" : "test_shard1",
    "version" : 5,
    "members" : [
        {
            "_id" : 0,
            "host" : "mongo-sharding-replica1:2811"
        },
        {
            "_id" : 1,
            "host" : "mongo-sharding-replica2:2822"
        },
        {
            "_id" : 2,
            "host" : "mongo-sharding-replica3:2833"
        }
    ]
}
test_shard1:PRIMARY>
```
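Instead of calling rs.initiate() with a single host and then rs.add() twice, rs.initiate() also accepts a complete configuration document that lists every member. A Python sketch that builds such a document (the helper name is an assumption); its JSON output is also a valid JavaScript object literal, so it can be pasted into `rs.initiate(...)` in the mongo shell on the first member:

```python
import json


def replset_config(set_name, members):
    """Replica set config document with member _id values 0, 1, 2, ..."""
    return {
        "_id": set_name,
        "members": [{"_id": i, "host": f"{host}:{port}"}
                    for i, (host, port) in enumerate(members)],
    }


cfg = replset_config("test_shard1", [("mongo-sharding-replica1", 2811),
                                      ("mongo-sharding-replica2", 2822),
                                      ("mongo-sharding-replica3", 2833)])

if __name__ == "__main__":
    print(json.dumps(cfg, indent=2))
```

The member list matches the rs.config() output above; only the "version" field differs, because that output reflects the configuration changes made by the two rs.add() calls.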

D. Check the state of mongo-sharding-replica2:2822 and mongo-sharding-replica3:2833

```
# /data/app_platform/mongodb/bin/mongo --port 2822
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2822/test
test_shard1:SECONDARY> db.isMaster();
{
    "setName" : "test_shard1",
    "ismaster" : false,
    "secondary" : true,
    "hosts" : [
        "mongo-sharding-replica2:2822",
        "mongo-sharding-replica3:2833",
        "mongo-sharding-replica1:2811"
    ],
    "primary" : "mongo-sharding-replica1:2811",
    "me" : "mongo-sharding-replica2:2822",
    "maxBsonObjectSize" : 16777216,
    "maxMessageSizeBytes" : 48000000,
    "localTime" : ISODate("2015-01-07T10:21:41.522Z"),
    "ok" : 1
}
test_shard1:SECONDARY>

# /data/app_platform/mongodb/bin/mongo --port 2833
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2833/test
test_shard1:SECONDARY> db.isMaster();
{
    "setName" : "test_shard1",
    "ismaster" : false,
    "secondary" : true,
    "hosts" : [
        "mongo-sharding-replica3:2833",
        "mongo-sharding-replica2:2822",
        "mongo-sharding-replica1:2811"
    ],
    "primary" : "mongo-sharding-replica1:2811",
    "me" : "mongo-sharding-replica3:2833",
    "maxBsonObjectSize" : 16777216,
    "maxMessageSizeBytes" : 48000000,
    "localTime" : ISODate("2015-01-07T10:22:27.125Z"),
    "ok" : 1
}
test_shard1:SECONDARY>
```

Configure the shard2 instances (replica set test_shard2 on ports 3811, 3822 and 3833) the same way.

3. Configure the config servers

A config server is a mongod instance that stores the cluster metadata. Production deployments require three config servers, each holding a full copy of the metadata.

Taking mongo-sharding-config1 as an example:

```
# cat /etc/mongod2711.conf
logpath=/data/app_data/mongodb/log2711/mongodb.log
logappend=true
fork=true
port=2711
dbpath=/data/app_data/mongodb/configdb2711/
pidfilepath=/data/app_data/mongodb/configdb2711/mongod.pid
maxConns=2048
nohttpinterface=true
directoryperdb=true
configsvr=true
```

/etc/init.d/mongod2711

```bash
#!/bin/bash
# mongod2711 - Startup script for the config server mongod on port 2711
# chkconfig: 35 85 15
# description: Mongo is a scalable, document-oriented database.
# processname: mongod
# config: /etc/mongod2711.conf
# pidfile: /data/app_data/mongodb/configdb2711/mongod.pid

. /etc/rc.d/init.d/functions

# things from mongod.conf get there by mongod reading it
# NOTE: if you change any OPTIONS here, you get what you pay for:
# this script assumes all options are in the config file.
CONFIGFILE="/etc/mongod2711.conf"
OPTIONS=" -f $CONFIGFILE"
SYSCONFIG="/etc/sysconfig/mongod"

# FIXME: 1.9.x has a --shutdown flag that parses the config file and
# shuts down the correct running pid, but that's unavailable in 1.8
# for now. This can go away when this script stops supporting 1.8.
DBPATH=`awk -F= '/^dbpath=/{print $2}' "$CONFIGFILE"`
PIDFILE=`awk -F= '/^pidfilepath=/{print $2}' "$CONFIGFILE"`

mongod=${MONGOD-/data/app_platform/mongodb/bin/mongod}
MONGO_USER=mongod
MONGO_GROUP=mongod
# One lock file per instance: a shared /var/lock/subsys/mongod would be
# removed as soon as any one of the instances on this host is stopped.
LOCKFILE=/var/lock/subsys/mongod2711

if [ -f "$SYSCONFIG" ]; then
    . "$SYSCONFIG"
fi

# Handle NUMA access to CPUs (SERVER-3574)
# This verifies the existence of numactl as well as testing that the command works
NUMACTL_ARGS="--interleave=all"
if which numactl >/dev/null 2>/dev/null && numactl $NUMACTL_ARGS ls / >/dev/null 2>/dev/null
then
    NUMACTL="numactl $NUMACTL_ARGS"
else
    NUMACTL=""
fi

start()
{
    echo -n $"Starting mongod: "
    daemon --user "$MONGO_USER" $NUMACTL $mongod $OPTIONS
    RETVAL=$?
    echo
    [ $RETVAL -eq 0 ] && touch "$LOCKFILE"
}

stop()
{
    echo -n $"Stopping mongod: "
    killproc -p "$PIDFILE" -d 300 $mongod
    RETVAL=$?
    echo
    [ $RETVAL -eq 0 ] && rm -f "$LOCKFILE"
}

restart () {
    stop
    start
}

ulimit -n 12000
RETVAL=0

case "$1" in
  start)
    start
    ;;
  stop)
    stop
    ;;
  restart|reload|force-reload)
    restart
    ;;
  condrestart)
    [ -f "$LOCKFILE" ] && restart || :
    ;;
  status)
    # Check this instance through its pid file, not any process named mongod
    status -p "$PIDFILE" $mongod
    RETVAL=$?
    ;;
  *)
    echo "Usage: $0 {start|stop|status|restart|reload|force-reload|condrestart}"
    RETVAL=1
esac

exit $RETVAL
```

```
# service mongod2711 start
```

Start mongod2722 and mongod2733 the same way, then check that each config server is up:

```
# /data/app_platform/mongodb/bin/mongo --port 2711
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2711/test
configsvr> exit
bye
# /data/app_platform/mongodb/bin/mongo --port 2722
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2722/test
configsvr> exit
bye
# /data/app_platform/mongodb/bin/mongo --port 2733
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2733/test
configsvr>
```

4. Configure the router servers

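The router layer is one or more mongos instances. A mongos instance needs few system resources, so it can run on a server that already hosts a mongod instance, or on an application server. A mongos must be started with the configdb parameter, which gives the connection information of the config servers.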

Authentication can be enabled for a MongoDB cluster: every member of the cluster, mongos and mongod alike, uses the same key, supplied through the keyFile parameter.

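A. Generate the key file

The content of the key file must be between 6 and 1024 characters long. For example, generate random base64 text with:

```
openssl rand -base64 741
```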

For cross-platform convenience, MongoDB strips whitespace from the key, so the following commands all produce equivalent keys:

```
echo -e "my secret key" > key1
echo -e "my secret key\n" > key2
echo -e "my    secret    key" > key3
echo -e "my\r\nsecret\r\nkey\r\n" > key4
```
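Both key-file rules above (the 6 to 1024 character limit and whitespace stripping) can be checked before deploying. A sketch, assuming Python's `os.urandom` plus base64 as a stand-in for `openssl rand -base64 741` (openssl also wraps its output every 64 characters, which no longer matters once whitespace is stripped); the helper names are hypothetical. It also sets mode 600, because mongod refuses a key file that is readable by group or others:

```python
import base64
import os
import re


def generate_key(num_bytes=741):
    """Base64 text of num_bytes random bytes (741 bytes -> 988 characters)."""
    return base64.b64encode(os.urandom(num_bytes)).decode("ascii")


def effective_key(file_content):
    """The key as MongoDB reads it: the file content with all whitespace removed."""
    return re.sub(r"\s+", "", file_content)


def check_key(file_content):
    """Return the stripped key, or raise ValueError if it is not 6..1024 characters."""
    key = effective_key(file_content)
    if not 6 <= len(key) <= 1024:
        raise ValueError(f"key length {len(key)} is outside 6..1024")
    return key


def write_keyfile(path, key):
    """Write the key and restrict the file to its owner (mode 600)."""
    with open(path, "w") as f:
        f.write(key + "\n")
    os.chmod(path, 0o600)


if __name__ == "__main__":
    # What the four echo commands above write (echo -e appends a newline).
    variants = ["my secret key\n", "my secret key\n\n",
                "my    secret    key\n", "my\r\nsecret\r\nkey\r\n\n"]
    print({effective_key(v) for v in variants})
```

Because 741 is divisible by 3, the base64 text has no padding and is exactly 4 × 741 / 3 = 988 characters, comfortably inside the 1024-character limit.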

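B. Enable authentication on every component of the cluster

Set the key file path with keyFile in the configuration file of each mongos and mongod:

```
keyFile = /srv/mongodb/keyfile
```

or add the --keyFile option to the command line when starting the process.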

Taking mongo-sharding-router1 as an example, its configuration file is:

```
# cat /etc/mongos2911.conf
logpath=/data/app_data/mongodb/log2911/mongodb.log
logappend=true
fork=true
port=2911
pidfilepath=/data/app_data/mongodb/log2911/mongod.pid
maxConns=2048
nohttpinterface=true
configdb=mongo-sharding-config1:2711,mongo-sharding-config2:2722,mongo-sharding-config3:2733
```

/etc/init.d/mongos2911

```bash
#!/bin/bash
# mongos2911 - Startup script for the mongos router on port 2911
# chkconfig: 35 85 15
# description: Mongo is a scalable, document-oriented database.
# processname: mongos
# config: /etc/mongos2911.conf
# pidfile: /data/app_data/mongodb/log2911/mongod.pid

. /etc/rc.d/init.d/functions

# things from mongos2911.conf get there by mongos reading it
# NOTE: if you change any OPTIONS here, you get what you pay for:
# this script assumes all options are in the config file.
CONFIGFILE="/etc/mongos2911.conf"
OPTIONS=" -f $CONFIGFILE"
SYSCONFIG="/etc/sysconfig/mongod"

# FIXME: 1.9.x has a --shutdown flag that parses the config file and
# shuts down the correct running pid, but that's unavailable in 1.8
# for now. This can go away when this script stops supporting 1.8.
# (mongos has no dbpath, so DBPATH stays empty; it is not used below.)
DBPATH=`awk -F= '/^dbpath=/{print $2}' "$CONFIGFILE"`
PIDFILE=`awk -F= '/^pidfilepath=/{print $2}' "$CONFIGFILE"`

# The variable keeps its original name but points at the mongos binary
mongod=${MONGOD-/data/app_platform/mongodb/bin/mongos}
MONGO_USER=mongod
MONGO_GROUP=mongod
# One lock file per instance, so it does not collide with the mongod scripts
LOCKFILE=/var/lock/subsys/mongos2911

if [ -f "$SYSCONFIG" ]; then
    . "$SYSCONFIG"
fi

# Handle NUMA access to CPUs (SERVER-3574)
# This verifies the existence of numactl as well as testing that the command works
NUMACTL_ARGS="--interleave=all"
if which numactl >/dev/null 2>/dev/null && numactl $NUMACTL_ARGS ls / >/dev/null 2>/dev/null
then
    NUMACTL="numactl $NUMACTL_ARGS"
else
    NUMACTL=""
fi

start()
{
    echo -n $"Starting mongos: "
    daemon --user "$MONGO_USER" $NUMACTL $mongod $OPTIONS
    RETVAL=$?
    echo
    [ $RETVAL -eq 0 ] && touch "$LOCKFILE"
}

stop()
{
    echo -n $"Stopping mongos: "
    # Use the mongos binary here, not bin/mongod as in the original script
    killproc -p "$PIDFILE" -d 300 $mongod
    RETVAL=$?
    echo
    [ $RETVAL -eq 0 ] && rm -f "$LOCKFILE"
}

restart () {
    stop
    start
}

ulimit -n 12000
RETVAL=0

case "$1" in
  start)
    start
    ;;
  stop)
    stop
    ;;
  restart|reload|force-reload)
    restart
    ;;
  condrestart)
    [ -f "$LOCKFILE" ] && restart || :
    ;;
  status)
    # Check this instance through its pid file, not any process named mongos
    status -p "$PIDFILE" $mongod
    RETVAL=$?
    ;;
  *)
    echo "Usage: $0 {start|stop|status|restart|reload|force-reload|condrestart}"
    RETVAL=1
esac

exit $RETVAL
```

```
# service mongos2911 start
```

Configure mongo-sharding-router2 (port 2922) the same way.

Connecting to either router shows the mongos> prompt:

```
# /data/app_platform/mongodb/bin/mongo --port 2911
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2911/test
mongos> show dbs;
admin (empty)
config 0.046875GB
mongos> exit
bye
# /data/app_platform/mongodb/bin/mongo --port 2922
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2922/test
mongos> show dbs;
admin (empty)
config 0.046875GB
mongos>
```

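5. Add shards to the cluster

Each shard can be a single mongod instance or a replica set; in production it must be a replica set to ensure high availability.

Start by adding shard1 to the cluster: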

```
# /data/app_platform/mongodb/bin/mongo --port 2911
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2911/test
mongos> sh.addShard("test_shard1/mongo-sharding-replica1:2811")
{ "shardAdded" : "test_shard1", "ok" : 1 }
mongos>
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "version" : 3,
    "minCompatibleVersion" : 3,
    "currentVersion" : 4,
    "clusterId" : ObjectId("54adef1aa56455cefe56d8bd")
  }
  shards:
    { "_id" : "test_shard1", "host" : "test_shard1/mongo-sharding-replica1:2811,mongo-sharding-replica2:2822,mongo-sharding-replica3:2833" }
  databases:
    { "_id" : "admin", "partitioned" : false, "primary" : "config" }
mongos>
```

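6. Enable sharding for a database

Before a collection can be sharded, sharding must be enabled on the database that contains it. Enabling sharding on a database does not distribute any data by itself; it only allows the collections in that database to be sharded. Every database also has a primary shard (the "primary" field in the sh.status() output below), which stores all of the database's data that has not been sharded.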

```
mongos> show dbs;
admin (empty)
config 0.046875GB
game 0.203125GB
test (empty)
mongos> sh.enableSharding("game");
{ "ok" : 1 }
mongos> sh.status();
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "version" : 3,
    "minCompatibleVersion" : 3,
    "currentVersion" : 4,
    "clusterId" : ObjectId("54adef1aa56455cefe56d8bd")
  }
  shards:
    { "_id" : "test_shard1", "host" : "test_shard1/mongo-sharding-replica1:2811,mongo-sharding-replica2:2822,mongo-sharding-replica3:2833" }
  databases:
    { "_id" : "admin", "partitioned" : false, "primary" : "config" }
    { "_id" : "test", "partitioned" : false, "primary" : "test_shard1" }
    { "_id" : "game", "partitioned" : true, "primary" : "test_shard1" }
```

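7. Shard a collection

Before sharding a collection, choose its shard key, i.e. an indexed field or compound of fields; the choice of shard key directly determines how efficiently the whole sharded cluster works. If the collection already contains data, first create an index on the shard key with ensureIndex(); if the collection is empty, sh.shardCollection() creates the index itself.

Examples:

```
sh.shardCollection("records.people", { "zipcode": 1, "name": 1 } )
sh.shardCollection("people.addresses", { "state": 1, "_id": 1 } )
sh.shardCollection("assets.chairs", { "type": 1, "_id": 1 } )
sh.shardCollection("events.alerts", { "_id": "hashed" } )
```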

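A. The people collection in the records database uses {"zipcode": 1, "name": 1} as its shard key. Documents are distributed across the shards by their zipcode value; if many documents share the same zipcode, MongoDB can split the corresponding chunk further by the value of name.

B. The addresses collection in the people database uses {"state": 1, "_id": 1} as its shard key.

C. The chairs collection in the assets database uses {"type": 1, "_id": 1} as its shard key.

D. The alerts collection in the events database uses {"_id": "hashed"} as its shard key, so documents are distributed across the shards by the hash of their _id value. For a collection that already holds data, create the hashed index first, e.g. db.alerts.ensureIndex({"_id": "hashed"}) in the events database.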
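Example A relies on ordering by the compound key: chunks are contiguous ranges of (zipcode, name) values, so a chunk covering a single zipcode can still be split at a name. A minimal Python sketch of routing a document to a chunk, assuming two chunks split at the hypothetical point ("10001", "M"); Python tuple comparison stands in for MongoDB's ordering of compound key values, and zipcodes are treated as strings:

```python
from bisect import bisect_right

SHARD_KEY = ("zipcode", "name")
# Hypothetical split point: chunk 0 covers [MinKey, ("10001", "M")),
# chunk 1 covers [("10001", "M"), MaxKey).
SPLIT_POINTS = [("10001", "M")]


def key_of(doc):
    """Extract the compound shard key as a tuple, keeping field order."""
    return tuple(doc[field] for field in SHARD_KEY)


def chunk_for(doc, split_points=SPLIT_POINTS):
    """Index of the chunk whose half-open range [lower, upper) holds the doc's key."""
    return bisect_right(split_points, key_of(doc))


if __name__ == "__main__":
    for d in ({"zipcode": "10001", "name": "Alice"},
              {"zipcode": "10001", "name": "Zoe"},
              {"zipcode": "94105", "name": "Bob"}):
        print(d, "-> chunk", chunk_for(d))
```

The two 10001 documents land in different chunks, which is exactly what the name field makes possible. With {zipcode: 1} alone, all documents of one very common zipcode would share a single shard key value, and a chunk cannot be split inside a single value.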

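Choose the shard key according to the characteristics of your own workload. It is hard to find a key that both distributes data evenly across the shards and lets the application locate data quickly.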
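One common form of this trade-off: with a monotonically increasing key (such as an auto-incrementing or time-based _id), every new document falls above the highest split point, so all inserts go to the same chunk and therefore the same shard. A hashed key spreads them out, at the cost that range queries on _id must visit every shard. A Python simulation under stated assumptions: two shards, a hypothetical range split at 1000, and MD5 over the value's string form as a stand-in hash (this sketch does not claim to reproduce MongoDB's actual hashed-index values):

```python
import hashlib
import struct


def hash_key(value):
    """Signed 64-bit hash of value; MD5 here is only a stand-in."""
    digest = hashlib.md5(str(value).encode()).digest()
    return struct.unpack("<q", digest[:8])[0]


def range_shard(key, split_point):
    """Range sharding with two chunks: keys below split_point -> shard 0, else shard 1."""
    return 0 if key < split_point else 1


def hashed_shard(key):
    """Hashed sharding with two chunks split at hash value 0."""
    return 0 if hash_key(key) < 0 else 1


def distribution(keys, assign):
    """Count how many keys each of the two shards receives."""
    counts = [0, 0]
    for k in keys:
        counts[assign(k)] += 1
    return counts


if __name__ == "__main__":
    new_ids = range(1000, 2000)  # 1000 new inserts with increasing _id
    print("range: ", distribution(new_ids, lambda k: range_shard(k, 1000)))
    print("hashed:", distribution(new_ids, hashed_shard))
```

Under range sharding all 1000 new inserts hit shard 1, while the hashed variant splits them roughly in half.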

8. Config server maintenance

1) Convert the single config server used for testing into the three config servers required in production

A test environment can use one config server, but production must use three. To turn a single config server into three, do the following:

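A. Shut down every MongoDB process in the cluster, including all mongod and mongos instances.

B. Copy the existing config server's database directory (the directory set by dbpath) to the new config servers.

C. Start the three config servers.

D. Restart the other MongoDB instances in the cluster, with the configdb setting of every mongos updated to list all three config servers.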

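2) Migrate config servers to servers with the same hostnames

To migrate all the config servers of a cluster, perform the following steps on each config server, in the reverse of the order in which they are listed in the mongos configuration file.

For example, if the mongos configuration file contains

```
configdb=mongo-sharding-config1:2711,mongo-sharding-config2:2722,mongo-sharding-config3:2733
```

start with mongo-sharding-config3:2733: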

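A. Shut down the config server. This makes the cluster metadata read-only.

B. Point the config server's DNS entry at the IP address of the new server.

C. Copy the config server's entire database directory to the new server.

D. Start the config server on the new server.

Once all three config servers are running again, the cluster metadata becomes writable, and chunks can again be split and migrated.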
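The migration order can be read straight from the mongos configuration file. A Python sketch that extracts the configdb line in a similar way to how the init scripts extract dbpath with awk (splitting at `=`), then reverses the list; the function names and the inline sample file are hypothetical:

```python
def read_option(conf_text, name):
    """Value of `name=` in an INI-style MongoDB 2.4 config, or None if absent."""
    for line in conf_text.splitlines():
        key, sep, value = line.strip().partition("=")
        if sep and key.strip() == name:
            return value.strip()
    return None


def migration_order(configdb):
    """Config servers in migration order: the reverse of their configdb listing."""
    servers = [s.strip() for s in configdb.split(",") if s.strip()]
    return servers[::-1]


# Sample excerpt of /etc/mongos2911.conf from section 4.
MONGOS_CONF = """\
port=2911
configdb=mongo-sharding-config1:2711,mongo-sharding-config2:2722,mongo-sharding-config3:2733
"""

if __name__ == "__main__":
    order = migration_order(read_option(MONGOS_CONF, "configdb"))
    for step, server in enumerate(order, 1):
        print(step, server)
```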

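3) Migrate a config server to a server with a different hostname

In production, all three config servers must be available; if any one of them becomes unavailable, replace it immediately.

If you cannot replace the unavailable config server with a server that keeps the same hostname, use the following procedure.

Note that changing the hostname of a config server requires downtime and a restart of every MongoDB instance in the cluster. During the migration, make sure every mongos configuration file lists the config servers in the same order.

A. Temporarily disable the cluster's balancer.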

```
# mongo --port 2911
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2911/test
mongos> sh.stopBalancer()
Waiting for active hosts...
Waiting for the balancer lock...
Waiting again for active hosts after balancer is off...
mongos> sh.getBalancerState()
false
mongos>
```

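B. Shut down the config server to be migrated. This makes the cluster metadata read-only.

C. Copy all files in this config server's database directory to the database directory of the new config server.

D. Start the new config server.

E. Shut down all MongoDB instances, including every mongod and mongos process.

F. Restart the mongod instances of every shard.

G. Restart the config servers that were not migrated.

H. Update the config server connection information (configdb) in the mongos configuration files.

I. Restart the mongos instances.

J. Re-enable the balancer.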

```
mongos> sh.setBalancerState(true)
mongos> sh.getBalancerState()
true
mongos>
```

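4) Replace an unavailable config server

The following procedure assumes that the config server list in the mongos configuration does not change, i.e. the replacement takes over the unavailable config server's hostname.

A. Provision a system with the same IP address and hostname as the failed config server.

B. Shut down one of the remaining config servers, copy the directory specified by its dbpath to the new system, then start that config server again.

C. Update DNS if necessary.

D. Start the new config server.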

9. Convert a replica set into a sharded cluster

http://docs.mongodb.org/manual/tutorial/convert-replica-set-to-replicated-shard-cluster/

For ease of testing, that tutorial sets the chunk size to 1 MB; MongoDB's default chunk size is 64 MB.

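10. Convert a sharded cluster into a replica set

1) Convert a sharded cluster with a single shard into a replica set

If a sharded cluster has only one shard, that shard holds the cluster's entire data set.

A. Reconfigure the application to connect to the primary of this replica set.

B. If the replica set's mongod processes were started with --shardsvr, remove that option and restart them.

C. Remove the other cluster components, such as the config servers and router servers.

2) Convert a sharded cluster with multiple shards into a replica set

A. Deploy a new replica set with enough capacity to hold all of the sharded cluster's data. Do not point the application at the new replica set until the conversion is complete.

B. Stop the application from sending writes to the cluster, either by updating the application's database connection settings or by stopping all mongos processes.

C. Back up the data through a mongos with mongodump, then restore it into the new replica set with mongorestore. Migrate the unsharded collections as well as the sharded ones, so that all of the data ends up in the new replica set.

D. Reconfigure the application to connect to the new replica set.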

11. View the cluster configuration

List the databases that have sharding enabled:

```
# mongo --port 2911
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2911/test
mongos> show dbs;
admin (empty)
config 0.046875GB
game 0.203125GB
test (empty)
mongos> use config
switched to db config
mongos> show tables;
changelog
chunks
collections
databases
lockpings
locks
mongos
settings
shards
system.indexes
tags
version
mongos> db.databases.find();
{ "_id" : "admin", "partitioned" : false, "primary" : "config" }
{ "_id" : "test", "partitioned" : false, "primary" : "test_shard1" }
{ "_id" : "game", "partitioned" : true, "primary" : "test_shard1" }
mongos>
```

List the shards:

```
mongos> use admin;
switched to db admin
mongos> db.runCommand({listShards : 1})
{
    "shards" : [
        {
            "_id" : "test_shard1",
            "host" : "test_shard1/mongo-sharding-replica1:2811,mongo-sharding-replica2:2822,mongo-sharding-replica3:2833"
        },
        {
            "_id" : "test_shard2",
            "host" : "test_shard2/mongo-sharding-replica4:3811,mongo-sharding-replica5:3822,mongo-sharding-replica6:3833"
        }
    ],
    "ok" : 1
}
mongos>
```

View the cluster status

sh.status() or db.printShardingStatus() prints detailed information about the cluster:

```
mongos> sh.status();
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "version" : 3,
    "minCompatibleVersion" : 3,
    "currentVersion" : 4,
    "clusterId" : ObjectId("54adef1aa56455cefe56d8bd")
  }
  shards:
    { "_id" : "test_shard1", "host" : "test_shard1/mongo-sharding-replica1:2811,mongo-sharding-replica2:2822,mongo-sharding-replica3:2833" }
    { "_id" : "test_shard2", "host" : "test_shard2/mongo-sharding-replica4:3811,mongo-sharding-replica5:3822,mongo-sharding-replica6:3833" }
  databases:
    { "_id" : "admin", "partitioned" : false, "primary" : "config" }
    { "_id" : "test", "partitioned" : false, "primary" : "test_shard1" }
    { "_id" : "game", "partitioned" : true, "primary" : "test_shard1" }
        game.player
            shard key: { "_id" : 1 }
            chunks:
                test_shard1  1
            { "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : test_shard1 Timestamp(1, 0)
        game.task
            shard key: { "_id" : "hashed" }
            chunks:
                test_shard1  1
            { "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : test_shard1 Timestamp(1, 0)
```
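The "chunks:" lines above summarise the config.chunks collection, which holds one document per chunk, including its namespace (ns) and owning shard (shard). A Python sketch that produces the same per-shard counts from such documents; the sample documents are hand-written to match the output above, and fetching real ones from a live cluster (for example with pymongo's `MongoClient(...).config.chunks.find()` through a mongos) is an untested assumption left out of the code:

```python
from collections import Counter, defaultdict


def chunks_per_shard(chunk_docs):
    """Map each namespace to {shard: number of chunks}, like sh.status() prints."""
    counts = defaultdict(Counter)
    for doc in chunk_docs:
        counts[doc["ns"]][doc["shard"]] += 1
    return {ns: dict(per_shard) for ns, per_shard in counts.items()}


# Hand-written stand-ins for config.chunks documents (only the fields used here).
SAMPLE = [
    {"ns": "game.player", "shard": "test_shard1"},
    {"ns": "game.task", "shard": "test_shard1"},
]

if __name__ == "__main__":
    for ns, per_shard in sorted(chunks_per_shard(SAMPLE).items()):
        print(ns, per_shard)
```

Comparing these counts across shards is a quick way to see whether the balancer has actually spread a collection after the second shard was added.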

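12. Migrate a sharded cluster to different hardware

The following steps describe how to migrate the components of a sharded cluster to different hardware without interrupting reads and writes.

During the migration, do not perform any operation that modifies the cluster metadata, such as creating or dropping databases, creating or dropping collections, or any sharding operation.

A. Disable the balancer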

```
mongos> sh.stopBalancer();
Waiting for active hosts...
Waiting for the balancer lock...
Waiting again for active hosts after balancer is off...
mongos> sh.getBalancerState()
false
mongos>
```

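B. Migrate each config server in turn

Migrate the config servers one at a time, in the reverse of the order in which they are listed in the mongos configuration, and do not rename any config server during the migration. If a config server's IP address or hostname changes, every mongod and mongos in the cluster has to be restarted; to avoid that downtime, refer to the config servers through CNAME records.

a. Shut down the config server.

b. Change the config server's DNS entry to point to the new server.

c. Copy the contents of the dbpath directory to the new server.

d. Start the new config server.

C. Restart the mongos instances

If configdb has to change as part of the migration, stop all mongos instances before changing it. If configdb does not change, the mongos instances can be migrated one at a time or all at once.

a. Stop the mongos instance with the shutdown command; if configdb is changing, stop all mongos instances.

b. If the hostnames of the config servers changed, update configdb on every mongos.

c. Restart the mongos instances.

D. Migrate the shards

Migrate one shard at a time. For each shard, repeat the following steps.

For each replica set shard, migrate the non-primary members first and the primary last.

If the replica set has only two voting members, add an arbiter so that a majority of votes stays available during the migration; remove the arbiter once the migration is complete.

a. Shut down the mongod process; use the shutdown command to make sure it stops cleanly.

b. Move the data directory to the new machine.

c. Start the mongod instance on the new server.

d. Connect to the replica set's primary.

e. If the member's hostname changed, update it with rs.reconfig():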

```javascript
cfg = rs.conf()
// members[2] is the member that was moved; adjust the index to your member
cfg.members[2].host = "pocatello.example.net:27017"
rs.reconfig(cfg)
```

f. Confirm the configuration with rs.conf().

g. Wait for the member to recover, checking its state with rs.status().
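Step e can also be written as a pure transformation of the configuration document, which makes the version rule explicit: a new configuration must carry a higher version than the current one. The mongo shell's rs.reconfig() sets the version itself; a client that sends the replSetReconfig command directly has to increase it. A Python sketch (`rename_member` is a hypothetical helper) that works on a copy of a document shaped like the rs.conf() output in section 2:

```python
import copy


def rename_member(cfg, old_host, new_host):
    """Return a copy of a replica set config with one member's host changed.

    The version is bumped because a reconfig must use a higher version
    than the configuration currently in effect.
    """
    new_cfg = copy.deepcopy(cfg)
    for member in new_cfg["members"]:
        if member["host"] == old_host:
            member["host"] = new_host
            break
    else:
        raise ValueError(f"no member with host {old_host!r}")
    new_cfg["version"] += 1
    return new_cfg


# Shaped like the rs.config() output for test_shard1 earlier in this article.
CURRENT = {
    "_id": "test_shard1",
    "version": 5,
    "members": [
        {"_id": 0, "host": "mongo-sharding-replica1:2811"},
        {"_id": 1, "host": "mongo-sharding-replica2:2822"},
        {"_id": 2, "host": "mongo-sharding-replica3:2833"},
    ],
}

if __name__ == "__main__":
    print(rename_member(CURRENT, "mongo-sharding-replica3:2833",
                        "pocatello.example.net:27017"))
```

Matching by the old host name instead of a fixed index such as members[2] avoids editing the wrong member if the member order differs from what you expect.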

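Migrating the replica set primary

Migrating the primary forces the replica set to elect a new primary, and while the election is in progress the replica set can neither accept writes nor serve reads from the primary. Schedule this step for a maintenance window.

a. Run rs.stepDown() on the primary.

b. Once the primary has stepped down to secondary and another member has become primary, migrate this member using the steps above.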
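The arbiter advice in step D follows from the election rule: a primary can only be elected, or remain primary, while a strict majority of the voting members can reach each other. A small Python sketch of that arithmetic (function names are hypothetical):

```python
def majority(voting_members):
    """Votes needed for a primary: a strict majority of voting members."""
    return voting_members // 2 + 1


def can_elect(voting_members, members_down):
    """True if the members still up can form a majority."""
    return voting_members - members_down >= majority(voting_members)


if __name__ == "__main__":
    for voters in (2, 3):
        print(voters, "voting members, 1 down -> primary possible:",
              can_elect(voters, 1))
```

With two voting members, taking one down for migration leaves 1 of 2 votes, below the majority of 2, so the set has no primary. After adding an arbiter there are three voters, and 2 of 3 remain, which is enough.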

E. Re-enable the balancer

Connect to one of the mongos instances and run:

```
sh.setBalancerState(true)
```

Check the balancer state with:

```
sh.getBalancerState();
```

13. Back up the cluster metadata

The config servers store the metadata of the whole cluster, most importantly the mapping of chunks to shards.

a. Temporarily disable the balancer.

```
# mongo --port 2911
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2911/test
mongos> sh.setBalancerState(false);
mongos> sh.getBalancerState();
false
mongos>
```

b. Shut down one of the config servers.

```
# mongo --port 2711
MongoDB shell version: 2.4.6
connecting to: 127.0.0.1:2711/test
configsvr> use admin;
switched to db admin
configsvr> db.shutdownServer();
Thu Mar 12 16:57:40.085 DBClientCursor::init call() failed
server should be down...
Thu Mar 12 16:57:40.087 trying reconnect to 127.0.0.1:2711
Thu Mar 12 16:57:40.087 reconnect 127.0.0.1:2711 failed couldn't connect to server 127.0.0.1:2711
>
```

c. Copy the contents of the directory specified by dbpath.

d. Restart this config server.

e. Re-enable the balancer.

```
mongos> sh.setBalancerState(true);
mongos> sh.getBalancerState();
true
```

While a config server is down, the cluster cannot split chunks or migrate chunks between shards, but data can still be written to the cluster.
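Step c is an ordinary directory copy, done while the config server is stopped. A Python sketch assuming the dbpath of mongo-sharding-config1 from section 3 and a hypothetical backup directory. It refuses to run if dbpath is missing, and it copies into a new timestamped directory; `shutil.copytree` raises an error rather than overwrite an existing one:

```python
import shutil
import tempfile
import time
from pathlib import Path


def backup_dbpath(dbpath, backup_root):
    """Copy a stopped config server's dbpath to backup_root/<name>-<timestamp>.

    shutil.copytree raises FileExistsError instead of overwriting an
    existing backup directory.
    """
    src = Path(dbpath)
    if not src.is_dir():
        raise FileNotFoundError(f"dbpath {src} is not a directory")
    dest = Path(backup_root) / f"{src.name}-{time.strftime('%Y%m%d%H%M%S')}"
    shutil.copytree(src, dest)
    return dest


if __name__ == "__main__":
    # Demo on a throwaway directory. The real call would be something like
    # backup_dbpath("/data/app_data/mongodb/configdb2711/", "/backup/configsvr")
    # (backup path hypothetical), run only while that config server is down.
    demo_src = Path(tempfile.mkdtemp()) / "configdb2711"
    demo_src.mkdir()
    (demo_src / "config.ns").write_text("demo")
    print(backup_dbpath(demo_src, tempfile.mkdtemp()))
```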

References:

http://docs.mongodb.org/manual/administration/sharded-cluster-deployment/