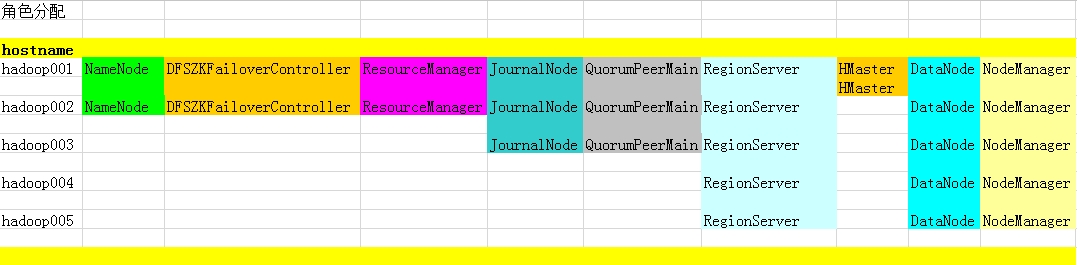

Fully distributed HA cluster deployment: hadoop-2.6.0 + zookeeper-3.4.6 + hbase-1.0.0 + hive-1.1.0

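I put this together from material other people have shared. If you find gaps or mistakes, corrections are welcome.

The cluster is meant for production, so every component is set up for HA:

HDFS NameNode HA

YARN ResourceManager HA

HBase HMaster HA

Hive HiveServer2 HA

PS: I have heard that some people also run JobHistory in HA. I have not done that; if you have experience with it, please let me know.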

=================================================================

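Deploying the fully distributed Hadoop cluster

I. Prepare the environment

Remove any existing JDK (a minimal install ships without one, so this step can be skipped)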

yum remove java-1.7.0-openjdk -y

Disable the firewall and SELinux

service iptables stop

chkconfig iptables off

setenforce 0

vi /etc/selinux/config

SELINUX=disabled
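The manual vi edit above can also be scripted. The following is a minimal sketch, not part of the original procedure; the function name `selinux_disabled_config` is made up for illustration. It only rewrites the `SELINUX=` line and leaves `SELINUXTYPE=` and comment lines alone.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read an SELinux config on stdin and print it with
# the SELINUX= line set to disabled. All other lines pass through unchanged.
selinux_disabled_config() {
  sed 's/^SELINUX=.*/SELINUX=disabled/'
}
```

One way to use it is `selinux_disabled_config < /etc/selinux/config > /tmp/selinux.new`, then review the result before copying it back as root.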

Synchronize the time on all machines

ntpdate 202.120.2.101

Configure the hostname (hadoop001 through hadoop005, one per machine) and the hosts file

vi /etc/sysconfig/network

HOSTNAME=hadoop001

Edit the hosts file

vi /etc/hosts

192.168.5.2 hadoop001.test.com

192.168.5.3 hadoop002.test.com

192.168.5.4 hadoop003.test.com

192.168.5.5 hadoop004.test.com

192.168.5.6 hadoop005.test.com
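Because the host names and addresses follow a regular pattern, the hosts entries can be generated rather than typed. This is a small sketch under that assumption; `gen_hosts` is a hypothetical name, and the 192.168.5.x numbering is taken from the list above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print /etc/hosts entries for hadoop001..hadoop00N,
# where hadoop001 is 192.168.5.2, hadoop002 is 192.168.5.3, and so on.
gen_hosts() {
  local count=${1:-5} i
  for ((i = 1; i <= count; i++)); do
    printf '192.168.5.%d hadoop%03d.test.com\n' "$((i + 1))" "$i"
  done
}
```

Running `gen_hosts 5` prints the five lines shown above; as root they could be appended with `gen_hosts 5 >> /etc/hosts`.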

Configure the yum repository

vi /etc/yum.repos.d/rhel.repo

Create the hadoop user and group

groupadd hadoop

useradd -g hadoop hadoop

passwd hadoop

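Grant ownership for the later installation steps [all install packages are in /usr/local/src]

chown -R hadoop:hadoop /usr/local/src

Switch to the hadoop user

su - hadoop

Set up key-based passwordless login [repeat on every NameNode]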

ssh-keygen -t rsa -P ''

cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys

chmod 700 ~/.ssh/

chmod 600 ~/.ssh/authorized_keys

ssh-copy-id -i $HOME/.ssh/id_rsa.pub [email protected]

ssh-copy-id -i $HOME/.ssh/id_rsa.pub [email protected]

ssh-copy-id -i $HOME/.ssh/id_rsa.pub [email protected]

ssh-copy-id -i $HOME/.ssh/id_rsa.pub [email protected]

Verify (repeat for hadoop002.test.com through hadoop005.test.com)

ssh hadoop002.test.com
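Checking each host by hand gets tedious, so the verification can be looped. The sketch below is an illustration only: `check_passwordless` and the `SSH_CMD` override are hypothetical names, and it assumes the login user on the remote hosts is the same as the current user. `BatchMode=yes` is a standard OpenSSH option that makes ssh fail instead of prompting for a password.

```bash
#!/usr/bin/env bash
# SSH_CMD can be overridden (for example with a stub) when testing.
SSH_CMD=${SSH_CMD:-ssh}

# Hypothetical helper: print each host that still needs a password or is
# unreachable, and return non-zero if there is at least one such host.
check_passwordless() {
  local host failed=0
  for host in "$@"; do
    # BatchMode=yes disables password prompts, so a missing key is an error.
    if ! "$SSH_CMD" -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null; then
      echo "password still required or unreachable: $host"
      failed=1
    fi
  done
  return "$failed"
}
```

For example: `check_passwordless hadoop002.test.com hadoop003.test.com hadoop004.test.com hadoop005.test.com`.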

Create the working directories

mkdir -pv /home/hadoop/storage/hadoop/tmp

mkdir -pv /home/hadoop/storage/hadoop/name

mkdir -pv /home/hadoop/storage/hadoop/data

mkdir -pv /home/hadoop/storage/hadoop/journal

mkdir -pv /home/hadoop/storage/yarn/local

mkdir -pv /home/hadoop/storage/yarn/logs

mkdir -pv /home/hadoop/storage/hbase

mkdir -pv /home/hadoop/storage/zookeeper/data

mkdir -pv /home/hadoop/storage/zookeeper/logs

scp -r /home/hadoop/storage hadoop002.test.com:/home/hadoop/

scp -r /home/hadoop/storage hadoop003.test.com:/home/hadoop/

scp -r /home/hadoop/storage hadoop004.test.com:/home/hadoop/

scp -r /home/hadoop/storage hadoop005.test.com:/home/hadoop/
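The same four-host copy recurs throughout this guide, so it may be worth wrapping in a loop. This is a dry-run sketch with hypothetical names (`WORKERS`, `print_sync_cmds`): it only prints the scp commands so they can be reviewed before anything is copied.

```bash
#!/usr/bin/env bash
# Hosts that receive copies from hadoop001 (taken from the steps above).
WORKERS="hadoop002.test.com hadoop003.test.com hadoop004.test.com hadoop005.test.com"

# Hypothetical helper: print one scp command per remote host.
# $1 = local directory, $2 = destination directory on the remote host.
print_sync_cmds() {
  local src=$1 dest=$2 host
  for host in $WORKERS; do
    printf 'scp -r %s %s:%s\n' "$src" "$host" "$dest"
  done
}
```

`print_sync_cmds /home/hadoop/storage /home/hadoop/` reproduces the four commands above; piping the output to `sh` would run them.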

Configure the Hadoop environment variables

vi ~/.bashrc

export JAVA_HOME=/usr/java/jdk1.7.0_67-cloudera

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib/rt.jar

export PATH=$PATH:$JAVA_HOME/bin

##java env

export HADOOP_HOME=/home/hadoop/hadoop

export HIVE_HOME=/home/hadoop/hive

export HBASE_HOME=/home/hadoop/hbase

##hadoop hbase hive

export HADOOP_MAPRED_HOME=${HADOOP_HOME}

export HADOOP_COMMON_HOME=${HADOOP_HOME}

export HADOOP_HDFS_HOME=${HADOOP_HOME}

export YARN_HOME=${HADOOP_HOME}

export HADOOP_YARN_HOME=${HADOOP_HOME}

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export HDFS_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export YARN_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HBASE_HOME/bin:$HIVE_HOME/bin

source ~/.bashrc

scp ~/.bashrc hadoop002.test.com:~/.bashrc

scp ~/.bashrc hadoop003.test.com:~/.bashrc

scp ~/.bashrc hadoop004.test.com:~/.bashrc

scp ~/.bashrc hadoop005.test.com:~/.bashrc
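After sourcing the new .bashrc on a node, a quick check can confirm that the key variables are actually set. This is a minimal sketch; `missing_env` is a hypothetical name and the variable list is a subset of the exports above. It relies on bash indirect expansion, so it needs bash rather than plain sh.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the name of each required variable that is
# unset or empty. Prints nothing when all of them are set.
missing_env() {
  local name
  for name in JAVA_HOME HADOOP_HOME HBASE_HOME HIVE_HOME HADOOP_CONF_DIR; do
    # ${!name} is bash indirect expansion: the value of the variable named by $name.
    [ -n "${!name:-}" ] || echo "$name"
  done
}
```

Calling `missing_env` in a fresh login shell on each node should print nothing.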

Install the JDK

rpm -ivh oracle-j2sdk1.7-1.7.0+update67-1.x86_64.rpm

Configure the environment variables [already done while preparing the environment; skip]

Verify the JDK installation

java -version

II. Deploy hadoop-2.6.0 with NameNode HA and ResourceManager HA

Unpack and rename

tar xf /usr/local/src/hadoop-2.6.0.tar.gz -C /home/hadoop

cd /home/hadoop

mv hadoop-2.6.0 hadoop

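Configure the Hadoop environment variables [already done while preparing the environment; skip]

Verify the Hadoop installation

hadoop version

Edit the Hadoop configuration files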

[1]

vi /home/hadoop/hadoop/etc/hadoop/core-site.xml

#################################################################################

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- Default file system: the logical HDFS nameservice, gagcluster. With NameNode HA this is a nameservice ID rather than host:port, so it carries no port -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://gagcluster</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<!-- Hadoop temporary directory -->

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/storage/hadoop/tmp</value>

<description>Abase for other temporary directories.</description>

</property>

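<!-- Hosts from which the proxy user may impersonate other users: any host. The proxy user is the account that runs the services, here hadoop -->

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<!-- Groups whose members the proxy user may impersonate: any group -->

<property>

<name>hadoop.proxyuser.hadoop.groups</name>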

<value>*</value>

</property>

<!-- ZooKeeper quorum addresses -->

<property>

<name>ha.zookeeper.quorum</name>

<value>hadoop001.test.com:2181,hadoop002.test.com:2181,hadoop003.test.com:2181</value>

</property>

</configuration>

#################################################################################

[2]

vi /home/hadoop/hadoop/etc/hadoop/hdfs-site.xml

#################################################################################

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- Exclude (blacklist) file, used to decommission Hadoop nodes -->

<property>

<name>dfs.hosts.exclude</name>

<value>/home/hadoop/hadoop/etc/hadoop/exclude</value>

</property>

<!-- HDFS block size: 64 MB (dfs.blocksize replaces the deprecated name dfs.block.size) -->

<property>

<name>dfs.blocksize</name>

<value>67108864</value>

</property>

<!-- HDFS nameservice ID, gagcluster; must match fs.defaultFS in core-site.xml -->

<property>

<name>dfs.nameservices</name>

<value>gagcluster</value>

</property>

<!-- gagcluster has two NameNodes: nn1 and nn2 -->

<property>

<name>dfs.ha.namenodes.gagcluster</name>

<value>nn1,nn2</value>

</property>

<!-- RPC address of nn1 -->

<property>

<name>dfs.namenode.rpc-address.gagcluster.nn1</name>

<value>hadoop001.test.com:9000</value>

</property>

<!-- HTTP address of nn1 -->

<property>

<name>dfs.namenode.http-address.gagcluster.nn1</name>

<value>hadoop001.test.com:50070</value>

</property>

<!-- RPC address of nn2 -->

<property>

<name>dfs.namenode.rpc-address.gagcluster.nn2</name>

<value>hadoop002.test.com:9000</value>

</property>

<!-- HTTP address of nn2 -->

<property>

<name>dfs.namenode.http-address.gagcluster.nn2</name>

<value>hadoop002.test.com:50070</value>

</property>

<!-- Shared edits location on the JournalNodes for the NameNode metadata -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop001.test.com:8485;hadoop002.test.com:8485;hadoop003.test.com:8485/gagcluster</value>

</property>

<!-- Client-side proxy provider used to locate the active NameNode on failover -->

<property>

<name>dfs.client.failover.proxy.provider.gagcluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Fencing method -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<!-- sshfence requires passwordless SSH; this is the private key it uses -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<!-- Local directory where each JournalNode stores its edits -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/home/hadoop/storage/hadoop/journal</value>

</property>

<!-- Enable automatic failover -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Storage directory for the NameNode namespace -->

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/hadoop/storage/hadoop/name</value>

</property>

<!-- Storage directory for DataNode data -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/hadoop/storage/hadoop/data</value>

</property>

<!-- Replication factor -->

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- Enable WebHDFS so HDFS directories can be accessed over HTTP -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<!-- JournalNode HTTP and RPC listen addresses -->

<property>

<name>dfs.journalnode.http-address</name>

<value>0.0.0.0:8480</value>

</property>

<property>

<name>dfs.journalnode.rpc-address</name>

<value>0.0.0.0:8485</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>hadoop001.test.com:2181,hadoop002.test.com:2181,hadoop003.test.com:2181</value>

</property>

</configuration>

#################################################################################
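core-site.xml and hdfs-site.xml have to agree on the nameservice name, and a mismatch is easy to miss in files this long. The following sketch compares the two; the helper names (`get_prop`, `uri_authority`, `check_nameservice`) are hypothetical, and the parser is deliberately simple: it assumes each `<value>` follows its `<name>` and sits on a single line, as in the files above. It is not a general XML parser.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the <value> that follows <name>KEY</name>.
# $1 = XML file, $2 = property name.
get_prop() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1 }
    found && match($0, /<value>[^<]*<\/value>/) {
      # Skip the 7 characters of <value> and drop the 8 of </value>.
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$1"
}

# Strip the hdfs:// scheme, then any path or port, from a URI.
uri_authority() {
  local rest=${1#hdfs://}
  rest=${rest%%/*}
  echo "${rest%%:*}"
}

# Compare fs.defaultFS (core-site.xml) with dfs.nameservices (hdfs-site.xml).
check_nameservice() {
  local fs ns
  fs=$(uri_authority "$(get_prop "$1" fs.defaultFS)")
  ns=$(get_prop "$2" dfs.nameservices)
  if [ "$fs" = "$ns" ]; then
    echo "OK: $ns"
  else
    echo "MISMATCH: fs.defaultFS=$fs dfs.nameservices=$ns"
    return 1
  fi
}
```

Usage: `check_nameservice /home/hadoop/hadoop/etc/hadoop/core-site.xml /home/hadoop/hadoop/etc/hadoop/hdfs-site.xml`.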

[3]

vi /home/hadoop/hadoop/etc/hadoop/mapred-site.xml

#################################################################################

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- MapReduce JobHistory Server address; default port 10020 -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>0.0.0.0:10020</value>

</property>

<!-- MapReduce JobHistory Server web UI address; default port 19888 -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>0.0.0.0:19888</value>

</property>

</configuration>

#################################################################################

[4]

vi /home/hadoop/hadoop/etc/hadoop/yarn-site.xml

#################################################################################

<?xml version="1.0"?>

<configuration>

<!-- Enable log aggregation -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<!-- How long aggregated logs are kept on HDFS, in seconds: 3 days -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>259200</value>

</property>

<!-- Interval between reconnection attempts after losing the ResourceManager, in milliseconds -->

<property>

<name>yarn.resourcemanager.connect.retry-interval.ms</name>

<value>2000</value>

</property>

<!-- Enable ResourceManager HA; the default is false -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<!-- Logical IDs of the ResourceManagers -->

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>hadoop001.test.com:2181,hadoop002.test.com:2181,hadoop003.test.com:2181</value>

</property>

<!-- Enable automatic failover -->

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>hadoop001.test.com</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>hadoop002.test.com</value>

</property>

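<!-- Set rm1 on namenode1 (hadoop001) and rm2 on namenode2 (hadoop002). Note: configuration files are usually copied unchanged to the other machines, but this value must be changed on the second ResourceManager host -->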

<property>

<name>yarn.resourcemanager.ha.id</name>

<value>rm1</value>

<description>If we want to launch more than one RM in single node, we need this configuration</description>

</property>

<!-- Enable ResourceManager state recovery -->

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<!-- ZooKeeper connection addresses -->

<property>

<name>yarn.resourcemanager.zk-state-store.address</name>

<value>hadoop001.test.com:2181,hadoop002.test.com:2181,hadoop003.test.com:2181</value>

</property>

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>hadoop001.test.com:2181,hadoop002.test.com:2181,hadoop003.test.com:2181</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>gagcluster-yarn</value>

</property>

<!-- How long to wait for a connection when the scheduler is unreachable, in milliseconds -->

<property>

<name>yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms</name>

<value>5000</value>

</property>

<!-- rm1 settings -->

<property>

<name>yarn.resourcemanager.address.rm1</name>

<value>hadoop001.test.com:8132</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm1</name>

<value>hadoop001.test.com:8130</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>hadoop001.test.com:8188</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm1</name>

<value>hadoop001.test.com:8131</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.rm1</name>

<value>hadoop001.test.com:8033</value>

</property>

<property>

<name>yarn.resourcemanager.ha.admin.address.rm1</name>

<value>hadoop001.test.com:23142</value>

</property>

<!-- rm2 settings -->

<property>

<name>yarn.resourcemanager.address.rm2</name>

<value>hadoop002.test.com:8132</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm2</name>

<value>hadoop002.test.com:8130</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>hadoop002.test.com:8188</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm2</name>

<value>hadoop002.test.com:8131</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.rm2</name>

<value>hadoop002.test.com:8033</value>

</property>

<property>

<name>yarn.resourcemanager.ha.admin.address.rm2</name>

<value>hadoop002.test.com:23142</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/home/hadoop/storage/yarn/local</value>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/home/hadoop/storage/yarn/logs</value>

</property>

<property>

<name>mapreduce.shuffle.port</name>

<value>23080</value>

</property>

<!-- Client failover proxy provider class -->

<property>

<name>yarn.client.failover-proxy-provider</name>

<value>org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider</value>

</property>

<property>

<name>yarn.resourcemanager.ha.automatic-failover.zk-base-path</name>

<value>/yarn-leader-election</value>

<description>Optional setting. The default value is /yarn-leader-election</description>

</property>

</configuration>

##########################################################################

Configure the DataNode hosts

vi /home/hadoop/hadoop/etc/hadoop/slaves

##########################################################################

hadoop001.test.com

hadoop002.test.com

hadoop003.test.com

hadoop004.test.com

hadoop005.test.com

Create the exclude file, used later to decommission Hadoop nodes

touch /home/hadoop/hadoop/etc/hadoop/exclude

Copy the Hadoop tree to hadoop002 through hadoop005

scp -r /home/hadoop/hadoop hadoop002.test.com:/home/hadoop/

scp -r /home/hadoop/hadoop hadoop003.test.com:/home/hadoop/

scp -r /home/hadoop/hadoop hadoop004.test.com:/home/hadoop/

scp -r /home/hadoop/hadoop hadoop005.test.com:/home/hadoop/

On nn2 (hadoop002), edit yarn-site.xml and change yarn.resourcemanager.ha.id from rm1 to rm2
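Since this per-host value is easy to forget after copying the tree, the change on the second ResourceManager host can be scripted. The sketch below is one possible approach, not the author's method; `with_rm_id` is a hypothetical name. It prints the modified file instead of editing in place, so the result can be inspected first, and it only touches the `<value>` inside the `yarn.resourcemanager.ha.id` property.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print yarn-site.xml with yarn.resourcemanager.ha.id
# set to the given ID. $1 = yarn-site.xml path, $2 = ID such as rm2.
with_rm_id() {
  local file=$1 id=$2
  # Limit the substitution to the lines between the matching <name> and
  # the </property> that closes it.
  sed "/<name>yarn\.resourcemanager\.ha\.id<\/name>/,/<\/property>/ s|<value>[^<]*</value>|<value>${id}</value>|" "$file"
}
```

On hadoop002 this could be used as `with_rm_id yarn-site.xml rm2 > yarn-site.xml.new`, followed by replacing the original once the output looks right.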

III. Deploy a fully distributed three-node zookeeper-3.4.6 ensemble

ZooKeeper runs on three servers and is installed under the hadoop user

hadoop001.test.com 192.168.5.2

hadoop002.test.com 192.168.5.3

hadoop003.test.com 192.168.5.4

Unpack and rename

tar xf zookeeper-3.4.6.tar.gz -C /home/hadoop/

mv /home/hadoop/zookeeper-3.4.6/ /home/hadoop/zookeeper

cd /home/hadoop/zookeeper

Edit the configuration file

vi /home/hadoop/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=5

syncLimit=2

dataDir=/home/hadoop/storage/zookeeper/data

dataLogDir=/home/hadoop/storage/zookeeper/logs

clientPort=2181

server.1=hadoop001.test.com:2888:3888

server.2=hadoop002.test.com:2888:3888

server.3=hadoop003.test.com:2888:3888

Copy to the hadoop002 and hadoop003 nodes

scp -r /home/hadoop/zookeeper hadoop002.test.com:/home/hadoop

scp -r /home/hadoop/zookeeper hadoop003.test.com:/home/hadoop

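Create the ZooKeeper data and log directories [already done while preparing the environment; skip]

Write the myid value on each of hadoop001 through hadoop003; it must match that host's server.N line in zoo.cfg

On hadoop001:

echo 1 > /home/hadoop/storage/zookeeper/data/myid

On hadoop002:

echo 2 > /home/hadoop/storage/zookeeper/data/myid

On hadoop003:

echo 3 > /home/hadoop/storage/zookeeper/data/myid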
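In this layout the myid value happens to equal the numeric suffix of the host name (server.1 is hadoop001, and so on), so it can be derived instead of typed on each host. This is a sketch that depends on that naming convention; `myid_for_host` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive the ZooKeeper myid from a host name such as
# hadoop002.test.com. Assumes server.N in zoo.cfg matches hadoopNNN.
myid_for_host() {
  local short=${1%%.*}            # hadoop002.test.com -> hadoop002
  local digits=${short#hadoop}    # hadoop002 -> 002
  echo "$((10#$digits))"          # force base 10 so 002 is not read as octal
}
```

On each ZooKeeper node, `myid_for_host "$(hostname -f)" > /home/hadoop/storage/zookeeper/data/myid` would then write the right value, provided `hostname -f` returns the names used in zoo.cfg.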

====================Do not run yet; everything is started together later=========================

Start command

/home/hadoop/zookeeper/bin/zkServer.sh start

Verify the installation

jps should list the ZooKeeper server process, named QuorumPeerMain

Check the status

/home/hadoop/zookeeper/bin/zkServer.sh status

Start the command-line client

/home/hadoop/zookeeper/bin/zkCli.sh

Check that hadoop-ha exists

ls /

Check that the logical NameNode nameservice name is listed

ls /hadoop-ha

================================================================

IV. Deploy hbase-1.0.0 with HMaster HA

Unpack and deploy

tar xf hbase-1.0.0-bin.tar.gz -C /home/hadoop

cd /home/hadoop

mv hbase-1.0.0 hbase

Edit the configuration files

List the RegionServer nodes

vi /home/hadoop/hbase/conf/regionservers

hadoop001.test.com

hadoop002.test.com

hadoop003.test.com

hadoop004.test.com

hadoop005.test.com

vi /home/hadoop/hbase/conf/hbase-site.xml

#########################################################################

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<!-- Because there are multiple masters, hbase.rootdir uses the same value as dfs.nameservices in Hadoop's hdfs-site.xml rather than a single host -->

<name>hbase.rootdir</name>

<value>hdfs://gagcluster/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.tmp.dir</name>

<value>/home/hadoop/storage/hbase</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>hadoop001.test.com,hadoop002.test.com,hadoop003.test.com</value>

</property>

<property>

<!-- With multiple masters, only the port number is given here -->

<name>hbase.master.port</name>

<value>60000</value>

</property>

<property>

<name>zookeeper.session.timeout</name>

<value>60000</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<!-- Same as dataDir in the ZooKeeper configuration -->

<name>hbase.zookeeper.property.dataDir</name>

<value>/home/hadoop/storage/zookeeper/data</value>

</property>

</configuration>

###########################################################################

Disable the ZooKeeper bundled with HBase

vi /home/hadoop/hbase/conf/hbase-env.sh

export HBASE_MANAGES_ZK=false

Copy the HBase tree to hadoop002 through hadoop005

scp -r /home/hadoop/hbase hadoop002.test.com:/home/hadoop

scp -r /home/hadoop/hbase hadoop003.test.com:/home/hadoop

scp -r /home/hadoop/hbase hadoop004.test.com:/home/hadoop

scp -r /home/hadoop/hbase hadoop005.test.com:/home/hadoop

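====================Do not run yet; everything is started together later=========================

Note: the HBase cluster must be started after the Hadoop cluster is up

Method 1:

On the primary HMaster node

/home/hadoop/hbase/bin/start-hbase.sh

On the backup HMaster node

/home/hadoop/hbase/bin/hbase-daemon.sh start master

Verify the installation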

hbase shell

list

Method 2:

Add a backup-masters configuration file

vi /home/hadoop/hbase/conf/backup-masters

hadoop002.test.com

Start from the primary master node

/home/hadoop/hbase/bin/start-hbase.sh

================================================================

V. Deploy hive-1.1.0 with HiveServer2 HA

Unpack and deploy

tar xf apache-hive-1.1.0-bin.tar.gz -C /home/hadoop/

mv /home/hadoop/apache-hive-1.1.0-bin /home/hadoop/hive

Edit the configuration file

cd /home/hadoop/hive/conf/

cp hive-default.xml.template hive-site.xml

vi hive-site.xml

###########################################################################################

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- Hive warehouse (data) directory -->

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/home/hadoop/storage/hive/warehouse</value>

</property>

<!-- JDBC connection to the hive database in MySQL -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.5.2:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<!-- MySQL JDBC driver -->

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<!-- MySQL user name -->

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>username to use against metastore database</description>

</property>

<!-- MySQL password -->

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

<description>password to use against metastore database</description>

</property>

<!-- War file for the Hive web interface (HWI) -->

<property>

<name>hive.hwi.war.file</name>

<value>lib/hive-hwi-1.1.0.war</value>

</property>

<!-- Remote metastore mode -->

<property>

<name>hive.metastore.local</name>

<value>false</value>

</property>

<!-- Thrift URI of the Hive metastore -->

<property>

<name>hive.metastore.uris</name>

<value>thrift://192.168.5.2:9083</value>

</property>

<!-- HiveServer2 HA -->

<property>

<name>hive.zookeeper.quorum</name>

<value>hadoop001.test.com,hadoop002.test.com,hadoop003.test.com</value>

</property>

</configuration>

###########################################################################################

Add the MySQL driver

cp mysql-connector-java-5.1.35.jar /home/hadoop/hive/lib/

Add the war file for the Hive web interface

Download the Hive source package and change into hwi/web

jar cvf hive-hwi-1.1.0.war ./*

cp hive-hwi-1.1.0.war /home/hadoop/hive/lib/

Copy the required jars from hbase/lib to hive/lib

cp /home/hadoop/hbase/lib/hbase-client-1.0.0.jar /home/hadoop/hbase/lib/hbase-common-1.0.0.jar /home/hadoop/hive/lib

Align the jline versions used by Hive and Hadoop

Check the versions

find ./ -name "*jline*jar"

cp /home/hadoop/hive/lib/jline-2.12.jar /home/hadoop/hadoop/share/hadoop/yarn/lib

Delete the older jline 0.9 jar from that Hadoop directory

Copy the JDK's tools.jar to hive/lib

cp $JAVA_HOME/lib/tools.jar /home/hadoop/hive/lib

On the MySQL node

Create the MySQL user hive with password hive

CREATE USER hive IDENTIFIED BY 'hive';

GRANT ALL PRIVILEGES ON *.* TO 'hive'@'%' WITH GRANT OPTION;

flush privileges;

Log in to MySQL as the new hive user and create the hive database

mysql -uhive -phive

create database hive;

====================Do not run yet; everything is started together later=========================

Hive services

1. Hive remote service (port 10000)

/home/hadoop/hive/bin/hive --service metastore &

/home/hadoop/hive/bin/hive --service hiveserver2 & [or /home/hadoop/hive/bin/hiveserver2 &]

Client connection

!connect jdbc:hive2://xxxxx:2181,xxxx:2181/;serviceDiscoveryMode=zookeeper user pass
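The connection string above lists every ZooKeeper host with its client port. As a small illustration, it can be assembled from a host list; `hs2_jdbc_url` is a hypothetical helper, the port 2181 is the clientPort from zoo.cfg, and the `serviceDiscoveryMode=zookeeper` suffix is copied from the command above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the HiveServer2 JDBC URL from ZooKeeper hosts.
hs2_jdbc_url() {
  local port=2181 quorum="" host
  for host in "$@"; do
    # ${quorum:+,} adds a comma only when quorum is already non-empty.
    quorum+="${quorum:+,}${host}:${port}"
  done
  echo "jdbc:hive2://${quorum}/;serviceDiscoveryMode=zookeeper"
}
```

For this cluster, `hs2_jdbc_url hadoop001.test.com hadoop002.test.com hadoop003.test.com` produces the URL to pass to `!connect`.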

2. Hive command-line mode

/home/hadoop/hive/bin/hive

or

hive --service cli

3. Hive web interface (port 9999)

/home/hadoop/hive/bin/hive --service hwi &

Open Hive in a browser at

http://hadoop001.test.com:9999/hwi/

###########################################################################################

First start-up of the Hadoop cluster

###########################################################################################

1. If the ZooKeeper ensemble is not running yet, start ZooKeeper on every node first.

/home/hadoop/zookeeper/bin/zkServer.sh start (run on every ZooKeeper machine)

/home/hadoop/zookeeper/bin/zkServer.sh status (expect 1 leader and n-1 followers)

jps should show the process QuorumPeerMain
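The "1 leader, n-1 followers" expectation can be checked mechanically from the status output. The sketch below is illustrative only, with hypothetical names (`zk_mode`, `has_single_leader`); it relies on `zkServer.sh status` printing a line of the form `Mode: leader` or `Mode: follower`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract the role from `zkServer.sh status` output
# read on stdin (the line that starts with "Mode:").
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# Hypothetical helper: succeed only if exactly one of the given roles is
# "leader". Pass one role per ZooKeeper node.
has_single_leader() {
  local role leaders=0
  for role in "$@"; do
    if [ "$role" = "leader" ]; then
      leaders=$((leaders + 1))
    fi
  done
  [ "$leaders" -eq 1 ]
}
```

On each node, `/home/hadoop/zookeeper/bin/zkServer.sh status 2>&1 | zk_mode` yields that node's role; collecting the three roles and passing them to `has_single_leader` confirms the ensemble has elected a leader.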

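2. On the primary NameNode, run the following to create the HA namespace in ZooKeeper

/home/hadoop/hadoop/bin/hdfs zkfc -formatZK (type this by hand: copying the command can turn the hyphen into a dash)

3. Start the JournalNode daemon on every JournalNode host

/home/hadoop/hadoop/sbin/hadoop-daemon.sh start journalnode

(every JournalNode must be started)

4. On the primary NameNode, format the NameNode and JournalNode directories

/home/hadoop/hadoop/bin/hdfs namenode -format

Verify

On a ZooKeeper node, run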

/home/hadoop/zookeeper/bin/zkCli.sh

ls /

ls /hadoop-ha

5. On the primary NameNode, start the NameNode process

/home/hadoop/hadoop/sbin/hadoop-daemon.sh start namenode

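6. On the standby NameNode, run the first command below. It formats the standby's directory and copies the metadata over from the primary NameNode, without reformatting the JournalNode directories. Then start the standby NameNode process with the second command.

/home/hadoop/hadoop/bin/hdfs namenode -bootstrapStandby [or copy directly from the primary: scp -r /home/hadoop/storage/hadoop/name hadoop002.test.com:/home/hadoop/storage/hadoop]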

/home/hadoop/hadoop/sbin/hadoop-daemon.sh start namenode

7. On both NameNode hosts, start the ZKFC

/home/hadoop/hadoop/sbin/hadoop-daemon.sh start zkfc

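8. Start the DataNodes

Method 1:

On every DataNode host, run the following (hadoop-daemon.sh, singular, starts the daemon on the local host only)

/home/hadoop/hadoop/sbin/hadoop-daemon.sh start datanode

Method 2:

With many DataNodes, run the following once on the primary NameNode (nn1) to start them all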

/home/hadoop/hadoop/sbin/hadoop-daemons.sh start datanode

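9. Start YARN on the primary NameNode

/home/hadoop/hadoop/sbin/start-yarn.sh

start-yarn.sh starts the ResourceManager only on the local host, so start the standby ResourceManager on hadoop002 separately

/home/hadoop/hadoop/sbin/yarn-daemon.sh start resourcemanager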

10. Start HBase

Method 1:

On the primary HMaster node

/home/hadoop/hbase/bin/start-hbase.sh

On the backup HMaster node

/home/hadoop/hbase/bin/hbase-daemon.sh start master

Verify the installation

hbase shell

list

Method 2:

Add a backup-masters configuration file

vi /home/hadoop/hbase/conf/backup-masters

hadoop002.test.com

Start from the primary master node

/home/hadoop/hbase/bin/start-hbase.sh

11. Start Hive

Hive remote service (port 10000)

/home/hadoop/hive/bin/hive --service metastore &

/home/hadoop/hive/bin/hive --service hiveserver2 & [or /home/hadoop/hive/bin/hiveserver2 &]

Client connection

!connect jdbc:hive2://xxxxx:2181,xxxx:2181/;serviceDiscoveryMode=zookeeper user pass

Hive command-line mode

/home/hadoop/hive/bin/hive

or

hive --service cli

Hive web interface (port 9999)

/home/hadoop/hive/bin/hive --service hwi &

Open Hive in a browser at

http://hadoop001.test.com:9999/hwi/

##########################################################################################

[Notes]

Start the Hadoop JobHistory server

/home/hadoop/hadoop/sbin/mr-jobhistory-daemon.sh start historyserver

Check a NameNode's state (the serviceId is nn1 or nn2)

hdfs haadmin -getServiceState <serviceId>
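A healthy HA pair should report exactly one active NameNode. The following sketch counts active states from the output of the command above; `count_active` is a hypothetical helper, and it assumes `-getServiceState` prints either `active` or `standby`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count how many of the given states are "active".
# Pass one state per NameNode, as printed by hdfs haadmin -getServiceState.
count_active() {
  local state n=0
  for state in "$@"; do
    if [ "$state" = "active" ]; then
      n=$((n + 1))
    fi
  done
  echo "$n"
}
```

For example, `count_active "$(hdfs haadmin -getServiceState nn1)" "$(hdfs haadmin -getServiceState nn2)"` should print 1; 0 suggests failover has not completed, and 2 would indicate a split-brain condition worth investigating.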