xtrabackup备份陷阱引起的一次严重业务故障

一、场景:早上7点被电话叫醒,说夜间有大量业务订单不成功,web server上报了很多JDBCException和SocketTimeoutException,很明显与数据库有关,考虑到今天是新部署的xtrabackup备份脚本运行的第一天,怀疑与此有关。生产系统环境为redHat5.8,mysql版本为5.6,xtrabackup版本为2.2.10

二、分析过程:

1、上db一看,备份时间确实很异常,1点钟的crontab,运行到6:55才完成:

innobackupex: Backup created in directory '/backup/inc_1'

150825 06:55:57 innobackupex: Connection to database server closed

150825 06:55:57 innobackupex: completed OK!

2、之前测试的时候,与生产同样规模的数据库(30G),全备半小时内可以完成,增量备份5分钟左右完成,为何这次备份耗了接近6个小时?难道是锁表?不应该啊,网上所有的信息都说xtrabackup备份过程是绝对不会锁innodb表的,只会锁MyISAM表,而业务表全是innodb,问题在哪里呢?继续往前看备份日志:

150825 01:04:09 innobackupex: Continuing after ibbackup has suspended

150825 01:04:09 innobackupex: Executing FLUSH TABLES WITH READ LOCK...

>> log scanned up to (547712171257)

>> log scanned up to (547712171257)

>> log scanned up to (547712171257)

。。。。。。

一堆log scanned up to

150825 06:55:44 innobackupex: All tables locked and flushed to disk

150825 06:55:44 innobackupex: Starting to backup non-InnoDB tables and files

。。。。。。

150825 06:55:57 innobackupex: All tables unlocked

很明显,xtrabackup在执行"FLUSH TABLES WITH READ LOCK"的时候被阻塞了,一直阻塞到06:55才执行成功。之前没太理解"FLUSH TABLES WITH READ LOCK"的威力,这个东西的执行,会遇到两种情况:

1)、很快执行成功,这样子后续的备份能较快完成。问题是"FLUSH TABLES WITH READ LOCK"到“All tables unlocked(unlock tables)"期间,肯定是会锁表的,这里不管你是innodb表还是MyISAM表。从上面的日志可以看出,锁了13秒,这13秒,所有的DML语句都会被阻塞。

2)、无法快速执行成功,这样灾难就来了,也就是本次故障发生的根源。什么会阻塞"FLUSH TABLES WITH READ LOCK"的执行呢?可以做下实验确认下

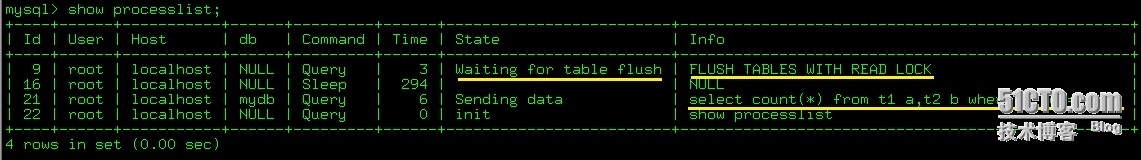

a、运行前有长查询没有执行完

这里可以看到,一个长查询就把"FLUSH TABLES WITH READ LOCK"给阻塞了。

b、运行前有任何表被显式加了锁

对任何一个表,比如t1,执行lock table t1 read,然后再在其它会话执行"FLUSH TABLES WITH READ LOCK",阻塞。如果对t1加写锁lock table t1 write,更会阻塞"FLUSH TABLES WITH READ LOCK"。

不管什么原因,"FLUSH TABLES WITH READ LOCK"被阻塞后,结果是很悲哀的。不仅所有库的DML语句会被阻塞,而且长查询或者显式加锁的数据库,查询都会有问题。

三、DB日志分析

可以从慢查询日志来验证一下前面的分析:

1、订单

# Time: 150825 6:55:57

# User@Host: root[root] @ [172.17.5.15] Id: 9922648

# Query_time: 1534.181557 Lock_time: 0.000000 Rows_sent: 0 Rows_examined: 0

SET timestamp=1440456957;

INSERT INTO `globallink_g`.`g_order` (`id`, `userid`, `create_date`, `modify_date`, `amount_paid`, `order_status`) VALUES (234977, 'test', '2015-08-25 06:36:39', '2015-08-25 06:36:42', 232, 1);

这么一条简单的sql,执行了近半个小时,而且在6:55:57才执行完成,这正好是备份完成(解锁的时刻)

2、报表

# Time: 150825 6:55:58

# User@Host: root[root] @ [192.168.1.200] Id: 9921401

# Query_time: 4173.803182 Lock_time: 0.000081 Rows_sent: 1 Rows_examined: 9742

SET timestamp=1440456958;

call g_getActiveImsi('201508250551','10008');

这个也是被阻塞了1个多小时,在备份完成后才执行完毕。

四、总结

1、该生产系统的数据库架构是传统的HA模式,即数据目录在共享磁阵,cluster软件监控mysql进程,不是流行的mysql master-slave架构,如果是master-slave架构,可以直接备份从库,这样子不会对主库有任何影响。

2、"xtrabackup全程备份不锁innodb表,只锁MyISAM表"这种说法有问题。经过测试,在innobackupex命令里面加个--no-lock参数,就真的不会锁表了,但使用这个参数有几个前提。

官方解释如下:

Use this option to disable table lock with FLUSH TABLES WITH READ LOCK. Use it only if ALL your tables are InnoDB and you DO NOT CARE about the binary log position of the backup. This option shouldn’ t be used if there are any DDL statements being executed or if any updates are happening on non-InnoDB tables (this includes the system MyISAM tables in the mysql database), otherwise it could lead to an inconsistent backup. If you are considering to use --no-lock because your backups are failing to acquire the lock,this could be because of incoming replication events preventing the lock from succeeding. Please try using

--safe-slave-backup to momentarily stop the replication slave thread, this may help the backup to succeed and you then don’t need to resort to using this option. xtrabackup_binlog_info is not created when �Cno-lock option is used (because SHOW MASTER STATUS may be inconsistent), but under certain conditions xtrabackup_binlog_pos_innodb can be used instead to get consistent binlog coordinates as described in Working with Binary Logs.

3、这个问题在业务不繁忙的系统上,可能还不容易显现。半夜1点,锁表锁个10几秒钟,问题不大。

如果在业务繁忙的系统,或者备份刚好和某个繁重的task(日结月结之类的)在一起运行,那么xtrabackup很有可能引起业务表长时间的锁定导致业务故障。

本文出自 “记忆碎片” 博客,谢绝转载!