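Hadoop-2.6.0 Study Notes (1): Building an HA Cluster

Work notes by Lu Chunli (鲁春利): the palest ink beats the best memory.

A Hadoop environment can be deployed in standalone, pseudo-distributed, or fully distributed (cluster) mode. These notes cover the cluster setup.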

Environment configuration

1. Download Hadoop

Download the Hadoop release from http://hadoop.apache.org/.

2. Unpack the tarball

[hadoop@nnode lucl]$ tar -xzv -f hadoop-2.6.0.tar.gz

3. Configure the Hadoop environment variables

[hadoop@nnode lucl]$ vim ~/.bash_profile
Add the following settings:
# Hadoop
export HADOOP_HOME=/lucl/hadoop-2.6.0
export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
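The profile edit above can also be scripted so that it is safe to repeat on every node. The following is a minimal sketch, not part of the original notes: the function name `add_hadoop_env` is made up for illustration, and the defaults simply reuse the paths from this post.

```shell
# Append the Hadoop variables to a profile file unless they are already there.
# $1 = profile file (default ~/.bash_profile), $2 = Hadoop directory.
add_hadoop_env() {
  local profile="${1:-$HOME/.bash_profile}"
  local hadoop_dir="${2:-/lucl/hadoop-2.6.0}"
  if grep -q '^export HADOOP_HOME=' "$profile" 2>/dev/null; then
    return 0   # already configured, leave the file untouched
  fi
  {
    echo '# Hadoop'
    echo "export HADOOP_HOME=$hadoop_dir"
    # Single quotes keep $HADOOP_HOME and $PATH unexpanded in the profile.
    echo 'export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH'
  } >> "$profile"
}
```

After calling `add_hadoop_env`, run `source ~/.bash_profile` (or log in again) so that the current shell picks up the new PATH.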

4. Edit the configuration file hadoop-env.sh

# Environment variables used by Hadoop at runtime
export JAVA_HOME=/lucl/jdk1.7.0_80
export HADOOP_HOME=/lucl/hadoop-2.6.0
export HADOOP_CONF_DIR=/lucl/hadoop-2.6.0/etc/hadoop

Note: this file lives in /lucl/hadoop-2.6.0/etc/hadoop/, as do all the configuration files below.

5. Edit the configuration file mapred-env.sh

# Set JAVA_HOME
export JAVA_HOME=/lucl/jdk1.7.0_80

6. Edit the configuration file yarn-env.sh

# Set JAVA_HOME
export JAVA_HOME=/lucl/jdk1.7.0_80

7. Edit the configuration file core-site.xml

<configuration>

<!-- version of this configuration file -->

<property>

<name>hadoop.common.configuration.version</name>

<value>0.23.0</value>

</property>

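<!-- The default HDFS path. When several HDFS clusters are in use and the
user does not name a cluster, this is the one that is used.
The value must match dfs.nameservices in hdfs-site.xml. -->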

<property>

<name>fs.defaultFS</name>

<value>hdfs://cluster</value>

</property>

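<!-- Base directory under which NameNode, DataNode, JournalNode and other
daemons keep their data by default. Each of them can also be given its own directory. -->

<!-- Default: /tmp/hadoop-${user.name}. On Linux /tmp is easily wiped,
so set a directory of your own. -->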

<property>

<name>hadoop.tmp.dir</name>

<value>/lucl/storage/hadoop/tmp</value>

</property>

<!-- config zookeeper for ha -->

<!-- Addresses and ports of the ZooKeeper ensemble. Use an odd number of
nodes, and at least three. -->

<property>

<name>ha.zookeeper.quorum</name>

<value>nnode:2181,dnode1:2181,dnode2:2181</value>

</property>

</configuration>
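As a quick sanity check on the values above, the properties can be read back from the file and the ZooKeeper quorum size counted. This is only a sketch under stated assumptions: `get_prop` and `quorum_size` are hypothetical helper names, and the awk parsing assumes each `<name>` and `<value>` element sits on a single line, as in this post. It is not a general XML parser.

```shell
# Print the <value> of the first property whose <name> equals $2 in file $1.
get_prop() {
  awk -v key="$2" '
    /<name>/ { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n); hit = (n == key) }
    hit && /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v); print v; exit }
  ' "$1"
}

# Count the host:port entries in a comma-separated ZooKeeper quorum string.
quorum_size() {
  echo "$1" | awk -F',' '{ print NF }'
}

# Example use (paths from this post):
#   conf=/lucl/hadoop-2.6.0/etc/hadoop/core-site.xml
#   get_prop "$conf" fs.defaultFS
#   n=$(quorum_size "$(get_prop "$conf" ha.zookeeper.quorum)")
#   [ $((n % 2)) -eq 1 ] || echo "warning: even number of ZooKeeper nodes"
```

On a node where Hadoop is already installed, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves, which is the more authoritative check.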

8. Edit the configuration file hdfs-site.xml

<configuration>

<!-- version of this configuration file -->

<property>

<name>hadoop.hdfs.configuration.version</name>

<value>1</value>

</property>

<!-- Number of replicas kept for each block. This cluster has two DataNodes,
so it is set to 2. The default is 3. -->

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

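<!-- Where on the local file system the DFS NameNode stores the name table (fsimage). -->

<!-- In other words, the HDFS metadata ultimately lives on the Linux host that runs the NameNode. -->

<!-- If this is a comma-delimited list of directories, the name table is
replicated in all of them for redundancy. -->

<!-- Default: file://${hadoop.tmp.dir}/dfs/name -->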

<property>

<name>dfs.namenode.name.dir</name>

<value>/lucl/storage/hadoop/name</value>

</property>

<!-- Where on the local file system the DFS NameNode stores the
transaction (edits) file. Default: ${dfs.namenode.name.dir}. -->

<!-- If this is a comma-delimited list of directories, the transaction (edits)
file is replicated in all of them for redundancy. -->

<property>

<name>dfs.namenode.edits.dir</name>

<value>/lucl/storage/hadoop/edits</value>

</property>

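<!-- Where on the local file system a DFS DataNode stores its blocks. -->

<!-- If this is a comma-delimited list of directories, data is stored in all of them,
typically on different devices. Directories that do not exist are ignored. -->

<!-- Default: file://${hadoop.tmp.dir}/dfs/data -->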

<property>

<name>dfs.datanode.data.dir</name>

<value>/lucl/storage/hadoop/data</value>

</property>

<!-- Default: true. Enables WebHDFS on NameNodes and DataNodes (reached through port 50070). -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<!-- Whether HDFS permission checking is enabled. I am not familiar with it yet, so it is disabled for now. -->

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<!-- The following settings are for HA only -->

<!-- Comma-separated list of nameservices. -->

<!-- I have a single cluster with one namespace and one nameservice; the name is user-defined. -->

<property>

<name>dfs.nameservices</name>

<value>cluster</value>

</property>

<!-- dfs.ha.namenodes.EXAMPLENAMESERVICE -->

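<!-- EXAMPLENAMESERVICE is a placeholder for the nameservice, here cluster. The value lists
the NameNodes of that nameservice. These are logical names too: choose anything, as long as they are unique. -->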

<property>

<name>dfs.ha.namenodes.cluster</name>

<value>nn1,nn2</value>

</property>

<!-- for rpc connection -->

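<!-- RPC address that handles client requests. With HA/Federation, where several NameNodes exist,
the property name is suffixed with the user-defined nameservice and NameNode ids. -->

<!-- Hadoop's architecture is built on RPC: the NameNode and similar daemons are RPC servers,
while FileSystem and the like are RPC clients. -->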

<property>

<name>dfs.namenode.rpc-address.cluster.nn1</name>

<value>nnode:8020</value>

</property>

<!-- The other NameNode -->

<property>

<name>dfs.namenode.rpc-address.cluster.nn2</name>

<value>dnode1:8020</value>

</property>

<!-- for http connection -->

<!-- Default: 0.0.0.0:50070. Address and port the NameNode web UI listens on.
Once the NameNode is up, its status can be viewed there. -->

<property>

<name>dfs.namenode.http-address.cluster.nn1</name>

<value>nnode:50070</value>

</property>

<!-- Same as above -->

<property>

<name>dfs.namenode.http-address.cluster.nn2</name>

<value>dnode1:50070</value>

</property>

<!-- for connection with namenodes-->

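<!-- RPC address for HDFS service communication. If it is configured, BackupNode, DataNodes
and all other services should connect to this address. -->

<!-- With HA/Federation, where several NameNodes exist, append the ids in the form nameservice.namenode. -->

<!-- If this parameter is not set, dfs.namenode.rpc-address is used as the default. -->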

<property>

<name>dfs.namenode.servicerpc-address.cluster.nn1</name>

<value>nnode:53310</value>

</property>

<!-- Same as above -->

<property>

<name>dfs.namenode.servicerpc-address.cluster.nn2</name>

<value>dnode1:53310</value>

</property>

<!-- namenode.shared.edits -->

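<!-- In HA, the JournalNode ensemble that serves as the shared edits storage between the NameNodes. -->

<!-- The active NameNode writes to it and the standby NameNode reads from it,
which keeps the namespaces in sync. -->

<!-- This directory does not need to be listed in dfs.namenode.edits.dir. Leave it empty in a non-HA cluster. -->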

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://nnode:8485;dnode1:8485;dnode2:8485/cluster</value>

</property>

<!-- journalnode.edits.dir -->

<!-- the path where the JournalNode daemon will store its local state -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/lucl/storage/hadoop/journal</value>

</property>

<!-- failover proxy -->

<!-- The class that HDFS clients of the nameservice cluster use to locate the active NameNode and to switch over after a failover -->

<property>

<name>dfs.client.failover.proxy.provider.cluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- automatic-failover -->

<!-- Whether automatic failover is enabled, i.e. whether the cluster switches to the other NameNode automatically when the active one fails -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- When a NameNode failover is needed, fence the previously active NameNode over SSH -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<!-- Location of the private key used for the SSH connection when fencing with sshfence -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_dsa</value>

</property>

</configuration>
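The HA property names above follow a fixed pattern built from the nameservice id and the NameNode ids, and the shared edits URI is built from the JournalNode hosts. The sketch below spells that pattern out; `ha_keys` and `qjournal_uri` are hypothetical helper names, and the three address kinds are simply the ones configured in this post.

```shell
# Print the per-NameNode property names used in this post.
# $1 = nameservice id, $2 = comma-separated NameNode ids.
ha_keys() {
  local ns="$1" id kind
  for id in $(echo "$2" | tr ',' ' '); do
    for kind in rpc-address http-address servicerpc-address; do
      echo "dfs.namenode.$kind.$ns.$id"
    done
  done
}

# Build the dfs.namenode.shared.edits.dir value.
# $1 = nameservice id, $2 = JournalNode port, remaining args = JournalNode hosts.
qjournal_uri() {
  local ns="$1" port="$2" hosts="" h
  shift 2
  for h in "$@"; do
    hosts="${hosts:+$hosts;}$h:$port"   # join host:port pairs with ';'
  done
  echo "qjournal://$hosts/$ns"
}
```

For example, `ha_keys cluster nn1,nn2` lists six property names, and `qjournal_uri cluster 8485 nnode dnode1 dnode2` reproduces the URI configured above.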

9. Edit the configuration file mapred-site.xml

<configuration>

<!-- The runtime framework for executing MapReduce jobs.

Can be one of local, classic or yarn. -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- The host and port that the MapReduce job tracker runs at. -->

<property>

<name>mapreduce.jobtracker.address</name>

<value>nnode:9001</value>

</property>

<!-- The job tracker http server address and port the server will listen on.
Default: 0.0.0.0:50030. If the port is 0 then the server will start on a free port. -->

<property>

<name>mapreduce.jobtracker.http.address</name>

<value>nnode:50030</value>

</property>

<!-- The task tracker http server address and port. Default: 0.0.0.0:50060.
If the port is 0 then the server will start on a free port. -->

<property>

<name>mapreduce.tasktracker.http.address</name>

<value>nnode:50060</value>

</property>

<!-- MapReduce JobHistory Server IPC host:port -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>nnode:10020</value>

</property>

<!-- MapReduce JobHistory Server Web UI host:port -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>nnode:19888</value>

</property>

<!-- The directory where MapReduce stores control files.
Default: ${hadoop.tmp.dir}/mapred/system. -->

<property>

<name>mapreduce.jobtracker.system.dir</name>

<value>/lucl/storage/hadoop/mapred/system</value>

</property>

<!-- The root of the staging area for users' job files.
Default: ${hadoop.tmp.dir}/mapred/staging -->

<property>

<name>mapreduce.jobtracker.staging.root.dir</name>

<value>/lucl/storage/hadoop/mapred/staging</value>

</property>

<!-- A shared directory for temporary files.
Default: ${hadoop.tmp.dir}/mapred/temp -->

<property>

<name>mapreduce.cluster.temp.dir</name>

<value>/lucl/storage/hadoop/mapred/tmp</value>

</property>

<!-- The local directory where MapReduce stores intermediate data files.
Default: ${hadoop.tmp.dir}/mapred/local -->

<property>

<name>mapreduce.cluster.local.dir</name>

<value>/lucl/storage/hadoop/mapred/local</value>

</property>

</configuration>

10. Edit the configuration file yarn-site.xml

<configuration>
<!-- The hostname of the RM. It is still a single point of failure, which is a risk. -->
<property>
<name>yarn.resourcemanager.hostname</name>
<value>nnode</value>
</property>
<!-- The hostname of the NM.
<property>
<name>yarn.nodemanager.hostname</name>
<value>nnode</value>
</property>
-->
<!-- Auxiliary services run on the NodeManager. Must be set to mapreduce_shuffle for MapReduce to run. -->
<property>
<name>yarn.nodemanager.aux-services</name>
<value>mapreduce_shuffle</value>
</property>
<!-- This handler is already the default -->
<property>
<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
<value>org.apache.hadoop.mapred.ShuffleHandler</value>
</property>
<!-- Note: to run MapReduce programs, every NodeManager has to load the shuffle server
at startup. The shuffle server is in fact a Jetty/Netty server through which Reduce Tasks
remotely copy the intermediate results produced by Map Tasks on each NodeManager.
The two properties above both configure the shuffle server. -->
</configuration>

11. Configure the data nodes

Edit the slaves file to list all DataNode hosts, one hostname per line.

[hadoop@nnode ~]$ cd /lucl/hadoop-2.6.0/etc/hadoop/
[hadoop@nnode hadoop]$ cat slaves
dnode1
dnode2
[hadoop@nnode hadoop]$

12. Distribute the Hadoop installation

Copy the configured Hadoop installation to the other two machines.

[hadoop@nnode lucl]$ scp -r hadoop-2.6.0 hadoop@dnode1:/lucl/
[hadoop@nnode lucl]$ scp -r hadoop-2.6.0 hadoop@dnode2:/lucl/

13. Distribute the storage directories

[hadoop@nnode lucl]$ scp -r storage/hadoop dnode1:/lucl/storage/
[hadoop@nnode lucl]$ scp -r storage/hadoop dnode2:/lucl/storage/
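The two distribution steps can be folded into one loop over the worker hosts. This is a rough sketch that assumes every host uses the same /lucl layout as nnode (as HADOOP_HOME=/lucl/hadoop-2.6.0 on the workers suggests) and that passwordless SSH for the hadoop user is already set up. The helper names `run` and `distribute` are made up for illustration; with DRY_RUN=1 the commands are only printed.

```shell
# Execute a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Copy the Hadoop install and the storage tree to every host given as argument.
distribute() {
  local host
  for host in "$@"; do
    run scp -r /lucl/hadoop-2.6.0 "hadoop@$host:/lucl/"
    run scp -r /lucl/storage/hadoop "hadoop@$host:/lucl/storage/"
  done
}
```

Running `DRY_RUN=1` followed by `distribute dnode1 dnode2` shows the four scp commands without copying anything, which is a cheap way to review the targets first.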

14. Configure dnode1 and dnode2

On both hosts, add the same Hadoop variables to ~/.bash_profile as in step 3:

# Hadoop
export HADOOP_HOME=/lucl/hadoop-2.6.0
export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

Starting the Hadoop cluster

1. Start the ZooKeeper ensemble

Run zkServer.sh start on each of the three machines nnode, dnode1 and dnode2.

# Node nnode
[hadoop@nnode ~]$ zkServer.sh start
JMX enabled by default
Using config: /lucl/zookeeper-3.4.6/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[hadoop@nnode ~]$
# Node dnode1
[hadoop@dnode1 ~]$ zkServer.sh start
JMX enabled by default
Using config: /lucl/zookeeper-3.4.6/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[hadoop@dnode1 ~]$
# Node dnode2
[hadoop@dnode2 ~]$ zkServer.sh start
JMX enabled by default
Using config: /lucl/zookeeper-3.4.6/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[hadoop@dnode2 ~]$
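"STARTED" only means the process was launched; `zkServer.sh status` reports whether the node actually joined the ensemble, printing a `Mode:` line (leader, follower or standalone). The small filter below extracts that role. It is a sketch: `zk_mode` is a hypothetical helper name, and the commented loop assumes passwordless SSH and that zkServer.sh is on the PATH of non-interactive shells.

```shell
# Read `zkServer.sh status` output on stdin and print only the role.
zk_mode() {
  sed -n 's/^Mode: //p'
}

# Example use on the three nodes of this post:
#   for h in nnode dnode1 dnode2; do
#     printf '%s: ' "$h"
#     ssh "hadoop@$h" zkServer.sh status 2>&1 | zk_mode
#   done
```

A healthy three-node ensemble should show exactly one leader and two followers; an empty result for a host means that node is not serving requests yet.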

2. Format ZooKeeper for HA; this creates the znode under which the HA state is kept

[hadoop@nnode ~]$ hdfs zkfc -formatZK

Note: run hdfs zkfc --help to see the command's usage.

The following output indicates that the format succeeded.

2016-01-17 05:56:25 INFO ha.ActiveStandbyElector: Session connected.
2016-01-17 05:56:25 INFO ha.ActiveStandbyElector: Successfully created /hadoop-ha/cluster in ZK.
2016-01-17 05:56:25 INFO zookeeper.ZooKeeper: Session: 0x77524fcabbde0001 closed
2016-01-17 05:56:25 INFO zookeeper.ClientCnxn: EventThread shut down

Use zkCli.sh to inspect the znodes in the ZooKeeper ensemble.

WatchedEvent state:SyncConnected type:None path:null
[zk: localhost:2181(CONNECTED) 0] ls /
[hadoop-ha, zookeeper]
[zk: localhost:2181(CONNECTED) 1] ls /hadoop-ha
[cluster]
[zk: localhost:2181(CONNECTED) 2] ls /hadoop-ha/cluster
[]
[zk: localhost:2181(CONNECTED) 3] get /hadoop-ha/cluster
cZxid = 0x200000009
ctime = Sun Jan 17 05:56:24 PST 2016
mZxid = 0x200000009
mtime = Sun Jan 17 05:56:24 PST 2016
pZxid = 0x200000009
cversion = 0
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 0
numChildren = 0
[zk: localhost:2181(CONNECTED) 4]

Note: a new hadoop-ha znode has been created.

3. Start the JournalNode ensemble

Run hadoop-daemon.sh start journalnode on each of the three nodes.

[hadoop@nnode ~]$ hadoop-daemon.sh start journalnode
[hadoop@dnode1 ~]$ hadoop-daemon.sh start journalnode
[hadoop@dnode2 ~]$ hadoop-daemon.sh start journalnode
# Note: this is hadoop-daemon.sh, not hadoop-daemons.sh (no trailing s after daemon)

Note: afterwards jps shows an additional JournalNode process (QuorumPeerMain is the ZooKeeper process).
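Checking jps by eye on three nodes gets tedious, so the comparison can be automated. The function below is a sketch (`missing_daemons` is a hypothetical name): it reads jps output on stdin and prints every expected daemon that is not running.

```shell
# Print each daemon name given as an argument that does not appear
# in the second column of the `jps` output supplied on stdin.
missing_daemons() {
  local out name
  out=$(cat)
  for name in "$@"; do
    echo "$out" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' \
      || echo "$name"
  done
}

# Example use at this step:
#   jps | missing_daemons QuorumPeerMain JournalNode
```

No output means every listed daemon was found. The same function can be reused after later steps by adding NameNode, DataNode or DFSZKFailoverController to the argument list.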

4. Format the cluster's NameNode

[hadoop@nnode ~]$ hdfs namenode -format
# hdfs can be run from any directory here because Hadoop's bin directory is already on my PATH
# (output trimmed)
2016-01-17 06:02:25 INFO util.GSet: Computing capacity for map NameNodeRetryCache
2016-01-17 06:02:25 INFO util.GSet: VM type = 64-bit
2016-01-17 06:02:25 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB
2016-01-17 06:02:25 INFO util.GSet: capacity = 2^15 = 32768 entries
2016-01-17 06:02:25 INFO namenode.NNConf: ACLs enabled? false
2016-01-17 06:02:25 INFO namenode.NNConf: XAttrs enabled? true
2016-01-17 06:02:25 INFO namenode.NNConf: Maximum size of an xattr: 16384
2016-01-17 06:02:28 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1345724338-192.168.137.117-1453039348319
2016-01-17 06:02:28 INFO common.Storage: Storage directory /lucl/storage/hadoop/name has been successfully formatted.
2016-01-17 06:02:29 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
2016-01-17 06:02:29 INFO util.ExitUtil: Exiting with status 0
2016-01-17 06:02:29 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at nnode/192.168.137.117
************************************************************/

5. Start the NameNode

[hadoop@nnode ~]$ hadoop-daemon.sh start namenode
starting namenode, logging to /usr/local/hadoop2.6.0/logs/hadoop-hadoop-namenode-nnode.out
# Check the NameNode process
[hadoop@nnode ~]$ jps
2970 NameNode
3123 Jps
2858 QuorumPeerMain
2908 JournalNode
[hadoop@nnode ~]$

6. Synchronize the NameNode metadata to the standby

# Run the following command on host dnode1
[hadoop@dnode1 ~]$ hdfs namenode -bootstrapStandby
# Note: the hyphen before bootstrapStandby must be a plain ASCII hyphen; otherwise the command always fails
# Output:
16/01/18 12:41:33 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at dnode1/192.168.137.118
************************************************************/

7. Start the other NameNode

[hadoop@dnode1 ~]$ hadoop-daemon.sh start namenode
starting namenode, logging to /lucl/hadoop-2.6.0/logs/hadoop-hadoop-namenode-dnode1.out
[hadoop@dnode1 ~]$
[hadoop@dnode1 ~]$ jps
2770 NameNode
2857 Jps
2334 QuorumPeerMain
2570 JournalNode
[hadoop@dnode1 ~]$

8. Start all DataNodes

[hadoop@nnode ~]$ hadoop-daemons.sh start datanode
dnode1: starting datanode, logging to /usr/local/hadoop2.6.0/logs/hadoop-hadoop-datanode-dnode1.out
dnode2: starting datanode, logging to /usr/local/hadoop2.6.0/logs/hadoop-hadoop-datanode-dnode2.out
[hadoop@nnode ~]$
# The command above is hadoop-daemons.sh (this time daemon does end in s)
# Processes on dnode1
[hadoop@dnode1 ~]$ jps
12431 JournalNode
12534 NameNode
11636 QuorumPeerMain
12651 DataNode
12737 Jps
[hadoop@dnode1 ~]$
# Processes on dnode2
[hadoop@dnode2 ~]$ jps
12477 Jps
12400 DataNode
11566 QuorumPeerMain
12286 JournalNode
[hadoop@dnode2 ~]$

9. Start YARN

[hadoop@nnode ~]$ start-yarn.sh
starting yarn daemons
starting resourcemanager, logging to /usr/local/hadoop2.6.0/logs/yarn-hadoop-resourcemanager-nnode.out
dnode1: starting nodemanager, logging to /usr/local/hadoop2.6.0/logs/yarn-hadoop-nodemanager-dnode1.out
dnode2: starting nodemanager, logging to /usr/local/hadoop2.6.0/logs/yarn-hadoop-nodemanager-dnode2.out
[hadoop@nnode ~]$

10. Start the ZooKeeperFailoverController

# NameNodes are configured on nnode and dnode1, so run this on those two nodes
[hadoop@nnode ~]$ hadoop-daemon.sh start zkfc
starting zkfc, logging to /usr/local/hadoop2.6.0/logs/hadoop-hadoop-zkfc-nnode.out
[hadoop@nnode ~]$
[hadoop@nnode ~]$ jps
12629 NameNode
12385 JournalNode
13571 Jps
11637 QuorumPeerMain
13218 ResourceManager
13502 DFSZKFailoverController
[hadoop@dnode1 ~]$ hadoop-daemon.sh start zkfc
starting zkfc, logging to /lucl/hadoop-2.6.0/logs/hadoop-hadoop-zkfc-dnode1.out
[hadoop@dnode1 ~]$

11. Verify that HDFS works

[hadoop@dnode1 ~]$ hdfs dfs -ls -R /
16/01/18 12:47:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[hadoop@dnode1 ~]$
[hadoop@dnode1 ~]$ hdfs dfs -mkdir -p /user/hadoop
16/01/18 12:47:49 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[hadoop@dnode1 ~]$ hdfs dfs -ls -R /
16/01/18 12:47:58 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
drwxr-xr-x - hadoop supergroup 0 2016-01-18 12:47 /user
drwxr-xr-x - hadoop supergroup 0 2016-01-18 12:47 /user/hadoop
[hadoop@dnode1 ~]$

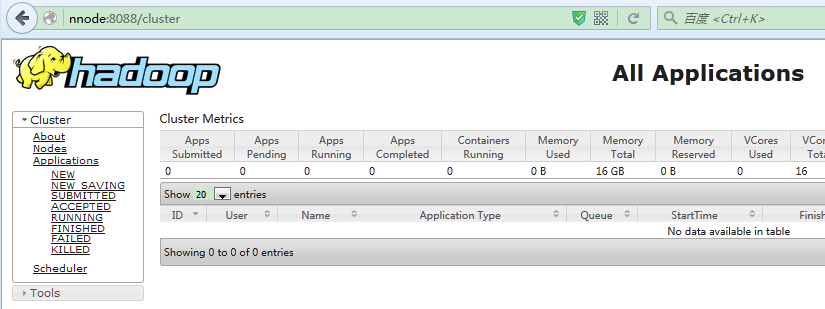

12. Verify that YARN works

# The following address is reachable
http://nnode:8088/cluster

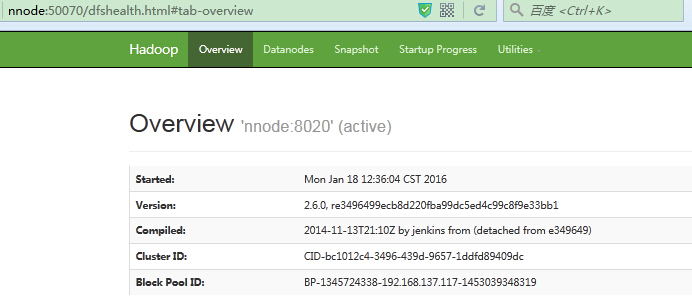

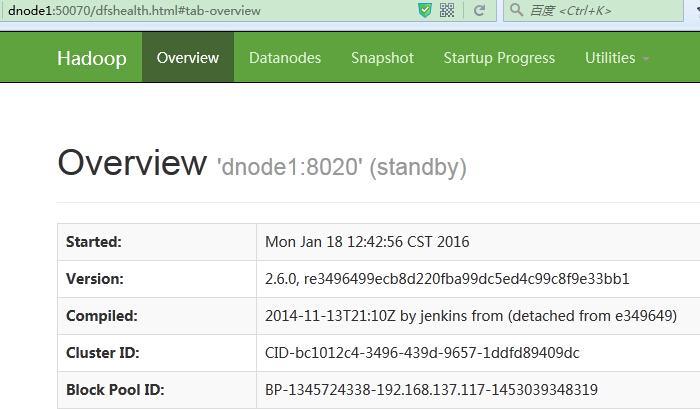

13. Verify that automatic HA failover works

http://nnode:50070
http://dnode1:50070
# At this point one NameNode is active and the other is standby. After the active NameNode
# process is killed, the standby NameNode becomes active.
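The same check can be made from the command line with `hdfs haadmin -getServiceState`, which prints active or standby for a given NameNode id. The wrapper below is a sketch (`find_active` is a hypothetical name) that reports which of the configured ids, nn1 and nn2 in this post, is currently active.

```shell
# Print the id of the first NameNode that reports itself as active.
# Arguments are the NameNode ids from dfs.ha.namenodes.<nameservice>.
find_active() {
  local id state
  for id in "$@"; do
    state=$(hdfs haadmin -getServiceState "$id" 2>/dev/null)
    if [ "$state" = "active" ]; then
      echo "$id"
      return 0
    fi
  done
  return 1   # no NameNode is active (or none could be reached)
}
```

To exercise the failover, note the result of `find_active nn1 nn2`, kill the NameNode process on that host, wait a few seconds, and call it again; it should then print the other id.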

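Summary

Once the Hadoop cluster has been deployed, later restarts follow the steps below (starting everything with start-all.sh makes errors harder to track down).

1. Start the ZooKeeper ensemble

Run zkServer.sh start on each of the three nodes.

2. Start the JournalNodes

Run hadoop-daemon.sh start journalnode on each of the three nodes.

3. Start the NameNodes

Run hadoop-daemon.sh start namenode on nnode and then on dnode1.

4. Start the DataNodes

Run hadoop-daemons.sh start datanode on any one node.

5. Start YARN

Run start-yarn.sh on any one node.

6. Start the ZKFailoverControllers

Run hadoop-daemon.sh start zkfc on nnode and then on dnode1.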
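The restart order above can be captured in a single script run from nnode. This is only a sketch under several assumptions: passwordless SSH for the hadoop user, the ZooKeeper and Hadoop scripts being on the PATH of non-interactive SSH shells (which ~/.bash_profile alone may not guarantee), and the start-yarn.sh step being run on nnode because the ResourceManager is configured there. `run_on` and `start_cluster` are hypothetical names; with DRY_RUN=1 the commands are printed instead of executed.

```shell
# Run a command on a host over SSH, or just print it when DRY_RUN=1.
run_on() {
  local host="$1"
  shift
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "ssh hadoop@$host $*"
  else
    ssh "hadoop@$host" "$@"
  fi
}

# Start the daemons in the order listed in the summary.
start_cluster() {
  local h
  for h in nnode dnode1 dnode2; do run_on "$h" zkServer.sh start; done
  for h in nnode dnode1 dnode2; do run_on "$h" hadoop-daemon.sh start journalnode; done
  for h in nnode dnode1; do run_on "$h" hadoop-daemon.sh start namenode; done
  run_on nnode hadoop-daemons.sh start datanode
  run_on nnode start-yarn.sh
  for h in nnode dnode1; do run_on "$h" hadoop-daemon.sh start zkfc; done
}
```

Keeping each step as a separate SSH call preserves the benefit mentioned above: when a step fails, it is clear which daemon on which host to look at.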

The following warning appears at startup: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform

The native libraries bundled with this Hadoop build are 32-bit while the Linux system is 64-bit, so they cannot be loaded. Download a matching native build and replace them:

# Run on each of the three hosts
[hadoop@nnode lib]$ pwd
/lucl/hadoop-2.6.0/lib
[hadoop@nnode lib]$ ll
total 4
drwxr-xr-x 2 hadoop hadoop 4096 Nov 14 2014 native
[hadoop@nnode lib]$ mv native native_x86
[hadoop@nnode lib]$ cp -r /mnt/hgfs/Share/native .
[hadoop@nnode lib]$ ll
total 8
drwxrwxr-x 2 hadoop hadoop 4096 Jan 18 13:08 native
drwxr-xr-x 2 hadoop hadoop 4096 Nov 14 2014 native_x86
[hadoop@nnode lib]$

After the replacement the warning is gone.

[hadoop@nnode lib]$ hdfs dfs -ls -R /
drwxr-xr-x - hadoop supergroup 0 2016-01-18 12:47 /user
drwxr-xr-x - hadoop supergroup 0 2016-01-18 12:47 /user/hadoop
[hadoop@nnode lib]$
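Before swapping libraries it can help to confirm the word-size mismatch described above. The standard `file` utility reports whether a shared object is a 32-bit or 64-bit ELF binary, and `getconf LONG_BIT` reports the word size of the system. The classifier below is a sketch; `elf_bits` is a hypothetical helper name, and the library file name in the example is an assumption about what the native directory contains.

```shell
# Classify one line of `file` output as 32, 64 or unknown.
elf_bits() {
  case "$1" in
    *"ELF 64-bit"*) echo 64 ;;
    *"ELF 32-bit"*) echo 32 ;;
    *) echo unknown ;;
  esac
}

# Example use (library file name assumed):
#   lib=/lucl/hadoop-2.6.0/lib/native/libhadoop.so
#   [ "$(elf_bits "$(file -L "$lib")")" = "$(getconf LONG_BIT)" ] \
#     || echo "native library word size does not match the system"
```

If the word sizes already match and the warning persists, the cause lies elsewhere (for example a missing system library), and replacing the native directory is unlikely to help.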