ZooKeeper-3.4.6 Study Notes (Part 1): Cluster Configuration

Lu Chunli's work notes: the palest ink is better than the best memory.

System Environment Preparation

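1. Operating System

Three virtual machines are installed in VMware, running CentOS-6.5-x86_64.

The OS installation steps are omitted. During installation, create the user hadoop with the password password.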

2. Host Information

| IP | Hostname | Memory | Disk | Users (user/password) | Notes |
|---|---|---|---|---|---|
| 192.168.137.117 | nnode | 2G | 20G | root/root, hadoop/password | |
| 192.168.137.118 | dnode1 | 2G | 20G | root/root, hadoop/password | |
| 192.168.137.119 | dnode2 | 2G | 20G | root/root, hadoop/password | |

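3. Change the Hostname

```bash
# Check the current hostname
[root@nnode ~]# hostname
nnode
[root@nnode ~]#
# Change the hostname
[root@nnode ~]# vim /etc/sysconfig/network
NETWORKING=yes
HOSTNAME=nnode    # hostname
```

Note: the three nodes are named nnode, dnode1 and dnode2 respectively. CentOS 6 reads this file at boot. To apply the new name to the running system immediately, also run `hostname <name>`.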
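If you would rather script the change than edit the file in vim, here is a minimal sketch that assumes the CentOS 6 file layout shown above. `set_network_hostname` is a hypothetical helper name. Its optional second argument exists only so the function can be tried on a scratch copy of the file.

```bash
# Hypothetical helper: set HOSTNAME=<name> in a CentOS 6-style network file.
# $1 = new hostname, $2 = file (defaults to /etc/sysconfig/network)
set_network_hostname() {
  local name="$1"
  local file="${2:-/etc/sysconfig/network}"
  if grep -q '^HOSTNAME=' "$file"; then
    # Replace the existing HOSTNAME line in place
    sed -i "s/^HOSTNAME=.*/HOSTNAME=${name}/" "$file"
  else
    # No HOSTNAME line yet: append one
    echo "HOSTNAME=${name}" >> "$file"
  fi
}

# Usage on dnode1, as root:
#   set_network_hostname dnode1
#   hostname dnode1    # apply now; the file itself is only read at boot
```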

4. Edit the hosts File

```bash
[root@nnode ~]# vim /etc/hosts
# 127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
# ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.137.117 nnode nnode
192.168.137.118 dnode1 dnode1
192.168.137.119 dnode2 dnode2
```

Note: the two original lines for 127.0.0.1 and ::1 are commented out. Use the same entries on all three nodes.
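The file has to be identical on all three nodes, so it helps to add the entries with a loop that is safe to re-run. Below is a minimal sketch. `add_cluster_hosts` is a hypothetical name, and unlike the `nnode nnode` form above, it writes a single name per address.

```bash
# Hypothetical helper: append the cluster's address/name pairs to a hosts file,
# skipping any hostname that already appears there.
# $1 = hosts file (defaults to /etc/hosts)
add_cluster_hosts() {
  local file="${1:-/etc/hosts}"
  local entry ip name
  for entry in "192.168.137.117 nnode" "192.168.137.118 dnode1" "192.168.137.119 dnode2"; do
    ip=${entry% *}      # text before the space
    name=${entry#* }    # text after the space
    # -w matches whole words only, so "dnode1" does not match "dnode10"
    if ! grep -qw "$name" "$file"; then
      echo "$ip $name" >> "$file"
    fi
  done
}
```

After running it on each node, `getent hosts dnode1` should print the address that the name resolves to.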

5. Disable the Firewall

```bash
# Check the status
service iptables status
# Stop it for the current session
service iptables stop
# Keep it from starting at boot
chkconfig iptables off
```

6. Disable SELinux

```bash
[root@nnode ~]# vim /etc/sysconfig/selinux
# This file controls the state of SELinux on the system.
# SELINUX= can take one of these three values:
#     enforcing - SELinux security policy is enforced.
#     permissive - SELinux prints warnings instead of enforcing.
#     disabled - No SELinux policy is loaded.
# SELINUX=enforcing
SELINUX=disabled
# SELINUXTYPE= can take one of these two values:
#     targeted - Targeted processes are protected,
#     mls - Multi Level Security protection.
SELINUXTYPE=targeted
```
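Here is a scripted equivalent of the vim edit, offered as a hedged sketch. It assumes the CentOS 6 layout, where /etc/sysconfig/selinux is a symbolic link to /etc/selinux/config, so it edits the link target directly. Running GNU `sed -i` on the symlink itself would replace the link with a regular file. `disable_selinux_config` is a hypothetical name.

```bash
# Hypothetical helper: set SELINUX=disabled in the SELinux config file.
# $1 = config file (defaults to the symlink target /etc/selinux/config)
disable_selinux_config() {
  local file="${1:-/etc/selinux/config}"
  # ^SELINUX= does not match SELINUXTYPE= or the commented "# SELINUX=" lines
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# The file is only read at boot. To stop enforcing right away:
#   setenforce 0    # permissive until the next reboot
#   getenforce      # should now print "Permissive"
```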

7. Configure a Static IP

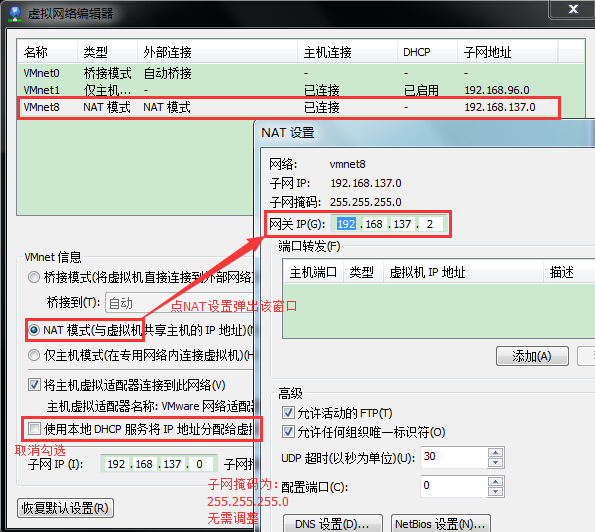

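Note:

The VMs use VMware's NAT network mode, so that communication between the VMs and the physical host does not depend on the subnet the physical host is on.

In VMware, open Edit --> Virtual Network Editor, select VMnet8, and choose NAT mode under VMnet Information.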

```bash
[root@nnode ~]# vim /etc/sysconfig/network-scripts/ifcfg-eth0
DEVICE="eth0"
# Static IP
BOOTPROTO=static
IPV6INIT="yes"
NM_CONTROLLED="yes"
ONBOOT="yes"
TYPE="Ethernet"
UUID="81c9009f-6b9f-4f72-8a38-ab91fecf788a"
HWADDR=00:0C:29:67:EF:06
# IP address
IPADDR=192.168.137.117
# Netmask 255.255.255.0
PREFIX=24
# Gateway: the address VMware assigns in its NAT settings
GATEWAY=192.168.137.2
# A free public DNS server
DNS1=114.114.115.115
DEFROUTE=yes
IPV4_FAILURE_FATAL=yes
IPV6_AUTOCONF=yes
IPV6_DEFROUTE=yes
IPV6_PEERDNS=yes
IPV6_PEERROUTES=yes
IPV6_FAILURE_FATAL=no
# Auto-generated connection name
NAME="System eth0"
[root@nnode ~]#
```

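Note: this can be configured either through the GUI or in the configuration file. The other two machines are configured the same way, each with its own IPADDR, HWADDR and UUID. Apply the change with `service network restart`.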

8. Check the IP Configuration

```bash
[root@nnode ~]# ifconfig
eth0      Link encap:Ethernet  HWaddr 00:0C:29:67:EF:06
          inet addr:192.168.137.117  Bcast:192.168.137.255  Mask:255.255.255.0
          inet6 addr: fe80::20c:29ff:fe67:ef06/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:1814 errors:0 dropped:0 overruns:0 frame:0
          TX packets:658 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:186698 (182.3 KiB)  TX bytes:75045 (73.2 KiB)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING  MTU:16436  Metric:1
          RX packets:8 errors:0 dropped:0 overruns:0 frame:0
          TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:480 (480.0 b)  TX bytes:480 (480.0 b)

[root@nnode ~]#
```

9. Verify Network Connectivity

```bash
[root@nnode ~]# ping www.baidu.com
PING www.a.shifen.com (119.75.218.70) 56(84) bytes of data.
64 bytes from 119.75.218.70: icmp_seq=1 ttl=128 time=7.57 ms
64 bytes from 119.75.218.70: icmp_seq=2 ttl=128 time=7.52 ms
64 bytes from 119.75.218.70: icmp_seq=3 ttl=128 time=6.02 ms
64 bytes from 119.75.218.70: icmp_seq=4 ttl=128 time=3.91 ms
--- www.a.shifen.com ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 2851ms
rtt min/avg/max/mdev = 3.917/5.585/7.577/1.312 ms
[root@nnode ~]#
```

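Install the JDK

To make it easier to share files between the physical host and the VMs, a shared folder is configured in VMware.

Right-click one of the VMs and choose Settings --> Options --> Shared Folders. Under Folder sharing, select "Always enabled", then add the shared directory.

Log in to Linux and verify the shared directory: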

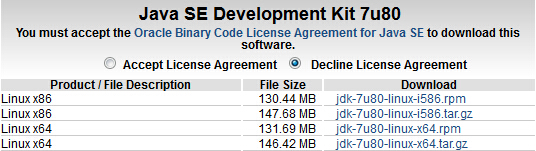

1. Download the JDK

Download URL:

http://www.oracle.com/technetwork/java/javase/downloads/jdk7-downloads-1880260.html

Version used: jdk-7u80-linux-x64.tar.gz

```bash
[root@nnode hgfs]# cd /mnt/hgfs/
[root@nnode hgfs]# ls
Share
[root@nnode hgfs]# cd Share/
[root@nnode Share]# cp jdk-7u80-linux-x64.tar.gz /lucl/
```

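2. Extract

```bash
[root@nnode lucl]# tar -xzv -f jdk-7u80-linux-x64.tar.gz
```

Notes:

Extract the JDK tarball on each of the three machines.

All big-data programs are deployed under the /lucl directory.

The programs are run as the hadoop user (chown -R hadoop:hadoop /lucl).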

3. Configure the Java Environment Variables

```bash
[root@nnode lucl]# vim /etc/profile
# Append:
export JAVA_HOME=/lucl/jdk1.7.0_80
export PATH=$PATH:$JAVA_HOME/bin
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
```

4. Verify the Configuration

```bash
[root@nnode lucl]# source /etc/profile
[root@nnode ~]# java -version
java version "1.7.0_80"
Java(TM) SE Runtime Environment (build 1.7.0_80-b15)
Java HotSpot(TM) 64-Bit Server VM (build 24.80-b11, mixed mode)
[root@nnode ~]#
```

Configure Passwordless SSH Login

1. Generate a Public/Private Key Pair on Each of the Three Hosts

```bash
[hadoop@nnode ~]$ ssh-keygen -t rsa -P "" -f ~/.ssh/id_rsa
Generating public/private rsa key pair.
Your identification has been saved in /home/hadoop/.ssh/id_rsa.
Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.
The key fingerprint is:
48:03:e8:86:0a:59:77:94:f1:68:80:fc:28:29:a4:be hadoop@nnode
The key's randomart image is:
+--[ RSA 2048]----+
| . oo.oo |
| .= .ooo |
|o* + .= . |
|O + .o o |
|=o . S |
|.. |
| . |
| E |
| |
+-----------------+
[hadoop@nnode ~]$
```

2. Create the authorized_keys File

```bash
[hadoop@nnode ~]$ ll .ssh
total 8
-rw------- 1 hadoop hadoop 1671 Jan  9 19:15 id_rsa
-rw-r--r-- 1 hadoop hadoop  394 Jan  9 19:15 id_rsa.pub
[hadoop@nnode ~]$ cd .ssh/
[hadoop@nnode .ssh]$ cp id_rsa.pub authorized_keys
```

Note: run this on each of the three hosts.

3. Merge the Key Files

Copy the authorized_keys files from dnode1 and dnode2 to nnode:

```bash
# Copy authorized_keys from dnode1 to nnode
[hadoop@dnode1 .ssh]$ scp authorized_keys hadoop@nnode:/home/hadoop/dnode1_authorized_keys
The authenticity of host 'nnode (192.168.137.117)' can't be established.
RSA key fingerprint is 90:7a:48:8d:b9:ed:c9:92:56:01:f2:e7:49:99:1c:93.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'nnode,192.168.137.117' (RSA) to the list of known hosts.
hadoop@nnode's password:
authorized_keys                               100%  395     0.4KB/s   00:00
# Rename the local authorized_keys file
[hadoop@dnode1 .ssh]$ mv authorized_keys authorized_keys_backup
[hadoop@dnode1 .ssh]$
```

```bash
# Copy authorized_keys from dnode2 to nnode
[hadoop@dnode2 .ssh]$ scp authorized_keys hadoop@nnode:/home/hadoop/dnode2_authorized_keys
The authenticity of host 'nnode (192.168.137.117)' can't be established.
RSA key fingerprint is 90:7a:48:8d:b9:ed:c9:92:56:01:f2:e7:49:99:1c:93.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'nnode,192.168.137.117' (RSA) to the list of known hosts.
hadoop@nnode's password:
authorized_keys                               100%  395     0.4KB/s   00:00
# Rename the local authorized_keys file
[hadoop@dnode2 .ssh]$ mv authorized_keys authorized_keys_backup
[hadoop@dnode2 .ssh]$
```

```bash
# Merge the authorized_keys files
[hadoop@nnode .ssh]$ cat /home/hadoop/dnode1_authorized_keys >> authorized_keys
[hadoop@nnode .ssh]$ cat /home/hadoop/dnode2_authorized_keys >> authorized_keys
[hadoop@nnode .ssh]$ cd ..
# ~/.ssh must not be writable by group or others (sshd StrictModes); 700 is the usual setting
[hadoop@nnode ~]$ chmod 700 ~/.ssh
# 600 is recommended for authorized_keys
[hadoop@nnode ~]$ chmod 600 ~/.ssh/authorized_keys
```

```bash
# Distribute the merged authorized_keys to dnode1 and dnode2
[hadoop@nnode .ssh]$ scp authorized_keys hadoop@dnode1:/home/hadoop/.ssh
hadoop@dnode1's password:
authorized_keys                               100% 1184     1.2KB/s   00:00
[hadoop@nnode .ssh]$ scp authorized_keys hadoop@dnode2:/home/hadoop/.ssh
hadoop@dnode2's password:
authorized_keys                               100% 1184     1.2KB/s   00:00
[hadoop@nnode .ssh]$
```

```bash
# Fix the permissions: run on dnode1 and on dnode2
# ~/.ssh must not be writable by group or others (sshd StrictModes); 700 is the usual setting
chmod 700 ~/.ssh
# 600 is recommended for authorized_keys
chmod 600 ~/.ssh/authorized_keys
```
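Appending with `>>` duplicates keys if the merge is ever repeated. Here is a hedged sketch of a merge that drops duplicate lines. `merge_authorized_keys` is a hypothetical helper, and it assumes one key per line, which is the normal authorized_keys format. `sort -u` reorders the keys, but sshd does not depend on their order.

```bash
# Hypothetical helper: merge public-key files into an authorized_keys file,
# keeping the keys already in it and removing exact duplicates.
# $1 = target authorized_keys, remaining args = key files to merge in
merge_authorized_keys() {
  local out="$1" tmp
  shift
  tmp=$(mktemp) || return 1
  # Include the existing target, if any, so its keys are preserved
  if [ -f "$out" ]; then set -- "$@" "$out"; fi
  # Drop blank lines, then keep one copy of each distinct key line
  cat "$@" | grep -v '^[[:space:]]*$' | sort -u > "$tmp"
  mv "$tmp" "$out"
  chmod 600 "$out"
}
```

On nnode this would be called as `merge_authorized_keys ~/.ssh/authorized_keys ~/dnode1_authorized_keys ~/dnode2_authorized_keys` before the result is distributed. While password login still works, OpenSSH's `ssh-copy-id` (for example `ssh-copy-id hadoop@dnode1`) is a simpler alternative. It appends the local public key to the remote user's authorized_keys.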

4. Test and Verify

```bash
# Verify from nnode
[hadoop@nnode ~]$ ssh nnode
Last login: Sat Jan 9 19:25:18 2016 from nnode
[hadoop@nnode ~]$ exit
logout
Connection to nnode closed.
[hadoop@nnode ~]$ ssh dnode1
Last login: Sat Jan 9 19:25:08 2016 from dnode2
[hadoop@dnode1 ~]$ exit
logout
Connection to dnode1 closed.
[hadoop@nnode ~]$ ssh dnode2
Last login: Sat Jan 9 19:25:12 2016 from dnode2
[hadoop@dnode2 ~]$ exit
logout
Connection to dnode2 closed.
[hadoop@nnode ~]$
# Verify from dnode1
[hadoop@dnode1 ~]$ ssh dnode1
Last login: Sat Jan 9 19:35:08 2016 from nnode
[hadoop@dnode1 ~]$ exit
logout
Connection to dnode1 closed.
[hadoop@dnode1 ~]$ ssh nnode
Last login: Sat Jan 9 19:35:04 2016 from nnode
[hadoop@nnode ~]$ exit
logout
Connection to nnode closed.
[hadoop@dnode1 ~]$ ssh dnode2
Last login: Sat Jan 9 19:35:12 2016 from nnode
[hadoop@dnode2 ~]$ exit
logout
Connection to dnode2 closed.
[hadoop@dnode1 ~]$
# Verify from dnode2
[hadoop@dnode2 ~]$ ssh dnode2
Last login: Sat Jan 9 19:35:59 2016 from dnode1
[hadoop@dnode2 ~]$ exit
logout
Connection to dnode2 closed.
[hadoop@dnode2 ~]$ ssh nnode
Last login: Sat Jan 9 19:35:56 2016 from dnode1
[hadoop@nnode ~]$ exit
logout
Connection to nnode closed.
[hadoop@dnode2 ~]$ ssh dnode1
Last login: Sat Jan 9 19:35:52 2016 from dnode1
[hadoop@dnode1 ~]$ exit
logout
Connection to dnode1 closed.
[hadoop@dnode2 ~]$
```

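Configure the NTP Service

1. Localize the Date and Time

```bash
[hadoop@nnode ~]$ date
Sun Jan 17 06:05:20 PST 2016
[hadoop@nnode ~]$
```

Note: the system clock shows US Pacific time (PST), the zone selected at installation, but we want China Standard Time (UTC+8).

```bash
[root@nnode ~]# mv /etc/localtime /etc/localtime_backup
[root@nnode ~]# cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
```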

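Adjust the display language (locale):

```bash
# Temporary: current shell session only
LANG="zh_CN.UTF-8"
# Permanent
[root@nnode ~]# vim /etc/sysconfig/i18n
# change LANG="en_US.UTF-8" to LANG="zh_CN.UTF-8"
```

2. NTP Configuration

Omitted for now.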

ZooKeeper Cluster Configuration

1. Download ZooKeeper

Download the ZooKeeper package from http://zookeeper.apache.org/.

Version: zookeeper-3.4.6.tar.gz

```bash
[hadoop@nnode lucl]$ tar -xzv -f zookeeper-3.4.6.tar.gz
```

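2. Configure the ZooKeeper Environment Variables

```bash
# All Hadoop-ecosystem software is run as the hadoop user
vim /home/hadoop/.bash_profile
export ZOOKEEPER_HOME=/lucl/zookeeper-3.4.6
export PATH=$ZOOKEEPER_HOME/bin:$ZOOKEEPER_HOME/conf:$PATH
# Reload the profile in the current shell
source ~/.bash_profile
```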

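3. Edit the Configuration File zoo.cfg

```bash
# Copy zoo_sample.cfg in $ZOOKEEPER_HOME/conf and rename the copy to zoo.cfg
[hadoop@nnode conf]$ cat zoo.cfg
# ZooKeeper's basic time unit (the tick, in milliseconds); other runtime intervals are multiples of tickTime
tickTime=2000
# Time the Leader allows Followers to start up and finish syncing data. While starting, a Follower
# connects to the Leader and synchronizes its data, which determines the state from which it starts
# serving clients. The Leader gives Followers initLimit ticks (10 x tickTime) to do this.
initLimit=10
# Maximum heartbeat delay between the Leader and a Follower. While the ensemble is running, the
# Leader exchanges heartbeats with every Follower to check that it is alive. If the Leader gets no
# heartbeat response from a Follower within syncLimit ticks (5 x tickTime), it considers that
# Follower out of sync.
syncLimit=5
# Directory where the ZooKeeper server stores snapshots
dataDir=/lucl/storage/zk/data
# Directory where the server stores transaction logs; defaults to dataDir
dataLogDir=/lucl/storage/zk/logs
# Port on which the server accepts client connections
clientPort=2181
# Maximum number of concurrent connections a single client (identified by IP address) may open to one server
maxClientCnxns=100
#
# Be sure to read the maintenance section of the administrator guide before turning on autopurge.
# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
#
# Since 3.4.0, ZooKeeper can automatically purge old transaction logs and snapshots.
# autopurge.snapRetainCount: number of snapshots (and their transaction logs) kept when purging.
# Its minimum is 3; smaller values are raised to 3 (at least 3 are always kept).
# autopurge.snapRetainCount=3
# Purge interval in hours; 0 (the default) disables the purge task.
# autopurge.purgeInterval=1

# server.id=host:port:port
# Standalone mode
# server.1=nnode:2888:3888
# Pseudo-cluster mode (several instances on one host)
# server.1=nnode:2888:3888
# server.2=nnode:2889:3889
# server.3=nnode:2890:3890
# Cluster mode
server.117=nnode:2888:3888
server.118=dnode1:2888:3888
server.119=dnode2:2888:3888
# Parameters:
# id: the ServerID (1-255), the machine's number in the ensemble; it must match the myid file in dataDir
# host: the machine's hostname
# first port: used by Followers to communicate with the Leader at runtime and to sync data
# second port: used by the servers to communicate with each other during Leader election
# In a pseudo-cluster every entry has the same host, so each instance must be given different ports.
```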
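All the limits in zoo.cfg are expressed in ticks. The sketch below converts them to milliseconds with a hypothetical awk-based helper, `zk_timeouts`. It also prints the default client session-timeout range, which ZooKeeper derives as 2 to 20 times tickTime when minSessionTimeout and maxSessionTimeout are not set.

```bash
# Hypothetical helper: print zoo.cfg's tick-based limits in milliseconds.
# $1 = path to zoo.cfg
zk_timeouts() {
  awk -F= '
    # Trim surrounding whitespace from the key and the value
    { gsub(/^[ \t]+|[ \t]+$/, "", $1); gsub(/^[ \t]+|[ \t]+$/, "", $2) }
    $1 == "tickTime"  { tick = $2 }
    $1 == "initLimit" { init_l = $2 }
    $1 == "syncLimit" { sync_l = $2 }
    END {
      printf "initLimit: %d ms\n", init_l * tick
      printf "syncLimit: %d ms\n", sync_l * tick
      printf "session timeout range: %d-%d ms\n", 2 * tick, 20 * tick
    }' "$1"
}
```

With the values above (tickTime=2000, initLimit=10, syncLimit=5), `zk_timeouts $ZOOKEEPER_HOME/conf/zoo.cfg` reports 20000 ms, 10000 ms and a session range of 4000-40000 ms.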

Note: on every node, create a storage directory under /lucl. It holds the data of ZooKeeper, Hadoop, HBase and so on.

```bash
[hadoop@nnode storage]$ pwd
/lucl/storage
[hadoop@nnode storage]$ ll zk/
total 12
drwxrwxr-x 2 hadoop hadoop 4096 Jan 13 19:07 data
drwxrwxr-x 2 hadoop hadoop 4096 Jan 13 19:02 logs
drwxrwxr-x 2 hadoop hadoop 4096 Jan 13 19:02 tmp
[hadoop@nnode storage]$
```
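The directory layout listed above can be created in one step on each node. Here is a minimal sketch. `make_zk_storage` is a hypothetical name, and it defaults to /lucl/storage only because this setup uses that path.

```bash
# Hypothetical helper: create the zk/data, zk/logs and zk/tmp directories.
# $1 = storage root (defaults to /lucl/storage)
make_zk_storage() {
  local base="${1:-/lucl/storage}"
  # -p creates missing parents and is a no-op for existing directories
  mkdir -p "$base/zk/data" "$base/zk/logs" "$base/zk/tmp"
}

# Usage on each node, as root, then hand the tree to the hadoop user:
#   make_zk_storage
#   chown -R hadoop:hadoop /lucl/storage
```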

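3. Edit zkEnv.sh and Add ZOO_LOG_DIR

```bash
ZOO_LOG_DIR=/lucl/storage/zk/logs
```

Note: ZooKeeper's start script, zkServer.sh, writes a log file named zookeeper.out at startup. If that file's location is not specified, it is created in whatever directory zkServer.sh was invoked from.

```bash
[hadoop@nnode bin]$ cat zkServer.sh | grep zookeeper.out
    _ZOO_DAEMON_OUT="$ZOO_LOG_DIR/zookeeper.out"
[hadoop@nnode bin]$
```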

4. Distribute the Configured ZooKeeper to the Other Machines

```bash
[hadoop@nnode lucl]$ scp -r zookeeper-3.4.6 dnode1:/lucl/
[hadoop@nnode lucl]$ scp -r zookeeper-3.4.6 dnode2:/lucl/
```

5. Create the myid File

In cluster mode, every machine needs a myid file in its dataDir. The file has a single line containing one number, and that number must equal the machine's ServerID in zoo.cfg. For example:

If zoo.cfg contains server.117=host1:2888:3888, the myid file in host1's dataDir contains only the number 117.

If it contains server.118=host2:2888:3888, the myid file in host2's dataDir contains only the number 118.

If it contains server.119=host3:2888:3888, the myid file in host3's dataDir contains only the number 119.

```bash
# nnode
[hadoop@nnode data]$ pwd
/lucl/storage/zk/data
[hadoop@nnode data]$ cat myid
117
[hadoop@nnode data]$
# dnode1
[hadoop@dnode1 data]$ pwd
/lucl/storage/zk/data
[hadoop@dnode1 data]$ cat myid
118
[hadoop@dnode1 data]$
# dnode2
[hadoop@dnode2 data]$ pwd
/lucl/storage/zk/data
[hadoop@dnode2 data]$ cat myid
119
[hadoop@dnode2 data]$
```
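Instead of typing each id by hand, you can derive the myid file from zoo.cfg so the two can never disagree. This is a hedged sketch: `write_myid` is a hypothetical helper. It assumes entries of the form `server.N=host:port:port` whose host part is exactly what `hostname` prints (nnode, dnode1, dnode2 here), and a `dataDir=` line with no spaces around `=`.

```bash
# Hypothetical helper: write this host's ServerID from zoo.cfg into <dataDir>/myid.
# $1 = path to zoo.cfg, $2 = hostname to look up (defaults to `hostname`)
write_myid() {
  local cfg="$1" host="${2:-$(hostname)}" datadir id
  # dataDir=/lucl/storage/zk/data -> /lucl/storage/zk/data (last one wins)
  datadir=$(sed -n 's/^dataDir=//p' "$cfg" | tail -n 1)
  # server.118=dnode1:2888:3888 -> 118 when host is dnode1; "# server..." lines are ignored
  id=$(sed -n "s/^server\.\([0-9]\{1,\}\)=${host}:.*/\1/p" "$cfg" | head -n 1)
  if [ -z "$id" ]; then
    echo "no server.N entry for $host in $cfg" >&2
    return 1
  fi
  mkdir -p "$datadir"
  echo "$id" > "$datadir/myid"
}

# Usage on each node, as the hadoop user:
#   write_myid "$ZOOKEEPER_HOME/conf/zoo.cfg"
```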

6. ZooKeeper Executable Scripts

```bash
[hadoop@nnode bin]$ ll
total 60
-rwxr-xr-x 1 hadoop hadoop  238 Feb 20  2014 README.txt
-rwxr-xr-x 1 hadoop hadoop 1937 Feb 20  2014 zkCleanup.sh
-rwxr-xr-x 1 hadoop hadoop 1049 Feb 20  2014 zkCli.cmd
-rwxr-xr-x 1 hadoop hadoop 1534 Feb 20  2014 zkCli.sh
-rwxr-xr-x 1 hadoop hadoop 1333 Feb 20  2014 zkEnv.cmd
-rwxr-xr-x 1 hadoop hadoop 2756 Jun  8 14:10 zkEnv.sh
-rwxr-xr-x 1 hadoop hadoop 1084 Feb 20  2014 zkServer.cmd
-rwxr-xr-x 1 hadoop hadoop 5742 Feb 20  2014 zkServer.sh
[hadoop@nnode bin]$
```

Script descriptions

zkServer.sh: starts, stops and restarts the ZooKeeper server, and reports its status;

zkCli.sh: a simple ZooKeeper command-line client;

zkEnv.sh: sets ZooKeeper's environment variables;

zkCleanup.sh: purges old ZooKeeper data, including transaction log files and snapshot files.

7. Start the Service

```bash
[hadoop@nnode ~]$ zkServer.sh start
JMX enabled by default
Using config: /lucl/zookeeper-3.4.6/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[hadoop@nnode ~]$
[hadoop@dnode1 ~]$ zkServer.sh start
JMX enabled by default
Using config: /lucl/zookeeper-3.4.6/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[hadoop@dnode1 ~]$
[hadoop@dnode2 ~]$ zkServer.sh start
JMX enabled by default
Using config: /lucl/zookeeper-3.4.6/bin/../conf/zoo.cfg
Starting zookeeper ... STARTED
[hadoop@dnode2 ~]$
```

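Note:

The output logs of some nodes in the ensemble contain errors.

Cause:

The nodes were started in the order nnode > dnode1 > dnode2. When a ZooKeeper ensemble starts, each node tries to connect to every other node in the ensemble. A node that starts early cannot reach the nodes that are not yet running, so exceptions may appear near the beginning of its log.

Once the ensemble has elected a Leader it becomes stable. Other nodes may log similar errors; this is normal.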
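Leader election needs votes from a majority of the voting servers. That is why a node started alone cannot finish an election and keeps logging connection errors until a second node comes up. The arithmetic is shown below as a small sketch with hypothetical helper names.

```bash
# Majority quorum for an ensemble of n voting servers: floor(n/2) + 1
quorum_size() { echo $(( $1 / 2 + 1 )); }
# Servers that can fail while a quorum still remains
tolerated_failures() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 3 4 5; do
  echo "n=$n quorum=$(quorum_size "$n") tolerates=$(tolerated_failures "$n")"
done
# n=3 quorum=2 tolerates=1
# n=4 quorum=3 tolerates=1
# n=5 quorum=3 tolerates=2
```

A 4-node ensemble tolerates no more failures than a 3-node one. This is why odd ensemble sizes, such as the three nodes here, are the usual choice.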

8. Check the Status

```bash
[hadoop@nnode ~]$ zkServer.sh status
JMX enabled by default
Using config: /lucl/zookeeper-3.4.6/bin/../conf/zoo.cfg
Mode: follower
[hadoop@nnode ~]$
[hadoop@dnode1 ~]$ zkServer.sh status
JMX enabled by default
Using config: /lucl/zookeeper-3.4.6/bin/../conf/zoo.cfg
Mode: leader
[hadoop@dnode1 ~]$
[hadoop@dnode2 data]$ zkServer.sh status
JMX enabled by default
Using config: /lucl/zookeeper-3.4.6/bin/../conf/zoo.cfg
Mode: follower
[hadoop@dnode2 data]$
```

9. Verify with telnet

```bash
# nnode
[hadoop@nnode ~]$ telnet 127.0.0.1 2181
Trying 127.0.0.1...
Connected to 127.0.0.1.
Escape character is '^]'.
stat
Zookeeper version: 3.4.6-1569965, built on 02/20/2014 09:09 GMT
Clients:
 /127.0.0.1:38315[0](queued=0,recved=1,sent=0)
Latency min/avg/max: 0/0/0
Received: 2
Sent: 1
Connections: 1
Outstanding: 0
Zxid: 0x3200000025
Mode: follower    # follower
Node count: 39
Connection closed by foreign host.
# dnode1
[hadoop@nnode ~]$ telnet dnode1 2181
Trying 192.168.137.118...
Connected to dnode1.
Escape character is '^]'.
stat
Zookeeper version: 3.4.6-1569965, built on 02/20/2014 09:09 GMT
Clients:
 /192.168.137.117:45071[0](queued=0,recved=1,sent=0)
Latency min/avg/max: 0/0/0
Received: 2
Sent: 1
Connections: 1
Outstanding: 0
Zxid: 0x3300000000
Mode: leader    # leader
Node count: 39
Connection closed by foreign host.
```
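telnet needs an interactive session. Four-letter commands can also be sent non-interactively through nc, for example `echo stat | nc nnode 2181`, because the server closes the connection after it replies. Below is a hedged sketch that prints each node's role this way. It assumes nc (netcat) is installed, and `zk_mode` and `zk_roles` are hypothetical names. `zk_mode` only parses text, so it also works on captured stat output.

```bash
# Hypothetical helper: print the value of the "Mode:" line from stat output on stdin
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# Hypothetical helper: query each host's client port with "stat" and print its role
zk_roles() {
  local h mode
  for h in "$@"; do
    mode=$(echo stat | nc "$h" 2181 | zk_mode)
    echo "$h: ${mode:-unreachable}"
  done
}

# Usage:
#   zk_roles nnode dnode1 dnode2
```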

10. The Client Script

```bash
[hadoop@nnode bin]$ zkCli.sh
Connecting to localhost:2181
// ......
WATCHER::
WatchedEvent state:SyncConnected type:None path:null
# List the children of a znode
[zk: localhost:2181(CONNECTED) 0] ls /
[zookeeper]
# On a freshly deployed ensemble, only the default /zookeeper node exists
[zk: localhost:2181(CONNECTED) 1] ls /zookeeper
[quota]
[zk: localhost:2181(CONNECTED) 2] ls /zookeeper/quota
[]
[zk: localhost:2181(CONNECTED) 3] get /zookeeper/quota    # read a znode's data
cZxid = 0x0
ctime = Wed Dec 31 16:00:00 PST 1969
mZxid = 0x0
mtime = Wed Dec 31 16:00:00 PST 1969
pZxid = 0x0
cversion = 0
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 0
numChildren = 0
# Create a znode
[zk: localhost:2181(CONNECTED) 4] create /zk-book 123
Created /zk-book
[zk: localhost:2181(CONNECTED) 5] ls /
[zk-book, zookeeper]
[zk: localhost:2181(CONNECTED) 6] get /zk-book
123
cZxid = 0x3400000006
ctime = Sat Aug 08 21:18:47 CST 2015
mZxid = 0x3400000006
mtime = Sat Aug 08 21:18:47 CST 2015
pZxid = 0x3400000006
cversion = 0
dataVersion = 0    # the data version is 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 3
numChildren = 0
# Update the znode's value
[zk: localhost:2181(CONNECTED) 7] set /zk-book 456
cZxid = 0x3400000006
ctime = Sat Aug 08 21:18:47 CST 2015
mZxid = 0x3400000007
mtime = Sat Aug 08 21:19:54 CST 2015
pZxid = 0x3400000006
cversion = 0
dataVersion = 1    # after the update, the data version is 1
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 3
numChildren = 0
[zk: localhost:2181(CONNECTED) 8] get /zk-book
456
cZxid = 0x3400000006
ctime = Sat Aug 08 21:18:47 CST 2015
mZxid = 0x3400000007
mtime = Sat Aug 08 21:19:54 CST 2015
pZxid = 0x3400000006
cversion = 0
dataVersion = 1
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 3
numChildren = 0
# Delete
[zk: localhost:2181(CONNECTED) 9] delete /zk-book
WATCHER::
WatchedEvent state:SyncConnected type:NodeDeleted path:/zk-book
# Listing again shows that /zk-book was deleted
[zk: localhost:2181(CONNECTED) 10] ls /
[zookeeper]
# Help
[zk: localhost:2181(CONNECTED) 11] help
ZooKeeper -server host:port cmd args
    connect host:port
    get path [watch]
    ls path [watch]
    set path data [version]
    rmr path
    delquota [-n|-b] path
    quit
    printwatches on|off
    create [-s] [-e] path data acl
    stat path [watch]
    close
    ls2 path [watch]
    history
    listquota path
    setAcl path acl
    getAcl path
    sync path
    redo cmdno
    addauth scheme auth
    delete path [version]
    setquota -n|-b val path
# Exit zkCli
[zk: localhost:2181(CONNECTED) 12] quit
Quitting...
2015-08-08 21:21:06,508 [myid:] - INFO  [main:ZooKeeper@684] - Session: 0x14f0d5f49760002 closed
2015-08-08 21:21:06,510 [myid:] - INFO  [main-EventThread:ClientCnxn$EventThread@512] - EventThread shut down
[hadoop@nnode bin]$
```

Notes:

To connect to a remote ZooKeeper server, run zkCli.sh -server ip:port.

The help command lists every command and its syntax.

Stop the Service

```bash
# Run zkServer.sh stop on each machine in turn
[hadoop@nnode ~]$ zkServer.sh stop
```

11. zkCli.sh Script Walkthrough

```bash
#!/bin/sh

# ${BASH_SOURCE-$0}: the path of this script (BASH_SOURCE under bash, otherwise $0),
# i.e. ${ZOOKEEPER_HOME}/bin/zkCli.sh
ZOOBIN="${BASH_SOURCE-$0}"       # ${ZOOKEEPER_HOME}/bin/zkCli.sh
ZOOBIN=`dirname ${ZOOBIN}`       # ${ZOOKEEPER_HOME}/bin (possibly a relative path)
ZOOBINDIR=`cd ${ZOOBIN}; pwd`    # ${ZOOKEEPER_HOME}/bin as an absolute path

# If ${ZOOKEEPER_HOME}/libexec/zkEnv.sh exists, source it
if [ -e "$ZOOBIN/../libexec/zkEnv.sh" ]; then
  . "$ZOOBINDIR"/../libexec/zkEnv.sh
else
  # Otherwise source ${ZOOKEEPER_HOME}/bin/zkEnv.sh. The leading "." is the source command,
  # not the current directory: the file runs in this shell, so the variables it sets stay visible.
  . "$ZOOBINDIR"/zkEnv.sh
fi

# ZOO_LOG_DIR: set in zkEnv.sh (here /lucl/storage/zk/logs); zkServer.sh writes zookeeper.out there
# ZOO_LOG4J_PROP: the log4j root logger, INFO,CONSOLE by default
# CLASSPATH: ZooKeeper's classpath
# "$@": all arguments passed to this script
# $JAVA: set in zkEnv.sh to $JAVA_HOME/bin/java
$JAVA "-Dzookeeper.log.dir=${ZOO_LOG_DIR}" "-Dzookeeper.root.logger=${ZOO_LOG4J_PROP}" \
     -cp "$CLASSPATH" $CLIENT_JVMFLAGS $JVMFLAGS org.apache.zookeeper.ZooKeeperMain "$@"
```

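What zkCli.sh actually runs is the ZooKeeperMain class. It reads the commands the user types and executes them against the server through the ZooKeeper client API.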
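In zkCli.sh, the `.` in front of `"$ZOOBINDIR"/zkEnv.sh` is the POSIX source command, not a reference to the current directory. The file is executed inside the calling shell, so the variables it sets (JAVA, CLASSPATH, ZOO_LOG_DIR, ...) are still defined when the java command line is built. The small self-contained demonstration below uses env.sh, a throwaway file created only for the demo, to stand in for zkEnv.sh.

```bash
# Throwaway stand-in for zkEnv.sh
demo_dir=$(mktemp -d)
cat > "$demo_dir/env.sh" <<'EOF'
ZOO_LOG_DIR=/lucl/storage/zk/logs
EOF

unset ZOO_LOG_DIR
sh "$demo_dir/env.sh"                       # runs in a child process; its variables are lost
echo "after sh: ${ZOO_LOG_DIR:-<unset>}"    # after sh: <unset>
. "$demo_dir/env.sh"                        # sourced into the current shell
echo "after . : ${ZOO_LOG_DIR:-<unset>}"    # after . : /lucl/storage/zk/logs
```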