Zookeeper实战技能

Zookeeper实战运用

1.分布式安装部署

1.1 集群规划

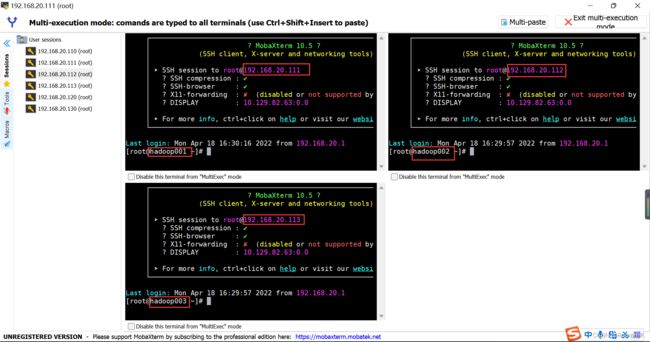

在hadoop001、hadoop002和hadoop003三个节点上部署Zookeeper。

1.2 解压安装

(1)解压Zookeeper安装包到 /usr/local/目录下

tar -zxvf zookeeper-3.4.6.tar.gz -C /usr/local/

(2)在/usr/local/zookeeper-3.4.6/这个目录下创建zkData

mkdir -p zkData

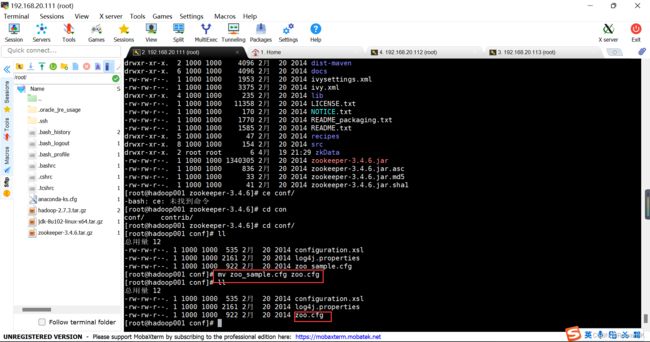

(3)重命名 /usr/local/zookeeper-3.4.6/conf/这个目录下的zoo_sample.cfg为zoo.cfg 便于后续操作

mv zoo_sample.cfg zoo.cfg

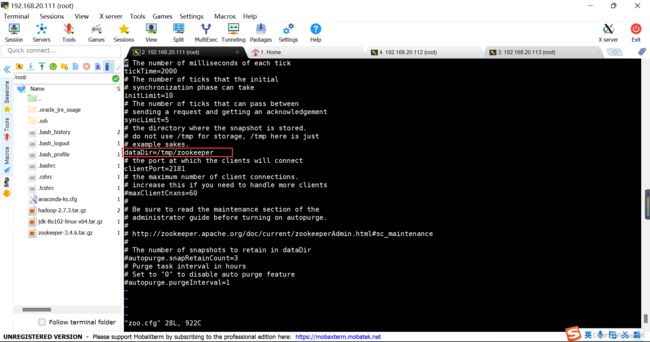

1.3 配置zoo.cfg文件

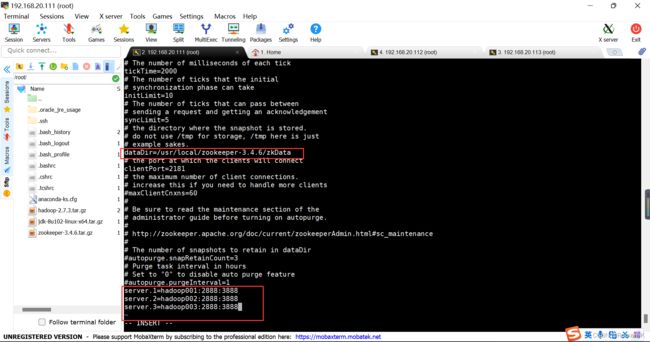

dataDir=/usr/local/zookeeper-3.4.6/zkData

并且在下面增加如下配置(本集群)

server.1=hadoop001:2888:3888

server.2=hadoop002:2888:3888

server.3=hadoop003:2888:3888

server.A=B:C:D

A是一个数字,表示这个是第几号服务器;

B是这个服务器的ip地址;

C是这个服务器与集群中的Leader服务器交换信息的端口;

D是万一集群中的Leader服务器挂了,需要一个端口来重新进行选举,选出一个新的Leader,而这个端口就是用来执行选举时服务器相互通信的端口。

集群模式下配置一个文件myid,这个文件在dataDir目录下,这个文件里面有一个数据就是A的值,Zookeeper启动时读取此文件,拿到里面的数据与zoo.cfg里面的配置信息比较从而判断到底是哪个server。

1.4 集群操作

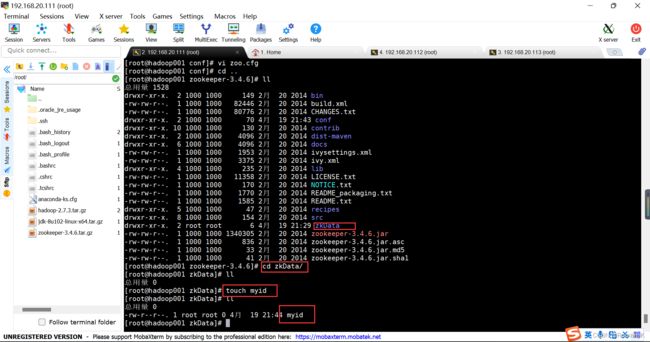

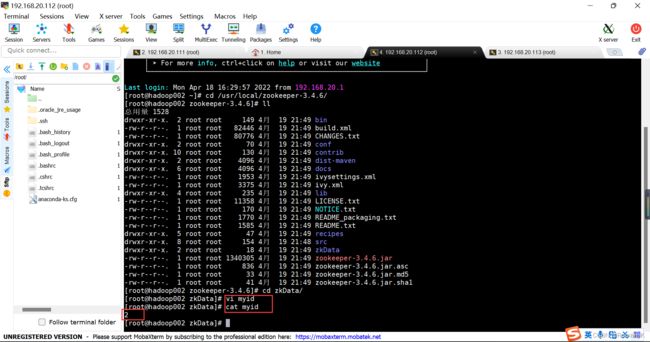

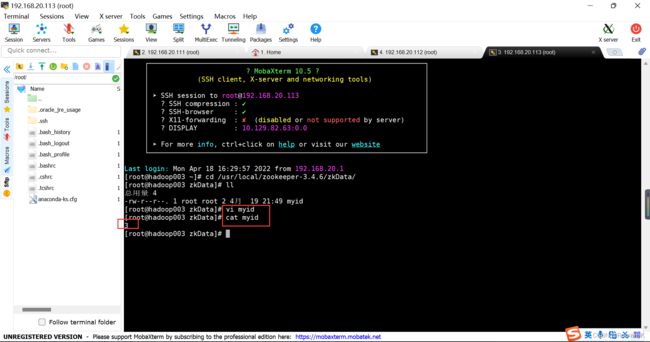

1.4.1 在/usr/local/zookeeper-3.4.6/zkData目录下创建一个myid的文件

touch myid

添加myid文件,注意一定要在linux里面创建,在notepad++里面很可能乱码

1.4.2 编辑myid文件

vi myid

1.4.3 拷贝配置好的zookeeper到其他机器上

scp -r /usr/local/zookeeper-3.4.6/ hadoop002:/usr/local/

scp -r /usr/local/zookeeper-3.4.6/ hadoop003:/usr/local/

分别在hadoop002修改myid文件内容为2、在hadoop003中修改myid文件内容为3

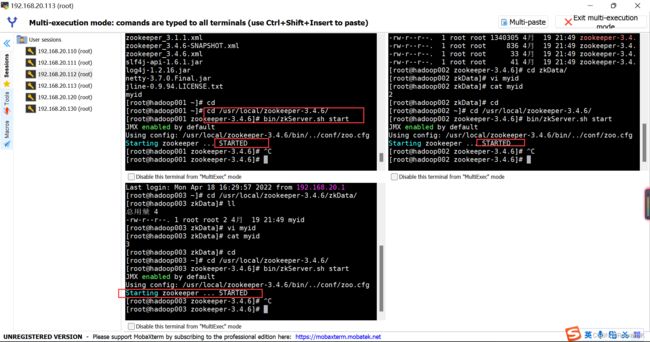

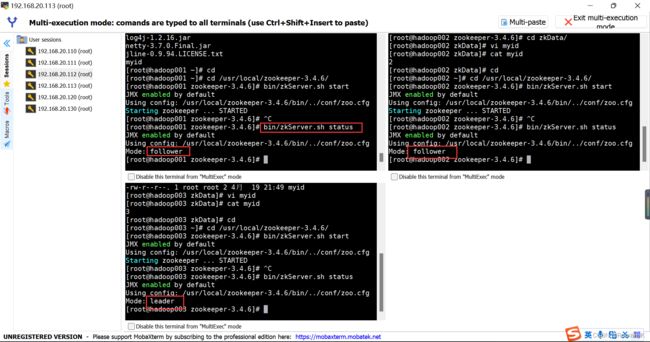

1.4.4 分别启动Zookeeper

1.4.5 查看状态

bin/zkServer.sh status

注:可知此次启动的leader为hadoop003,follower为hadoop001与hadoop002

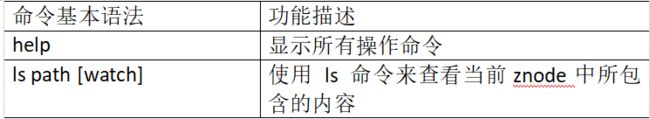

2.客户端命令行操作

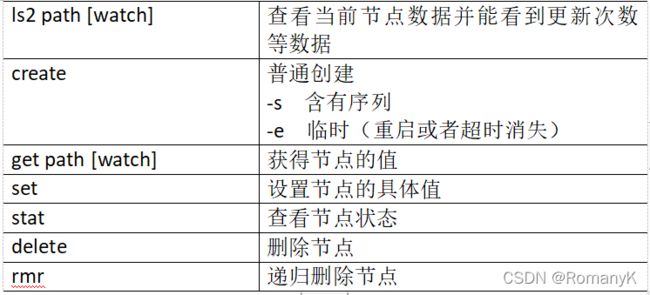

2.1 启动客户端

[root@hadoop001 zookeeper-3.4.6]# bin/zkCli.sh

2.2 显示所有操作命令

help

[zk: localhost:2181(CONNECTED) 0] help

ZooKeeper -server host:port cmd args

stat path [watch]

set path data [version]

ls path [watch]

delquota [-n|-b] path

ls2 path [watch]

setAcl path acl

setquota -n|-b val path

history

redo cmdno

printwatches on|off

delete path [version]

sync path

listquota path

rmr path

get path [watch]

create [-s] [-e] path data acl

addauth scheme auth

quit

getAcl path

close

connect host:port

2.3 查看当前znode中所包含的内容

ls /

[zk: localhost:2181(CONNECTED) 1] ls /

[zookeeper]

[zk: localhost:2181(CONNECTED) 2]

2.4 查看当前节点数据并能看到更新次数等数据

ls2 /

[zk: localhost:2181(CONNECTED) 2] ls2 /

[zookeeper]

cZxid = 0x0

ctime = Thu Jan 01 08:00:00 CST 1970

mZxid = 0x0

mtime = Thu Jan 01 08:00:00 CST 1970

pZxid = 0x0

cversion = -1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 0

numChildren = 1

[zk: localhost:2181(CONNECTED) 3]

2.5 创建普通节点

[zk: localhost:2181(CONNECTED) 2] create /app1 “hello app1”

Created /app1

[zk: localhost:2181(CONNECTED) 4] create /app1/server01 “192.168.1.101”

Created /app1/server01

2.6 获得节点的值

[zk: localhost:2181(CONNECTED) 6] get /app1

hello app1

cZxid = 0x20000000a

ctime = Mon Jul 17 16:08:35 CST 2017

mZxid = 0x20000000a

mtime = Mon Jul 17 16:08:35 CST 2017

pZxid = 0x20000000b

cversion = 1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 10

numChildren = 1

[zk: localhost:2181(CONNECTED) 8] get /app1/server01

192.168.1.101

cZxid = 0x20000000b

ctime = Mon Jul 17 16:11:04 CST 2017

mZxid = 0x20000000b

mtime = Mon Jul 17 16:11:04 CST 2017

pZxid = 0x20000000b

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 13

numChildren = 0

2.7 创建短暂节点

[zk: localhost:2181(CONNECTED) 9] create -e /app-emp 8888

(1)在当前客户端是能查看到的

[zk: localhost:2181(CONNECTED) 10] ls /

[app1, app-emp, zookeeper]

(2)退出当前客户端然后再重启客户端

[zk: localhost:2181(CONNECTED) 12] quit

[root@hadoop01 zookeeper-3.4.10]# bin/zkCli.sh

(3)再次查看根目录下短暂节点已经删除

[zk: localhost:2181(CONNECTED) 0] ls /

[app1, zookeeper]

2.8 创建带序号的节点

(1)先创建一个普通的根节点app2

[zk: localhost:2181(CONNECTED) 11] create /app2 “app2”

(2)创建带序号的节点

[zk: localhost:2181(CONNECTED) 13] create -s /app2/aa 888

Created /app2/aa0000000000

[zk: localhost:2181(CONNECTED) 14] create -s /app2/bb 888

Created /app2/bb0000000001

[zk: localhost:2181(CONNECTED) 15] create -s /app2/cc 888

Created /app2/cc0000000002

如果原节点下有1个节点,则再排序时从1开始,以此类推。

[zk: localhost:2181(CONNECTED) 16] create -s /app1/aa 888

Created /app1/aa0000000001

2.9 修改节点数据值

[zk: localhost:2181(CONNECTED) 2] set /app1 999

2.10 节点的值变化监听

(1)在01主机上注册监听/app1节点数据变化

[zk: localhost:2181(CONNECTED) 26] get /app1 watch

(2)在02主机上修改/app1节点的数据

[zk: localhost:2181(CONNECTED) 5] set /app1 777

(3)观察03主机收到数据变化的监听

WATCHER::

WatchedEvent state:SyncConnected type:NodeDataChanged path:/app1

2.11 节点的子节点变化监听(路径变化)

(1)在03主机上注册监听/app1节点的子节点变化

[zk: localhost:2181(CONNECTED) 1] ls /app1 watch

[aa0000000001, server101]

(2)在02主机/app1节点上创建子节点

[zk: localhost:2181(CONNECTED) 6] create /app1/bb 666

Created /app1/bb

(3)观察03主机收到子节点变化的监听

WATCHER::

WatchedEvent state:SyncConnected type:NodeChildrenChanged path:/app1

2.12 删除节点

[zk: localhost:2181(CONNECTED) 4] delete /app1/bb

2.13 递归删除节点

[zk: localhost:2181(CONNECTED) 7] rmr /app2

慎用

2.14 查看节点状态

[zk: localhost:2181(CONNECTED) 12] stat /app1

cZxid = 0x20000000a

ctime = Mon Jul 17 16:08:35 CST 2017

mZxid = 0x200000018

mtime = Mon Jul 17 16:54:38 CST 2017

pZxid = 0x20000001c

cversion = 4

dataVersion = 2

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 3

numChildren = 2