Building a high-availability cluster with keepalived

Prerequisites for configuring an HA cluster:

1. The local hostname must match the name returned by hostname (uname -n);

CentOS 6: /etc/sysconfig/network

CentOS 7: hostnamectl set-hostname HOSTNAME

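All nodes must be able to resolve each other's hostnames; resolving them through the hosts file is generally recommended, so that resolution keeps working when the DNS server is unreachable;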
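A quick consistency check for the name-resolution requirement, meant to be run on each node. This is a sketch; check_hosts is a hypothetical helper and the node names in the usage line are placeholders:

```shell
#!/bin/bash
# Sketch: check that each given node name appears in a hosts file.
# check_hosts is a hypothetical helper; node names are placeholders.

check_hosts() {
    local hosts_file=$1
    shift
    local name missing=0
    for name in "$@"; do
        # Look for the name as an exact field after the IP, skipping comment lines
        if ! awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file"; then
            echo "missing: $name"
            missing=1
        fi
    done
    return "$missing"
}

# Usage on every node: the local name from uname -n plus all peers, e.g.
# check_hosts /etc/hosts "$(uname -n)" node1 node2
```

Passing "$(uname -n)" also covers requirement 1: if the local name is not in /etc/hosts, the hostname and the resolved names have probably drifted apart.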

2. The clocks on all nodes must be synchronized;

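3. Make sure iptables and SELinux do not block the service (VRRP advertisements are IP protocol 112, multicast to 224.0.0.18 by default);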

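keepalived is an implementation of the VRRP protocol that runs as a daemon on Linux hosts; it can also generate ipvs rules automatically from its configuration file;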

it can run health checks against each RS (real server);

Parts of the configuration file keepalived.conf:

1.GLOBAL CONFIGURATION

2.VRRPD CONFIGURATION

vrrp instance

vrrp synchronization group

3.LVS CONFIGURATION

Example:

global_defs {

notification_email {

[email protected]

[email protected] #recipients of the notification emails keepalived sends about the monitored services

[email protected]

}

notification_email_from [email protected] #sender address

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

#vrrp_mcast_group4 224.0.0.100 #multicast address the nodes use for heartbeats (default 224.0.0.18)

}

vrrp_instance VI_1 {

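state MASTER #initial state: MASTER or BACKUP

interface eth0 #NIC the floating IP is bound to

#use_vmac <VMAC_INTERFACE> #use a virtual MAC address (optional)

virtual_router_id 51 #ID of this virtual router, used to tell multiple virtual routers apart; must be unique on the network and identical on all nodes of this instance

priority 100 #priority (1-254); in preemptive mode a node is preempted by a higher-priority node even if its state is defined as MASTER

advert_int 1 #interval in seconds between VRRP advertisements (heartbeats)

authentication {

auth_type PASS #authentication type: PASS is a simple password; the other option is AH

auth_pass 1111 #password; only the first 8 characters are used (one can be generated with openssl rand -hex 4)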

}

virtual_ipaddress {

192.168.200.16

192.168.200.17 #virtual IP addresses; the example below shows that details such as the device and a label can also be given

192.168.200.18

}

nopreempt #non-preemptive mode (only honored when the initial state is BACKUP); preemptive mode is the default

}
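Because the MASTER and BACKUP configs must agree on virtual_router_id, the authentication settings and the VIPs, rendering both from one template avoids accidental drift between the nodes. A sketch; render_instance and its argument order are hypothetical, not a keepalived tool:

```shell
#!/bin/bash
# Sketch: render one node's vrrp_instance block from a single template, so the
# MASTER and BACKUP configs can only differ in state and priority.
# render_instance and its argument order are hypothetical.

render_instance() {
    local state=$1 priority=$2 iface=$3 vip=$4 pass=$5
    cat <<EOF
vrrp_instance VI_1 {
    state $state
    interface $iface
    virtual_router_id 51
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass $pass
    }
    virtual_ipaddress {
        $vip
    }
}
EOF
}

# Same virtual_router_id and auth_pass on both nodes: generate the password once,
# e.g. pass=$(openssl rand -hex 4), and reuse it for the second node.
pass=1111
render_instance MASTER 100 eth0 192.168.200.16 "$pass"   # node 1
render_instance BACKUP 99  eth0 192.168.200.16 "$pass"   # node 2
```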

virtual_ipaddress {

<IPADDR>/<MASK> brd <IPADDR> dev <STRING> scope <SCOPE> label <LABEL>

192.168.200.17/24 dev eth1

192.168.200.18/24 dev eth2 label eth2:1

}

To turn the master into a backup manually, without restarting the service:

#define a script outside the vrrp_instance block:

vrrp_script chk_mantaince_down {

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1 #check interval in seconds

weight -2 #while the down file exists, lower this node's priority by 2

}

#reference it inside the vrrp_instance block:

track_script {

chk_mantaince_down

}
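Whether the down file actually causes a failover depends on the weight arithmetic. A minimal sketch, assuming keepalived's rule that a negative weight is applied while the script fails and a positive weight while it succeeds, and the priorities 100/99 used in the case studies below; effective_priority is a hypothetical helper:

```shell
#!/bin/bash
# Sketch of how a vrrp_script weight changes a node's priority.
# Assumed rule: weight < 0 is applied while the script fails,
# weight > 0 is applied while the script succeeds.
# effective_priority is a hypothetical helper, not part of keepalived.

effective_priority() {
    local base=$1 weight=$2 script_ok=$3   # script_ok: 1 = exit 0, 0 = non-zero exit
    if (( weight < 0 && script_ok == 0 )) || (( weight > 0 && script_ok == 1 )); then
        echo $(( base + weight ))
    else
        echo "$base"
    fi
}

master=$(effective_priority 100 -2 0)   # down file present on the MASTER
backup=$(effective_priority 99 -2 1)    # check still passes on the BACKUP
echo "master=$master backup=$backup"
if (( backup > master )); then
    echo "BACKUP now has the higher priority and takes over the VIP"
fi
```

Here the master drops to 98 and the backup keeps 99, so the VIP moves. The absolute weight should therefore be larger than the priority gap between the nodes; with weight -1 the two priorities would only tie.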

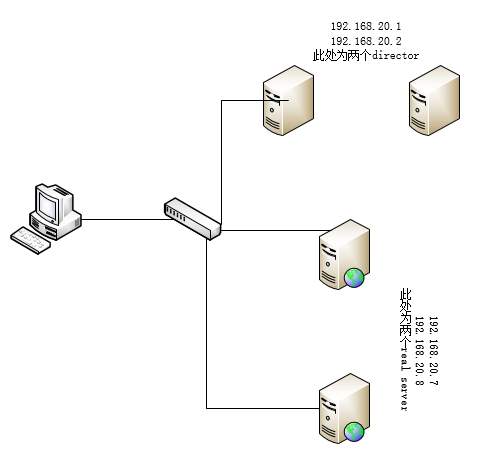

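Sync group definition: when keepalived makes a load balancer highly available (for example an LVS-NAT director, as in the figure), two virtual routers are needed, one for the external network and one for the internal network. Whenever the external IP moves to another host the internal IP must move with it, and vice versa, so both virtual routers are combined into a single sync group: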

vrrp_sync_group VG_1 {

group {

VI_1 # name of vrrp_instance (below)

VI_2 # One for each moveable IP.

}

}

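vrrp_instance VI_1 {

interface eth0 #external NIC; state, priority and other settings omitted

virtual_ipaddress {

<VIP> #external virtual IP (vip)

}

}

vrrp_instance VI_2 {

interface eth1 #internal NIC

virtual_ipaddress {

<DIP> #internal virtual IP (dip), the real servers' gateway in LVS-NAT

}

}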

Sending notifications when the state of a host in a vrrp_instance changes:

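# notify scripts, alert as above

notify_master <STRING>|<QUOTED-STRING> #run when this host becomes MASTER

notify_backup <STRING>|<QUOTED-STRING> #run when this host becomes BACKUP

notify_fault <STRING>|<QUOTED-STRING> #run when this host enters the FAULT state

notify <STRING>|<QUOTED-STRING> #generic script, run on any state change

smtp_alert #also send an email on state changes, using the global_defs mail settings

For example (inside the vrrp_instance block):

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"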

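A simple notify script example (saved as /etc/keepalived/notify.sh, the path used above, and made executable):

#!/bin/bash

vip=172.16.20.100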

contact='root@localhost'

notify() {

mailsubject="`hostname` to be $1: $vip floating"

mailbody="`date '+%F %H:%M:%S'`: vrrp transition, `hostname` changed to be $1"

echo "$mailbody" | mail -s "$mailsubject" "$contact"

}

case "$1" in

master)

notify master

# /etc/rc.d/init.d/keepalived start

exit 0

;;

backup)

notify backup

# /etc/rc.d/init.d/keepalived stop

exit 0

;;

fault)

notify fault

# /etc/rc.d/init.d/keepalived stop

exit 0

;;

*)

echo "Usage: $(basename "$0") {master|backup|fault}"

exit 1

;;

esac
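Before wiring the script into keepalived, its message format can be checked without a working mail setup. A minimal sketch that shadows mail with a shell function writing to a temporary file (a testing assumption only, not how the real script sends mail) and uses uname -n in place of hostname:

```shell
#!/bin/bash
# Sketch: exercise the notify() logic without a working MTA.
# The mail function below shadows the real mail command (test-only assumption)
# and appends each message to a temporary file instead of sending it.
vip=172.16.20.100
contact='root@localhost'
outbox=$(mktemp)
trap 'rm -f "$outbox"' EXIT

mail() {
    # Called as: mail -s SUBJECT RECIPIENT, with the body on stdin
    printf 'To: %s\nSubject: %s\n%s\n' "$3" "$2" "$(cat)" >> "$outbox"
}

notify() {
    # uname -n prints the same node name as hostname
    local subject="$(uname -n) to be $1: $vip floating"
    local body="$(date '+%F %H:%M:%S'): vrrp transition, $(uname -n) changed to be $1"
    echo "$body" | mail -s "$subject" "$contact"
}

notify master
notify backup
cat "$outbox"
```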

Case 1: LVS-DR + keepalived for load balancing and high availability

1. Initialize the two real servers: save the following script as lvs.sh and run ./lvs.sh start on both r1 and r2

#!/bin/bash

#

case $1 in

start)

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

;;

stop)

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

;;

esac
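What the four sysctls do, as a sketch that can be tried without touching /proc: the same settings wrapped in a function, with the target directory held in CONF_DIR (a hypothetical variable that defaults to the real /proc path used by lvs.sh); lvs_arp is likewise a hypothetical name:

```shell
#!/bin/bash
# Sketch: lvs.sh's ARP settings as a function with an overridable sysctl
# directory. CONF_DIR and lvs_arp are hypothetical; by default the function
# writes to /proc exactly as lvs.sh does.
CONF_DIR=${CONF_DIR:-/proc/sys/net/ipv4/conf}

lvs_arp() {
    local ignore announce dev
    case $1 in
        start) ignore=1; announce=2 ;;
        stop)  ignore=0; announce=0 ;;
        *) echo "Usage: lvs_arp {start|stop}" >&2; return 1 ;;
    esac
    for dev in all lo; do
        # arp_ignore=1: reply to ARP only for addresses configured on the
        # receiving interface, so requests for the VIP (bound to lo) go unanswered
        echo "$ignore" > "$CONF_DIR/$dev/arp_ignore"
        # arp_announce=2: never use the VIP as the source of outgoing ARP
        # requests; use the best address of the outgoing interface instead
        echo "$announce" > "$CONF_DIR/$dev/arp_announce"
    done
}
```

Together these keep the real servers from competing with the director for the VIP on the LAN, which is the precondition for LVS-DR.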

2. Configure the VIP on both real servers and add a host route:

[root@node1 ~]# ifconfig lo:0 192.168.20.100 netmask 255.255.255.255 broadcast 192.168.20.100 up

[root@node1 ~]# route add -host 192.168.20.100 dev lo:0
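ifconfig and route come from the older net-tools package. A sketch of the same two steps with iproute2; run and add_vip_on_lo are hypothetical helpers, and DRY_RUN (also hypothetical) makes them print the commands instead of executing them:

```shell
#!/bin/bash
# Sketch: iproute2 equivalents of the ifconfig/route commands above.
# run, add_vip_on_lo and DRY_RUN are hypothetical; with DRY_RUN set,
# commands are printed instead of executed.
VIP=192.168.20.100

run() {
    if [ -n "$DRY_RUN" ]; then
        echo "$*"
    else
        "$@"
    fi
}

add_vip_on_lo() {
    # /32 mask: the VIP must not pull a whole-subnet route onto lo
    run ip addr add "$VIP/32" dev lo label lo:0
    # host route so replies for the VIP are sourced from lo
    run ip route add "$VIP/32" dev lo
}

# Usage (as root): add_vip_on_lo
# Preview only:    DRY_RUN=1; add_vip_on_lo
```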

Testing stage: before introducing keepalived, verify that LVS itself works

Configure the VIP on one of the directors:

ip addr add 192.168.20.100/32 dev eno50332208

[root@node3 ~]# ip addr list eno50332208 | grep 100

4: eno50332208: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

inet 192.168.20.100/32 scope global eno50332208

Add the rules on the director:

[root@node3 ~]# ipvsadm -A -t 192.168.20.100:80 -s rr

[root@node3 ~]# ipvsadm -a -t 192.168.20.100:80 -r 192.168.20.7 -g -w 1

[root@node3 ~]# ipvsadm -a -t 192.168.20.100:80 -r 192.168.20.8 -g -w 1

Accessing the VIP from another host shows the requests being distributed round-robin:

[root@node4 ~]# curl 192.168.20.100

httpd on node1

[root@node4 ~]# curl 192.168.20.100

httpd on node3
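Two requests say little about the distribution. A small sketch for checking it over more requests; probe and tally are hypothetical helpers and the request count is arbitrary:

```shell
#!/bin/bash
# Sketch: send N requests to the VIP and count which backend answered.
# probe and tally are hypothetical helpers; URL and count are examples.

probe() {
    local url=$1 n=${2:-10} i
    for (( i = 0; i < n; i++ )); do
        curl -s --max-time 2 "$url"
    done
}

tally() {
    # Count identical response lines from stdin, most frequent first
    sort | uniq -c | sort -rn
}

# Usage from a client host: probe http://192.168.20.100/ 10 | tally
```

With rr and equal weights the counts should be roughly equal; with the wrr weights used later they should follow the weight ratio.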

3. Install httpd on both directors to act as the sorry server

yum install httpd

Configure the sorry-server page (run the first command on director1 and the second on director2):

echo "Sorry, under maintenance. Here is director1" > /var/www/html/index.html

echo "Sorry, under maintenance. Here is director2" > /var/www/html/index.html

4. Configure keepalived

yum install keepalived

keepalived.conf:

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from leeha@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script chk_mantaince_down {

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1

weight -2

}

vrrp_instance VI_1 {

state MASTER #set to BACKUP on the second director

interface eno50332208

virtual_router_id 51

priority 100 #set to 99 on the second director

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.20.100 dev eno50332208

}

track_script {

chk_mantaince_down

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

#ipvs configuration:

virtual_server 192.168.20.100 80 {

delay_loop 6

lb_algo wrr

lb_kind DR

nat_mask 255.255.0.0

persistence_timeout 0

protocol TCP

sorry_server 127.0.0.1 80 #sorry server, used when no real server passes its health check

#real server health check using HTTP_GET

real_server 192.168.20.7 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

# url {

# path /mrtg/

# digest 9b3a0c85a887a256d6939da88aabd8cd

#}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

#real server health check

real_server 192.168.20.8 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

# url {

# path /mrtg/

# digest 9b3a0c85a887a256d6939da88aabd8cd

#}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

TIPS: the health check can also use TCP_CHECK instead of HTTP_GET:

TCP_CHECK {

connect_timeout 3

}

Provide the notify script:

The simple notify script example above can be used as-is, with vip set to 192.168.20.100

Start keepalived on both directors

Testing:

1. Capture packets:

tcpdump -i eno50332208 -nn host 192.168.20.1

2. The LVS rules were generated automatically from the keepalived configuration:

[root@node3 keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.20.100:80 wrr

-> 192.168.20.7:80 Route 1 0 0

-> 192.168.20.8:80 Route 1 0 0

3. The VIP was configured automatically:

[root@node3 keepalived]# ip addr list | grep 100/32

inet 192.168.20.100/32 scope global eno50332208

4. The notification email arrived:

Message 23:

From [email protected] Wed Oct 21 02:23:16 2015

Return-Path: <[email protected]>

X-Original-To: root@localhost

Delivered-To: [email protected]

Date: Wed, 21 Oct 2015 02:23:16 -0700

To: [email protected]

Subject: node3.lee.com to be master: 192.168.20.100 floating

User-Agent: Heirloom mailx 12.5 7/5/10

Content-Type: text/plain; charset=us-ascii

From: [email protected] (root)

Status: RO

2015-10-21 02:23:16: vrrp transition, node3.lee.com changed to be master

5. Take the real server 192.168.20.7 offline: service httpd stop

On the director, the rule for that server has been removed:

[root@node3 keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.20.100:80 wrr

-> 192.168.20.8:80 Route 1 0 0

You have new mail in /var/spool/mail/root

6. Take the first director offline and test

Create a file named down in /etc/keepalived/ on director1: the VIP moves to the second director, and the real servers are still reachable through it
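The down-file mechanism can be wrapped in a small helper so that entering or leaving maintenance is a single command. A sketch; maint and DOWN_FILE are hypothetical names, with DOWN_FILE defaulting to the path checked by chk_mantaince_down:

```shell
#!/bin/bash
# Sketch: toggle the flag file checked by chk_mantaince_down.
# maint and DOWN_FILE are hypothetical; DOWN_FILE defaults to the path
# used in the vrrp_script and can be overridden for testing.
DOWN_FILE=${DOWN_FILE:-/etc/keepalived/down}

maint() {
    case $1 in
        on)     touch "$DOWN_FILE" ;;   # check exits 1, priority drops by 2, VIP moves away
        off)    rm -f "$DOWN_FILE" ;;   # check exits 0, priority is restored
        status) [ -f "$DOWN_FILE" ] && echo maintenance || echo active ;;
        *)      echo "Usage: maint {on|off|status}" >&2; return 1 ;;
    esac
}

# Usage on director1: maint on; ...; maint off
```

After maint off, the VIP returns to director1 only in the default preemptive mode; with nopreempt it stays where it is.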

7. Take both real servers offline to check that the sorry server takes effect

[root@node3 keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.20.100:80 wrr

-> 127.0.0.1:80 Route 1 0 0

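Case 2: keepalived + nginx for a highly available, load-balanced web service

Load balancing with nginx

Configure nginx on both nodes to load-balance the back-end hosts (the upstream block goes in the http block):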

upstream upservers {

server 192.168.20.7 weight=1;

server 192.168.20.8 weight=2;

}

Reference it in a server block:

location / {

proxy_pass http://upservers/;

index index.html index.htm;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

}

tips: killall -0 nginx checks whether an nginx process is running (signal 0 tests for the process without affecting it)
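killall comes from the psmisc package, which is not always installed. A sketch of an alternative check based on nginx's pid file; the path /run/nginx.pid is an assumption (it depends on the pid directive and the distribution), and nginx_alive and PID_FILE are hypothetical names:

```shell
#!/bin/bash
# Sketch: liveness check that does not depend on killall (psmisc).
# Reads nginx's pid file and sends signal 0, which only tests whether
# the process exists. PID_FILE's default path is an assumption.
PID_FILE=${PID_FILE:-/run/nginx.pid}

nginx_alive() {
    local pid
    [ -r "$PID_FILE" ] || return 1          # no pid file: treat nginx as down
    read -r pid < "$PID_FILE"
    [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null
}
```

Saved as a standalone script (for example a hypothetical /etc/keepalived/chk_nginx.sh) whose last line calls nginx_alive, its exit status becomes the check result. keepalived normally runs check scripts as root; if it runs them as an unprivileged user (depending on the version and the script_user setting), kill -0 against the root-owned nginx master fails with a permission error and the check would report nginx as down.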

keepalived.conf:

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from leeha@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script chk_mantaince_down {

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1

weight -2

}

#a script that lets keepalived monitor the nginx service

vrrp_script chk_nginx {

script "killall -0 nginx &> /dev/null"

interval 1

weight -10

}

vrrp_instance VI_1 {

state MASTER #change to BACKUP on the second node

interface eno50332208

virtual_router_id 51

priority 100 #change to 99 on the second node

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.20.100 dev eno50332208 label eno50332208:0

}

track_script {

chk_mantaince_down

}

#reference the nginx check script defined above

track_script {

chk_nginx

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

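For a dual-master setup, add a second vrrp_instance with its own virtual_router_id, password and VIP. Both nodes then serve traffic through nginx, and if one of them goes down the other carries both VIPs. On the second node the roles are reversed: VI_1 is BACKUP with priority 99 and VI_2 is MASTER with priority 100.

In a dual-master setup the notify script must not run systemctl restart nginx.service: each node is master for one VIP and backup for the other, so a restart triggered when one instance changes state would interrupt the nginx that is still serving the other VIP as master.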

vrrp_instance VI_2 {

state BACKUP

interface eno50332208

virtual_router_id 61

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass 2222

}

virtual_ipaddress {

192.168.20.111 dev eno50332208 label eno50332208:1

}

track_script {

chk_mantaince_down

}

track_script {

chk_nginx

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

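After these changes, restart keepalived and nginx. The master node now holds the VIP 192.168.20.100; because chk_nginx monitors the nginx service, the VIP moves to the backup node when nginx on the master goes down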
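To confirm which VIPs a node currently holds in the dual-master setup, the output of ip -o -4 addr show can be parsed. A sketch; held_vips is a hypothetical helper, and the interface name and VIPs are the ones from the configuration above:

```shell
#!/bin/bash
# Sketch: print which of the given VIPs appear in `ip -o -4 addr show`
# output read from stdin. held_vips is a hypothetical helper.

held_vips() {
    local out vip
    out=$(cat)   # read the ip output once so every VIP can be checked against it
    for vip in "$@"; do
        # Field 4 of each ip -o line is ADDRESS/PREFIX, e.g. 192.168.20.100/32
        if awk -v v="$vip" '{ split($4, a, "/"); if (a[1] == v) found = 1 } END { exit !found }' <<< "$out"; then
            echo "$vip"
        fi
    done
}

# Usage on each node:
# ip -o -4 addr show dev eno50332208 | held_vips 192.168.20.100 192.168.20.111
```

In normal operation each node should print exactly one VIP; after nginx fails on one node, the other should print both.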

Configure the notify script:

#!/bin/bash

# Author: MageEdu <[email protected]>

# description: An example of notify script

#

vip=192.168.20.100

contact='root@localhost'

notify() {

mailsubject="`hostname` to be $1: $vip floating"

mailbody="`date '+%F %H:%M:%S'`: vrrp transition, `hostname` changed to be $1"

echo "$mailbody" | mail -s "$mailsubject" "$contact"

}

case "$1" in

master)

notify master

#systemctl restart nginx.service #effect: as long as the master node is online, it is always the one serving traffic

exit 0

;;

backup)

notify backup

#systemctl restart nginx.service

exit 0

;;

fault)

notify fault

exit 0

;;

*)

echo "Usage: $(basename "$0") {master|backup|fault}"

exit 1

;;

esac