CentOS 5.8 HA Cluster: Configuring corosync + pacemaker + NFS + httpd with crm

Outline

I. What Are AIS and OpenAIS

II. What Is corosync

III. Installing and Configuring corosync

IV. Configuring corosync + pacemaker + NFS + httpd with crm

I. What Are AIS and OpenAIS

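The Application Interface Specification (AIS) is a collection of open specifications that define application programming interfaces (APIs). Implemented as middleware, these interfaces give application services an open, highly portable programming interface, which is badly needed when building highly available applications. The Service Availability Forum (SA Forum) is an open forum that develops and publishes these specifications free of charge. Using the AIS APIs reduces application complexity and shortens development time; the main goal of the specifications is to improve the portability of middleware components and the availability of applications. SAF AIS is an open project that continues to be updated.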

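OpenAIS is an implementation of the cluster-framework application interface specification based on the SA Forum standard. It provides a cluster model that covers the cluster framework, cluster membership management, communication, and cluster monitoring, and it can offer AIS-compliant cluster interfaces to cluster software and tools. It has no cluster resource management, however, so it cannot form a cluster on its own. OpenAIS components include AMF, CLM, CKPT, EVT, LCK, MSG, TMR, CPG, and EVS; the exact set varies by branch.

OpenAIS has three main branches: Picacho, Whitetank, and Wilson. Wilson is the newest; its stable releases run from openais 1.0.0 to openais 1.1.4. Whitetank is the current mainstream branch; its stable releases run from openais 0.80 to openais 0.86. Picacho is the first-generation branch; its stable releases are openais 0.70 and openais 0.71. Whitetank and Wilson are the two in common use today, and they differ in many ways. The components changed substantially in the move from Whitetank to Wilson: Wilson split the core OpenAIS infrastructure out into Corosync, a cluster engine. Whitetank contains AMF, CLM, CKPT, EVT, LCK, MSG, CPG, CFG, EVS, aisparser, VSF_ykd, objdb, and others. Wilson contains only AMF, CLM, CKPT, LCK, MSG, EVT, and TMR (TMR does not exist in Whitetank), all of which are AIS components. The other core components moved into Corosync, and Wilson runs as a Corosync plugin.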

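II. What Is corosync

Corosync is an open cluster engine project that grew out of OpenAIS when it reached the Wilson release; it can be regarded as part of the OpenAIS project. Starting with openais 0.90, OpenAIS split into two parts: Corosync, and the AIS standard interfaces (Wilson). Corosync contains the core OpenAIS framework and is what uses and manages Wilson's standard interfaces. It provides a cluster execution framework for both commercial and open-source clusters. Corosync implements a group communication system for highly available applications and has the following features:

A closed process group communication model: it provides virtual synchrony guarantees so that server state can be replicated.

A simple availability manager: it restarts an application process when that process fails.

A configuration and statistics in-memory database: it can be used to set and retrieve information and to receive change notifications.

A quorum system: it notifies applications when quorum is achieved or lost.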

III. Installing and Configuring corosync

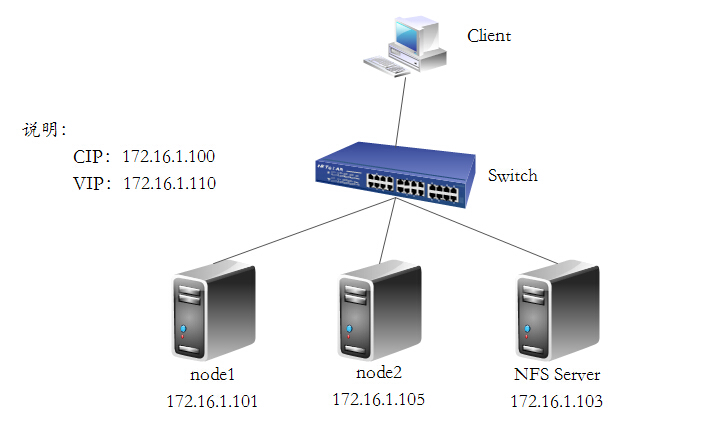

System environment

CentOS5.8 x86_64

node1.network.com node1 172.16.1.101

node2.network.com node2 172.16.1.105

NFS Server /www 172.16.1.102

Software versions

corosync-1.2.7-1.1.el5.x86_64.rpm

pacemaker-1.0.12-1.el5.centos.x86_64.rpm

Topology diagram

1. Preparation

(1) Time synchronization

[root@node1 ~]# ntpdate s2c.time.edu.cn

[root@node2 ~]# ntpdate s2c.time.edu.cn

If needed, define a crontab job on each node:

[root@node1 ~]# which ntpdate

/sbin/ntpdate

[root@node1 ~]# echo "*/5 * * * * /sbin/ntpdate s2c.time.edu.cn &> /dev/null" >> /var/spool/cron/root

[root@node1 ~]# crontab -l

*/5 * * * * /sbin/ntpdate s2c.time.edu.cn &> /dev/null
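Appending the crontab line with `>>` adds a duplicate entry every time the step is repeated. The following is a minimal sketch of an idempotent variant; the function name `add_cron_line_once` is hypothetical and not part of the original procedure.

```shell
# Append a cron entry to a crontab file only if that exact line is not there yet.
# $1 = crontab file (for example /var/spool/cron/root), $2 = full cron line.
add_cron_line_once() {
    local cron_file="$1" line="$2"
    # -F fixed string, -x whole-line match, -q quiet; a missing file counts as "not found"
    if ! grep -Fxq -- "$line" "$cron_file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$cron_file"
    fi
}
```

It could be called on each node as `add_cron_line_once /var/spool/cron/root '*/5 * * * * /sbin/ntpdate s2c.time.edu.cn &> /dev/null'`.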

(2) The hostname must match the output of uname -n and be resolvable through /etc/hosts

node1

[root@node1 ~]# hostname node1.network.com

[root@node1 ~]# uname -n

node1.network.com

[root@node1 ~]# sed -i 's@\(HOSTNAME=\).*@\1node1.network.com@g' /etc/sysconfig/network

node2

[root@node2 ~]# hostname node2.network.com

[root@node2 ~]# uname -n

node2.network.com

[root@node2 ~]# sed -i 's@\(HOSTNAME=\).*@\1node2.network.com@g' /etc/sysconfig/network

Add the hosts entries on node1

[root@node1 ~]# vim /etc/hosts

[root@node1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.1.101 node1.network.com node1

172.16.1.105 node2.network.com node2

Copy this hosts file to node2

[root@node1 ~]# scp /etc/hosts node2:/etc/

The authenticity of host 'node2 (172.16.1.105)' can't be established.

RSA key fingerprint is 13:42:92:7b:ff:61:d8:f3:7c:97:5f:22:f6:71:b3:24.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node2,172.16.1.105' (RSA) to the list of known hosts.

root@node2's password:

hosts 100% 233 0.2KB/s 00:00
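Since the cluster identifies nodes by the name that `uname -n` reports, it can help to confirm that the hosts file really maps each address to that name. A minimal sketch follows; the helper name `hosts_maps` is an assumption made for illustration.

```shell
# Succeed if hosts file $1 has a non-comment line mapping address $2 to name $3.
hosts_maps() {
    local hosts_file="$1" addr="$2" name="$3"
    awk -v addr="$addr" -v name="$name" '
        /^[[:space:]]*#/ { next }                 # skip comment lines
        $1 == addr {                              # line for the wanted address
            for (i = 2; i <= NF; i++) if ($i == name) found = 1
        }
        END { exit found ? 0 : 1 }
    ' "$hosts_file"
}
```

On node1, for example, `hosts_maps /etc/hosts 172.16.1.101 "$(uname -n)" && echo consistent` would print `consistent` when the entry is in place.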

(3) Mutual SSH trust

node1

[root@node1 ~]# ssh-keygen -t rsa -f ~/.ssh/id_rsa -P ''

Generating public/private rsa key pair.

/root/.ssh/id_rsa already exists.

Overwrite (y/n)? n # a key pair already exists here

[root@node1 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub node2

root@node2's password:

Now try logging into the machine, with "ssh 'node2'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[root@node1 ~]# setenforce 0

[root@node1 ~]# ssh node2 'ifconfig'

eth0 Link encap:Ethernet HWaddr 00:0C:29:D6:03:52

inet addr:172.16.1.105 Bcast:255.255.255.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fed6:352/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:9881 errors:0 dropped:0 overruns:0 frame:0

TX packets:11220 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:5898514 (5.6 MiB) TX bytes:1850217 (1.7 MiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:16 errors:0 dropped:0 overruns:0 frame:0

TX packets:16 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1112 (1.0 KiB) TX bytes:1112 (1.0 KiB)

node2 needs the same mutual-trust setup toward node1; the steps are identical and are not repeated here.

(4) Disable iptables and SELinux

node1

[root@node1 ~]# service iptables stop

[root@node1 ~]# vim /etc/sysconfig/selinux

[root@node1 ~]# cat /etc/sysconfig/selinux

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - SELinux is fully disabled.

#SELINUX=permissive

SELINUX=disabled

# SELINUXTYPE= type of policy in use. Possible values are:

# targeted - Only targeted network daemons are protected.

# strict - Full SELinux protection.

SELINUXTYPE=targeted

node2

[root@node2 ~]# service iptables stop

[root@node2 ~]# vim /etc/sysconfig/selinux

[root@node2 ~]# cat /etc/sysconfig/selinux

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - SELinux is fully disabled.

#SELINUX=permissive

SELINUX=disabled

# SELINUXTYPE= type of policy in use. Possible values are:

# targeted - Only targeted network daemons are protected.

# strict - Full SELinux protection.

SELINUXTYPE=targeted

2. Configure the EPEL repository for the cluster software

node1

[root@node1 ~]# cd /etc/yum.repos.d/

[root@node1 yum.repos.d]# wget http://clusterlabs.org/rpm/epel-5/clusterlabs.repo

[root@node1 yum.repos.d]# yum install -y pacemaker corosync

node2

[root@node2 ~]# cd /etc/yum.repos.d/

[root@node2 yum.repos.d]# wget http://clusterlabs.org/rpm/epel-5/clusterlabs.repo

[root@node2 yum.repos.d]# yum install -y pacemaker corosync

3. Edit the main corosync configuration file

[root@node1 ~]# cd /etc/corosync/

[root@node1 corosync]# ls

corosync.conf.example service.d uidgid.d

[root@node1 corosync]# cp corosync.conf.example corosync.conf

[root@node1 corosync]# vi corosync.conf

# Please read the corosync.conf.5 manual page

compatibility: whitetank

totem {

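version: 2 # configuration file version; 2 is currently the only valid value and must not be changed

secauth: on # enable authentication; this consumes a lot of CPU when aisexec is used

threads: 2 # number of threads, chosen according to the number of CPUs and cores

interface {

ringnumber: 0 # redundant ring number; when a node has several NICs, this defines which ring the NIC belongs to

bindnetaddr: 172.16.1.0 # note: this is the network address, not a host IP

mcastaddr: 226.94.19.37 # multicast address used for heartbeat messages

mcastport: 5405 # port used for heartbeat multicast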

}

}

logging {

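fileline: off # whether to print the source file and line number in log messages

to_stderr: no # whether to send output to standard error (the terminal); best left off

to_logfile: yes # whether to write to a log file

to_syslog: no # whether to log to syslog; enabling only one of syslog and logfile is enough

logfile: /var/log/cluster/corosync.log # location of the log file

debug: off # whether to enable debug output

timestamp: on # whether to print timestamps; helps locate errors but costs some CPU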

logger_subsys {

subsys: AMF

debug: off

}

}

service {

ver: 0

name: pacemaker # run pacemaker as a corosync plugin, so that starting corosync also starts pacemaker

# use_mgmtd: yes

}

aisexec {

user: root

group: root

}

amf {

mode: disabled

}

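Note: see man corosync.conf for details on each directive. The trailing # comments above are annotations for this walkthrough; if in doubt, keep comments on their own lines in the real file.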
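The bindnetaddr value is the network address of the heartbeat interface, that is, the host address ANDed with its netmask. A small sketch of that arithmetic is shown here; the helper name `network_address` is hypothetical.

```shell
# Print the network address for a dotted-quad IPv4 address ($1) and netmask ($2).
network_address() {
    local IFS=.            # split on dots, only inside this function
    set -- $1 $2           # $1..$4 = address octets, $5..$8 = netmask octets
    echo "$(( $1 & $5 )).$(( $2 & $6 )).$(( $3 & $7 )).$(( $4 & $8 ))"
}
```

With node1's address and mask from the ifconfig output, `network_address 172.16.1.101 255.255.255.0` yields 172.16.1.0, which matches the bindnetaddr used in this configuration.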

4. Generate the authkey file

[root@node1 corosync]# corosync-keygen

Corosync Cluster Engine Authentication key generator.

Gathering 1024 bits for key from /dev/random.

Press keys on your keyboard to generate entropy.

Writing corosync key to /etc/corosync/authkey.

[root@node1 corosync]# ll

total 40

-r-------- 1 root root 128 Jan 8 14:50 authkey # mode is 400

-rw-r--r-- 1 root root 536 Jan 8 14:48 corosync.conf

-rw-r--r-- 1 root root 436 Jul 28 2010 corosync.conf.example

drwxr-xr-x 2 root root 4096 Jul 28 2010 service.d

drwxr-xr-x 2 root root 4096 Jul 28 2010 uidgid.d

5. Copy the main configuration file and the authentication key to node2

[root@node1 corosync]# scp -p authkey corosync.conf node2:/etc/corosync/

authkey 100% 128 0.1KB/s 00:00

corosync.conf 100% 536 0.5KB/s 00:00

Because logging to a file is configured, the log directory must also be created:

[root@node1 corosync]# mkdir /var/log/cluster

[root@node1 corosync]# ssh node2 'mkdir /var/log/cluster'

6. Start corosync

Start corosync first, then inspect the start-up messages, using node1 as the example.
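The key file is created with mode 400, and `scp -p` is meant to preserve that mode on the other node. A minimal sketch for checking the mode on either node follows; it assumes GNU `stat`, and the function name `authkey_mode_ok` is hypothetical.

```shell
# Succeed only if $1 is a regular file whose permission bits are exactly 400.
authkey_mode_ok() {
    [ -f "$1" ] && [ "$(stat -c '%a' "$1")" = "400" ]
}
```

For instance, `authkey_mode_ok /etc/corosync/authkey || echo "fix authkey permissions"` prints the warning only when the file is missing or has a different mode.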

[root@node1 ~]# service corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]

[root@node1 ~]# ssh node2 'service corosync start'

Starting Corosync Cluster Engine (corosync): [ OK ]

Check that the corosync engine started properly

[root@node1 corosync]# grep -e "Corosync Cluster Engine" -e "configuration file" /var/log/cluster/corosync.log

Jan 08 15:37:22 corosync [MAIN ] Corosync Cluster Engine ('1.2.7'): started and ready to provide service.

Jan 08 15:37:22 corosync [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Check that the initial membership notifications were sent

[root@node1 corosync]# grep TOTEM /var/log/cluster/corosync.log

Jan 08 15:37:22 corosync [TOTEM ] Initializing transport (UDP/IP).

Jan 08 15:37:22 corosync [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).

Jan 08 15:37:22 corosync [TOTEM ] The network interface [172.16.1.101] is now up.

Jan 08 15:37:22 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed.

Jan 08 15:37:30 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed.

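Check whether any errors occurred during start-up (the STONITH errors below are expected at this point because no STONITH resource has been defined; step 7 deals with them)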

[root@node1 corosync]# grep ERROR: /var/log/cluster/corosync.log

Jan 08 15:37:46 node1.network.com pengine: [3688]: ERROR: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

Jan 08 15:37:46 node1.network.com pengine: [3688]: ERROR: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

Jan 08 15:37:46 node1.network.com pengine: [3688]: ERROR: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Check that pacemaker started properly

[root@node1 corosync]# grep pcmk_startup /var/log/cluster/corosync.log

Jan 08 15:37:22 corosync [pcmk ] info: pcmk_startup: CRM: Initialized

Jan 08 15:37:22 corosync [pcmk ] Logging: Initialized pcmk_startup

Jan 08 15:37:22 corosync [pcmk ] info: pcmk_startup: Maximum core file size is: 18446744073709551615

Jan 08 15:37:22 corosync [pcmk ] info: pcmk_startup: Service: 9

Jan 08 15:37:22 corosync [pcmk ] info: pcmk_startup: Local hostname: node1.network.com
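The individual grep checks above can be folded into one pass over the log. The following is only a sketch: the function name `check_corosync_log` is hypothetical, and the marker strings are taken from the log lines shown in this section, so they may differ for other corosync or pacemaker versions.

```shell
# Report whether the start-up markers shown above appear in a corosync log.
# $1 = log file; returns 0 when every marker is present, 1 otherwise.
check_corosync_log() {
    local log="$1" rc=0 pattern
    for pattern in \
        "Corosync Cluster Engine" \
        "Successfully read main configuration file" \
        "The network interface" \
        "pcmk_startup: CRM: Initialized"
    do
        if grep -Fq -- "$pattern" "$log"; then
            echo "OK      $pattern"
        else
            echo "MISSING $pattern"
            rc=1
        fi
    done
    return "$rc"
}
```

It would be run as `check_corosync_log /var/log/cluster/corosync.log` on each node after starting the service.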

Check the cluster node status

[root@node1 ~]# crm status

============

Last updated: Fri Jan 8 18:32:18 2016

Stack: openais

Current DC: node1.network.com - partition with quorum

Version: 1.0.12-unknown

2 Nodes configured, 2 expected votes

0 Resources configured.

============

Online: [ node1.network.com node2.network.com ]

7. Disable STONITH (if no STONITH device is available) and configure no-quorum-policy

crm can be run on any node; changes are automatically synchronized to the DC.

[root@node1 ~]# crm

crm(live)# status

============

Last updated: Fri Jan 8 19:48:51 2016

Stack: openais

Current DC: node2.network.com - partition with quorum

Version: 1.0.12-unknown

2 Nodes configured, 2 expected votes

0 Resources configured.

============

Online: [ node1.network.com node2.network.com ]

crm(live)# configure

crm(live)configure# verify

crm_verify[10513]: 2016/01/08_19:48:54 ERROR: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

crm_verify[10513]: 2016/01/08_19:48:54 ERROR: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

crm_verify[10513]: 2016/01/08_19:48:54 ERROR: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Errors found during check: config not valid

crm(live)configure# property stonith-enabled=false # disable STONITH

crm(live)configure# property no-quorum-policy=ignore # action to take when the partition does not have quorum

crm(live)configure# verify

crm(live)configure# commit

View the resulting definitions in the configuration

crm(live)configure# show

node node1.network.com

node node2.network.com

property $id="cib-bootstrap-options" \

dc-version="1.0.12-unknown" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"
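The same two properties can also be set without entering the interactive shell, since crm accepts a configure command on its command line. This is a sketch under that assumption; the `CRM` variable and the function name are hypothetical conveniences that allow a dry run by substituting `echo`.

```shell
# Command used to reach the crm shell; override with CRM=echo for a dry run.
CRM="${CRM:-crm}"

# Set the two cluster properties used for this two-node setup without STONITH.
disable_stonith_and_ignore_quorum() {
    "$CRM" configure property stonith-enabled=false &&
    "$CRM" configure property no-quorum-policy=ignore
}
```

Running it with `CRM=echo` prints the two commands instead of executing them, which is a cheap way to review what would be applied.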

IV. Configuring corosync + pacemaker + NFS + httpd with crm

1. Configure webip

[root@node1 ~]# crm

crm(live)# configure

crm(live)configure# primitive webip ocf:heartbeat:IPaddr params ip=172.16.1.110 cidr_netmask=16 nic=eth0

crm(live)configure# verify

crm(live)configure# commit

View the resulting definitions in the configuration

crm(live)configure# show

node node1.network.com

node node2.network.com

primitive webip ocf:heartbeat:IPaddr \

params ip="172.16.1.110" cidr_netmask="16" nic="eth0"

property $id="cib-bootstrap-options" \

dc-version="1.0.12-unknown" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

Check the resource status

crm(live)configure# cd

crm(live)# status

============

Last updated: Fri Jan 8 20:08:20 2016

Stack: openais

Current DC: node2.network.com - partition with quorum

Version: 1.0.12-unknown

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.network.com node2.network.com ]

webip (ocf::heartbeat:IPaddr): Started node1.network.com

Check on the command line that the IP address has been configured

[root@node1 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:FE:82:38

inet addr:172.16.1.101 Bcast:255.255.255.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fefe:8238/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:8967 errors:0 dropped:0 overruns:0 frame:0

TX packets:12584 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:1401041 (1.3 MiB) TX bytes:1914819 (1.8 MiB)

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:FE:82:38

inet addr:172.16.1.110 Bcast:172.16.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:560 (560.0 b) TX bytes:560 (560.0 b)

2. Configure webstore

[root@node1 ~]# crm

crm(live)# status

============

Last updated: Fri Jan 8 20:23:57 2016

Stack: openais

Current DC: node2.network.com - partition with quorum

Version: 1.0.12-unknown

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.network.com node2.network.com ]

webip (ocf::heartbeat:IPaddr): Started node1.network.com

crm(live)# configure

crm(live)configure# primitive webstore ocf:heartbeat:Filesystem params device=172.16.1.102:/www directory=/var/www/html fstype=nfs

WARNING: webstore: default timeout 20s for start is smaller than the advised 60

WARNING: webstore: default timeout 20s for stop is smaller than the advised 60

The default timeouts are below the advised 60 seconds, so delete the resource and redefine it with explicit start and stop timeouts:

crm(live)configure# delete webstore

crm(live)configure# primitive webstore ocf:heartbeat:Filesystem params device=172.16.1.102:/www directory=/var/www/html fstype=nfs op start timeout=60s op stop timeout=60s

crm(live)configure# verify

crm(live)configure# commit

View the resulting definitions in the configuration

crm(live)configure# show

node node1.network.com

node node2.network.com

primitive webip ocf:heartbeat:IPaddr \

params ip="172.16.1.110" cidr_netmask="16" nic="eth0"

primitive webstore ocf:heartbeat:Filesystem \

params device="172.16.1.102:/www" directory="/var/www/html" fstype="nfs" \

op start interval="0" timeout="60s" \

op stop interval="0" timeout="60s"

property $id="cib-bootstrap-options" \

dc-version="1.0.12-unknown" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

crm(live)configure# cd

Check the resource status

crm(live)# status

============

Last updated: Fri Jan 8 20:27:00 2016

Stack: openais

Current DC: node2.network.com - partition with quorum

Version: 1.0.12-unknown

2 Nodes configured, 2 expected votes

2 Resources configured.

============

Online: [ node1.network.com node2.network.com ]

webip (ocf::heartbeat:IPaddr): Started node1.network.com

webstore (ocf::heartbeat:Filesystem): Started node2.network.com

3. Configure httpd

crm(live)# configure

crm(live)configure# primitive httpd lsb:httpd

crm(live)configure# verify

crm(live)configure# commit

View the resulting definitions in the configuration

crm(live)configure# show

node node1.network.com

node node2.network.com

primitive httpd lsb:httpd

primitive webip ocf:heartbeat:IPaddr \

params ip="172.16.1.110" cidr_netmask="16" nic="eth0"

primitive webstore ocf:heartbeat:Filesystem \

params device="172.16.1.102:/www" directory="/var/www/html" fstype="nfs" \

op start interval="0" timeout="60s" \

op stop interval="0" timeout="60s"

property $id="cib-bootstrap-options" \

dc-version="1.0.12-unknown" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

crm(live)configure# cd

Check the resource status

crm(live)# status

============

Last updated: Fri Jan 8 20:29:55 2016

Stack: openais

Current DC: node2.network.com - partition with quorum

Version: 1.0.12-unknown

2 Nodes configured, 2 expected votes

3 Resources configured.

============

Online: [ node1.network.com node2.network.com ]

webip (ocf::heartbeat:IPaddr): Started node1.network.com

webstore (ocf::heartbeat:Filesystem): Started node2.network.com

httpd (lsb:httpd): Started node1.network.com
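Once httpd is a cluster resource, the cluster should be the only thing that starts and stops it. A common precaution, which the walkthrough above does not show and which is offered here only as an assumption about the setup, is to stop httpd and remove it from the boot sequence on both nodes. The `run` wrapper, the `DRY_RUN` variable, and the function name are hypothetical.

```shell
# Run a command, or just print it when DRY_RUN is set to a non-empty value.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Stop httpd and keep init from starting it at boot, leaving control to the cluster.
take_httpd_out_of_init() {
    run service httpd stop
    run chkconfig httpd off
}
```

With `DRY_RUN=1` the function only prints `service httpd stop` and `chkconfig httpd off`, so the effect can be reviewed before running it for real on node1 and node2.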

4. Configure the webservice resource group

crm(live)configure# group webservice webip webstore httpd

crm(live)configure# verify

crm(live)configure# commit

View the resulting definitions in the configuration

crm(live)configure# show

node node1.network.com

node node2.network.com

primitive httpd lsb:httpd

primitive webip ocf:heartbeat:IPaddr \

params ip="172.16.1.110" cidr_netmask="16" nic="eth0"

primitive webstore ocf:heartbeat:Filesystem \

params device="172.16.1.102:/www" directory="/var/www/html" fstype="nfs" \

op start interval="0" timeout="60s" \

op stop interval="0" timeout="60s"

group webservice webip webstore httpd

property $id="cib-bootstrap-options" \

dc-version="1.0.12-unknown" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

crm(live)configure# cd

Check the resource status

crm(live)# status

============

Last updated: Fri Jan 8 20:34:36 2016

Stack: openais

Current DC: node2.network.com - partition with quorum

Version: 1.0.12-unknown

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.network.com node2.network.com ]

Resource Group: webservice

webip (ocf::heartbeat:IPaddr): Started node1.network.com

webstore (ocf::heartbeat:Filesystem): Started node1.network.com

httpd (lsb:httpd): Started node1.network.com

5. Define constraints

crm(live)configure# order webip_before_webstore_before_httpd mandatory: webip webstore httpd

crm(live)configure# location webservice_on_node1 webservice rule 100: #uname eq node1.network.com

crm(live)configure# verify

crm(live)configure# commit

View the resulting definitions in the configuration

crm(live)configure# show

node node1.network.com \

attributes standby="off"

node node2.network.com

primitive httpd lsb:httpd

primitive webip ocf:heartbeat:IPaddr \

params ip="172.16.1.110" cidr_netmask="16" nic="eth0"

primitive webstore ocf:heartbeat:Filesystem \

params device="172.16.1.102:/www" directory="/var/www/html" fstype="nfs" \

op start interval="0" timeout="60s" \

op stop interval="0" timeout="60s"

group webservice webip webstore httpd \

meta target-role="Started"

location webservice_on_node1 webservice \

rule $id="webservice_on_node1-rule" 100: #uname eq node1.network.com

order webip_before_webstore_before_httpd inf: webip webstore httpd

property $id="cib-bootstrap-options" \

dc-version="1.0.12-unknown" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

crm(live)configure# cd

Check the resource status

crm(live)# status

============

Last updated: Fri Jan 8 21:28:29 2016

Stack: openais

Current DC: node1.network.com - partition WITHOUT quorum

Version: 1.0.12-unknown

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.network.com ]

OFFLINE: [ node2.network.com ]

Resource Group: webservice

webip (ocf::heartbeat:IPaddr): Started node1.network.com

webstore (ocf::heartbeat:Filesystem): Started node1.network.com

httpd (lsb:httpd): Started node1.network.com

6. Test high availability

First check which node the resources are currently running on

[root@node1 ~]# crm status

============

Last updated: Fri Jan 8 21:33:36 2016

Stack: openais

Current DC: node1.network.com - partition with quorum

Version: 1.0.12-unknown

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.network.com node2.network.com ]

Resource Group: webservice

webip (ocf::heartbeat:IPaddr): Started node1.network.com

webstore (ocf::heartbeat:Filesystem): Started node1.network.com

httpd (lsb:httpd): Started node1.network.com

Put node1 into standby and see whether the resources move automatically

[root@node1 ~]# crm node standby node1.network.com

Check the resource status again

[root@node1 ~]# crm status

============

Last updated: Fri Jan 8 21:33:49 2016

Stack: openais

Current DC: node1.network.com - partition with quorum

Version: 1.0.12-unknown

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Node node1.network.com: standby

Online: [ node2.network.com ]

Resource Group: webservice # webip and webstore have moved to node2; httpd is still Stopped in this snapshot

webip (ocf::heartbeat:IPaddr): Started node2.network.com

webstore (ocf::heartbeat:Filesystem): Started node2.network.com

httpd (lsb:httpd): Stopped

Bring node1 back online and see whether the resources move back

[root@node1 ~]# crm node online node1.network.com

Check the resource status once more

[root@node1 ~]# crm status

============

Last updated: Fri Jan 8 21:34:07 2016

Stack: openais

Current DC: node1.network.com - partition with quorum

Version: 1.0.12-unknown

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.network.com node2.network.com ]

Resource Group: webservice # the resources moved back automatically because of the location constraint

webip (ocf::heartbeat:IPaddr): Started node1.network.com

webstore (ocf::heartbeat:Filesystem): Started node1.network.com

httpd (lsb:httpd): Started node1.network.com

As shown, the cluster is now running normally and provides a working highly available service.
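When the failover test is repeated, it can be convenient to extract the node a resource is running on rather than read the whole status screen. The following is a minimal sketch that parses status text in the format shown above; the function name `resource_node` is hypothetical, and other pacemaker versions may print a different layout.

```shell
# Read `crm status` output on stdin and print the node on which resource $1 is
# started. Returns non-zero when the resource is not reported as Started.
resource_node() {
    awk -v res="$1" '
        NF >= 3 && $1 == res && $(NF-1) == "Started" { print $NF; found = 1 }
        END { exit found ? 0 : 1 }
    '
}
```

For example, `crm status | resource_node httpd` prints the node name once httpd is started and exits with a non-zero status while it is still stopped.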