Hand Gesture Recognition with OpenCV (Source Code Included)

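I built a small hand gesture recognition program using the Windows build of OpenCV 2.4.4, Qt 4.8.3, and the VS2010 compiler.

The program mainly relies on OpenCV's feature-training (cascade classifier) library and on basic image-processing techniques such as skin-color detection.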

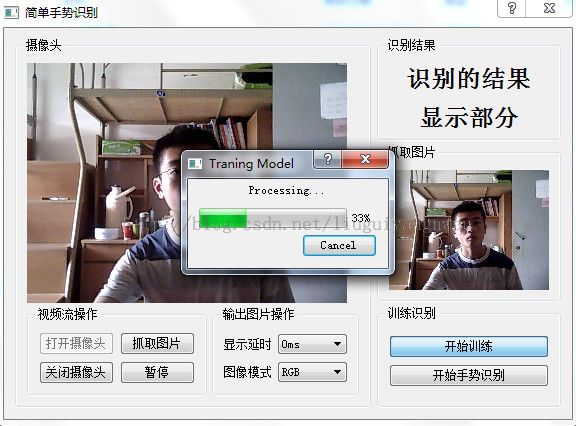

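Without further ado, let's first look at the basic interface design and the main features:

Anyone with some Qt experience should find this interface design familiar. (Damn, damn!) Let's move on:

Next, Qt needs to link against the OpenCV 2.4.4 libraries. Here is the Qt project file: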

#-------------------------------------------------

#

# Project created by QtCreator 2013-05-25T11:16:11

#

#-------------------------------------------------

QT += core gui

CONFIG += warn_off

greaterThan(QT_MAJOR_VERSION, 4): QT += widgets

TARGET = HandGesture

TEMPLATE = app

INCLUDEPATH += E:/MyQtCreator/MyOpenCV/opencv/build/include

SOURCES += main.cpp\

handgesturedialog.cpp \

SRC/GestrueInfo.cpp \

SRC/AIGesture.cpp

HEADERS += handgesturedialog.h \

SRC/GestureStruct.h \

SRC/GestrueInfo.h \

SRC/AIGesture.h

FORMS += handgesturedialog.ui

#Load OpenCV runtime libs

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_core244

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_core244d

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_features2d244

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_features2d244d

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_haartraining_engine

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_haartraining_engined

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_highgui244

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_highgui244d

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_objdetect244

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_objdetect244d

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_video244

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_video244d

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_calib3d244

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_calib3d244d

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_contrib244

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_contrib244d

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_imgproc244

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_imgproc244d

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_legacy244

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_legacy244d

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_ml244

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_ml244d

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_photo244

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_photo244d

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

win32:CONFIG(release, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_nonfree244

else:win32:CONFIG(debug, debug|release): LIBS += -L$$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10/lib/ -lopencv_nonfree244d

INCLUDEPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

DEPENDPATH += $$PWD/../../../MyQtCreator/MyOpenCV/opencv/build/x86/vc10

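With the basic configuration above in place, we can start developing the gesture recognition itself:

First, collect the raw images.

After collecting the raw images, normalize them (including their size). At the time I also used MATLAB, a powerful tool, for this step.

Next, extract features from the image samples and train on them. There are plenty of tutorials online, many of them on CSDN, about training and using an image feature classifier; following them step by step should work without trouble.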
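To make the size-normalization step concrete, here is a minimal sketch of a nearest-neighbor resize for a tightly packed 8-bit grayscale image. It is not what I used (I did this step in MATLAB); the function name `resizeNearest` and the buffer layout are assumptions made purely for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: resize a tightly packed 8-bit grayscale image
// (row-major, no row padding) with nearest-neighbor sampling.
std::vector<unsigned char> resizeNearest(const std::vector<unsigned char>& src,
                                         int srcW, int srcH, int dstW, int dstH)
{
    assert(srcW > 0 && srcH > 0 && dstW > 0 && dstH > 0);
    assert(src.size() == static_cast<std::size_t>(srcW) * srcH);

    std::vector<unsigned char> dst(static_cast<std::size_t>(dstW) * dstH);
    for (int y = 0; y < dstH; ++y) {
        const int sy = y * srcH / dstH;          // source row for this output row
        for (int x = 0; x < dstW; ++x) {
            const int sx = x * srcW / dstW;      // source column for this output column
            dst[static_cast<std::size_t>(y) * dstW + x] =
                src[static_cast<std::size_t>(sy) * srcW + sx];
        }
    }
    return dst;
}
```

Bringing every training sample to the same size matters later, because the training code allocates its working mask once, from the size of the first image it loads.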

Next, capture images from the camera. Here is the code:

void HandGestureDialog::on_pushButton_OpenCamera_clicked()

{

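cam = cvCreateCameraCapture(0);

if(cam == NULL) // no camera could be opened

{

QMessageBox::warning (this,tr("Warning"),tr("Please Check Camera !"));

return;

}

frame = cvQueryFrame(cam); // grab one frame first to learn the frame size

if(frame == NULL)

{

cvReleaseCapture(&cam);

return;

}

timer->start(time_intervals);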

ui->pushButton_OpenCamera->setDisabled (true);

ui->pushButton_CloseCamera->setEnabled (true);

ui->pushButton_ShowPause->setEnabled (true);

ui->pushButton_SnapImage->setEnabled (true);

afterSkin = cvCreateImage (cvSize(frame->width,frame->height),IPL_DEPTH_8U,1);

}

void HandGestureDialog::readFarme()

{

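frame = cvQueryFrame(cam);

if(frame == NULL) // no frame available (camera closed or the read failed)

return;

// Pass widthStep as bytesPerLine, because IplImage rows may be padded

QImage image((const uchar*)frame->imageData,

frame->width,

frame->height,

frame->widthStep,

QImage::Format_RGB888);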

image = image.rgbSwapped();

image = image.scaled(320,240);

ui->label_CameraShow->setPixmap(QPixmap::fromImage(image));

gesture.SkinDetect (frame,afterSkin);

/*next to opencv*/

if(status_switch == Recongnise)

{

// Flips the frame into mirror image

cvFlip(frame,frame,1);

// Call the function to detect and draw the hand positions

StartRecongizeHand(frame);

}

}
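The display step above has to deal with two things: the camera frame stores pixels in BGR order, and each row may be followed by padding bytes (the row stride, `widthStep`, can be larger than `width * 3`). The following is a rough standalone sketch of that conversion, with no Qt or OpenCV involved; `bgrToPackedRgb` is a hypothetical name, not a function from the project.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: copy a BGR image whose rows are widthStep bytes apart
// into a tightly packed RGB buffer (width * 3 bytes per row).
std::vector<unsigned char> bgrToPackedRgb(const unsigned char* data,
                                          int width, int height, int widthStep)
{
    std::vector<unsigned char> rgb(static_cast<std::size_t>(width) * height * 3);
    if (rgb.empty())
        return rgb;

    for (int y = 0; y < height; ++y) {
        // Rows start widthStep bytes apart, not width * 3
        const unsigned char* row = data + static_cast<std::size_t>(y) * widthStep;
        unsigned char* out = &rgb[static_cast<std::size_t>(y) * width * 3];
        for (int x = 0; x < width; ++x) {
            out[3 * x + 0] = row[3 * x + 2]; // R
            out[3 * x + 1] = row[3 * x + 1]; // G
            out[3 * x + 2] = row[3 * x + 0]; // B
        }
    }
    return rgb;
}
```

In the Qt code the same effect comes from giving `QImage` the row stride and then calling `rgbSwapped()`.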

Here are some sample images:

The core training code:

void HandGestureDialog::on_pushButton_StartTrain_clicked()

{

QProgressDialog* process = new QProgressDialog(this);

process->setWindowTitle ("Training Model");

process->setLabelText("Processing...");

process->setModal(true);

process->show ();

gesture.setMainUIPointer (this);

gesture.Train(process);

QMessageBox::about (this,tr("Done"),tr("Gesture model training finished"));

}

void CAIGesture::Train(QProgressDialog *pBar)// train on every gesture in the training folder

{

QString curStr = QDir::currentPath ();

QString fp1 = "InfoDoc/gestureFeatureFile.yml";

fp1 = curStr + "/" + fp1;

CvFileStorage *GestureFeature=cvOpenFileStorage(fp1.toStdString ().c_str (),0,CV_STORAGE_WRITE);

FILE* fp;

QString fp2 = "InfoDoc/gestureFile.txt";

fp2 = curStr + "/" + fp2;

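fp=fopen(fp2.toStdString ().c_str (),"w");

if(GestureFeature==NULL||fp==NULL) // stop if either output file could not be opened

{

if(fp!=NULL)

fclose(fp);

if(GestureFeature!=NULL)

cvReleaseFileStorage(&GestureFeature);

delete pBar;

return;

}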

int FolderCount=0;

/* Get the current directory, then enumerate its subdirectories */

QString trainStr = curStr;

trainStr += "/TraningSample/";

QDir trainDir(trainStr);

GestureStruct gesture;

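// List only real subdirectories; this skips "." and ".." without relying on their position in the list

QFileInfoList list = trainDir.entryInfoList(QDir::Dirs | QDir::NoDotAndDotDot);

pBar->setRange(0,list.size ());

for(int i=0;i<list.size ();i++)

{

pBar->setValue(i+1);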

QFileInfo fileInfo = list.at (i);

if(fileInfo.isDir () == true)

{

FolderCount++;

QString tempStr = fileInfo.fileName ();

fprintf(fp,"%s\n",tempStr.toStdString ().c_str ());

gesture.angleName = tempStr.toStdString ()+"angleName";

gesture.anglechaName = tempStr.toStdString ()+"anglechaName";

gesture.countName = tempStr.toStdString ()+"anglecountName";

tempStr = trainStr + tempStr + "/";

QDir subDir(tempStr);

OneGestureTrain(subDir,GestureFeature,gesture);

}

}

pBar->close (); // autoClose() only reads a property; close() actually closes the dialog

delete pBar;

pBar = NULL;

fprintf(fp,"%s%d","Hand Gesture Number: ",FolderCount);

fclose(fp);

cvReleaseFileStorage(&GestureFeature);

}

void CAIGesture::OneGestureTrain(QDir GestureDir,CvFileStorage *fs,GestureStruct gesture)// train one gesture: average the features over every image in its folder

{

IplImage* TrainImage=0;

IplImage* dst=0;

CvSeq* contour=NULL;

CvMemStorage* storage;

storage = cvCreateMemStorage(0);

CvPoint center=cvPoint(0,0);

float radius=0.0;

float angle[FeatureNum][10]={0},anglecha[FeatureNum][10]={0},anglesum[FeatureNum][10]={0},anglechasum[FeatureNum][10]={0};

float count[FeatureNum]={0},countsum[FeatureNum]={0};

int FileCount=0;

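/* Read all the JPG files in this directory */

// List only regular files, so "." and ".." never appear in the list

QFileInfoList list = GestureDir.entryInfoList(QDir::Files);

QString currentDirPath = GestureDir.absolutePath ();

currentDirPath += "/";

for(int k=0;k<list.size ();k++)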

{

QFileInfo tempInfo = list.at (k);

if(tempInfo.isFile () == true)

{

QString fileNamePath = currentDirPath + tempInfo.fileName ();

TrainImage=cvLoadImage(fileNamePath.toStdString ().c_str(),1);

if(TrainImage==NULL)

{

cout << "can't load image" << endl;

cvReleaseMemStorage(&storage);

cvReleaseImage(&dst);

cvReleaseImage(&TrainImage);

return;

}

if(dst==NULL&&TrainImage!=NULL)

dst=cvCreateImage(cvGetSize(TrainImage),8,1);

SkinDetect(TrainImage,dst);

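contour=NULL;

FindBigContour(dst,contour,storage);

if(contour==NULL) // no skin region found in this sample, so skip it

{

cvReleaseImage(&TrainImage);

continue;

}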

cvZero(dst);

cvDrawContours( dst, contour, CV_RGB(255,255,255),CV_RGB(255,255,255), -1, -1, 8 );

ComputeCenter(contour,center,radius);

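// Clear the per-image buffers so values left over from the previous image are not summed again

memset(angle,0,sizeof(angle));

memset(anglecha,0,sizeof(anglecha));

memset(count,0,sizeof(count));

GetFeature(dst,center,radius,angle,anglecha,count);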

for(int j=0;j<FeatureNum;j++)

{

countsum[j]+=count[j];

for(int k=0;k<10;k++)

{

anglesum[j][k]+=angle[j][k];

anglechasum[j][k]+=anglecha[j][k];

}

}

FileCount++;

cvReleaseImage(&TrainImage);

}

}

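if(FileCount==0) // nothing to average; avoid dividing by zero below

{

cvReleaseMemStorage(&storage);

cvReleaseImage(&dst);

return;

}

for(int i=0;i<FeatureNum;i++)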

{

gesture.count[i]=countsum[i]/FileCount;

for(int j=0;j<10;j++)

{

gesture.angle[i][j]=anglesum[i][j]/FileCount;

gesture.anglecha[i][j]=anglechasum[i][j]/FileCount;

}

}

cvStartWriteStruct(fs,gesture.angleName.c_str (),CV_NODE_SEQ,NULL);// start writing to the yml file

int i=0;

for(i=0;i<FeatureNum;i++)

cvWriteRawData(fs,&gesture.angle[i][0],10,"f");// write the skin-arc angles

cvEndWriteStruct(fs);

cvStartWriteStruct(fs,gesture.anglechaName.c_str (),CV_NODE_SEQ,NULL);

for(i=0;i<FeatureNum;i++)

cvWriteRawData(fs,&gesture.anglecha[i][0],10,"f");// write the non-skin-arc angles

cvEndWriteStruct(fs);

cvStartWriteStruct(fs,gesture.countName.c_str (),CV_NODE_SEQ,NULL);

cvWriteRawData(fs,&gesture.count[0],FeatureNum,"f");// write the number of skin arcs in each layer

cvEndWriteStruct(fs);

cvReleaseMemStorage(&storage);

cvReleaseImage(&dst);

}
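The training routine above boils down to one idea: compute a feature vector for every sample image of a gesture, then store the element-wise mean as that gesture's template. Here is a small sketch of just that averaging step, using `std::vector` instead of the fixed-size arrays in the project; `meanFeature` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: element-wise mean of equally sized feature vectors.
// Returns an empty vector when there are no samples, so the caller never
// divides by zero.
std::vector<float> meanFeature(const std::vector<std::vector<float> >& samples)
{
    if (samples.empty())
        return std::vector<float>();

    std::vector<float> mean(samples[0].size(), 0.0f);
    for (std::size_t s = 0; s < samples.size(); ++s) {
        assert(samples[s].size() == mean.size());
        for (std::size_t k = 0; k < mean.size(); ++k)
            mean[k] += samples[s][k];            // accumulate the sum first
    }
    for (std::size_t k = 0; k < mean.size(); ++k)
        mean[k] /= static_cast<float>(samples.size());
    return mean;
}
```

Each sample has to start from a clean buffer; otherwise entries that one image does not write would still carry the previous image's values into the sum.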

void CAIGesture::SkinDetect(IplImage* src,IplImage* dst)

{

IplImage* hsv = cvCreateImage(cvGetSize(src), IPL_DEPTH_8U, 3);//use to split to HSV

IplImage* tmpH1 = cvCreateImage( cvGetSize(src), IPL_DEPTH_8U, 1);//Use To Skin Detect

IplImage* tmpS1 = cvCreateImage(cvGetSize(src), IPL_DEPTH_8U, 1);

IplImage* tmpH2 = cvCreateImage(cvGetSize(src), IPL_DEPTH_8U, 1);

IplImage* tmpS3 = cvCreateImage(cvGetSize(src), IPL_DEPTH_8U, 1);

IplImage* tmpH3 = cvCreateImage(cvGetSize(src), IPL_DEPTH_8U, 1);

IplImage* tmpS2 = cvCreateImage(cvGetSize(src), IPL_DEPTH_8U, 1);

IplImage* H = cvCreateImage( cvGetSize(src), IPL_DEPTH_8U, 1);

IplImage* S = cvCreateImage( cvGetSize(src), IPL_DEPTH_8U, 1);

IplImage* V = cvCreateImage( cvGetSize(src), IPL_DEPTH_8U, 1);

IplImage* src_tmp1=cvCreateImage(cvGetSize(src),8,3);

cvSmooth(src,src_tmp1,CV_GAUSSIAN,3,3); //Gaussian Blur

cvCvtColor(src_tmp1, hsv, CV_BGR2HSV );//Color Space to Convert

cvCvtPixToPlane(hsv,H,S,V,0);//To Split 3 channel

/*********************Skin Detect**************/

cvInRangeS(H,cvScalar(0.0,0.0,0,0),cvScalar(20.0,0.0,0,0),tmpH1);

cvInRangeS(S,cvScalar(75.0,0.0,0,0),cvScalar(200.0,0.0,0,0),tmpS1);

cvAnd(tmpH1,tmpS1,tmpH1,0);

// Red Hue with Low Saturation

// Hue 0 to 26 degree and Sat 20 to 90

cvInRangeS(H,cvScalar(0.0,0.0,0,0),cvScalar(13.0,0.0,0,0),tmpH2);

cvInRangeS(S,cvScalar(20.0,0.0,0,0),cvScalar(90.0,0.0,0,0),tmpS2);

cvAnd(tmpH2,tmpS2,tmpH2,0);

// Red Hue to Pink with Low Saturation

// Hue 340 to 360 degree and Sat 15 to 90

cvInRangeS(H,cvScalar(170.0,0.0,0,0),cvScalar(180.0,0.0,0,0),tmpH3);

cvInRangeS(S,cvScalar(15.0,0.0,0,0),cvScalar(90.,0.0,0,0),tmpS3);

cvAnd(tmpH3,tmpS3,tmpH3,0);

// Combine the Hue and Sat detections

cvOr(tmpH3,tmpH2,tmpH2,0);

cvOr(tmpH1,tmpH2,tmpH1,0);

cvCopy(tmpH1,dst);

cvReleaseImage(&hsv);

cvReleaseImage(&tmpH1);

cvReleaseImage(&tmpS1);

cvReleaseImage(&tmpH2);

cvReleaseImage(&tmpS2);

cvReleaseImage(&tmpH3);

cvReleaseImage(&tmpS3);

cvReleaseImage(&H);

cvReleaseImage(&S);

cvReleaseImage(&V);

cvReleaseImage(&src_tmp1);

}
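Stripped of the image buffers, the skin test in `SkinDetect` is a per-pixel rule on hue and saturation: a pixel counts as skin if it falls into any of three (H, S) boxes. The sketch below restates that rule for a single pixel on OpenCV's 8-bit HSV scale, where H is stored as degrees divided by 2. The name `isSkinHS` is hypothetical, and treating both bounds of each range as inclusive is an assumption of this sketch.

```cpp
#include <cassert>

// True when v lies in [lo, hi]; inclusive bounds are an assumption of this sketch.
static bool inRange(int v, int lo, int hi)
{
    return v >= lo && v <= hi;
}

// Hypothetical per-pixel version of the three (H, S) boxes used above.
// h: hue on OpenCV's 8-bit scale (0..180), s: saturation (0..255).
bool isSkinHS(int h, int s)
{
    const bool saturatedRed = inRange(h, 0, 20)    && inRange(s, 75, 200);
    const bool paleRed      = inRange(h, 0, 13)    && inRange(s, 20, 90);  // hue 0 to 26 degrees
    const bool pink         = inRange(h, 170, 180) && inRange(s, 15, 90);  // hue 340 to 360 degrees
    return saturatedRed || paleRed || pink;
}
```

Writing the rule this way makes it easy to try other thresholds on a few hand-picked pixels before touching the image pipeline.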

// Find the biggest contour (measured by bounding-box area)

void CAIGesture::FindBigContour(IplImage* src,CvSeq* (&contour),CvMemStorage* storage)

{

CvSeq* contour_tmp=NULL,*contourPos=NULL; // initialized so "contour" is never set from an uninitialized pointer

int contourcount=cvFindContours(src, storage, &contour_tmp, sizeof(CvContour), CV_RETR_LIST, CV_CHAIN_APPROX_NONE );

if(contourcount==0)

return;

CvRect bndRect = cvRect(0,0,0,0);

double contourArea,maxcontArea=0;

for( ; contour_tmp != 0; contour_tmp = contour_tmp->h_next )

{

bndRect = cvBoundingRect( contour_tmp, 0 );

contourArea=bndRect.width*bndRect.height;

if(contourArea>=maxcontArea)// keep the biggest contour seen so far

{

maxcontArea=contourArea;

contourPos=contour_tmp;

}

}

contour=contourPos;

}
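Note that `FindBigContour` ranks contours by the area of their bounding rectangle, not by the contour area itself. The selection logic on its own looks roughly like this; `Box` and `largestBoxIndex` are hypothetical stand-ins for `CvRect` and the loop above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for CvRect.
struct Box { int x, y, width, height; };

// Index of the box with the largest area, or -1 when the list is empty.
// On ties the later box wins, mirroring the ">=" comparison in the loop above.
int largestBoxIndex(const std::vector<Box>& boxes)
{
    int best = -1;
    long long bestArea = -1;
    for (std::size_t i = 0; i < boxes.size(); ++i) {
        const long long area = static_cast<long long>(boxes[i].width) * boxes[i].height;
        if (area >= bestArea) {
            bestArea = area;
            best = static_cast<int>(i);
        }
    }
    return best;
}
```

Returning -1 for an empty list gives the caller an explicit "nothing found" case to check before using the result.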

//Calculate The Center

void CAIGesture::ComputeCenter(CvSeq* (&contour),CvPoint& center,float& radius)

{

CvMoments m;

double M00,X,Y;

cvMoments(contour,&m,0);

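M00=cvGetSpatialMoment(&m,0,0);

if(M00==0) // degenerate contour with no area, so there is no centroid to compute

{

radius=0;

return;

}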

X=cvGetSpatialMoment(&m,1,0)/M00;

Y=cvGetSpatialMoment(&m,0,1)/M00;

center.x=(int)X;

center.y=(int)Y;

/*******************To find the radius**********************/

int hullcount;

CvSeq* hull;

CvPoint pt;

double tmpr1,r=0;

hull=cvConvexHull2(contour,0,CV_COUNTER_CLOCKWISE,0);

hullcount=hull->total;

for(int i=0;i<hullcount;i++) // start at 0 so the first hull point is not skipped

{

pt=**CV_GET_SEQ_ELEM(CvPoint*,hull,i);//get each point

tmpr1=sqrt((double)((center.x-pt.x)*(center.x-pt.x))+(double)((center.y-pt.y)*(center.y-pt.y)));// distance from this hull point to the center

if(tmpr1>r)//as the max radius

r=tmpr1;

}

radius=r;

}
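`ComputeCenter` produces two numbers: the centroid of the hand region (first-order moments divided by the zeroth moment) and a radius, taken as the largest distance from that centroid to any convex-hull point. The sketch below reproduces the idea for a plain polygon using the shoelace formula. It illustrates the math only and is not the OpenCV implementation; `Pt`, `polygonCentroid` and `maxDistance` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };

// Area centroid of a simple polygon (shoelace formula).
// Returns false for a degenerate polygon with zero area.
bool polygonCentroid(const std::vector<Pt>& poly, Pt& center)
{
    double a = 0.0, cx = 0.0, cy = 0.0;   // a accumulates twice the signed area
    const std::size_t n = poly.size();
    for (std::size_t i = 0; i < n; ++i) {
        const Pt& p = poly[i];
        const Pt& q = poly[(i + 1) % n];
        const double cross = p.x * q.y - q.x * p.y;
        a  += cross;
        cx += (p.x + q.x) * cross;
        cy += (p.y + q.y) * cross;
    }
    if (a == 0.0)
        return false;
    center.x = cx / (3.0 * a);
    center.y = cy / (3.0 * a);
    return true;
}

// Largest distance from center to any of the points (every point is checked).
double maxDistance(const std::vector<Pt>& pts, const Pt& center)
{
    double r = 0.0;
    for (std::size_t i = 0; i < pts.size(); ++i) {
        const double d = std::sqrt((pts[i].x - center.x) * (pts[i].x - center.x) +
                                   (pts[i].y - center.y) * (pts[i].y - center.y));
        if (d > r)
            r = d;
    }
    return r;
}
```

The radius is what later scales the sampling circles, so the features do not depend on how large the hand appears in the image.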

void CAIGesture::GetFeature(IplImage* src,CvPoint& center,float radius,

float angle[FeatureNum][10],

float anglecha[FeatureNum][10],

float count[FeatureNum])

{

int width=src->width;

int height=src->height;

int step=src->widthStep/sizeof(uchar);

uchar* data=(uchar*)src->imageData;

float R=0.0;

int a1,b1,x1,y1,a2,b2,x2,y2;//the distance of the center to other point

float angle1_tmp[200]={0},angle2_tmp[200]={0},angle1[50]={0},angle2[50]={0};// temporary buffers for computing the angles

int angle1_tmp_count=0,angle2_tmp_count=0,angle1count=0,angle2count=0,anglecount=0;

for(int i=0;i<FeatureNum;i++)// extract features on FeatureNum concentric layers (that is, 5 layers)

{

R=(i+4)*radius/9;

for(int j=0;j<=3600;j++)

{

if(j<=900)

{

a1=(int)(R*sin(j*3.14/1800));// sketch this on paper and the geometry becomes clear

b1=(int)(R*cos(j*3.14/1800));

x1=center.x-b1;

y1=center.y-a1;

a2=(int)(R*sin((j+1)*3.14/1800));

b2=(int)(R*cos((j+1)*3.14/1800));

x2=center.x-b2;

y2=center.y-a2;

}

else

{

if(j>900&&j<=1800)

{

a1=(int)(R*sin((j-900)*3.14/1800));

b1=(int)(R*cos((j-900)*3.14/1800));

x1=center.x+a1;

y1=center.y-b1;

a2=(int)(R*sin((j+1-900)*3.14/1800));

b2=(int)(R*cos((j+1-900)*3.14/1800));

x2=center.x+a2;

y2=center.y-b2;

}

else

{

if(j>1800&&j<2700)

{

a1=(int)(R*sin((j-1800)*3.14/1800));

b1=(int)(R*cos((j-1800)*3.14/1800));

x1=center.x+b1;

y1=center.y+a1;

a2=(int)(R*sin((j+1-1800)*3.14/1800));

b2=(int)(R*cos((j+1-1800)*3.14/1800));

x2=center.x+b2;

y2=center.y+a2;

}

else

{

a1=(int)(R*sin((j-2700)*3.14/1800));

b1=(int)(R*cos((j-2700)*3.14/1800));

x1=center.x-a1;

y1=center.y+b1;

a2=(int)(R*sin((j+1-2700)*3.14/1800));

b2=(int)(R*cos((j+1-2700)*3.14/1800));

x2=center.x-a2;

y2=center.y+b2;

}

}

}

if(x1>0&&x1<width&&x2>0&&x2<width&&y1>0&&y1<height&&y2>0&&y2<height)

{

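if((int)data[y1*step+x1]==255&&(int)data[y2*step+x2]==0&&angle1_tmp_count<200) // the last test keeps the 200-entry buffer from overflowing

{

angle1_tmp[angle1_tmp_count]=(float)(j*0.1);// angle of a skin-to-non-skin transition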

angle1_tmp_count++;

}

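else if((int)data[y1*step+x1]==0&&(int)data[y2*step+x2]==255&&angle2_tmp_count<200) // the last test keeps the 200-entry buffer from overflowing

{

angle2_tmp[angle2_tmp_count]=(float)(j*0.1);// angle of a non-skin-to-skin transition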

angle2_tmp_count++;

}

}

}

int j=0;

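for(j=0;j<angle1_tmp_count&&angle1count<49;j++) // cap the count so angle1[j+1] further down stays inside the 50-entry array

{

if(j>0&&angle1_tmp[j]-angle1_tmp[j-1]<0.2)// ignore transitions too close to the previous one; j>0 avoids reading index -1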

continue;

angle1[angle1count]=angle1_tmp[j];

angle1count++;

}

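for(j=0;j<angle2_tmp_count&&angle2count<49;j++) // cap the count so angle2[j+1] further down stays inside the 50-entry array

{

if(j>0&&angle2_tmp[j]-angle2_tmp[j-1]<0.2)// j>0 avoids reading index -1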

continue;

angle2[angle2count]=angle2_tmp[j];

angle2count++;

}

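for(j=0;j<max(angle1count,angle2count)&&anglecount<9;j++) // stop before the 10-entry output rows overflow

{

if(angle1[0]>angle2[0])

{

if(angle1[j]-angle2[j]<7)// ignore arcs narrower than 7 degrees; a finger is usually wider than that

continue;

angle[i][anglecount]=(float)((angle1[j]-angle2[j])*0.01);// width of the skin arc

anglecha[i][anglecount]=(float)((angle2[j+1]-angle1[j])*0.01);// width of the non-skin arc, e.g. the gap between fingers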

anglecount++;

}

else

{

if(angle1[j+1]-angle2[j]<7)

continue;

anglecount++;

angle[i][anglecount]=(float)((angle1[j+1]-angle2[j])*0.01);

anglecha[i][anglecount]=(float)((angle2[j]-angle1[j])*0.01);

}

}

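if(angle1count>0&&angle2count>0) // the wrap-around arc only exists when both kinds of transition were found

{

if(angle1[0]<angle2[0])

angle[i][0]=(float)((angle1[0]+360-angle2[angle2count-1])*0.01);

else

anglecha[i][0]=(float)((angle2[0]+360-angle1[angle1count-1])*0.01);

}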

count[i]=(float)anglecount;

angle1_tmp_count=0,angle2_tmp_count=0,angle1count=0,angle2count=0,anglecount=0;

for(j=0;j<200;j++)

{

angle1_tmp[j]=0;

angle2_tmp[j]=0;

}

for(j=0;j<50;j++)

{

angle1[j]=0;

angle2[j]=0;

}

}

}
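The heart of `GetFeature` is walking around a circle centered on the hand and recording the angles at which the binary mask flips between skin (255) and background (0). The four quadrant branches above can be replaced by one parametric formula. Here is a simplified sketch of that scan, under a few assumptions: the mask is a tightly packed buffer, the angle is measured from the +x axis toward +y (a different convention from the code above), and a transition is recorded at the first sample after the flip. `Transitions` and `scanCircle` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Transitions {
    std::vector<double> skinToBackground;  // angles in degrees
    std::vector<double> backgroundToSkin;  // angles in degrees
};

// Walk once around a circle of radius R centered at (cx, cy) and record where
// the mask (255 = skin, 0 = background) changes value between samples.
Transitions scanCircle(const std::vector<unsigned char>& mask, int width, int height,
                       int cx, int cy, double R, int steps = 3600)
{
    const double kPi = 3.14159265358979323846;
    Transitions t;
    int prev = -1;                               // -1 means "no valid previous sample"
    for (int j = 0; j <= steps; ++j) {
        const double theta = 2.0 * kPi * j / steps;
        const int x = cx + static_cast<int>(std::lround(R * std::cos(theta)));
        const int y = cy + static_cast<int>(std::lround(R * std::sin(theta)));
        if (x < 0 || x >= width || y < 0 || y >= height) {
            prev = -1;                           // left the image: restart the comparison
            continue;
        }
        const int cur = mask[static_cast<std::size_t>(y) * width + x] ? 1 : 0;
        const double degrees = 360.0 * j / steps;
        if (prev == 1 && cur == 0) t.skinToBackground.push_back(degrees);
        if (prev == 0 && cur == 1) t.backgroundToSkin.push_back(degrees);
        prev = cur;
    }
    return t;
}
```

The differences between consecutive transition angles then give the widths of the skin arcs (fingers) and of the gaps between them, which is what the feature arrays store for each layer.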

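That covers the core code: the feature-extraction functions for the hand-built training library, plus the basic skin-color detection and connected-region (contour) detection functions.

Now let's look at the code that recognizes a specific gesture: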

void HandGestureDialog::on_pushButton_StartRecongnise_clicked()

{

if(cam==NULL)

{

QMessageBox::warning (this,tr("Warning"),tr("Please Check Camera !"));

return;

}

status_switch = Nothing;

status_switch = Recongnise;

}

void HandGestureDialog::StartRecongizeHand (IplImage *img)

{

// Create a string that contains the exact cascade name

// Contains the trained classifier for detecting hand

const char *cascade_name="hand.xml";

// Create memory for calculations

static CvMemStorage* storage = 0;

// Create a new Haar classifier

static CvHaarClassifierCascade* cascade = 0;

// Sets the scale with which the rectangle is drawn with

int scale = 1;

// Create two points to represent the hand locations

CvPoint pt1, pt2;

// Looping variable

int i;

// Load the HaarClassifierCascade only once; the pointer is static, so reloading it on every frame would leak memory

if( !cascade )

cascade = (CvHaarClassifierCascade*)cvLoad( cascade_name, 0, 0, 0 );

// Check whether the cascade has loaded successfully. Otherwise report an error and quit

if( !cascade )

{

fprintf( stderr, "ERROR: Could not load classifier cascade\n" );

return;

}

// Allocate the memory storage once and reuse it for every frame

if( !storage )

storage = cvCreateMemStorage(0);

// Create a new named window with title: result

cvNamedWindow( "result", 1 );

// Clear the memory storage which was used before

cvClearMemStorage( storage );

// Find whether the cascade is loaded, to find the hands. If yes, then:

if( cascade )

{

// There can be more than one hand in an image. So create a growable sequence of hands.

// Detect the objects and store them in the sequence

CvSeq* hands = cvHaarDetectObjects( img, cascade, storage,

1.1, 2, CV_HAAR_DO_CANNY_PRUNING,

cvSize(40, 40) );

// Loop the number of hands found.

for( i = 0; i < (hands ? hands->total : 0); i++ )

{

// Create a new rectangle for drawing the hand

CvRect* r = (CvRect*)cvGetSeqElem( hands, i );

// Find the dimensions of the hand,and scale it if necessary

pt1.x = r->x*scale;

pt2.x = (r->x+r->width)*scale;

pt1.y = r->y*scale;

pt2.y = (r->y+r->height)*scale;

// Draw the rectangle in the input image

cvRectangle( img, pt1, pt2, CV_RGB(230,20,232), 3, 8, 0 );

}

}

// Show the image in the window named "result"

cvShowImage( "result", img );

cvWaitKey (30);

}
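Because `StartRecongizeHand` runs once per camera frame, anything expensive it needs (the cascade file in particular) should be loaded a single time and then reused. One common way to express this in C++ is a function-local static, sketched below. `Classifier`, `loadClassifier` and `sharedClassifier` are hypothetical stand-ins; the real program would call `cvLoad` where the loader is.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-in for an expensive resource such as a loaded cascade.
struct Classifier { std::string path; };

static int g_loadCount = 0;   // counts how often the expensive load actually ran

// Hypothetical loader; the real program would call cvLoad here.
Classifier loadClassifier(const std::string& path)
{
    ++g_loadCount;
    Classifier c;
    c.path = path;
    return c;
}

// Returns the same instance on every call; the loader runs only on the first one.
const Classifier& sharedClassifier()
{
    static const Classifier instance = loadClassifier("hand.xml");
    return instance;
}
```

Calling `sharedClassifier()` from a per-frame function costs nothing after the first call, which is the behavior wanted from the `static` cascade pointer above.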

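Note that this cascade file works best on a half-closed hand gesture:

Thanks to everyone for reading this far. My work here is rough, and there are still parts I don't fully understand myself, so criticism and advice from the experts are very welcome!

I have uploaded the source code to the resource page below so that everyone can study and modify it.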

http://download.csdn.net/detail/liuguiyangnwpu/7467891