Hadoop Web Project: Friend Find System

Software used: MyEclipse 10.0, JDK 1.7, Hadoop 2.6, MySQL 5.6, EasyUI 1.3.6, jQuery 2.0, Spring 4.1.3, Hibernate 4.3.1, Struts 2.3.1, Tomcat 7, Maven 3.2.1.

Project download: https://github.com/fansy1990/friend_find . Deployment guide: http://blog.csdn.net/fansy1990/article/details/46481409 .


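1. Project Overview

Friend Find is a system for finding similar users. After entering their own information, users can find like-minded friends within the system. It uses user data from http://stackoverflow.com/. Stack Overflow is a question-and-answer site for programming and is part of the Stack Exchange Network.

Registered users can ask and answer questions. Once they have enough "reputation", they can also vote questions and answers up or down and edit them. Reputation is the score a user earns by interacting with the site. As it grows, the user gains privileges; for example, commenting on answers requires more than 50 reputation points. At certain milestones the site also awards badges to encourage contributions.

The project rests on one assumption: a user's "attitude" toward questions in a domain reflects that user's values, and users can be clustered into groups by those values. The attitude is measured by a few attributes. The raw data (the user records) contains many attributes, and the ones that best reflect a user's views are taken as that user's "attitude": reputation, upVotes, downVotes and views. These four attributes are used to cluster the users.

The clustering algorithm is Clustering by fast search and find of density peaks, implemented with MapReduce (MR). This implementation differs in approach from the one at http://blog.csdn.net/fansy1990/article/details/46364697.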

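2. Running the Project

2.1 Preparation

1. Download the project from https://github.com/fansy1990/friend_find and deploy it following http://blog.csdn.net/fansy1990/article/details/46481409;

1) Following the database configuration, create a database named friend in MySQL;

2) Run the deployed project. It creates the tables hconstants, loginuser, userdata and usergroup in the database automatically:
- loginuser is the login table and is initialized automatically, with two default users: admin/admin and test/test.
- hconstants stores the cloud-platform (Hadoop cluster) parameters.
- userdata stores the raw user data.
- usergroup stores each user's group after clustering.

2. Deploy Hadoop 2.6. Pseudo-distributed and fully distributed modes both work; this project was tested in pseudo-distributed mode. The clock of the cluster's Linux machines must match the clock of the machine running Tomcat, because job monitoring uses time as the signal to stop monitoring (see below).

3. Use MyEclipse's export function to package all the source code into a jar. Then copy the jar to $HADOOP_HOME/share/hadoop/mapreduce/ on the Hadoop cluster.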

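2.2 Running

1. Initialize the tables

Initialize the cluster configuration table hconstants.

Open the home page at http://localhost/friend_find. The Tomcat used here listens on the default port 80, and the web application is deployed as friend_find. The following page (the system home page) appears:

Click Login to see the system introduction.

Click Initialize Tables and select each table in turn to initialize it.

Click Hadoop Cluster Configuration Table to view the data:

The initial values are the configuration of the author's virtual machine, so change them to match your own cluster. Click a row, then use the toolbar to edit or save it.

2. System raw files:

The system's raw files are located in the project at: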

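3. Project Workflow

The workflow follows the navigation bar on the left of the home page from top to bottom, carrying out each step of the data mining process in turn.

3.1 Data Exploration

Download the raw data file ask_ubuntu_users.xml and open it:

The raw data contains 19550 records in total. Every line except the 1st, the 2nd and the last is user data, although line 3 is not a real user's data but a description of the site.

Each user needs a primary key that uniquely identifies it. EmailHash is used rather than Id, on the assumption that accounts with the same EmailHash belong to the same person. Under this assumption, analysing the raw data (after importing all of it into the database) shows that EmailHash has duplicate values. The data therefore has to be preprocessed to remove duplicates.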

3.2 Data Preprocessing

1. Deduplication

Deduplication runs on the Hadoop cluster: first upload ask_ubuntu_users.xml to the cluster, then run an MR job to filter it.
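The deduplication MR job itself is not shown in this article. The sketch below illustrates the same rule on a single machine. It assumes each user record is one line carrying an `EmailHash="..."` attribute and keeps the first occurrence of each hash. Which duplicate the real job keeps is not stated, and in MR the order of values reaching a reducer is not guaranteed. `DedupSketch` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical single-machine helper: keeps the first row seen for each EmailHash. */
class DedupSketch {
    // Captures the value of the EmailHash attribute in a <row .../> line
    private static final Pattern EMAIL_HASH = Pattern.compile("EmailHash=\"([^\"]*)\"");

    static List<String> dedupByEmailHash(List<String> lines) {
        Set<String> seen = new HashSet<String>();
        List<String> kept = new ArrayList<String>();
        for (String line : lines) {
            Matcher m = EMAIL_HASH.matcher(line);
            if (!m.find()) {
                continue; // no EmailHash: header lines, <users>, </users>, or rows without a hash
            }
            if (seen.add(m.group(1))) { // add() returns false when the hash was already seen
                kept.add(line);
            }
        }
        return kept;
    }
}
```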

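2. Data serialization

The MR job that computes pairwise distances between user vectors reads serialized files (SequenceFiles), so the data must be serialized first.

3.3 Modeling

Modeling clusters the data with the fast density-peaks clustering algorithm, in the following steps: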

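1. Compute the distance between every pair of user vectors;

2. From the distances, compute each user vector's local density;

3. From the results of 1 and 2, compute each user vector's minimum distance, meaning its distance to the nearest vector with a higher local density;

4. From the results of 2 and 3, draw the decision graph and choose the local-density and minimum-distance thresholds for cluster centers;

5. Use those thresholds to find the cluster center vectors;

6. Classify the users according to the cluster center vectors.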
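Steps 1 to 5 can be made concrete with a minimal in-memory sketch for a small data set. It is not the project's MR implementation. It assumes Euclidean distance and the cut-off density. Following the convention of the density-peaks paper, a point with no denser neighbour takes its largest distance to any other point as delta. Step 4 (reading thresholds off the decision graph) is done by eye, so the thresholds are plain parameters here. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical in-memory sketch of density-peaks steps 1-5 (cut-off kernel, Euclidean distance). */
class DensityPeaksSketch {

    // Step 1: Euclidean distance between two vectors
    static double distance(double[] a, double[] b) {
        double sum = 0;
        for (int k = 0; k < a.length; k++) {
            double d = a[k] - b[k];
            sum += d * d;
        }
        return Math.sqrt(sum);
    }

    // Step 2: cut-off local density = number of other points closer than dc
    static int[] localDensity(double[][] points, double dc) {
        int n = points.length;
        int[] rho = new int[n];
        for (int i = 0; i < n; i++) {
            for (int j = i + 1; j < n; j++) { // each unordered pair once
                if (distance(points[i], points[j]) < dc) {
                    rho[i]++;
                    rho[j]++;
                }
            }
        }
        return rho;
    }

    // Step 3: distance to the nearest point with a strictly higher density;
    // a point with no denser point gets its largest distance instead
    static double[] minDistance(double[][] points, int[] rho) {
        int n = points.length;
        double[] delta = new double[n];
        for (int i = 0; i < n; i++) {
            double nearestDenser = Double.POSITIVE_INFINITY;
            double farthest = 0;
            for (int j = 0; j < n; j++) {
                if (j == i) {
                    continue;
                }
                double d = distance(points[i], points[j]);
                farthest = Math.max(farthest, d);
                if (rho[j] > rho[i] && d < nearestDenser) {
                    nearestDenser = d;
                }
            }
            delta[i] = Double.isInfinite(nearestDenser) ? farthest : nearestDenser;
        }
        return delta;
    }

    // Step 5: indices whose density and delta both exceed the thresholds chosen from the decision graph
    static List<Integer> centers(int[] rho, double[] delta, double minRho, double minDelta) {
        List<Integer> ids = new ArrayList<Integer>();
        for (int i = 0; i < rho.length; i++) {
            if (rho[i] > minRho && delta[i] > minDelta) {
                ids.add(i);
            }
        }
        return ids;
    }
}
```

With two tight groups, each having one central point, the two central points are the only ones with both a high density and a large delta. Those are the points that end up in the upper-right corner of the decision graph.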

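3.4 Recommendation

Modeling yields the cluster center vectors and the percentage of users in each group. Based on the classification result, users are then recommended to one another within their group.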
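How the classification job assigns users is not shown here. The following minimal sketch assumes each user goes to the nearest cluster center by Euclidean distance, and that recommendations are simply the other members of the same group. The density-peaks paper instead assigns each point to the cluster of its nearest denser neighbour; nearest-center assignment is a simpler substitute. Names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: nearest-center classification plus in-group recommendation. */
class NearestCenterSketch {

    static double distance(double[] a, double[] b) {
        double sum = 0;
        for (int k = 0; k < a.length; k++) {
            double d = a[k] - b[k];
            sum += d * d;
        }
        return Math.sqrt(sum);
    }

    /** Returns, for each user vector, the index of the closest center. */
    static int[] assign(double[][] users, double[][] centers) {
        int[] label = new int[users.length];
        for (int i = 0; i < users.length; i++) {
            int best = 0;
            double bestDist = distance(users[i], centers[0]);
            for (int c = 1; c < centers.length; c++) {
                double d = distance(users[i], centers[c]);
                if (d < bestDist) {
                    bestDist = d;
                    best = c;
                }
            }
            label[i] = best;
        }
        return label;
    }

    /** Other users in the same group as user u. */
    static List<Integer> recommend(int[] label, int u) {
        List<Integer> out = new ArrayList<Integer>();
        for (int i = 0; i < label.length; i++) {
            if (i != u && label[i] == label[u]) {
                out.add(i);
            }
        }
        return out;
    }
}
```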

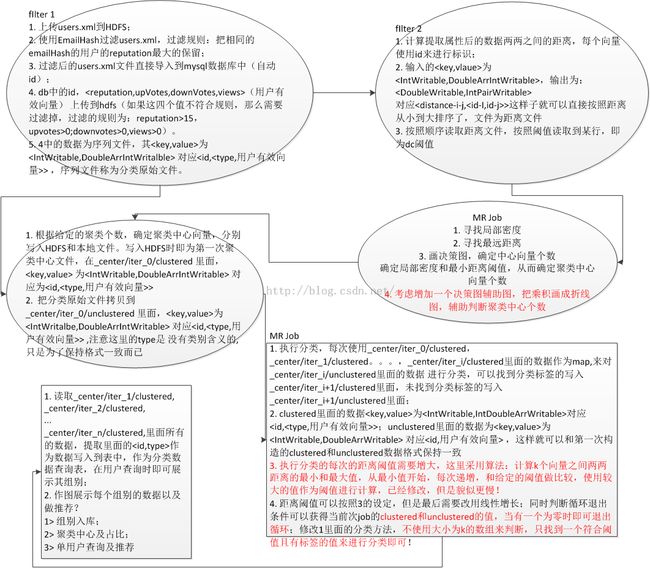

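The project flowchart is as follows:

4. Project Features and Implementation

The main features are:

4.1 Database Table Maintenance

Table maintenance consists of two parts:
- Table initialization: initializing the login table and the Hadoop cluster configuration table.
- CRUD (create, read, update, delete) on the login table, the user data table and the Hadoop cluster configuration table.

All CRUD operations go through a single DBService class backed by a generic DAO. A separate DAO per table would bloat the code. Instead, every table entity implements one interface, ObjectInterface, whose method populates the object from a Map.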

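public interface ObjectInterface {
    /**
     * Avoids writing one method per table: the object is populated automatically according to the table name
     * @param map
     * @return
     */
    public Object setObjectByMap(Map<String,Object> map);
}

When saving, the table name and JSON string passed in from the front end are used directly for the update: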

/**
 * Update or insert a row
 * Avoids one method per table: the entity is populated automatically according to the table name
 * @param tableName
 * @param json
 * @return
 */
public boolean updateOrSave(String tableName,String json){
    try{
        // Look up the entity class from the table name and populate it
        Object o = Utils.getEntity(Utils.getEntityPackages(tableName),json);
        baseDao.saveOrUpdate(o);
        log.info("Saved table {}!",new Object[]{tableName});
    }catch(Exception e){
        e.printStackTrace();
        return false;
    }
    return true;
}

/**
 * Gets the entity object for a class name
 * @param tableName fully qualified name of the entity class
 * @param json row data as a JSON string
 * @return the populated entity, or null if the JSON cannot be parsed
 * @throws ClassNotFoundException
 * @throws IllegalAccessException
 * @throws InstantiationException
 * @throws IOException
 * @throws JsonMappingException
 * @throws JsonParseException
 */
@SuppressWarnings("unchecked")
public static Object getEntity(String tableName, String json) throws ClassNotFoundException, InstantiationException, IllegalAccessException, JsonParseException, JsonMappingException, IOException {
    Class<?> cl = Class.forName(tableName);
    ObjectInterface o = (ObjectInterface)cl.newInstance();
    Map<String,Object> map = new HashMap<String,Object>();
    ObjectMapper mapper = new ObjectMapper();
    try {
        // convert the JSON string to a Map
        map = mapper.readValue(json, Map.class);
        return o.setObjectByMap(map);
    } catch (Exception e) {
        e.printStackTrace();
    }
    return null;
}
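The next sketch shows how a table entity plugs into this mechanism. It uses a hypothetical entity, `LoginUserSketch`, with made-up fields `username` and `password`; the project's real entity classes are not shown. It implements `ObjectInterface` and is created by the same kind of reflective instantiation `getEntity` performs. Jackson's JSON-to-Map step is replaced by a hand-built map so the sketch runs without extra libraries.

```java
import java.util.Map;

interface ObjectInterface {
    Object setObjectByMap(Map<String, Object> map);
}

/** Hypothetical entity; the field names are illustrative, not the project's real columns. */
class LoginUserSketch implements ObjectInterface {
    private String username;
    private String password;

    public LoginUserSketch() {
        // reflective instantiation below needs a no-argument constructor
    }

    @Override
    public Object setObjectByMap(Map<String, Object> map) {
        this.username = (String) map.get("username");
        this.password = (String) map.get("password");
        return this;
    }

    public String getUsername() { return username; }
    public String getPassword() { return password; }
}

class EntityFactorySketch {
    /** Same idea as Utils.getEntity: load the class by name, instantiate it, fill it from the map. */
    static Object getEntity(String className, Map<String, Object> map) {
        try {
            Class<?> cl = Class.forName(className);
            ObjectInterface o = (ObjectInterface) cl.getDeclaredConstructor().newInstance();
            return o.setObjectByMap(map);
        } catch (ReflectiveOperationException e) {
            throw new IllegalArgumentException("Cannot instantiate " + className, e);
        }
    }
}
```

Adding a new table then only requires a new entity class implementing `setObjectByMap`; the save path stays unchanged.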

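4.2 Data Preprocessing

Data preprocessing covers these steps:
- file upload
- deduplication
- file download
- database import
- DB filtering to HDFS
- distance calculation
- the best DC

1. File upload

File upload copies a local file to HDFS from the page below. On the server side it comes down to one call:

fs.copyFromLocalFile(src, dst);

On success the page shows "Operation succeeded". The request is submitted asynchronously with Ajax: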

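// ===== uploadId: bind the click handler of the upload button
$('#uploadId').bind('click', function(){
    var input_i=$('#localFileId').val();
    // show the progress dialog
    popupProgressbar('Data upload','Uploading data...',1000);
    // submit the task asynchronously via Ajax
    callByAJax('cloud/cloud_upload.action',{input:input_i});
});

The Ajax call goes through a wrapper function that is reused throughout the project: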

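// Submit asynchronously via Ajax
// If the task returns success, show a success message; otherwise show the failure reason
function callByAJax(url,data_){
    $.ajax({
        url : url,
        data: data_,
        async:true,
        dataType:"json",
        context : document.body,
        success : function(data) {
            // $.messager.progress('close');
            closeProgressbar();
            console.info("data.flag:"+data.flag);
            var retMsg;
            if("true"==data.flag){
                retMsg='Operation succeeded!';
            }else{
                retMsg='Operation failed! Reason: '+data.msg;
            }
            $.messager.show({
                title : 'Notice',
                msg : retMsg
            });
            if("true"==data.flag&&"true"==data.monitor){// open the monitoring page
                // in a separate tab
                layout_center_addTabFun({
                    title : 'MR Job Monitor',
                    closable : true,
                    // iconCls : node.iconCls,
                    href : 'cluster/monitor_one.jsp'
                });
            }
        },
        error : function(xhr, textStatus) {
            // the request itself failed (network or HTTP error): close the progress dialog as well
            closeProgressbar();
            $.messager.show({
                title : 'Notice',
                msg : 'Request failed: '+textStatus
            });
        }
    });
}

The back end returns JSON. For compatibility with cloud-platform job monitoring (to keep the function general), it also contains code that opens the monitoring page.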

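2. Deduplication

Select Deduplicate in the navigation bar to see the following page:

Click Deduplicate to submit the job to the cluster. This also opens the MR monitoring page:

Clicking the "Deduplicate" button starts a background thread: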

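/**
 * Submit the deduplication job
 */
public void deduplicate(){
    Map<String ,Object> map = new HashMap<String,Object>();
    try{
        HUtils.setJobStartTime(System.currentTimeMillis()-10000);
        HUtils.JOBNUM=1;
        new Thread(new Deduplicate(input,output)).start();
        map.put("flag", "true");
        map.put("monitor", "true");
    } catch (Exception e) {
        e.printStackTrace();
        map.put("flag", "false");
        map.put("monitor", "false");
        map.put("msg", e.getMessage());
    }
    Utils.write2PrintWriter(JSON.toJSONString(map));
}

The method works in three steps:
1. It sets the start time of the whole batch of jobs 10 s earlier than the current time, to tolerate a clock difference between machines. About 2 s also works, and if the Tomcat machine and the cluster machines have identical clocks no offset is needed.
2. It sets the total number of jobs.
3. It starts a new thread to run the MR job.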

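The monitoring page starts a timer that periodically asks the back end for job-monitoring information. The timer is cleared once all jobs have finished.

<script type="text/javascript">
// refresh automatically every 3 s
var monitor_cf_interval= setInterval("monitor_one_refresh()",3000);
</script>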

function monitor_one_refresh(){
    $.ajax({ // Ajax request
        url : 'cloud/cloud_monitorone.action',
        dataType : "json",
        success : function(data) {
            if (data.finished == 'error') {// error: the returned values are set to 0 (otherwise they are returned normally)
                clearInterval(monitor_cf_interval);
                setJobInfoValues(data);
                console.info("monitor,finished:"+data.finished);
                $.messager.show({
                    title : 'Notice',
                    msg : 'Job failed!'
                });
            } else if(data.finished == 'true'){
                // all jobs succeeded: stop the timer
                console.info('monitor,data.finished='+data.finished);
                setJobInfoValues(data);
                clearInterval(monitor_cf_interval);
                $.messager.show({
                    title : 'Notice',
                    msg : 'All jobs completed successfully!'
                });
            }else{
                // still running: update the page, showing the job info over several rows
                setJobInfoValues(data);
            }
        }
    });
}

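The back end obtains job-monitoring information as follows:

1) Call JobClient.getAllJobs() to get the status of all jobs;

2) Filter the jobs by the batch start time set earlier;

3) Sort the remaining jobs by start time and return them;

4) Compare the number of returned jobs with the expected number to decide whether to stop monitoring.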

/**
 * Monitor a single job
 * @throws IOException
 */
public void monitorone() throws IOException{
    Map<String ,Object> jsonMap = new HashMap<String,Object>();
    List<CurrentJobInfo> currJobList =null;
    try{
        currJobList= HUtils.getJobs();
        // jsonMap.put("rows", currJobList);// put all rows
        jsonMap.put("jobnums", HUtils.JOBNUM);
        // The jobs are finished only when the number of jobs found reaches JOBNUM and the last job has completed
        // true: all jobs finished
        // false: jobs still running
        // error: a job failed; stop monitoring
        // query the state of the last job once, so every check below sees the same value
        String lastState = currJobList.isEmpty() ? null
                : HUtils.hasFinished(currJobList.get(currJobList.size()-1));
        if(currJobList.size()>=HUtils.JOBNUM){// completion is only possible once JOBNUM jobs are listed
            if("success".equals(lastState)){
                jsonMap.put("finished", "true");
                // finished: reset the start time
                HUtils.setJobStartTime(System.currentTimeMillis());
            }else if("running".equals(lastState)){
                jsonMap.put("finished", "false");
            }else{// fail or kill: report error
                jsonMap.put("finished", "error");
                HUtils.setJobStartTime(System.currentTimeMillis());
            }
        }else if(currJobList.size()>0){
            if("fail".equals(lastState)||"kill".equals(lastState)){
                jsonMap.put("finished", "error");
                HUtils.setJobStartTime(System.currentTimeMillis());
            }else{
                jsonMap.put("finished", "false");
            }
        }
        if(currJobList.size()==0){
            jsonMap.put("finished", "false");
            // return ;
        }else{
            if(jsonMap.get("finished").equals("error")){
                CurrentJobInfo cj =currJobList.get(currJobList.size()-1);
                cj.setRunState("Error!");
                jsonMap.put("rows", cj);
            }else{
                jsonMap.put("rows", currJobList.get(currJobList.size()-1));
            }
        }
        jsonMap.put("currjob", currJobList.size());
    }catch(Exception e){
        e.printStackTrace();
        jsonMap.put("finished", "error");
        HUtils.setJobStartTime(System.currentTimeMillis());
    }
    System.out.println(new java.util.Date()+":"+JSON.toJSONString(jsonMap));
    Utils.write2PrintWriter(JSON.toJSONString(jsonMap));// send the data as JSON
    return ;
}

The code that fetches and filters all jobs:

/**
 * Selects jobs by start time and returns their status for monitoring. A job's start time and
 * System.currentTimeMillis() are both milliseconds since the epoch, so time zones do not matter;
 *
 * @return
 * @throws IOException
 */
public static List<CurrentJobInfo> getJobs() throws IOException {
    JobStatus[] jss = getJobClient().getAllJobs();
    List<CurrentJobInfo> jsList = new ArrayList<CurrentJobInfo>();
    for (JobStatus js : jss) {
        if (js.getStartTime() > jobStartTime) {
            jsList.add(new CurrentJobInfo(getJobClient().getJob(
                    js.getJobID()), js.getStartTime(), js.getRunState()));
        }
    }
    Collections.sort(jsList);
    return jsList;
}

The same monitoring also works when there are several jobs; only HUtils.JOBNUM needs to be set accordingly.
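Steps 1) to 4) can be tried without a cluster. In this minimal sketch a hypothetical `JobInfo` class stands in for `JobStatus`/`CurrentJobInfo`. The job state is assumed to be one of the four strings that `monitorone` compares against ("success", "running", "fail", "kill"). The sketch filters by start time, sorts, and derives the `finished` flag with the same rules as `monitorone`.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;

class JobMonitorSketch {
    /** Hypothetical stand-in for CurrentJobInfo: start time plus a state string. */
    static class JobInfo {
        final long startTime;
        final String state; // assumed: "success", "running", "fail" or "kill"

        JobInfo(long startTime, String state) {
            this.startTime = startTime;
            this.state = state;
        }
    }

    /** Steps 1-3: keep jobs started after jobStartTime, sorted by start time. */
    static List<JobInfo> filterAndSort(List<JobInfo> all, long jobStartTime) {
        List<JobInfo> out = new ArrayList<JobInfo>();
        for (JobInfo j : all) {
            if (j.startTime > jobStartTime) {
                out.add(j);
            }
        }
        Collections.sort(out, new Comparator<JobInfo>() {
            @Override
            public int compare(JobInfo a, JobInfo b) {
                return Long.compare(a.startTime, b.startTime);
            }
        });
        return out;
    }

    /** Step 4: "true" = all done, "false" = keep polling, "error" = stop with a failure. */
    static String finishedFlag(List<JobInfo> jobs, int jobNum) {
        if (jobs.isEmpty()) {
            return "false";
        }
        String last = jobs.get(jobs.size() - 1).state;
        if ("fail".equals(last) || "kill".equals(last)) {
            return "error";
        }
        if (jobs.size() >= jobNum && "success".equals(last)) {
            return "true";
        }
        return "false";
    }
}
```

Jobs from earlier runs are excluded only because they started before the recorded time. This is why the Tomcat and cluster clocks need to agree, or why the start time is moved back a few seconds.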

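3. File download

File download copies the filtered files to the local disk. The filtered files need to be imported into MySQL, hence the download feature.

File download uses the FileSystem.copyToLocalFile() instance method:

fs.copyToLocalFile(false, file.getPath(), new Path(dst, "hdfs_" + (i++) + HUtils.DOWNLOAD_EXTENSION), true);

4. Database import

Database import loads the deduplicated local files into MySQL.

Rows are inserted in batches. The files are parsed as plain strings rather than as XML. The cloud-side filtering removed the 1st, 2nd and last lines, so the XML is incomplete and an XML parser cannot read it. The files are therefore read line by line and parsed as strings.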

/**
 * Batch insert the data under xmlPath
 * @param xmlPath
 * @return
 */
public Map<String,Object> insertUserData(String xmlPath){
    Map<String,Object> map = new HashMap<String,Object>();
    try{
        baseDao.executeHql("delete UserData");
        // if(!Utils.changeDat2Xml(xmlPath)){
        //     map.put("flag", "false");
        //     map.put("msg", "Failed to convert the HDFS file to XML");
        //     return map;
        // }
        // List<String[]> strings= Utils.parseXmlFolder2StrArr(xmlPath);
        // --- no XML parsing: a custom string parser is used instead
        List<String[]> strings = Utils.parseDatFolder2StrArr(xmlPath);
        List<Object> uds = new ArrayList<Object>();
        for(String[] s:strings){
            uds.add(new UserData(s));
        }
        int ret =baseDao.saveBatch(uds);
        log.info("Batch-inserted {} records into the user table!",ret);
    }catch(Exception e){
        e.printStackTrace();
        map.put("flag", "false");
        map.put("msg", e.getMessage());
        return map;
    }
    map.put("flag", "true");
    return map;
}

public Integer saveBatch(List<Object> lists) {
    Session session = this.getCurrentSession();
    // org.hibernate.Transaction tx = session.beginTransaction();
    int i=0;
    try{
        for ( Object l:lists) {
            i++;
            session.save(l);
            if( i % 50 == 0 ) { // Same as the JDBC batch size
                //flush a batch of inserts and release memory:
                session.flush();
                session.clear();
                if(i%1000==0){
                    System.out.println(new java.util.Date()+": pre-inserted "+i+" records...");
                }
            }
        }
    }catch(Exception e){
        e.printStackTrace();
    }
    // tx.commit();
    // session.close();
    Utils.simpleLog("Number of records inserted: "+i);
    return i;
}
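`Utils.parseDatFolder2StrArr` is not shown. The minimal sketch below shows the string-based parsing described above. It assumes each user line has the form `<row Id="..." Reputation="..." ... />` with XML-escaped attribute values, so a value never contains a raw double quote. It extracts the attributes with a regular expression. How the result is mapped onto the `String[]` passed to `UserData` is not shown and is left out. `RowParserSketch` is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class RowParserSketch {
    // name="value" pairs; escaped values (&quot; etc.) never contain a raw double quote
    private static final Pattern ATTR = Pattern.compile("(\\w+)=\"([^\"]*)\"");

    /** Returns the attributes of a <row .../> line in document order, or null for any other line. */
    static Map<String, String> parseRow(String line) {
        String trimmed = line.trim();
        if (!trimmed.startsWith("<row")) {
            return null;
        }
        Map<String, String> attrs = new LinkedHashMap<String, String>();
        Matcher m = ATTR.matcher(trimmed);
        while (m.find()) {
            attrs.put(m.group(1), m.group(2));
        }
        return attrs;
    }
}
```

Because each row sits on its own line, this works even though the file no longer has its XML declaration and root element.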

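5. DB filtering to HDFS

User data in MySQL is filtered into HDFS with the following rule:

Rule: keep users with reputation > 15, upVotes > 0, downVotes > 0 and views > 0.

The selected users are then written with a SequenceFile writer. The distance calculation below takes a serialized file as input, so the data is written directly as a SequenceFile.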

private static boolean db2hdfs(List<Object> list, Path path) throws IOException {
    boolean flag =false;
    int recordNum=0;
    SequenceFile.Writer writer = null;
    Configuration conf = getConf();
    try {
        Option optPath = SequenceFile.Writer.file(path);
        Option optKey = SequenceFile.Writer.keyClass(IntWritable.class);
        Option optVal = SequenceFile.Writer.valueClass(DoubleArrIntWritable.class);
        writer = SequenceFile.createWriter(conf, optPath, optKey, optVal);
        DoubleArrIntWritable dVal = new DoubleArrIntWritable();
        IntWritable dKey = new IntWritable();
        for (Object user : list) {
            if(!checkUser(user)){
                continue; // does not satisfy the rule
            }
            dVal.setValue(getDoubleArr(user),-1);
            dKey.set(getIntVal(user));
            writer.append(dKey, dVal);// user id, <type, the user's valid vector>; classification later needs a uniform format, hence this reversed layout
            recordNum++;
        }
    } catch (IOException e) {
        Utils.simpleLog("db2HDFS failed, hdfs file:"+path.toString());
        e.printStackTrace();
        flag =false;
        throw e;
    } finally {
        IOUtils.closeStream(writer);
    }
    flag=true;
    Utils.simpleLog("db2HDFS done, hdfs file:"+path.toString()+", records:"+recordNum);
    return flag;
}

The number of generated files equals the number of files written to HDFS.
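`checkUser`, `getDoubleArr` and `getIntVal` are not shown. The minimal sketch below covers the filter rule and the 4-dimensional vector written as the SequenceFile value. It uses a hypothetical `UserVector` holder and assumes the vector order is reputation, upVotes, downVotes, views; that order is an assumption.

```java
/** Hypothetical sketch of the DB-to-HDFS filter rule and vector extraction. */
class UserFilterSketch {
    /** Hypothetical holder for the attributes used for clustering. */
    static class UserVector {
        final int id;
        final double reputation, upVotes, downVotes, views;

        UserVector(int id, double reputation, double upVotes, double downVotes, double views) {
            this.id = id;
            this.reputation = reputation;
            this.upVotes = upVotes;
            this.downVotes = downVotes;
            this.views = views;
        }
    }

    /** The rule: reputation > 15 and upVotes, downVotes, views all > 0. */
    static boolean checkUser(UserVector u) {
        return u.reputation > 15 && u.upVotes > 0 && u.downVotes > 0 && u.views > 0;
    }

    /** Vector stored as the SequenceFile value (assumed order). */
    static double[] toDoubleArr(UserVector u) {
        return new double[] {u.reputation, u.upVotes, u.downVotes, u.views};
    }
}
```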

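6. Distance calculation

Distance calculation computes the distance between every pair of users by iterating over the file in two nested loops, implemented with MR. Pairs in which the outer user ID is not smaller than the inner user ID are skipped, so there are N*(N-1)/2 outputs in total.

The Mapper's map function emits key-value pairs of type <DoubleWritable,<int,int>>, meaning <distance,<ID of user i, ID of user j>>, with the ID of user i smaller than the ID of user j: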

public void map(IntWritable key,DoubleArrIntWritable value,Context cxt)throws InterruptedException,IOException{
    cxt.getCounter(FilterCounter.MAP_COUNTER).increment(1L);
    if(cxt.getCounter(FilterCounter.MAP_COUNTER).getValue()%3000==0){
        log.info("Map processed {} records...",cxt.getCounter(FilterCounter.MAP_COUNTER).getValue());
        log.info("Map emitted {} records...",cxt.getCounter(FilterCounter.MAP_OUT_COUNTER).getValue());
    }
    Configuration conf = cxt.getConfiguration();
    SequenceFile.Reader reader = null;
    FileStatus[] fss=input.getFileSystem(conf).listStatus(input);
    for(FileStatus f:fss){
        // check the file name only; FileStatus.toString() also contains the directory path
        if(!f.getPath().getName().contains("part")){
            continue; // skip other files
        }
        try {
            reader = new SequenceFile.Reader(conf, Reader.file(f.getPath()),
                    Reader.bufferSize(4096), Reader.start(0));
            IntWritable dKey = (IntWritable) ReflectionUtils.newInstance(
                    reader.getKeyClass(), conf);
            DoubleArrIntWritable dVal = (DoubleArrIntWritable) ReflectionUtils.newInstance(
                    reader.getValueClass(), conf);
            while (reader.next(dKey, dVal)) {// read the file record by record
                // emit only when the current key is smaller than dKey, so each pair is output once
                if(key.get()<dKey.get()){
                    cxt.getCounter(FilterCounter.MAP_OUT_COUNTER).increment(1L);
                    double dis= HUtils.getDistance(value.getDoubleArr(), dVal.getDoubleArr());
                    newKey.set(dis);
                    newValue.setValue(key.get(), dKey.get());
                    cxt.write(newKey, newValue);
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            IOUtils.closeStream(reader);
        }
    }
}

The Reducer's reduce function writes every record out unchanged:

public void reduce(DoubleWritable key,Iterable<IntPairWritable> values,Context cxt)throws InterruptedException,IOException{
    for(IntPairWritable v:values){
        cxt.getCounter(FilterCounter.REDUCE_COUNTER).increment(1);
        cxt.write(key, v);
    }
}
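Outside Hadoop, the work of the mapper plus the shuffle amounts to the double loop below. This sketch assumes `HUtils.getDistance` is the Euclidean distance; its body is not shown. A pair is emitted only when the first ID is smaller, so N users yield N(N-1)/2 records. Sorting the result by distance mimics the ascending key order the reducer receives. Class names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class PairwiseDistanceSketch {
    /** One output record: <distance, <id_i, id_j>> with id_i < id_j. */
    static class Pair implements Comparable<Pair> {
        final double distance;
        final int first, second;

        Pair(double distance, int first, int second) {
            this.distance = distance;
            this.first = first;
            this.second = second;
        }

        @Override
        public int compareTo(Pair o) {
            return Double.compare(distance, o.distance); // ascending, like DoubleWritable keys
        }
    }

    static double euclidean(double[] a, double[] b) {
        double sum = 0;
        for (int k = 0; k < a.length; k++) {
            double d = a[k] - b[k];
            sum += d * d;
        }
        return Math.sqrt(sum);
    }

    static List<Pair> allPairs(int[] ids, double[][] vectors) {
        List<Pair> out = new ArrayList<Pair>();
        for (int i = 0; i < ids.length; i++) {     // outer loop: the record passed to map()
            for (int j = 0; j < ids.length; j++) { // inner loop: re-reading the SequenceFile
                if (ids[i] < ids[j]) {
                    out.add(new Pair(euclidean(vectors[i], vectors[j]), ids[i], ids[j]));
                }
            }
        }
        Collections.sort(out);
        return out;
    }
}
```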

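7. Best DC

The best DC is the parameter used by "Clustering algorithm" → "Run clustering"; see the paper Clustering by fast search and find of density peaks for details.

To find the best DC, all distances are sorted in ascending order and traversed in order, and the distance at roughly the first 2% of the records is taken. No extra sort is needed. The "Distance calculation" MR job already sorted the distances in ascending order through the map-to-reduce shuffle, so a plain traversal is enough. The sequence file is read directly: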

/**
 * Returns the threshold for a given percentage
 *
 * @param percent
 *            usually 1~2%
 * @param path distance output directory; HUtils.FILTER_CALDISTANCE is used when null
 * @param iNPUT_RECORDS2 total number of distance records
 * @return
 */
public static double findInitDC(double percent, String path,long iNPUT_RECORDS2) {
    Path input = null;
    if (path == null) {
        input = new Path(HUtils.getHDFSPath(HUtils.FILTER_CALDISTANCE
                + "/part-r-00000"));
    } else {
        input = new Path(HUtils.getHDFSPath(path + "/part-r-00000"));
    }
    Configuration conf = HUtils.getConf();
    SequenceFile.Reader reader = null;
    long counter = 0;
    long percent_ = (long) (percent * iNPUT_RECORDS2);
    try {
        reader = new SequenceFile.Reader(conf, Reader.file(input),
                Reader.bufferSize(4096), Reader.start(0));
        DoubleWritable dkey = (DoubleWritable) ReflectionUtils.newInstance(
                reader.getKeyClass(), conf);
        Writable dvalue = (Writable) ReflectionUtils.newInstance(
                reader.getValueClass(), conf);
        while (reader.next(dkey, dvalue)) {// read the file record by record
            counter++;
            if(counter%1000==0){
                Utils.simpleLog("Read "+counter+" records...");
            }
            if (counter >= percent_) {
                HUtils.DELTA_DC = dkey.get();// set the best DC threshold
                break;
            }
        }
    } catch (Exception e) {
        e.printStackTrace();
    } finally {
        IOUtils.closeStream(reader);
    }
    return HUtils.DELTA_DC;
}

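Note: experiments showed that with a distance threshold of 29.4, the cluster centers in the decision graph were not very distinct, so a threshold of 100 is used below.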
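Without the SequenceFile plumbing, `findInitDC` simply picks the distance at position percent × (number of distance records) in ascending order. This minimal in-memory sketch assumes `percent` is a fraction such as 0.02 and that the distances fit in an array. The index arithmetic mirrors the loop above, which stops at the record whose 1-based position reaches `(long) (percent * total)`. If that position lies past the end, the sketch clamps to the last distance; the original instead leaves the previous `DELTA_DC` unchanged. `DcSketch` is hypothetical.

```java
import java.util.Arrays;

class DcSketch {
    /** Distance at the given fraction of the ascending distance list, e.g. percent = 0.02 for 2%. */
    static double findDc(double[] distances, double percent) {
        if (distances.length == 0) {
            throw new IllegalArgumentException("no distances");
        }
        double[] sorted = distances.clone();
        Arrays.sort(sorted); // the MR output is already ascending; sorted here so the sketch stands alone
        long target = (long) (percent * sorted.length); // 1-based position, as in findInitDC
        int index = (int) Math.min(Math.max(target - 1, 0), sorted.length - 1);
        return sorted[index];
    }
}
```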

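4.3 Clustering Algorithm

1. Run clustering

Running the clustering involves three MR jobs: the local-density MR, the minimum-distance MR and the sort MR.

1) Local-density MR

The local-density computation takes the distance file computed earlier as input. Its MR data flow is:

/**
 * Find the local density of every point vector
 *
 * Input: <key,value> --> <distance,<id_i,id_j>>
 *        <distance,<index of vector i,index of vector j>>
 *
 * Mapper output:
 *   index of vector i,1
 *   index of vector j,1
 * Reducer output:
 *   index of vector i,local density
 * Some vectors have no local density; this happens when all of a vector's
 * distances to the other points are larger than the threshold dc
 * @author fansy
 * @date 2015-7-3
 */

The Mapper logic:

/**
 * Input: <distance d_ij,<index of vector i,index of vector j>>
 * If d_ij is smaller than the threshold dc, output
 *   index of vector i,1
 *   index of vector j,1
 * @author fansy
 * @date 2015-7-3
 */

The map function: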

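public void map(DoubleWritable key,IntPairWritable value,Context cxt)throws InterruptedException,IOException{
    double distance= key.get();
    if(method.equals("gaussian")){
        // Gaussian kernel: exp(-(d/dc)^2)
        one.set(Math.exp(-(distance/dc)*(distance/dc)));
    }
    if(distance<dc){
        vectorId.set(value.getFirst());
        cxt.write(vectorId, one);
        vectorId.set(value.getSecond());
        cxt.write(vectorId, one);
    }
}

The density can be computed in two ways, selected by a parameter passed in from the front end. The default is cut-off: every neighbour closer than dc adds 1 to the local density. With the Gaussian option, each such neighbour adds exp(-(d/dc)^2) instead.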
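The two per-neighbour contributions can be written as plain functions. This sketch mirrors the mapper above, including the fact that only pairs with d < dc are emitted. In Gaussian mode, neighbours at or beyond dc therefore contribute nothing, whereas the density-peaks paper sums the Gaussian term over all points. Names are hypothetical.

```java
class DensityKernelSketch {
    /** Cut-off kernel: a neighbour closer than dc contributes exactly 1. */
    static double cutOff(double d, double dc) {
        return d < dc ? 1.0 : 0.0;
    }

    /** Gaussian kernel: exp(-(d/dc)^2). */
    static double gaussian(double d, double dc) {
        double r = d / dc;
        return Math.exp(-r * r);
    }

    /** Local density of one point from its distances to all other points. */
    static double density(double[] distancesToOthers, double dc, boolean useGaussian) {
        double sum = 0;
        for (double d : distancesToOthers) {
            if (d >= dc) {
                continue; // like the mapper: pairs at or beyond dc are never emitted
            }
            sum += useGaussian ? gaussian(d, dc) : cutOff(d, dc);
        }
        return sum;
    }
}
```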

The reducer's reduce logic simply sums the local-density contributions of each point:

public void reduce(IntWritable key, Iterable<DoubleWritable> values,Context cxt)
        throws IOException,InterruptedException{
    double sum =0;
    for(DoubleWritable v:values){
        sum+=v.get();
    }
    sumAll.set(sum);
    cxt.write(key, sumAll);
    Utils.simpleLog("vectorI:"+key.get()+",density:"+sumAll);
}

2) Minimum-distance MR

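The minimum-distance MR logic:

/**
 * Find the delta distance of every point:
 * the minimum distance to any other vector with a higher local density
 * Mapper input:
 *   <distance d_ij,<index of vector i,index of vector j>>
 *   The LocalDensityJob output
 *     i,density_i
 *   is loaded into a map so the mapper can compare two local densities and decide whether to emit
 * Mapper output:
 *   i,<density_i,min_distance_j>
 *   IntWritable,DoublePairWritable
 * Reducer output:
 *   <density_i*min_distance_j> <density_i,min_distance_j,i>
 *   DoubleWritable, IntDoublePairWritable
 * @author fansy
 * @date 2015-7-3
 */

For each point (each user), the reducer outputs the product of its local density and minimum distance. One way to determine the number of cluster centers works as follows:
1. Sort these products in descending order.
2. Plot them as a line chart.
3. Find the point where the slope changes most; the number of points before it is the number of cluster centers.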
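One simple way to automate the "largest slope change" heuristic is to sort γ = ρ·δ in descending order and count the points before the largest drop between consecutive values. Treating the largest first difference as the "largest slope change" is an interpretation, not the project's code; the article chooses the count by looking at the chart. `CenterCountSketch` is hypothetical.

```java
import java.util.Arrays;

class CenterCountSketch {
    /** Number of top gamma values that come before the biggest drop in descending order. */
    static int countByLargestDrop(double[] gamma) {
        if (gamma.length < 2) {
            return gamma.length;
        }
        double[] g = gamma.clone();
        Arrays.sort(g); // ascending; descending position k corresponds to g[n - 1 - k]
        int n = g.length;
        int best = 1;
        double bestDrop = -1;
        for (int k = 1; k < n; k++) {
            double drop = g[n - k] - g[n - k - 1]; // gap between descending positions k-1 and k
            if (drop > bestDrop) {
                bestDrop = drop;
                best = k; // k values lie above this gap
            }
        }
        return best;
    }
}
```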

3) Sort MR

The sort MR sorts the density × minimum-distance products from 2). It relies on the sorting built into MapReduce: a custom Writable sorts by value in descending order.

package com.fz.fastcluster.keytype;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.classification.InterfaceAudience;
import org.apache.hadoop.classification.InterfaceStability;
import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.io.WritableComparator;

/**
 * Writable for double values: a custom DoubleWritable
 * whose sort order is reversed, so values are sorted from largest to smallest
 * @author fansy
 * @date 2015-7-3
 */
@InterfaceAudience.Public
@InterfaceStability.Stable
public class CustomDoubleWritable implements WritableComparable<CustomDoubleWritable> {

    private double value = 0.0;

    public CustomDoubleWritable() {
    }

    public CustomDoubleWritable(double value) {
        set(value);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        value = in.readDouble();
    }

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeDouble(value);
    }

    public void set(double value) { this.value = value; }

    public double get() { return value; }

    /**
     * Returns true iff <code>o</code> is a CustomDoubleWritable with the same value.
     */
    @Override
    public boolean equals(Object o) {
        if (!(o instanceof CustomDoubleWritable)) {
            return false;
        }
        CustomDoubleWritable other = (CustomDoubleWritable)o;
        return this.value == other.value;
    }

    @Override
    public int hashCode() {
        return (int)Double.doubleToLongBits(value);
    }

    @Override
    public int compareTo(CustomDoubleWritable o) {// the only change: larger values sort first
        return (value < o.value ? 1 : (value == o.value ? 0 : -1));
    }

    @Override
    public String toString() {
        return Double.toString(value);
    }

    /** A raw-bytes comparator for CustomDoubleWritable, also in descending order. */
    public static class Comparator extends WritableComparator {
        public Comparator() {
            super(CustomDoubleWritable.class);
        }

        @Override
        public int compare(byte[] b1, int s1, int l1,
                           byte[] b2, int s2, int l2) {
            double thisValue = readDouble(b1, s1);
            double thatValue = readDouble(b2, s2);
            return (thisValue < thatValue ? 1 : (thisValue == thatValue ? 0 : -1));
        }
    }

    static { // register this comparator
        WritableComparator.define(CustomDoubleWritable.class, new Comparator());
    }
}

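2. Draw the decision graph

The decision graph is drawn by parsing the output of the sort MR on the cluster and taking the first 500 records. These are the 500 points with the largest products of local density and minimum distance. Points further down are even less likely to be cluster centers, so only 500 are taken. If the sort MR was configured with more than one reducer, its output consists of several files, and the first 500 vectors of each file are taken.

Click Draw and then Show Decision Graph to see the decision graph:

Cluster centers should come from the upper-right corner, so the points with density greater than 50 and distance greater than 50 are chosen here. There are 3 such points. Adding the point with the highest local density, which is not drawn, gives 4 cluster center vectors.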
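Applying the thresholds to the sort MR output is a plain filter. This minimal sketch assumes each output file has already been read into a list of hypothetical `Record(id, density, delta)` objects in sorted order; `HUtils.getCentIds` itself is not shown. It takes the first k records of each file, as noted above for multiple reducers, and keeps those above both thresholds, for example 50 and 50.

```java
import java.util.ArrayList;
import java.util.List;

class CenterFilterSketch {
    /** Hypothetical record: user ID with its local density and minimum distance. */
    static class Record {
        final int id;
        final double density;
        final double delta;

        Record(int id, double density, double delta) {
            this.id = id;
            this.density = density;
            this.delta = delta;
        }
    }

    /** IDs among the first k records of each file whose density and delta exceed the thresholds. */
    static List<Integer> centerIds(List<List<Record>> sortedFiles, int k,
                                   double minDensity, double minDelta) {
        List<Integer> ids = new ArrayList<Integer>();
        for (List<Record> file : sortedFiles) {
            int limit = Math.min(k, file.size());
            for (int i = 0; i < limit; i++) { // only the top k of each reducer's output
                Record r = file.get(i);
                if (r.density > minDensity && r.delta > minDelta) {
                    ids.add(r.id);
                }
            }
        }
        return ids;
    }
}
```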

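3. Find cluster centers

Finding cluster centers means filtering the sort MR output with the density and distance thresholds read off the decision graph. The user IDs that pass are the IDs of the cluster center vectors. For each of these IDs, the corresponding valid vector (reputation, upVotes, downVotes, views) is looked up in the database and written to HDFS and to a local file. The HDFS copy serves as the centers for classification; the local copy makes later inspection easier.

The code: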

/**
 * Finds the cluster center vectors with the given thresholds and writes them to HDFS
 * Not an MR job, so no monitoring is needed; note the return value (a JSON response)
 */
public void center2hdfs(){
    // localfile:method
    // 1. Read the SortJob output and, among the first k records, collect the ids above both the local-density and minimum-distance thresholds;
    // 2. Look up the record for each id;
    // 3. Convert each record to double[];
    // 4. Write the vectors to HDFS;
    // 5. Write the vectors to a local file for later inspection
    Map<String,Object> retMap=new HashMap<String,Object>();
    Map<Object,Object> firstK =null;
    List<Integer> ids= null;
    List<UserData> users=null;
    try{
        firstK=HUtils.readSeq(input==null?HUtils.SORTOUTPUT+"/part-r-00000":input,
                100);// by default only the first 100 records are used
        ids=HUtils.getCentIds(firstK,numReducerDensity,numReducerDistance);
        // 2
        users = dBService.getTableData("UserData",ids);
        Utils.simpleLog("Number of cluster center vectors: "+users.size());
        // 3,4,5
        HUtils.writecenter2hdfs(users,method,output);
    }catch(Exception e){
        e.printStackTrace();
        retMap.put("flag", "false");
        retMap.put("msg", e.getMessage());
        Utils.write2PrintWriter(JSON.toJSONString(retMap));
        return ;
    }
    retMap.put("flag", "true");
    Utils.write2PrintWriter(JSON.toJSONString(retMap));
    return ;
}

The cluster centers written to HDFS and to the local file are shown below: