学习OpenCV——ORB & BRIEF(特征点篇)&Location

首先介绍什么是ORB:

(此部分转自http://www.cvchina.info/2011/07/04/whats-orb/)

ORB是是ORiented Brief的简称。ORB的描述在下面文章中:

Ethan Rublee and Vincent Rabaud and Kurt Konolige and Gary Bradski,ORB: an efficient alternative to SIFT or SURF, ICCV 2011

论文已经可以去google下载,OpenCV2.3中已经有了实现,WillowGarage有一个talk也提到了这个算法,因此我不揣浅陋,在这里总结一下。

Brief

Brief是Binary Robust Independent Elementary Features的缩写。这个特征描述子是由EPFL的Calonder在ECCV2010上提出的。主要思路就是在特征点附近随机选取若干点对,将这些点对的灰度值的大小,组合成一个二进制串,并将这个二进制串作为该特征点的特征描述子。详细算法描述参考如下论文:

Calonder M., Lepetit V., Strecha C., Fua P.: BRIEF: Binary Robust Independent Elementary Features. ECCV 2010

注意在BRIEF eccv2010的文章中,BRIEF描述子中的每一位是由随机选取的两个像素点做二进制比较得来的。文章同样提到,在此之前,需要选取合适的gaussian kernel对图像做平滑处理。(为什么要强调这一点,因为下述的ORB对此作了改进。)

BRIEF的优点在于速度,缺点也相当明显:

1:不具备旋转不变性。

2:对噪声敏感

3:不具备尺度不变性。

ORB就是试图解决上述缺点中的1和2.

如何解决旋转不变性:

在ORB的方案中,是采用了FAST作为特征点检测算子。FAST应用的很多了,是出名的快,以防有人不知道,请看这里:

在Sift的方案中,特征点的主方向是由梯度直方图的最大值和次大值所在的bin对应的方向决定的。略嫌耗时。

在ORB的方案中,特征点的主方向是通过矩(moment)计算而来,公式如下:

其中,零阶矩的计算如下:

一阶矩的计算如下:

有了主方向之后,就可以依据该主方向提取BRIEF描述子。但是由此带来的问题是,由于主方向会发生变化,随机点对的相关性会比较大,从而降低描述子的判别性。解决方案也很直接,采取贪婪的,穷举的方法,暴力找到相关性较低的随机点对。

如何解决对噪声敏感的问题:

在前面提到过,在最早的eccv2010的文章中,BRIEF使用的是pixel跟pixel的大小来构造描述子的每一个bit。这样的后果就是对噪声敏感。因此,在ORB的方案中,做了这样的改进,不再使用pixel-pair,而是使用9×9的patch-pair,也就是说,对比patch的像素值之和。(可以通过积分图快速计算)。

关于尺度不变性:

ORB没有试图解决尺度不变性,(因为FAST本身就不具有尺度不变性。)但是这样只求速度的特征描述子,一般都是应用在实时的视频处理中的,这样的话就可以通过跟踪还有一些启发式的策略来解决尺度不变性的问题。

关于计算速度:

ORB是sift的100倍,是surf的10倍。

关于性能:

下面是一个性能对比,ORB还是很给力。点击看大图。

参考Slides

代码:

ORB检测+透视变换定位

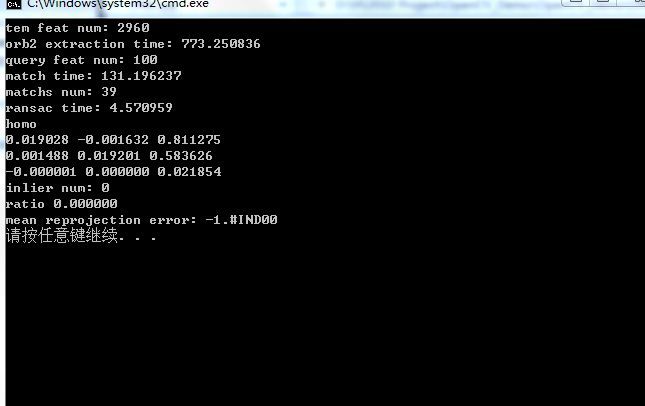

为了达到实时定位跟踪的效果,可以通过降低KeyPoints数量来减少检测和match时间(src.keypoint=30,img.keypoints=100时满足实时需求);

但是由于计算透视变换

//寻找两个矩阵的透视变换3*3矩阵☆☆☆☆☆☆☆☆☆☆☆☆;

homo1 = findHomography(pt1, pt2, CV_RANSAC, (5.0));

导致了花费时间过多,这一步可以改进(通过计算keypoint的平均坐标,或者拟合边缘,下一步可以试一试)

下面附上代码:

#include "opencv2/objdetect/objdetect.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/calib3d/calib3d.hpp"

#include "opencv2/imgproc/imgproc_c.h"

#include "opencv2/imgproc/imgproc.hpp"

#include <string>

#include <vector>

#include <iostream>

using namespace cv;

using namespace std;

char* image_filename2 = "D:/src.jpg";

char* image_filename1 = "D:/Demo1.jpg";

unsigned int hamdist(unsigned int x, unsigned int y)

{

unsigned int dist = 0, val = x ^ y;

// Count the number of set bits

while(val)

{

++dist;

val &= val - 1;

}

return dist;

}

unsigned int hamdist2(unsigned char* a, unsigned char* b, size_t size)

{

HammingLUT lut;

unsigned int result;

result = lut((a), (b), size);

return result;

}

void naive_nn_search(vector<KeyPoint>& keys1, Mat& descp1, vector<KeyPoint>& keys2, Mat& descp2, vector<DMatch>& matches)

{

for( int i = 0; i < (int)keys2.size(); i++)

{

unsigned int min_dist = INT_MAX;

int min_idx = -1;

//注意指针的用法:Mat.ptr(i)☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆☆

unsigned char* query_feat = descp2.ptr(i);

for( int j = 0; j < (int)keys1.size(); j++)

{

unsigned char* train_feat = descp1.ptr(j);

unsigned int dist = hamdist2(query_feat, train_feat, 32);

if(dist < min_dist)

{

min_dist = dist;

min_idx = j;

}

}

//if(min_dist <= (unsigned int)(second_dist * 0.8){

if(min_dist <= 50)

{

matches.push_back(DMatch(i, min_idx, 0, (float)min_dist));

}

}

}

void naive_nn_search2(vector<KeyPoint>& keys1, Mat& descp1, vector<KeyPoint>& keys2, Mat& descp2, vector<DMatch>& matches)

{

for( int i = 0; i < (int)keys2.size(); i++)

{

unsigned int min_dist = INT_MAX;

unsigned int sec_dist = INT_MAX;

int min_idx = -1, sec_idx = -1;

unsigned char* query_feat = descp2.ptr(i);

for( int j = 0; j < (int)keys1.size(); j++)

{

unsigned char* train_feat = descp1.ptr(j);

unsigned int dist = hamdist2(query_feat, train_feat, 32);

//最短距离

if(dist < min_dist)

{

sec_dist = min_dist;

sec_idx = min_idx;

min_dist = dist;

min_idx = j;

}

//次短距离

else if(dist < sec_dist)

{

sec_dist = dist; sec_idx = j;

}

}

if(min_dist <= (unsigned int)(sec_dist * 0.8) && min_dist <=50)

{

matches.push_back(DMatch(i, min_idx, 0, (float)min_dist));

}

}

}

int main(int argc, char* argv[])

{

Mat img1 = imread(image_filename1, 0);

Mat img2 = imread(image_filename2, 0);

//GaussianBlur(img1, img1, Size(5, 5), 0);

//GaussianBlur(img2, img2, Size(5, 5), 0);

ORB orb1(100, ORB::CommonParams(1.2, 1));

ORB orb2(10, ORB::CommonParams(1.2, 1));

vector<KeyPoint> keys1, keys2;

Mat descriptors1, descriptors2;

int64 st, et;

//提取ORB特征;

orb1(img1, Mat(), keys1, descriptors1, false);

printf("tem feat num: %d\n", keys1.size());

st = cvGetTickCount();

orb2(img2, Mat(), keys2, descriptors2, false);

et = cvGetTickCount();

printf("orb2 extraction time: %f\n", (et-st)/(double)cvGetTickFrequency()/1000.);

printf("query feat num: %d\n", keys2.size());

// find matches

vector<DMatch> matches;

st = cvGetTickCount();

for(int i = 0; i < 10; i++)

{

naive_nn_search2(keys1, descriptors1, keys2, descriptors2, matches);

}

et = cvGetTickCount();

printf("match time: %f\n", (et-st)/(double)cvGetTickFrequency()/1000.);

printf("matchs num: %d\n", matches.size());

Mat showImg;

drawMatches(img2, keys2, img1, keys1, matches, showImg, CV_RGB(0, 255, 0), CV_RGB(0, 0, 255));

string winName = "Matches";

namedWindow( winName, WINDOW_AUTOSIZE );

imshow( winName, showImg );

waitKey(0); vector<Point2f> pt1; vector<Point2f> pt2;

for(int i = 0; i < (int)matches.size(); i++)

{

pt1.push_back(Point2f(keys2[matches[i].queryIdx].pt.x, keys2[matches[i].queryIdx].pt.y));

pt2.push_back(Point2f(keys1[matches[i].trainIdx].pt.x, keys1[matches[i].trainIdx].pt.y));

}

Mat homo,homo1;

st = cvGetTickCount(); //寻找两个矩阵的透视变换3*3矩阵☆☆☆☆☆☆☆☆☆☆☆☆;

homo1 = findHomography(pt1, pt2, CV_RANSAC, (5.0));

homo1.convertTo(homo,CV_32F);

//normalize(homo,homo,1,0); printf("homo\n"

"%f %f %f\n"

"%f %f %f\n"

"%f %f %f\n",

homo.at<float>(0,0), homo.at<float>(0,1), homo.at<float>(0,2),

homo.at<float>(1,0), homo.at<float>(1,1), homo.at<float>(1,2),

homo.at<float>(2,0), homo.at<float>(2,1), homo.at<float>(2,2));

//目标图像顶点坐标☆☆☆☆☆☆☆☆☆☆☆☆

CvPoint src_corners[4] = {{0,0}, {img2.cols,0}, {img2.cols, img2.rows}, {0, img2.rows}};

CvPoint dst_corners[4];

double h[9];

h[0]=homo.at<float>(0,0);

h[1]=homo.at<float>(0,1);

h[2]=homo.at<float>(0,2);

h[3]=homo.at<float>(1,0);

h[4]=homo.at<float>(1,1);

h[5]=homo.at<float>(1,2);

h[6]=homo.at<float>(2,0);

h[7]=homo.at<float>(2,1);

h[8]=homo.at<float>(2,2);

size_t i;

//利用提取到的3*3透视变换矩阵,对目标图像的四个顶点的坐标进行透视变换

for( i = 0; i < 4; i++ )

{

double x = src_corners[i].x, y = src_corners[i].y;

double Z = 1./(h[6]*x + h[7]*y + h[8]);

double X = (h[0]*x + h[1]*y + h[2])*Z;

double Y = (h[3]*x + h[4]*y + h[5])*Z;

dst_corners[i] = cvPoint(cvRound(X), cvRound(Y));

}

Mat img=imread(image_filename1, 1);

//把变换后的坐标用直线连接,定位!

for( i = 0; i < 4; i++ )

{

CvPoint r1 = dst_corners[i%4];

CvPoint r2 = dst_corners[(i+1)%4];

line( img, cvPoint(r1.x, r1.y),

cvPoint(r2.x, r2.y ), Scalar(0,0,255),2 );

}

imshow( "location", img);

et = cvGetTickCount();

printf("ransac time: %fms\n", (et-st)/(double)cvGetTickFrequency()/1000.);

waitKey(0);

vector<Point2f> reproj;

reproj.resize(pt1.size());

//向量数组的透视变换;☆☆☆☆☆☆☆☆☆☆☆☆

perspectiveTransform(pt1, reproj, homo);

Mat diff;

diff = Mat(reproj) - Mat(pt2);

int inlier = 0;

double err_sum = 0;

for(int i = 0; i < diff.rows; i++)

{

uchar* ptr = diff.ptr(i);

float err = ptr[0]*ptr[0] + ptr[1]*ptr[1];

if(err < 25.f)

{

inlier++; err_sum += sqrt(err);

}

}

printf("inlier num: %d\n", inlier);

printf("ratio %f\n", inlier / (float)(diff.rows));

printf("mean reprojection error: %f\n", err_sum / inlier);

return 0;

}