iOS8 Core Image In Swift: More Complex Filters

iOS8 Core Image In Swift: Automatically Enhancing Images and Using Built-in Filters

iOS8 Core Image In Swift: More Complex Filters

iOS8 Core Image In Swift: Face Detection and Pixellation

iOS8 Core Image In Swift: Real-time Video Filters

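The previous article covered the basics of Core Image: it introduced CIImage, CIFilter and CIContext and showed simple uses of the basic filters. It hardly did anything more involved with filters, though; it just handed an inputImage to a CIFilter. Core Image actually allows much finer-grained control over filters, and we'll explore that in a new project. To do so, I created an empty workspace and added the project from the previous article to it. Build and run; if everything works, we can start creating the new project.

Use Add Files to in the lower-left corner of the workspace to add an existing project file (xx.xcodeproj):

| When adding a project to a workspace, remember to close the project being added first; otherwise the workspace won't recognize it. Also, the flow of this article differs from the previous one: there I gave the code up front, looked at the result, and then worked through it step by step, whereas this article won't present the code at the start. |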

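Changing filter parameter values dynamically

Create a new project from the Single View Application template and put a UIImageView on the view, with the same frame as before and the same ContentMode set to Aspect Fit. Turn off Auto Layout and Size Classes as before, then copy over the image used in the previous project; this project uses the same image.

With that groundwork done, go back to the VC, copy the showFiltersInConsole method over from the previous project, and call it in viewDidLoad. Before running, let's look at the categories Core Image offers; there are 127 filters in total, and we can't possibly use every one.

There are many categories, and we know from the previous article that a filter can belong to several categories at once. Beyond that, the categories themselves fall into two groups: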

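By effect:

- kCICategoryDistortionEffect Distortion effects, such as bump, twirl, hole

- kCICategoryGeometryAdjustment Geometry adjustment, such as affine transform, crop, perspective transform

- kCICategoryCompositeOperation Compositing, such as source over, minimum, source atop, color blend modes

- kCICategoryHalftoneEffect Halftone effects, such as screen, line screen, hatched

- kCICategoryColorAdjustment Color adjustment, such as gamma adjust, white point adjust, exposure

- kCICategoryColorEffect Color effects, such as hue adjust, posterize

- kCICategoryTransition Transitions between images, such as dissolve, disintegrate with mask, swipe

- kCICategoryTileEffect Tile effects, such as parallelogram, triangle

- kCICategoryGenerator Image generators, such as stripes, constant color, checkerboard

- kCICategoryGradient Gradients, such as axial, radial, Gaussian

- kCICategoryStylize Stylize, such as pixellate, crystallize

- kCICategorySharpen Sharpen, luminance

- kCICategoryBlur Blur, such as Gaussian blur, zoom blur, motion blur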

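By usage scenario:

- kCICategoryStillImage Can be used for still images

- kCICategoryVideo Can be used for video

- kCICategoryInterlaced Can be used for interlaced images

- kCICategoryNonSquarePixels Can be used for non-square pixels

- kCICategoryHighDynamicRange Can be used for HDR

| These technical terms are hard to translate; please let me know about any inaccuracies. |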

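The attributes of the CIHueAdjust filter's inputAngle parameter:

- Parameter type: NSNumber

- Default value: 0

- kCIAttributeIdentity: in most cases this value equals the default value, but the two mean different things. kCIAttributeIdentity is the value which, when applied to the parameter, makes that parameter have no effect at all on inputImage

- Maximum: π

- Minimum: -π

- Attribute type: angle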
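To make the identity value and the ±π range more concrete, here is a minimal sketch in plain Swift. It models hue adjustment as a simple rotation of an HSV hue angle, which is a simplifying assumption and not how Core Image implements CIHueAdjust; `rotateHue` is a hypothetical helper.

```swift
// Simplified model (an assumption): hue adjustment as a rotation of an
// HSV hue angle. This is not Core Image's actual implementation.

/// Rotates a hue given in degrees by an angle given in radians,
/// wrapping the result into 0..<360.
func rotateHue(_ hueDegrees: Double, byRadians angle: Double) -> Double {
    let shifted = hueDegrees + angle * 180.0 / Double.pi
    let wrapped = shifted.truncatingRemainder(dividingBy: 360.0)
    return wrapped < 0 ? wrapped + 360.0 : wrapped
}

let green = 120.0
let unchanged = rotateHue(green, byRadians: 0)               // identity value: no effect
let halfTurn = rotateHue(green, byRadians: Double.pi)        // maximum value
let halfTurnBack = rotateHue(green, byRadians: -Double.pi)   // minimum value
print(unchanged, halfTurn, halfTurnBack)
```

In this model the minimum and the maximum land on the same hue, so the range from -π to π covers one full turn of the color wheel, with the identity value 0 in the middle.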

class ViewController: UIViewController {

@IBOutlet var imageView: UIImageView!

@IBOutlet var slider: UISlider!

lazy var originalImage: UIImage = {

return UIImage(named: "Image")

}()

lazy var context: CIContext = {

return CIContext(options: nil)

}()

var filter: CIFilter!

......

Connect the UIImageView and UISlider outlets to the ones in the VC, then write the following in viewDidLoad:

override func viewDidLoad() {

super.viewDidLoad()

imageView.layer.shadowOpacity = 0.8

imageView.layer.shadowColor = UIColor.blackColor().CGColor

imageView.layer.shadowOffset = CGSize(width: 1, height: 1)

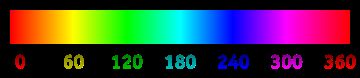

slider.maximumValue = Float(M_PI)

slider.minimumValue = Float(-M_PI)

slider.value = 0

slider.addTarget(self, action: "valueChanged", forControlEvents: UIControlEvents.ValueChanged)

let inputImage = CIImage(image: originalImage)

filter = CIFilter(name: "CIHueAdjust")

filter.setValue(inputImage, forKey: kCIInputImageKey)

slider.sendActionsForControlEvents(UIControlEvents.ValueChanged)

showFiltersInConsole()

}

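Next, initialize the slider. We saw earlier that the inputAngle parameter of the CIHueAdjust filter has a maximum of π, a minimum of -π and a default of 0, so we use those values, and then add a target-action that fires when the value changes.

Then initialize the filter. Since there is only one filter, the filter object can be reused; once inputImage is set, all that's left is to trigger the slider's event.

The implementation of valueChanged: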

@IBAction func valueChanged() {

filter.setValue(slider.value, forKey: kCIInputAngleKey)

let outputImage = filter.outputImage

let cgImage = context.createCGImage(outputImage, fromRect: outputImage.extent())

imageView.image = UIImage(CGImage: cgImage)

}

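Although I didn't connect the slider's valueChanged event to the VC method in the Storyboard, using @IBAction here is still appropriate: it signals that the method is triggered by a UI control rather than being business logic.

Build and run, and you should see the effect.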

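Compound filters: an old film effect

- Apply the CISepiaTone filter, which shifts the overall color toward sepia brown and gives a somewhat vintage look

- Create a random noise image, much like what an old TV shows when it has no signal, and use it to produce a white specks image

- Create another random noise image and use it to produce a dark scratches image, like a used sheet of black sandpaper

- Combine them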

Apply the CISepiaTone filter to the original image

- Set inputImage to the original image

- Set inputIntensity to 1.0

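Create the white specks filter

- For CIColorMatrix, set inputImage to the random noise image generated by CIRandomGenerator

- Set inputRVector, inputGVector and inputBVector to (0,1,0,0)

- Set inputBiasVector to (0,0,0,0)

- For CISourceOverCompositing, set inputImage to the image produced by the CISepiaTone filter

- Set inputBackgroundImage to the white specks image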
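What those vectors do to a single pixel can be sketched in plain Swift. This is a per-pixel model of the color matrix arithmetic (each output channel is a dot product of the input pixel with that channel's vector, plus the bias); `Vec4` and `applyColorMatrix` are hypothetical names, and the alpha vector (0,0,0,1) is assumed to be the filter's default since the steps above don't set it.

```swift
// Per-pixel model of a color matrix, in plain Swift (no Core Image needed).
// Vec4 and applyColorMatrix are hypothetical names used for illustration.
struct Vec4: Equatable {
    var x: Double, y: Double, z: Double, w: Double
}

/// Dot product of two 4-component vectors.
func dot(_ a: Vec4, _ b: Vec4) -> Double {
    return a.x * b.x + a.y * b.y + a.z * b.z + a.w * b.w
}

/// Each output channel is the dot product of the pixel with that
/// channel's vector; the bias is added afterwards.
func applyColorMatrix(to pixel: Vec4, r: Vec4, g: Vec4, b: Vec4, a: Vec4, bias: Vec4) -> Vec4 {
    return Vec4(x: dot(pixel, r) + bias.x,
                y: dot(pixel, g) + bias.y,
                z: dot(pixel, b) + bias.z,
                w: dot(pixel, a) + bias.w)
}

let greenOnly = Vec4(x: 0, y: 1, z: 0, w: 0)   // (0,1,0,0) from the steps above
let keepAlpha = Vec4(x: 0, y: 0, z: 0, w: 1)   // assumed default alpha vector
let zeroBias = Vec4(x: 0, y: 0, z: 0, w: 0)

// One pixel of random noise, stored as (r, g, b, a).
let noisePixel = Vec4(x: 0.9, y: 0.25, z: 0.6, w: 1.0)
let speck = applyColorMatrix(to: noisePixel, r: greenOnly, g: greenOnly,
                             b: greenOnly, a: keepAlpha, bias: zeroBias)
print(speck)
```

Red, green and blue all end up equal to the noise pixel's green value, so the colored noise becomes gray specks whose brightness follows the green channel.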

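Create the dark scratches filter

- For CIAffineTransform, set inputImage to the random noise image generated by CIRandomGenerator

- Set inputTransform to scale x by 1.5 and y by 25, which stretches and thickens the dots, although they are still colored

- For CIColorMatrix, set inputImage to the image produced by CIAffineTransform

- Set inputRVector to (4,0,0,0)

- Set inputGVector, inputBVector and inputAVector to (0,0,0,0)

- Set inputBiasVector to (0,1,1,1)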
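The arithmetic behind these values, together with the CIMinimumComponent step that follows in the code, can be sketched for one pixel in plain Swift. `scratchMatrix` and `minimumComponent` are hypothetical helpers that model the per-pixel math only; they are not Core Image calls.

```swift
// Hypothetical per-pixel model of the dark scratches steps.

/// R vector (4,0,0,0), zero G/B/A vectors, bias (0,1,1,1):
/// red is amplified four times, the other channels become a constant 1.
func scratchMatrix(red: Double) -> (r: Double, g: Double, b: Double, a: Double) {
    return (r: 4 * red, g: 1, b: 1, a: 1)
}

/// Model of a minimum-component filter: gray = min(r, g, b).
func minimumComponent(_ p: (r: Double, g: Double, b: Double, a: Double)) -> Double {
    return min(p.r, min(p.g, p.b))
}

let darkStreak = minimumComponent(scratchMatrix(red: 0.125))  // 0.5
let untouched = minimumComponent(scratchMatrix(red: 0.5))     // 1.0
print(darkStreak, untouched)
```

Only noise pixels whose red value is below 0.25 come out darker than white, which is why the result is a mostly white image with a few dark streaks. Before the minimum is taken, those pixels are cyan (low red, full green and blue).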

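Combine all the filters

- For CIMultiplyCompositing, set inputImage to the image produced by the CISourceOverCompositing filter (which contains the CISepiaTone and white specks effects)

- Set inputBackgroundImage to the image produced by the CIMinimumComponent filter (which contains the dark scratches effect)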
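Why multiplying is a good fit for this last step can be seen from a small per-channel model in plain Swift. `multiplyBlend` is a hypothetical helper that multiplies matching color channels and ignores alpha handling, so treat it as a sketch rather than the exact behavior of the filter.

```swift
// Hypothetical per-channel model of multiply blending (alpha is ignored).
func multiplyBlend(_ top: [Double], _ bottom: [Double]) -> [Double] {
    return zip(top, bottom).map { pair in pair.0 * pair.1 }
}

let sepiaPixel = [0.8, 0.6, 0.4]

// Where the scratches image is white (1.0), the picture is unchanged.
let clean = multiplyBlend(sepiaPixel, [1.0, 1.0, 1.0])

// Where it is darker, the picture is darkened by the same factor.
let scratched = multiplyBlend(sepiaPixel, [0.5, 0.5, 0.5])
print(clean, scratched)
```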

Here is the implementation of oldFilmEffect:

@IBAction func oldFilmEffect() {

let inputImage = CIImage(image: originalImage)

// 1. Create the CISepiaTone filter

let sepiaToneFilter = CIFilter(name: "CISepiaTone")

sepiaToneFilter.setValue(inputImage, forKey: kCIInputImageKey)

sepiaToneFilter.setValue(1, forKey: kCIInputIntensityKey)

// 2. Create the white specks filter

let whiteSpecksFilter = CIFilter(name: "CIColorMatrix")

whiteSpecksFilter.setValue(CIFilter(name: "CIRandomGenerator").outputImage.imageByCroppingToRect(inputImage.extent()), forKey: kCIInputImageKey)

whiteSpecksFilter.setValue(CIVector(x: 0, y: 1, z: 0, w: 0), forKey: "inputRVector")

whiteSpecksFilter.setValue(CIVector(x: 0, y: 1, z: 0, w: 0), forKey: "inputGVector")

whiteSpecksFilter.setValue(CIVector(x: 0, y: 1, z: 0, w: 0), forKey: "inputBVector")

whiteSpecksFilter.setValue(CIVector(x: 0, y: 0, z: 0, w: 0), forKey: "inputBiasVector")

// 3. Combine the CISepiaTone filter and the white specks filter using source over

let sourceOverCompositingFilter = CIFilter(name: "CISourceOverCompositing")

sourceOverCompositingFilter.setValue(whiteSpecksFilter.outputImage, forKey: kCIInputBackgroundImageKey)

sourceOverCompositingFilter.setValue(sepiaToneFilter.outputImage, forKey: kCIInputImageKey)

// --------- That completes the first half

// 4. Process the random noise image with the CIAffineTransform filter first

let affineTransformFilter = CIFilter(name: "CIAffineTransform")

affineTransformFilter.setValue(CIFilter(name: "CIRandomGenerator").outputImage.imageByCroppingToRect(inputImage.extent()), forKey: kCIInputImageKey)

affineTransformFilter.setValue(NSValue(CGAffineTransform: CGAffineTransformMakeScale(1.5, 25)), forKey: kCIInputTransformKey)

// 5. Create the cyan scratches filter

let darkScratchesFilter = CIFilter(name: "CIColorMatrix")

darkScratchesFilter.setValue(affineTransformFilter.outputImage, forKey: kCIInputImageKey)

darkScratchesFilter.setValue(CIVector(x: 4, y: 0, z: 0, w: 0), forKey: "inputRVector")

darkScratchesFilter.setValue(CIVector(x: 0, y: 0, z: 0, w: 0), forKey: "inputGVector")

darkScratchesFilter.setValue(CIVector(x: 0, y: 0, z: 0, w: 0), forKey: "inputBVector")

darkScratchesFilter.setValue(CIVector(x: 0, y: 0, z: 0, w: 0), forKey: "inputAVector")

darkScratchesFilter.setValue(CIVector(x: 0, y: 1, z: 1, w: 1), forKey: "inputBiasVector")

// 6. Use the CIMinimumComponent filter to turn the cyan scratches into dark scratches

let minimumComponentFilter = CIFilter(name: "CIMinimumComponent")

minimumComponentFilter.setValue(darkScratchesFilter.outputImage, forKey: kCIInputImageKey)

// --------- That is most of the work done

// 7. Finally, combine everything

let multiplyCompositingFilter = CIFilter(name: "CIMultiplyCompositing")

multiplyCompositingFilter.setValue(minimumComponentFilter.outputImage, forKey: kCIInputBackgroundImageKey)

multiplyCompositingFilter.setValue(sourceOverCompositingFilter.outputImage, forKey: kCIInputImageKey)

// 8. Output the result

let outputImage = multiplyCompositingFilter.outputImage

let cgImage = context.createCGImage(outputImage, fromRect: outputImage.extent())

imageView.image = UIImage(CGImage: cgImage)

}

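Subclassing CIFilter

- Create a new Cocoa Touch Class named CIColorInvert that inherits from CIFilter.

- Add an inputImage property of type CIImage, to be assigned from outside.

- Override the getter of the outputImage property. If you've written Objective-C, you may remember that a subclass can override a superclass property just by writing a getter or a setter on its own. Swift doesn't allow that: you must override the getter and the setter together (if the superclass property has a setter). In our example, outputImage is a getter-only property in CIFilter, so overriding the getter is enough.

- In outputImage, use the CIColorMatrix filter to adjust the image's color vectors.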
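The property-override rule can be tried out without Core Image at all. The sketch below uses two hypothetical classes, `BaseFilter` and `InvertFilter`, to show a subclass overriding a read-only computed property; they only mirror the shape of the CIFilter subclass and are not part of any framework.

```swift
// Hypothetical classes that mirror the shape of a CIFilter subclass.
class BaseFilter {
    // Read-only computed property, like a getter-only property in a superclass.
    var outputValue: Int { return 0 }
}

class InvertFilter: BaseFilter {
    // Input supplied from outside, like inputImage.
    var inputValue: Int = 0

    // The superclass property has no setter, so only the getter is overridden.
    override var outputValue: Int { return 255 - inputValue }
}

let invert = InvertFilter()
invert.inputValue = 55

// Access through the base type still reaches the overridden getter.
let asBase: BaseFilter = invert
print(asBase.outputValue)  // 200
```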

class CIColorInvert: CIFilter {

var inputImage: CIImage!

override var outputImage: CIImage! {

get {

return CIFilter(name: "CIColorMatrix", withInputParameters: [

kCIInputImageKey : inputImage,

"inputRVector" : CIVector(x: -1, y: 0, z: 0),

"inputGVector" : CIVector(x: 0, y: -1, z: 0),

"inputBVector" : CIVector(x: 0, y: 0, z: -1),

"inputBiasVector" : CIVector(x: 1, y: 1, z: 1),

]).outputImage

}

}

}

@IBAction func colorInvert() {

let colorInvertFilter = CIColorInvert()

colorInvertFilter.inputImage = CIImage(image: imageView.image)

let outputImage = colorInvertFilter.outputImage

let cgImage = context.createCGImage(outputImage, fromRect: outputImage.extent())

imageView.image = UIImage(CGImage: cgImage)

}

Simple background removal and replacement

......

lazy var originalImage: UIImage = {

return UIImage(named: "Image2")

}()

......

@IBAction func showOriginalImage() {

self.imageView.image = originalImage

}

- Remove the dark green

- Composite the images

Removing the dark green

struct CubeMap {

int length;

float dimension;

float *data;

};

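// Forward declaration: rgbToHSV is defined further down in this file.

void rgbToHSV(float *rgb, float *hsv);

struct CubeMap createCubeMap(float minHueAngle, float maxHueAngle) {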

const unsigned int size = 64;

struct CubeMap map;

map.length = size * size * size * sizeof (float) * 4;

map.dimension = size;

float *cubeData = (float *)malloc (map.length);

float rgb[3], hsv[3], *c = cubeData;

for (int z = 0; z < size; z++){

rgb[2] = ((double)z)/(size-1); // Blue value

for (int y = 0; y < size; y++){

rgb[1] = ((double)y)/(size-1); // Green value

for (int x = 0; x < size; x ++){

rgb[0] = ((double)x)/(size-1); // Red value

rgbToHSV(rgb,hsv);

// Use the hue value to determine which to make transparent

// The minimum and maximum hue angle depends on

// the color you want to remove

float alpha = (hsv[0] > minHueAngle && hsv[0] < maxHueAngle) ? 0.0f: 1.0f;

// Calculate premultiplied alpha values for the cube

c[0] = rgb[0] * alpha;

c[1] = rgb[1] * alpha;

c[2] = rgb[2] * alpha;

c[3] = alpha;

c += 4; // advance our pointer into memory for the next color value

}

}

}

map.data = cubeData;

return map;

}

void rgbToHSV(float *rgb, float *hsv) {

float min, max, delta;

float r = rgb[0], g = rgb[1], b = rgb[2];

float *h = hsv, *s = hsv + 1, *v = hsv + 2;

min = fmin(fmin(r, g), b );

max = fmax(fmax(r, g), b );

*v = max;

delta = max - min;

if( max != 0 )

*s = delta / max;

else {

*s = 0;

*h = -1;

return;

}

if( r == max )

*h = ( g - b ) / delta;

else if( g == max )

*h = 2 + ( b - r ) / delta;

else

*h = 4 + ( r - g ) / delta;

*h *= 60;

if( *h < 0 )

*h += 360;

}
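To check which colors a given hue range removes, the same hue formula can be written as a small Swift sketch. `hue(r:g:b:)` and `keyedAlpha` are hypothetical helpers; unlike the C function, this version also returns -1 for gray pixels (where delta is 0), which is a guard added here and not part of the original code.

```swift
/// Hue in degrees (0..<360) from RGB components in 0...1.
/// Returns -1 when the hue is undefined (black or gray).
func hue(r: Double, g: Double, b: Double) -> Double {
    let maxC = max(r, max(g, b))
    let minC = min(r, min(g, b))
    let delta = maxC - minC
    if maxC == 0 || delta == 0 { return -1 }  // gray guard added in this sketch

    var h: Double
    if r == maxC {
        h = (g - b) / delta          // between yellow and magenta
    } else if g == maxC {
        h = 2 + (b - r) / delta      // between cyan and yellow
    } else {
        h = 4 + (r - g) / delta      // between magenta and cyan
    }
    h *= 60
    return h < 0 ? h + 360 : h
}

/// Alpha for one cube entry: 0 inside the removed hue range, 1 elsewhere.
func keyedAlpha(hue: Double, minHue: Double, maxHue: Double) -> Double {
    return (hue > minHue && hue < maxHue) ? 0 : 1
}

let pureGreen = hue(r: 0, g: 1, b: 0)          // 120
let yellowGreen = hue(r: 0.75, g: 1, b: 0)     // 75
print(keyedAlpha(hue: yellowGreen, minHue: 60, maxHue: 90),
      keyedAlpha(hue: pureGreen, minHue: 60, maxHue: 90))
```

With a range of 60 to 90, a yellowish green at hue 75 becomes transparent while pure green at hue 120 stays opaque, so the range has to be chosen to match the actual background color of the photo.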

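CubeMap.c relies on the following standard headers (malloc comes from stdlib.h, and fmin and fmax come from math.h):

#include <stdio.h>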

#include <stdlib.h>

#include <math.h>

// ComplexFilters-Bridging-Header.h

// Use this file to import your target's public headers that you would like to expose to Swift.

//

#import "CubeMap.c"
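The size and layout of the data that createCubeMap fills in can be sketched in Swift as follows. `cubeByteCount` and `cubeOffset` are hypothetical helpers derived from the loop order in the C code (blue outermost, red innermost, four floats per entry); they are for illustration and are not used by the project.

```swift
/// Bytes needed for a cube with `size` steps per channel:
/// size^3 entries, each holding 4 Float values (r, g, b, a).
func cubeByteCount(size: Int) -> Int {
    return size * size * size * 4 * MemoryLayout<Float>.size
}

/// Index of the first Float of the entry for (red: x, green: y, blue: z).
/// Red varies fastest and blue slowest, matching the nested loops.
func cubeOffset(x: Int, y: Int, z: Int, size: Int) -> Int {
    return ((z * size + y) * size + x) * 4
}

let bytes = cubeByteCount(size: 64)
print(bytes)
print(cubeOffset(x: 1, y: 0, z: 0, size: 64),
      cubeOffset(x: 0, y: 1, z: 0, size: 64),
      cubeOffset(x: 0, y: 0, z: 1, size: 64))
```

For a dimension of 64 the table takes about 4 MB, and this byte count is the value stored in the length field of the struct.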

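Compositing the images

The replaceBackground method in the VC only needs to do three things:

- Create the cube map

- Create a CIColorCube filter that uses the cube map

- Use the CISourceOverCompositing filter to combine the processed image of the person with the unprocessed background image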
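How source over lets the new background show through the removed pixels can be modeled for a single pixel in plain Swift. The sketch assumes premultiplied colors, as stored in the cube, and the usual source-over formula result = source + destination × (1 − source alpha); `PremultipliedColor` and `sourceOver` are hypothetical names.

```swift
// Hypothetical per-pixel model of source-over compositing
// with premultiplied alpha.
struct PremultipliedColor: Equatable {
    var r: Double, g: Double, b: Double, a: Double
}

/// result = src + dst * (1 - src.a), applied to every channel.
func sourceOver(_ src: PremultipliedColor, _ dst: PremultipliedColor) -> PremultipliedColor {
    let k = 1 - src.a
    return PremultipliedColor(r: src.r + dst.r * k,
                              g: src.g + dst.g * k,
                              b: src.b + dst.b * k,
                              a: src.a + dst.a * k)
}

let background = PremultipliedColor(r: 0.2, g: 0.4, b: 0.6, a: 1)
let keyedOut = PremultipliedColor(r: 0, g: 0, b: 0, a: 0)    // removed by the cube
let person = PremultipliedColor(r: 0.9, g: 0.5, b: 0.1, a: 1)

print(sourceOver(keyedOut, background) == background)  // true: background shows through
print(sourceOver(person, background) == person)        // true: person stays on top
```

This is also why the cube multiplies each color by alpha: a fully transparent entry must contribute nothing to the sum.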

@IBAction func replaceBackground() {

let cubeMap = createCubeMap(60,90)

let data = NSData(bytesNoCopy: cubeMap.data, length: Int(cubeMap.length), freeWhenDone: true)

let colorCubeFilter = CIFilter(name: "CIColorCube")

colorCubeFilter.setValue(cubeMap.dimension, forKey: "inputCubeDimension")

colorCubeFilter.setValue(data, forKey: "inputCubeData")

colorCubeFilter.setValue(CIImage(image: imageView.image), forKey: kCIInputImageKey)

var outputImage = colorCubeFilter.outputImage

let sourceOverCompositingFilter = CIFilter(name: "CISourceOverCompositing")

sourceOverCompositingFilter.setValue(outputImage, forKey: kCIInputImageKey)

sourceOverCompositingFilter.setValue(CIImage(image: UIImage(named: "background")), forKey: kCIInputBackgroundImageKey)

outputImage = sourceOverCompositingFilter.outputImage

let cgImage = context.createCGImage(outputImage, fromRect: outputImage.extent())

imageView.image = UIImage(CGImage: cgImage)

}

GitHub download link

I'll keep the project updated on GitHub.

UPDATED:

References:

http://www.docin.com/p-387777241.html

https://developer.apple.com/library/mac/documentation/graphicsimaging/conceptual/CoreImaging/ci_intro/ci_intro.html

![iOS8 Core Image In Swift: More Complex Filters, image 1](http://img.e-com-net.com/image/info5/eb9d02bbb8c5446b8861b24f28c51679.jpg)

![iOS8 Core Image In Swift: More Complex Filters, image 2](http://img.e-com-net.com/image/info5/a890ea1d76bb4b2a95e6f77908239d21.jpg)

![iOS8 Core Image In Swift: More Complex Filters, image 3](http://img.e-com-net.com/image/info5/bd793f12fb2a4957978fe58382c8d9f5.jpg)

![iOS8 Core Image In Swift: More Complex Filters, image 4](http://img.e-com-net.com/image/info5/e955fbb0686747c5a1bc6dd046c63d2b.jpg)

![iOS8 Core Image In Swift: More Complex Filters, image 5](http://img.e-com-net.com/image/info5/e81c8462d59c4f41aa6cc015b5a1837b.jpg)

![iOS8 Core Image In Swift: More Complex Filters, image 6](http://img.e-com-net.com/image/info5/184bbc16cc0c4b1ab96de8e72c9a1ef6.jpg)

![iOS8 Core Image In Swift: More Complex Filters, image 7](http://img.e-com-net.com/image/info5/130bfeb2d08f4b9783d910c6a1b621de.jpg)

![iOS8 Core Image In Swift: More Complex Filters, image 8](http://img.e-com-net.com/image/info5/b3e13e04d3a346dd812f9b8379d6f3cd.jpg)

![iOS8 Core Image In Swift: More Complex Filters, image 9](http://img.e-com-net.com/image/info5/921b2d66a43c4a2f9fe63bef8c43efcb.jpg)

![iOS8 Core Image In Swift: More Complex Filters, image 10](http://img.e-com-net.com/image/info5/635aaa82c1fe4d15b82625fcce1298a0.jpg)

![iOS8 Core Image In Swift: More Complex Filters, image 11](http://img.e-com-net.com/image/info5/444e5935d71b43bbb6a46f3c813c3726.jpg)