Encoding video with libx264 on iOS

Video is captured with AVCaptureSession. The video data output is configured as follows:

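```objc
AVCaptureVideoDataOutput *newVideoOutput = [[AVCaptureVideoDataOutput alloc] init];
[newVideoOutput setAlwaysDiscardsLateVideoFrames:YES];

// Request NV12 (bi-planar 4:2:0, video range); the encoder code below relies on this layout
NSDictionary *settings = [[NSDictionary alloc] initWithObjectsAndKeys:
                          [NSNumber numberWithUnsignedInt:kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange],
                          // [NSNumber numberWithInt:kCVPixelFormatType_32BGRA],
                          kCVPixelBufferPixelFormatTypeKey,
                          nil];
[newVideoOutput setVideoSettings:settings]; // the settings only take effect once applied to the output
[settings release];                         // manual reference counting, as elsewhere in this project
```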

The frame buffers are obtained in the delegate callback. Before that, x264 has to be built; I followed http://blog.csdn.net/ixfly/article/details/7621258.

First download the x264 source from http://www.videolan.org/developers/x264.html and unpack it. In my case this produced a folder named x264-snapshot-20130604-2245.

Open a shell, change into the x264 directory, and run:

```shell
CC=/Applications/Xcode.app/Contents/Developer/Platforms/iPhoneOS.platform/Developer/usr/bin/gcc \
./configure --host=arm-apple-darwin \
  --sysroot=/Applications/Xcode.app/Contents/Developer/Platforms/iPhoneOS.platform/Developer/SDKs/iPhoneOS6.1.sdk \
  --prefix='dist' \
  --extra-cflags='-arch armv7s' \
  --extra-ldflags='-arch armv7s -L/Applications/Xcode.app/Contents/Developer/Platforms/iPhoneOS.platform/Developer/SDKs/iPhoneOS6.1.sdk/usr/lib/system' \
  --enable-pic
```

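Then run `make && make install`, and libx264.a is built. With `--prefix='dist'` it is installed under `dist/lib`.

The compiler path and SDK version (iPhoneOS6.1.sdk) in the command above match my Xcode installation at the time, so adjust them to yours. The command builds for armv7s only.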

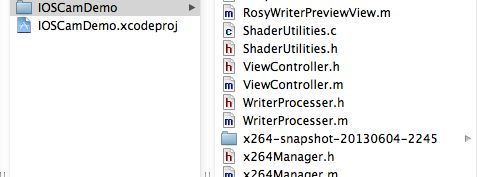

Add libx264.a to the Xcode project.

Copy the x264 source directory into the project folder by hand, so that it travels with the project from now on.

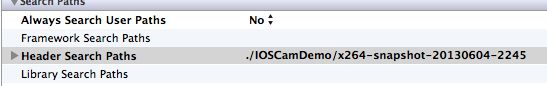

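In Xcode, under Build Settings, set Header Search Paths to a relative path pointing at the directory you just copied, e.g. `$(SRCROOT)/x264-snapshot-20130604-2245` if you kept the original folder name.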

x264 is now ready to use.

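Include the following three headers. The public x264 API is declared in x264.h alone. common/common.h and encoder/set.h are internal headers from the x264 source tree, which is why the source directory has to be copied into the project.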

```objc
#include "x264.h"
#include "common/common.h"
#include "encoder/set.h"
```

I wrote a class that manages x264. The code is below.

Header file (`x264Manager.h`):

```objc
#import <Foundation/Foundation.h>
#import <CoreMedia/CoreMedia.h>

#include "x264.h"
#include "common/common.h"
#include "encoder/set.h"

@interface x264Manager : NSObject{
    x264_param_t * p264Param;
    x264_picture_t * p264Pic;
    x264_t *p264Handle;
    x264_nal_t *p264Nal;
    int previous_nal_size;
    unsigned char * pNal;
    FILE *fp;
    unsigned char szBodyBuffer[1024*32];
}

- (void)initForX264;      // initialize x264
- (void)initForFilePath;  // initialize the path where the encoded file is saved
- (void)encoderToH264:(CMSampleBufferRef)sampleBuffer; // encode a CMSampleBufferRef frame to H.264 and write it to the file

@end
```

Implementation file (`x264Manager.m`):

```objc
//
//  x264Manager.m
//  IOSCamDemo
//
//  Created by huangyuling on 6/5/13.
//  Copyright (c) 2013 hyl. All rights reserved.
//

#import "x264Manager.h"

@implementation x264Manager

- (void)initForX264{
    p264Param = malloc(sizeof(x264_param_t));
    p264Pic = malloc(sizeof(x264_picture_t));
    memset(p264Pic, 0, sizeof(x264_picture_t));

    //x264_param_default(p264Param); //set default param
    x264_param_default_preset(p264Param, "veryfast", "zerolatency");

    p264Param->i_threads = 1;
    p264Param->i_width = 352;                // frame width, must match the capture size
    p264Param->i_height = 288;               // frame height, must match the capture size
    p264Param->b_cabac = 0;
    p264Param->i_bframe = 0;
    p264Param->b_interlaced = 0;
    p264Param->rc.i_rc_method = X264_RC_ABR; // X264_RC_CQP
    p264Param->i_level_idc = 21;
    p264Param->rc.i_bitrate = 128;           // kbit/s
    p264Param->b_intra_refresh = 1;
    p264Param->b_annexb = 1;                 // start code before every NAL, i.e. a raw .h264 stream
    p264Param->i_keyint_max = 25;
    p264Param->i_fps_num = 15;
    p264Param->i_fps_den = 1;
    // p264Param->i_csp = X264_CSP_I420;

    x264_param_apply_profile(p264Param, "baseline");

    if ((p264Handle = x264_encoder_open(p264Param)) == NULL) {
        fprintf(stderr, "x264_encoder_open failed\n");
        return;
    }

    x264_picture_alloc(p264Pic, X264_CSP_I420, p264Param->i_width, p264Param->i_height);
    p264Pic->i_type = X264_TYPE_AUTO;
}

- (void)initForFilePath{
    char *path = [self GetFilePathByfileName:"IOSCamDemo.h264"];
    NSLog(@"%s", path);
    fp = fopen(path, "wb");
    if (fp == NULL)
        NSLog(@"fopen failed: %s", path);
    free(path); // GetFilePathByfileName: returns malloc'd memory
}

// Returns a malloc'd C string with the full path inside Documents; the caller must free() it.
- (char*)GetFilePathByfileName:(char*)filename
{
    NSArray *paths = NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES);
    NSString *documentsDirectory = [paths objectAtIndex:0];
    NSString *strName = [NSString stringWithUTF8String:filename];
    NSString *writablePath = [documentsDirectory stringByAppendingPathComponent:strName];
    // -length counts UTF-16 units, not bytes, so it cannot size a C buffer;
    // fileSystemRepresentation returns the correctly encoded bytes for fopen().
    return strdup([writablePath fileSystemRepresentation]);
}

- (void)encoderToH264:(CMSampleBufferRef)sampleBuffer{
    if (p264Handle == NULL || fp == NULL)
        return; // initForX264 / initForFilePath not called, or they failed

    CVImageBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    int width  = p264Param->i_width;
    int height = p264Param->i_height;
    if ((int)CVPixelBufferGetWidth(pixelBuffer) != width ||
        (int)CVPixelBufferGetHeight(pixelBuffer) != height)
        return; // frame size differs from the encoder settings

    CVPixelBufferLockBaseAddress(pixelBuffer, 0);

    // NV12 source: plane 0 = Y, plane 1 = interleaved CbCr. Rows may be padded,
    // so step through each plane with its own bytes-per-row.
    uint8_t *srcY  = (uint8_t *)CVPixelBufferGetBaseAddressOfPlane(pixelBuffer, 0);
    uint8_t *srcUV = (uint8_t *)CVPixelBufferGetBaseAddressOfPlane(pixelBuffer, 1);
    size_t strideY  = CVPixelBufferGetBytesPerRowOfPlane(pixelBuffer, 0);
    size_t strideUV = CVPixelBufferGetBytesPerRowOfPlane(pixelBuffer, 1);

    // Copy luma row by row into the I420 picture
    for (int row = 0; row < height; row++)
        memcpy(p264Pic->img.plane[0] + row * p264Pic->img.i_stride[0],
               srcY + row * strideY, width);

    // De-interleave CbCr into the separate U and V planes
    for (int row = 0; row < height / 2; row++) {
        uint8_t *uv = srcUV + row * strideUV;
        uint8_t *u  = p264Pic->img.plane[1] + row * p264Pic->img.i_stride[1];
        uint8_t *v  = p264Pic->img.plane[2] + row * p264Pic->img.i_stride[2];
        for (int col = 0; col < width / 2; col++) {
            u[col] = uv[2 * col];
            v[col] = uv[2 * col + 1];
        }
    }

    CVPixelBufferUnlockBaseAddress(pixelBuffer, 0);

    int i264Nal = 0;
    x264_picture_t pic_out;
    int frameSize = x264_encoder_encode(p264Handle, &p264Nal, &i264Nal, p264Pic, &pic_out);
    p264Pic->i_pts++; // give every frame a strictly increasing timestamp
    if (frameSize < 0) {
        fprintf(stderr, "x264_encoder_encode failed\n");
        return;
    }

    // Each p_payload already begins with its Annex B start code and points into
    // x264's own buffer (valid until the next encode call), so write it directly;
    // no intermediate copy or resizing of the encoder's internal buffer is needed.
    for (int i = 0; i < i264Nal; i++)
        fwrite(p264Nal[i].p_payload, 1, p264Nal[i].i_payload, fp);
}

- (void)dealloc{
    if (p264Handle)
        x264_encoder_close(p264Handle);
    if (p264Pic) {
        x264_picture_clean(p264Pic);
        free(p264Pic);
    }
    free(p264Param);
    if (fp)
        fclose(fp); // flushes buffered H.264 data to disk
    [super dealloc]; // manual reference counting
}

@end
```

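Note that initForX264 configures x264 for 352x288. This must match the size of the frames the capture output delivers. That size is set by the capture session preset (for example AVCaptureSessionPreset352x288), not by the videoSettings shown at the start. You can choose other dimensions, as long as the session preset and the x264 settings agree.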

Next, initialize the output file path. Where you do this is up to you; in my program it is:

```objc
[self.manager264 initForFilePath];
```

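The frames arrive in the AVCaptureVideoDataOutputSampleBufferDelegate callback, and that is where we call the encoding method above. Remember to initialize x264 before encoding; I do it right at startup: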

```objc
x264Manager *m264 = [[x264Manager alloc] init];
[m264 initForX264];
self.manager264 = m264;
[m264 release]; // manual reference counting
```

Here is the delegate method:

```objc
#pragma mark - AVCaptureVideo(Audio)DataOutputSampleBufferDelegate method

- (void)captureOutput:(AVCaptureOutput *)captureOutput didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection{
    // Only video frames go to the encoder; audio samples also arrive here
    // when an audio output shares this delegate.
    if (captureOutput == self.captureManager.videOutput) {
        [self.manager264 encoderToH264:sampleBuffer];
    }
}
```