Top 10 Performance Improvements in IIS 7.0

Ref: http://technet.microsoft.com/en-us/magazine/cc745952.aspx

Mike Volodarsky

At a Glance:

- Minimize your application footprint

- Reduce bandwidth costs

- Use enhanced caching capabilities

When I meet with companies planning to adopt IIS 7.0, one question that inevitably comes up is whether moving to IIS 7.0 will improve the performance of servers or applications. The answer is generally yes, but don't be surprised if you see no performance improvements when you first migrate your applications to IIS 7.0.

To understand this, you need to understand the nature of the latest release. IIS 6.0 was an engineering release aimed at accomplishing three things: stronger security, higher reliability, and improved performance. By contrast, IIS 7.0 is a platform release. Its purpose was to convert the high-quality base Web server core of its predecessor to a modular and extensible platform with support for key modern deployment and management scenarios.

Many of the architectural changes and new features may actually have a negative impact on the performance of the Web server—considering, for example, that one of the key changes broke out tightly optimized Web server code paths into standalone modules that get no special treatment from the Web server. However, thanks to a lot of performance work done by the IIS team, IIS 7.0 remains at performance parity with its predecessor and exceeds it in certain areas. As I can personally tell you from working on the Web server core and the Integrated pipeline, achieving this required a lot of ingenuity in design and hard work in the implementation of the product.

Nonetheless, IIS 7.0 has a much higher potential to deliver significant performance improvements and reduce performance-related costs for your server farm than any other IIS release in the past.

You are not necessarily going to see these benefits simply by migrating to IIS 7.0, but some environments will. For example, Microsoft.com saw a 10 percent improvement in CPU efficiency (you can find the complete analysis on the Microsoft.com Team Blog at go.microsoft.com/fwlink/?LinkId=122497). You may also notice pronounced improvements in specific areas, including secure sockets layer (SSL) and Windows® authentication (both now performed in the kernel), and better vertical scalability on multicore and multiprocessor machines.

Still, the real IIS 7.0 horsepower doesn't come from incremental performance improvements to existing features. Rather, the improvements come from new capabilities that you must actively make use of. These capabilities are often rooted in the flexibility and the extensible nature of the platform and in new performance features. In this article, I will show you 10 of the most important sources of the performance improvements you can unlock by moving to IIS 7.0.

Leaner Web Servers

The ability to deploy lean IIS 7.0 servers draws from the modular architecture of the server. Practically all of its Web server features are implemented as modular components that can be added or removed individually. Thus you can remove any server features that are not necessary for an application's operation, resulting in noteworthy benefits including a significantly reduced attack surface area, a smaller operating footprint, and improved performance.

The full IIS 7.0 Web server feature set contains 44 modules, including native IIS modules and ASP.NET modules that provide services for the Integrated pipeline. These modules deliver such services as authentication (Windows Authentication and Digest Authentication modules), application framework support (FastCGI module), application services (Session State module), security (Request Filtering module), and performance (Output Cache module). Yet a minimal Web server that simply needs to serve static content can be functional with just 2 modules!

The overhead from unnecessary modules can be fairly significant—for instance, in the case of request logging and failed request tracing modules. Removing such modules in environments where they are not required can result in higher total throughput, and faster responses that lower the time to first byte (TTFB) and time to last byte (TTLB) metrics for the server workload.

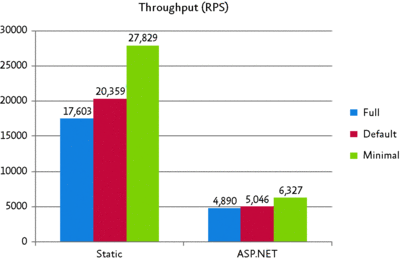

Figure 1 shows the results of a simple throughput test of an HTML file (static workload) and a hello world ASP.NET page (ASP.NET workload) when configured with the full set of features, the default set of features for that workload, and the absolutely minimal set of features needed for the workload. Even though most nonessential features are already disabled in the Full configuration, removing them completely in the Minimal configuration results in a considerable increase in throughput for both the static workload and the ASP.NET workload.

Figure 1

Throughput rates of the static workload and the ASP.NET workload in three different configurations with 100 concurrent clients

In addition, you may see improvements in the memory footprint and higher site density, especially in shared hosting environments or cases where a large number of worker processes are being used. This is typically gained by loading fewer DLLs into each process and eliminating any memory allocations occurring during module initialization and request processing.

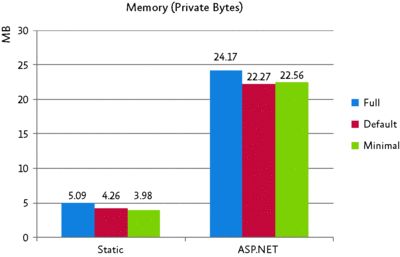

Figure 2 shows the memory usage (private bytes of the IIS worker process) in the same throughput test. While the improvements are not as pronounced in this example, the increases are in line with expectations, as the ASP.NET appdomain overhead is relatively higher than the baseline savings provided by the removed modules.

Figure 2

Memory usage (private bytes) of the static and ASP.NET workloads in three different configurations with 100 concurrent clients

IIS 7.0 provides additional control over which modules are enabled at the application level, as well as control over module usage based on workload through module pre-conditions. While this requires advanced configuration, it can provide additional benefits in multi-site environments or for apps that support multiple distinct workloads.

A word of caution: determining the functionality you require can be tricky. You must consider not only the minimal functionality needed to support your application framework, but also any of the additional features on which your application may indirectly rely. For example, your application may depend on specific authentication methods or rely on authorization semantics provided by various IIS features, in which case removing those may adversely impact the security of your application. Likewise, your application may rely on some of the modules for functional or performance effects, in which case removing them may result in incorrect behavior or performance loss.

Build on a Lean OS

Windows Server® 2008 also provides OS-level componentization that you can use to further reduce the surface area of your Web server. Server Core is a minimal installation option for the Windows Server 2008 operating system and contains a minimal set of core OS subsystems. Server Core is an ideal environment for lean IIS 7.0 Web servers.

But when evaluating Server Core, be aware that some limitations may impact your application. Server Core does not provide Microsoft® .NET Framework support, which means no ASP.NET, no .NET extensibility for IIS, and no IIS Manager. Also, local management tasks will require command-line tools since there is no Microsoft Management Console (MMC). From a performance perspective, if you are already properly taking advantage of IIS componentization, you may not see a difference in memory footprint or throughput of your application workload running on Server Core versus a full Windows Server implementation. This is because the work performed by IIS and your application is the same on both platforms. However, there is one place where you'll see a difference: the overall footprint of the server, both in terms of disk space and memory usage.

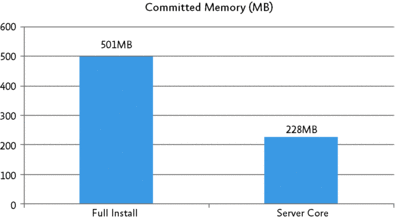

As an example, Figure 3 shows the difference in footprint after performing the static workload test on a full Windows Server 2008 configuration and on Server Core. While the IIS footprint is virtually identical in both cases, the lower overall OS footprint of Server Core allows the workload to be supported with significantly less memory. The lower footprint may actually allow you to deploy your application workload on less-powerful hardware, focusing on the processing demands of the application and not the operating environment. Of course, this makes Server Core an excellent option for virtualized environments.

Figure 3

Memory footprint of full Windows Server 2008 versus Server Core after performing the static workload test

Specialized Application Topologies

When optimizing for application workloads, you can separate the workload into distinct parts. For example, instead of running your application on a farm of 30 identical Web servers, you can run it on 10 Web servers, 5 application servers, and 3 proxy servers that perform caching and compression.

There are several reasons why this specialization works. First, the performance of many application workloads depends heavily on a variety of shared resources, such as memory, which may be contested between multiple parts of the application. The competition for these resources can create bottlenecks that prevent each server from being fully utilized across other resources. Separating parts of the application gives the parts ready access to the otherwise contested resources, resulting in higher efficiencies on the same set of hardware resources.

Second, specialization may enable the application to achieve greater cache locality, thereby improving performance. This includes low-level caches, such as the virtual memory Translation Lookaside Buffer (TLB), the file system cache, and other operating system and application caches. Another source of locality gains comes from the CPU. By reducing the number of parallel activities that are taking place by focusing only on a specific part of the application, the application can reduce the number of context switches and maximize the work performed by the CPU.

Third, specialization enables workload-specific optimization, making each part of the application work more efficiently. Many of these optimizations are not possible when the entire application is supported on the same server due to conflicting needs of different parts of the application.

One common place where this occurs is the IIS and ASP.NET threading configuration, which significantly affects concurrency and indirectly affects memory usage, latency, and throughput for many applications. The IIS static file workload is highly asynchronous and requires a high concurrent request limit, but it thrives on a very low number of available threads. On the other hand, many of the ASP.NET features are synchronous and long-blocking and require a high thread count, while still others require a lower concurrency limit to reduce the impact on memory. By separating the static workload and breaking out parts of the request-processing pipeline into a separate process or server, you can optimize the concurrency of each individual workload.

Finally, specialization can enable greater overall scalability by allowing the application to scale out discrete workloads or application parts independent of one another. This can enable the application topology to deliver increased capacity and redundancy where it is most needed, rather than requiring you to apply the same template to the entire application. In this model, the specialized resources may be physical servers, virtual servers, or even application pools on the same machine.

When evaluating the potential benefits of specialization in your application topology, start by finding the processing or resource-intensive bottlenecks in your application. These areas should be the first candidates for specialization because they may provide a significant potential for optimization when isolated, and because they will demand the highest horizontal scale-out. Then, evaluate whether isolating them enables the rest of the application to utilize resources more effectively. Finally, you will need to evaluate the overhead from the connectivity mechanism needed to bring the isolated application components together.

Improved Application Support

IIS 7.0 comes with extended support for application frameworks through FastCGI, an open protocol that is supported by many open-source application frameworks that otherwise may not support stable and high-performance native integration with IIS. Unlike the CGI (Common Gateway Interface) protocol, which has been supported by IIS for a long time, FastCGI provides much improved performance on the Windows platform. This is primarily due to the FastCGI reusable process architecture, which eliminates the heavy per-request process creation overhead, enabling clients to take advantage of persistent keep-alive connections.

If you are supporting application frameworks on IIS using CGI or another mechanism, you might achieve improved performance (and in some cases, stability) by moving to the FastCGI protocol.

The first application framework to take advantage of this support is PHP. In fact, the IIS team has worked directly with Zend Technologies to make sure that the IIS FastCGI implementation works well with PHP and enables performance improvements in the PHP framework on Windows. (For more on the project, see the posting on my blog at go.microsoft.com/fwlink/?LinkId=122509.) If you have a mix of Web server technologies that include ASP or ASP.NET applications running on IIS, and PHP or other FastCGI-compliant application frameworks using other technologies, you may be able to consolidate these apps on the IIS 7.0 platform.

Moving PHP and other application frameworks to IIS 7.0 and FastCGI will allow you to take advantage of various IIS 7.0 features, including the ASP.NET Integrated pipeline. This provides a very convenient option for enhancing application frameworks with ASP.NET services without converting them to ASP.NET. And this also enables applications using different frameworks to collaborate. For an example of how this can be used to enhance existing apps with functionality and improve performance without changing any code, see my MSDN® Magazine article "Enhance Your Apps with the Integrated ASP.NET Pipeline" (available at msdn.microsoft.com/magazine/cc135973.aspx).

Increase Application Density

IIS 7.0 includes many enhancements intended to increase the density of apps that can be hosted on a single server. These enhancements are part of many features that IIS 7.0 delivers to improve the quality of shared hosting, which include improved site provisioning and better application isolation.

From a performance perspective, the improvements focus mainly on two aspects of increasing application density—increasing the number of applications that can be provisioned on an IIS 7.0 server and increasing the number of applications that can be active at any given time.

Note that IIS 7.0 makes it possible to provision a larger number of applications on each server than previously possible. In some internal tests, up to 100,000 sites were provisioned on a single server. This draws on the ability to create and configure a large number of sites and applications.

A word of caution: in order to achieve high-speed provisioning, you need to move to the new configuration APIs, as older configuration APIs cannot achieve it. Additionally, not all IIS configuration APIs provide the same performance characteristics, so a careful choice is required to ensure high performance. When in doubt, go for configuration options that leverage the new IIS configuration objects directly, including the Microsoft.Web.Administration namespace, AppCmd.exe command line tool, and the IIS COM configuration objects accessed from script, .NET, or C++ code.

The difference between how many sites can be provisioned and how many can be active is a very important distinction in the IIS architecture, which uses persistent applications and worker processes to perform request processing. In this model, active applications consume more resources on the server, but also provide better sustained performance due to caching and removed initialization costs.

Because each active application requires a certain amount of memory and a separate worker process (if the recommended application isolation model is being used), the number of active applications depends heavily on the application memory footprint. Therefore, while a single application that only serves static content may require only 3MB for its worker process (and can even share the same worker process with other static content applications), some dynamic applications may require 100MB or more of RAM even under low usage. This makes the memory overhead of the IIS worker process itself insignificant when compared to the application's footprint when defining the maximum possible density.
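The memory arithmetic behind this point can be sketched in a few lines of Python. The 3MB and 100MB footprints come from the text above; the server size (16GB) and the amount held back for the operating system (2GB) are hypothetical figures chosen only for illustration.

```python
def max_active_apps(server_ram_mb: int, reserved_mb: int, app_footprint_mb: int) -> int:
    """Rough upper bound on simultaneously active applications.

    Assumes one worker process per application and ignores everything
    except the per-application memory footprint.
    """
    usable_mb = max(server_ram_mb - reserved_mb, 0)
    return usable_mb // app_footprint_mb


# Hypothetical server: 16 GB of RAM with 2 GB held back for the OS.
SERVER_RAM_MB = 16 * 1024
RESERVED_MB = 2 * 1024

static_apps = max_active_apps(SERVER_RAM_MB, RESERVED_MB, 3)     # static-content sites
dynamic_apps = max_active_apps(SERVER_RAM_MB, RESERVED_MB, 100)  # heavier dynamic apps
print(f"static: {static_apps}, dynamic: {dynamic_apps}")
```

Under these assumptions the application footprint, not the worker process baseline, sets the ceiling: the same server holds thousands of static sites but only on the order of a hundred heavy dynamic applications.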

In the typical shared hosting scenario, it is common to see what is called an 80/20 distribution, where 80 percent of requests go to 20 percent of sites. This results in a small percentage of sites being active at any given time. To allow a higher number of active sites, IIS 7.0 provides active lifetime management. This can help you to reclaim memory from inactive applications in order to allow more active applications to be hosted.

IIS 7.0 introduces a new algorithm called dynamic idle, which proactively adjusts the idle timeouts of worker processes based on memory available on the server. As memory becomes low, idle applications are removed more rapidly, thereby allowing more active applications to be hosted. However, if the memory is available, the timeouts can remain large to provide better performance and maintain application state. In addition to allowing more applications to be active, dynamic idle also helps avoid low memory conditions that cause severe performance degradation due to thrashing.
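To make the idea concrete, here is a minimal sketch of a memory-sensitive idle timeout. It is not the IIS dynamic idle algorithm, whose details are not described here; the linear scaling, the watermark values, and the function name are all assumptions made for illustration. The only borrowed fact is the general behavior: plenty of memory keeps the timeout long, scarce memory shortens it.

```python
def adjusted_idle_timeout(base_timeout_s: float,
                          free_memory_fraction: float,
                          low_watermark: float = 0.10,
                          high_watermark: float = 0.50,
                          min_timeout_s: float = 60.0) -> float:
    """Shrink a worker process idle timeout as free memory drops.

    Illustrative only: the watermarks and the linear interpolation are
    hypothetical, not taken from IIS.
    """
    if free_memory_fraction >= high_watermark:
        return base_timeout_s          # memory is plentiful: keep apps warm
    if free_memory_fraction <= low_watermark:
        return min_timeout_s           # memory is scarce: reclaim quickly
    span = high_watermark - low_watermark
    scale = (free_memory_fraction - low_watermark) / span
    return min_timeout_s + scale * (base_timeout_s - min_timeout_s)


# 1200 seconds corresponds to a 20-minute idle timeout.
for free in (0.80, 0.30, 0.05):
    print(f"free={free:.0%} -> timeout={adjusted_idle_timeout(1200, free):.0f}s")
```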

To make hosting of many active applications possible, you should also take advantage of a 64-bit operating system, as this will allow the OS to address more than 4GB of memory. On top of this, you can configure the IIS worker processes to run in 32-bit mode (SysWoW64), where they consume less memory themselves while allowing the OS to address more memory than what's possible in a 32-bit environment.

Bandwidth Reduction from Compression

It comes as no surprise that bandwidth costs are one of the top costs of running an Internet-facing datacenter. In addition, the bandwidth required to deliver requested content is a key factor in the perceived responsiveness of your application.

One of the most effective ways to reduce the bandwidth needed to deliver the application responses is to use HTTP compression. This can reduce the size of the response by a substantial amount, often by a factor of 10 when applied to easily compressible text content such as HTML. The best part is that virtually all desktop browsers support it, and decompression costs on desktop hardware are minor compared to the latency savings from sending less data. And since compression is negotiated through the Accept-Encoding and Content-Encoding headers defined in HTTP/1.1, enabling it is safe for clients that do not support compression: these clients simply receive an uncompressed version of the content.
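The negotiation can be illustrated with a short Python sketch. It uses the standard `gzip` module rather than anything from IIS, and the `build_response` helper is a hypothetical name. It also simplifies the protocol by ignoring quality values such as `gzip;q=0`.

```python
import gzip
from typing import Dict, Tuple


def build_response(body: bytes, accept_encoding: str) -> Tuple[Dict[str, str], bytes]:
    """Return (headers, payload), compressing only if the client offers gzip.

    Simplification: quality values (for example "gzip;q=0") are ignored.
    """
    offered = {token.split(";")[0].strip().lower()
               for token in accept_encoding.split(",") if token.strip()}
    if "gzip" in offered:
        return {"Content-Encoding": "gzip", "Vary": "Accept-Encoding"}, gzip.compress(body)
    # Client did not advertise gzip: send the content unchanged.
    return {"Vary": "Accept-Encoding"}, body


# Repetitive markup, typical of generated HTML tables, compresses very well.
html = b"<tr><td>row</td><td>value</td></tr>\n" * 2000

headers, payload = build_response(html, "gzip, deflate")
plain_headers, plain_payload = build_response(html, "")
print(f"original={len(html)} bytes, compressed={len(payload)} bytes")
```

A client that sends no Accept-Encoding header gets the original bytes back, which is why turning compression on does not break older clients.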

IIS 7.0 provides the two compression features introduced by its predecessor: static compression and dynamic compression. Static compression pre-compresses static content and saves it on disk, thereby allowing future requests to serve compressed content directly without compression overhead. Dynamic compression compresses the response in real time, and therefore enables compression for responses generated by applications. Any application framework on IIS 7.0 can take advantage of dynamic compression—including ASP, ASP.NET, or PHP.

Despite a common myth, dynamic compression usually does not have a prohibitive CPU overhead. In fact, dynamic compression often causes less than 5 percent of the total CPU utilization on a busy server. Dynamic compression can be deployed somewhat liberally to allow for maximum bandwidth savings for any application workloads.

You can further optimize compression overhead by configuring the compression strength in order to achieve the desired compression versus CPU overhead ratio. But it doesn't stop there—you can also configure your application to enable the caching of compressed content, which eliminates the compression overhead on cache hits by serving already-compressed content. Note that both ASP.NET and IIS Output caches have been upgraded with the optional capability to cache compressed content for clients that support it, as well as handle requests from clients that require uncompressed content.
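The trade-off between compression strength and CPU cost is easy to observe with Python's `zlib` module. This is only a stand-in: zlib's levels are not the IIS compression level setting, and the sample markup and the `measure` helper are made up for the experiment. Timings vary by machine, so treat the output as a relative comparison.

```python
import time
import zlib


def measure(data: bytes, level: int):
    """Compress data at the given zlib level; return (compressed size, seconds)."""
    start = time.perf_counter()
    compressed = zlib.compress(data, level)
    elapsed = time.perf_counter() - start
    return len(compressed), elapsed


# Synthetic, easily compressible markup.
sample = b"".join(b"<li class='item'>entry %d</li>\n" % i for i in range(20000))

for level in (1, 6, 9):
    size, seconds = measure(sample, level)
    print(f"level={level} size={size} ratio={len(sample) / size:.1f} "
          f"time={seconds * 1000:.2f} ms")
```

Higher levels generally buy a somewhat smaller response at a higher CPU cost, so the right setting depends on whether bandwidth or CPU is the scarcer resource for your workload.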

Media Bit Rate Throttling

With increasing numbers of sites offering media content, the bandwidth costs for many businesses are skyrocketing. What's worse, a large percentage of media bandwidth is wasted because the media content sent to the client is never really used. Why does this happen?

Think about the last time you watched an online video or saw a video ad when browsing. Did you watch the video to the very end? It is common for users browsing video sites to watch only part of a video before moving on to the next video or leaving the page. However, a Web server using progressive download to deliver the video will typically send significantly more data than is required for those few seconds of playtime. Most of that data never gets used.

If your videos on average only get 5 seconds of viewing time but deliver (buffer) 30 seconds worth of video data in those 5 seconds, you are potentially wasting more than 80 percent of your bandwidth!
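The figure follows from simple arithmetic: 25 of the 30 buffered seconds are never played. A small Python helper (the function name is just for illustration) makes the calculation reusable for your own viewing statistics.

```python
def wasted_fraction(watched_s: float, buffered_s: float) -> float:
    """Share of delivered video data that is never played."""
    if buffered_s <= 0:
        raise ValueError("buffered_s must be positive")
    unused_s = max(buffered_s - watched_s, 0.0)
    return unused_s / buffered_s


# 5 seconds watched, 30 seconds delivered: 25/30 of the data is wasted.
print(f"{wasted_fraction(5, 30):.1%}")
```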

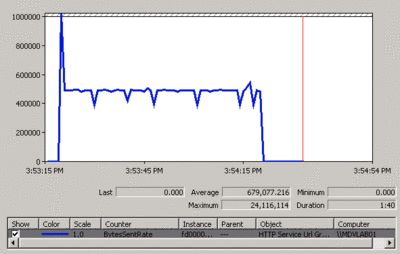

A year ago, to solve this problem for a customer adopting a beta release of IIS 7.0, I wrote a bandwidth throttling module that automatically detected the video bit rate and made sure that the server delivered the video to the client at roughly the same rate. The IIS media team picked up this module, which is known as the Bit Rate Throttling Module (see Figure 4) and is available at the iis.net download center (iis.net/downloads/?tabid=34&g=6&i=1640). You can learn more about it on Scott Guthrie's blog (available at go.microsoft.com/fwlink/?LinkId=122514).

The Bit Rate Throttling Module automatically detects the encoding rate of the most popular video types. You can control how much data you would like to pre-send to the client in order to eliminate initial buffering delays (fast start) and at what percentage of the encoded rate you would like to deliver the content. You can also configure many other options, such as maximum bandwidth and concurrent connections, and control the module programmatically.
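The two main knobs, fast start and delivery rate as a percentage of the encoded rate, can be pictured as a simple send budget. The sketch below is not the module's implementation or its configuration schema; the function name, parameter names, and default values are hypothetical.

```python
def bytes_allowed(elapsed_s: float, encoded_bps: float,
                  fast_start_s: float = 20.0, rate_percent: float = 100.0) -> int:
    """Bytes the server may have sent to one client after elapsed_s seconds.

    fast_start_s: seconds of video sent up front to avoid initial buffering.
    rate_percent: steady delivery rate as a percentage of the encoded bit rate.
    Both defaults are illustrative assumptions.
    """
    burst_bytes = fast_start_s * encoded_bps / 8
    throttled_bps = encoded_bps * rate_percent / 100
    return int(burst_bytes + max(elapsed_s, 0.0) * throttled_bps / 8)


# An 800 kbps video: a 20-second burst up front, then delivery at the encoded rate.
ENCODED_BPS = 800_000
for t in (0, 5, 60):
    print(f"t={t:>2}s budget={bytes_allowed(t, ENCODED_BPS):,} bytes")
```

If the viewer leaves after 5 seconds, the server has sent the burst plus 5 seconds of data instead of as much of the file as the connection could carry.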

Output Caching

Generally speaking, caching is the number-one way to improve the performance of applications that execute repeated work. Unlike application-specific performance improvements, which often require a lot of effort and chew away at the processing overhead of an app, caching eliminates the overhead of repeat requests for the same content.

Prior to IIS 7.0, both IIS and ASP.NET offered caching capabilities in the form of the IIS kernel cache and the ASP.NET Output Cache. The IIS kernel cache provided maximum performance, but was restricted primarily to static content. The ASP.NET Output Cache was a far more complete solution for caching dynamic content, albeit with slower performance and less efficient memory management. The new Output Cache in IIS 7.0 bridges the gap between the IIS kernel cache and the ASP.NET Output Cache.

The IIS 7.0 Output Cache enables caching of dynamic content from any application, including ASP, ASP.NET, PHP, and any other IIS 7.0-compatible application framework. By providing basic support for content variability and expiration, this new feature makes it possible to implement caching for content that cannot be cached by the IIS kernel cache. Still, it enables the use of the kernel cache for content that does meet the restrictions.

Additionally, the IIS 7.0 Output Cache also provides a higher performance alternative to the ASP.NET Output Cache for ASP.NET content that does not require the advanced caching features (such as cache dependencies or invalidation) that are only available in the ASP.NET Output Cache.

When it comes to output caching, the challenges normally lie in defining the proper content expiration, invalidation, and variability policies that allow efficient response caching while maintaining the required cache correctness and freshness. Much of the time, you can do this simply by configuring the proper caching rules, though some situations require more complex policies that depend on runtime information. To handle this, the IIS 7.0 Output Cache provides programmatic APIs that can be used to ensure the required caching behavior. This unlocks the caching performance potential for content that otherwise would not benefit from caching. In addition, the ASP.NET Integrated Pipeline enables the use of the ASP.NET Output Cache for non-ASP.NET content.
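The interplay of expiration and variability can be pictured with a toy cache. This is a generic Python sketch, not the IIS 7.0 Output Cache or its API: the `OutputCache` class, its parameters, and the injected clock are all assumptions for illustration. Entries expire after a fixed duration, and only the query string parameters named in `vary_by` produce distinct cached copies.

```python
import time


class OutputCache:
    """Tiny time-based response cache that varies entries by selected query keys."""

    def __init__(self, duration_s, vary_by=(), clock=time.monotonic):
        self.duration_s = duration_s
        self.vary_by = tuple(sorted(vary_by))
        self.clock = clock          # injectable so expiry can be tested
        self._entries = {}          # key -> (expires_at, body)

    def _key(self, path, query):
        # Parameters outside vary_by are ignored and share one cached copy.
        return (path, tuple((name, query.get(name)) for name in self.vary_by))

    def get_or_render(self, path, query, render):
        key = self._key(path, query)
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]                       # fresh hit: skip the application
        body = render(path, query)                # miss or expired: do the real work
        self._entries[key] = (now + self.duration_s, body)
        return body
```

A 30-second cache that varies by `id` would be created as `OutputCache(30, vary_by=["id"])`. Requests that differ only in other parameters, such as a tracking tag, are served from the same entry, which is the kind of policy decision that determines both hit rate and correctness.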

Deploying output caching for dynamic content can be tricky and may require a multitier strategy for capitalizing on the efficiencies and flexibility of the different caching features on the IIS 7.0 platform. This often includes describing multiple parameters that affect the response and defining both expiration and invalidation strategies to maintain the freshness of the cache, and then determining which cache will support the required semantics.

But this complexity is almost always worthwhile. By implementing IIS 7.0 Output Cache, you can achieve improvements of up to several orders of magnitude in overall throughput of your application and a matching reduction of load on your database and business tier components.

Converting ISAPI Code to IIS 7.0 Modules

IIS 7.0 introduces a new server API on which all of the IIS 7.0 modules are based. This replaces the legacy ISAPI Filter and ISAPI Extension APIs used in previous versions of IIS. For new modules that do not need to support previous versions, the new APIs are easier to use, help produce more reliable server-side code, and are much more powerful.

However, IIS 7.0 provides an option to support existing ISAPI filters and extensions through a compatibility layer implemented through optional IIS 7.0 modules. This allows existing ISAPI components to function on IIS 7.0 without requiring a rewrite.

Although using existing ISAPI investments lowers the bar to IIS 7.0 migration, you should strongly consider porting the legacy ISAPI code to the new IIS 7.0 APIs. Doing so eliminates the overhead of the ISAPI compatibility layer and unlocks performance benefits that are not available for ISAPI components. Depending on the work being performed by the ISAPI component, these performance benefits can be fairly significant. For example, the IIS 7.0 module API provides built-in support for caching configuration metadata and other arbitrary data associated with sites, applications, and URLs, which can significantly speed up internal operations of the component.

Additionally, the IIS module API allows modules to perform long-running operations asynchronously, such as receiving request entity data or sending the response. Performing these tasks asynchronously allows the server to scale to a very large number of concurrent clients (tens of thousands), instead of maxing out at tens or, at most, a few hundred concurrent clients due to limits on the number of request threads. Asynchronous processing can also eliminate the effect of processing on other requests and activities in the application, reduce memory usage, and enable much better CPU utilization.
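The scaling effect of asynchronous processing is not specific to IIS, so it can be demonstrated with Python's `asyncio` as a stand-in for the native module API (which is C++ and is not shown here). In this sketch, `asyncio.sleep` plays the role of waiting on request or response I/O, and the function names are hypothetical. A single thread keeps thousands of requests in flight because no thread is blocked while a request waits.

```python
import asyncio


async def handle_request(stats, io_delay_s):
    """Simulate one request that spends its time waiting on I/O."""
    stats["in_flight"] += 1
    stats["peak"] = max(stats["peak"], stats["in_flight"])
    await asyncio.sleep(io_delay_s)   # stands in for receiving or sending data
    stats["in_flight"] -= 1


async def serve(clients, io_delay_s=0.01):
    """Run all simulated requests concurrently on one thread."""
    stats = {"in_flight": 0, "peak": 0}
    await asyncio.gather(*(handle_request(stats, io_delay_s) for _ in range(clients)))
    return stats


stats = asyncio.run(serve(2000))
print(f"peak concurrent requests on one thread: {stats['peak']}")
```

With synchronous handlers, the same level of concurrency would need one blocked thread per request, which is where the limit of tens to a few hundred concurrent clients comes from.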

On top of the specific performance-affecting patterns, the native API gives developers greater flexibility for request-processing tasks. This will let you improve the design of the server component and, in turn, enable large efficiencies. For example, certain filtering tasks that previously required wildcard ISAPI extensions and child request execution can now be performed in a module during the original request, and can also enable IIS kernel caching for the response.

This may require some prototyping and experimentation to determine the optimal design that maximizes the benefits of the migration. Due to the fundamental architecture differences between ISAPI and the IIS 7.0 module API, the direct porting route is not necessarily the right one. The good news is that the IIS 7.0 module API is also more straightforward to use than the ISAPI, which makes migration less difficult.

Server Extensibility

IIS 7.0 offers end-to-end extensibility. It requires a good deal of up-front knowledge about the server operation, but it also unlocks tremendous potential for application-specific performance gains. Armed with some understanding of the server architecture and request processing, you can take advantage of this extensibility to design custom performance solutions tailored to your application, workload, and hardware requirements.

This can come in the form of replacing any of the built-in IIS 7.0 features with custom implementations. While IIS 7.0 features have undergone a lot of optimization and performance testing, they, of course, have not been optimized for every possible use. Therefore, you may be able to improve the performance of specific modules by building in optimizations for your specific workload. For example, you may decide to re-implement the directory listing module to cache directory listings in memory instead of using file system APIs to enumerate files.
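The directory listing example can be sketched in Python to show the shape of such an optimization. This is not an IIS module (those are written against the native or managed module APIs); the class name and the invalidation strategy are assumptions. The cache keeps each listing in memory and re-enumerates the directory only when its modification time changes.

```python
import os


class CachedDirectoryListing:
    """Cache sorted directory listings in memory, keyed by path and directory mtime."""

    def __init__(self):
        self._cache = {}       # path -> (mtime_ns, names)
        self.disk_reads = 0    # how many times the directory was actually enumerated

    def listing(self, path):
        # One stat call per request is much cheaper than a full enumeration.
        # Caveat: file systems with coarse timestamps can miss very rapid changes.
        mtime = os.stat(path).st_mtime_ns
        cached = self._cache.get(path)
        if cached is not None and cached[0] == mtime:
            return cached[1]
        names = sorted(os.listdir(path))
        self.disk_reads += 1
        self._cache[path] = (mtime, names)
        return names
```

Whether this is a win depends on the workload: it pays off for large, rarely changing directories that are listed often, and adds little for small or frequently modified ones.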

Alternatively, extensibility can be used to build new performance features. The built-in examples of such features include the Output Cache, the compression modules, and several other internal caches.

In order to determine the need for a custom performance feature, you need to understand the performance characteristics of your application and its workload as well as know the bottleneck you need to address. IIS 7.0 provides ample diagnostic support for performance analysis, which can make the requirements and possible design of the features you need clearer.

Wrapping Up

While the IIS 7.0 release is primarily a functional release, it offers noteworthy performance improvements derived from its modular architecture, configurability, and new performance features. These improvements can pave the way to significant business savings through server consolidation and reduced bandwidth costs, as well as provide a better user experience.

To properly leverage many of these improvements, it is often necessary to perform a thorough analysis of your current application platform and gain a fair amount of understanding of the IIS 7.0 architecture. To learn more about the IIS 7.0 architecture and features mentioned in this article, please visit iis.net. At mvolo.com, I will be blogging more about techniques that proactively leverage IIS 7.0 to improve application performance and reduce operating costs.

Mike Volodarsky is a former Program Manager on the IIS team at Microsoft. Over the past five years, he has driven the design and development of core features for ASP.NET 2.0 and IIS 7.0. Now he builds advanced Web server technologies for high-performance Web farms using IIS 7.0 and Windows Server 2008. Mike is the lead author of the book IIS 7.0 Resource Kit and writes actively for MSDN Magazine and his IIS 7.0 blog, mvolo.com.