Adding GIF Support to Android 2.3 Gallery3D: Code Changes (Part 3)

Overview

The two previous articles in this series:

"Adding GIF Support to Android 2.3 Gallery3D: Overview (Part 1)"

"Adding GIF Support to Android 2.3 Gallery3D: Image Display (Part 2)"

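Anyone who has read the Gallery3D source knows that there is a lot of it and that it is complicated, so this article does not analyze the code in great detail; the focus is on the flow. There are only a handful of key methods, and the remaining changes simply round them out.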

Reposting is welcome; please be sure to credit the source: http://blog.csdn.net/yihongyuelan/

There are several ways to make Android display GIF images, for example:

(1). Parse and display the GIF in Java.

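In Gallery3D, when an image is found to be a GIF, it is routed to a separate parse-and-display path. Parsing extracts every frame in the GIF along with the display interval between frames; display loops over the frames and shows each one in turn. The parser can be pulled in as a JAR, and many ready-made examples are available online.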

(2). Parse and display the GIF in C/C++.

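Android 2.3 bundles Skia, a powerful graphics engine (look it up if you want to know just how powerful), and Skia supports GIF decoding. So why did Google not use Skia to play GIFs? There are probably several reasons. My understanding is that default Android development targets the emulator, which, as everyone knows, has little CPU and memory to spare, so Google left GIF display out to keep the emulator running acceptably.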

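Back to the topic: we can decode the GIF with Skia and then adjust the Gallery3D display logic to show it. This should be considerably more efficient than decoding in Java. And since Skia can decode GIFs, ImageView and other widgets could be adapted in a similar way.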
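As a rough illustration of the display half of approach (1), the sketch below maps elapsed playback time to a frame index using per-frame delays. It is only a sketch and not code from Gallery3D: the class name `GifFrameClock` and its methods are hypothetical, and it assumes a parser has already extracted the delays in milliseconds.

```java
/** Hypothetical helper: picks the frame to show for a given elapsed time. */
final class GifFrameClock {
    private final int[] delaysMs; // display time of each frame, in milliseconds
    private final long totalMs;   // duration of one full loop

    GifFrameClock(int[] delaysMs) {
        if (delaysMs == null || delaysMs.length == 0) {
            throw new IllegalArgumentException("at least one frame is required");
        }
        long total = 0;
        for (int d : delaysMs) {
            if (d <= 0) {
                throw new IllegalArgumentException("delays must be positive");
            }
            total += d;
        }
        this.delaysMs = delaysMs.clone();
        this.totalMs = total;
    }

    /** Returns the index of the frame visible after elapsedMs, looping forever. */
    int frameAt(long elapsedMs) {
        long t = Math.floorMod(elapsedMs, totalMs); // position inside the current loop
        for (int i = 0; i < delaysMs.length; i++) {
            if (t < delaysMs[i]) {
                return i;
            }
            t -= delaysMs[i];
        }
        return delaysMs.length - 1; // not reached, since t < totalMs
    }
}
```

A caller would sample `frameAt(now - startTime)` on every redraw and swap the displayed bitmap whenever the index changes.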

Basic framework

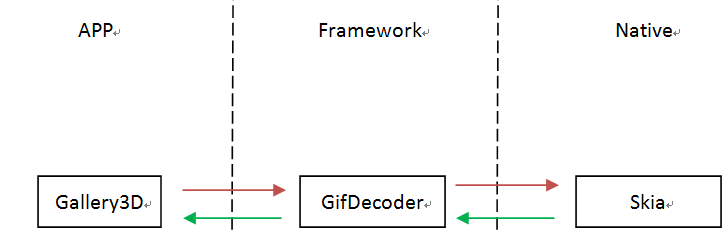

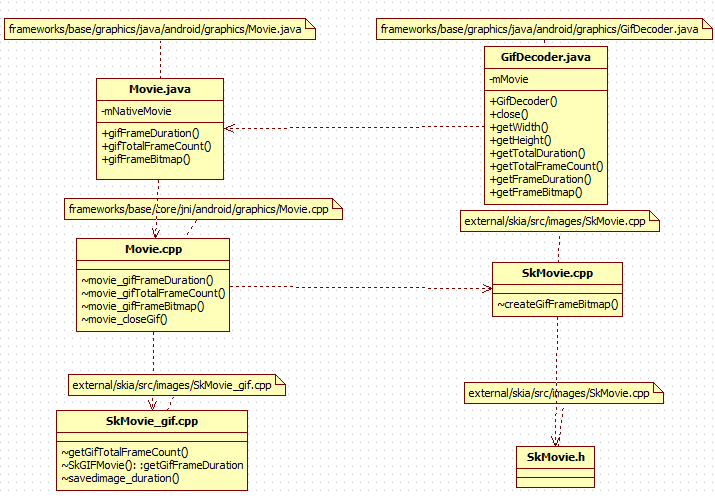

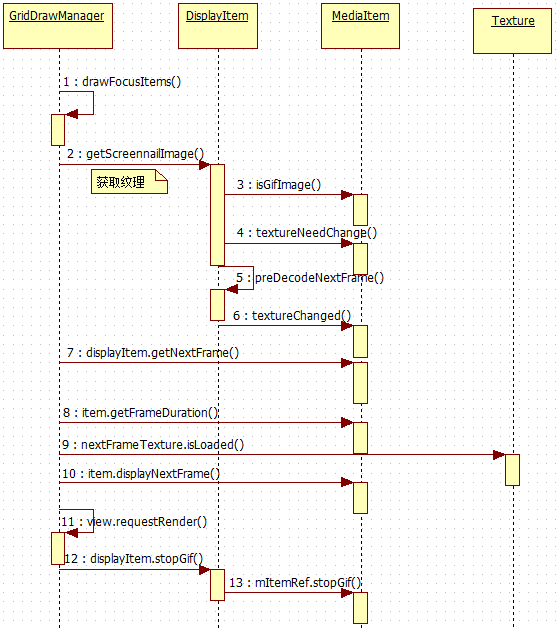

This article uses the second approach. The overall flow is shown in Figure 1:

Figure 1

Framework walkthrough

APP layer

void drawFocusItems(RenderView view, GL11 gl, float zoomValue, boolean slideshowMode, float timeElapsedSinceView) {

int selectedSlotIndex = mSelectedSlot;

GridDrawables drawables = mDrawables;

int firstBufferedVisibleSlot = mBufferedVisibleRange.begin;

int lastBufferedVisibleSlot = mBufferedVisibleRange.end;

boolean isCameraZAnimating = mCamera.isZAnimating();

+ // Stop playback when the conditions for GIF playback are not met

+ boolean playGifMode = false;

for (int i = firstBufferedVisibleSlot; i <= lastBufferedVisibleSlot; ++i) {

+ DisplayItem displayItem = displayItems[(i - firstBufferedVisibleSlot) * GridLayer.MAX_ITEMS_PER_SLOT];

+ if (i != selectedSlotIndex && displayItem != null) {

+ displayItem.stopGif();

+ }

if (selectedSlotIndex != Shared.INVALID && (i >= selectedSlotIndex - 2 && i <= selectedSlotIndex + 2)) {

continue;

}

- DisplayItem displayItem = displayItems[(i - firstBufferedVisibleSlot) * GridLayer.MAX_ITEMS_PER_SLOT];

if (displayItem != null) {

displayItem.clearScreennailImage();

}

return;

}

boolean focusItemTextureLoaded = false;

- Texture centerTexture = centerDisplayItem.getScreennailImage(view.getContext());

+ // Get the texture for the image

+ Texture centerTexture = centerDisplayItem.getScreennailImage(view);

if (centerTexture != null && centerTexture.isLoaded()) {

focusItemTextureLoaded = true;

}

view.setAlpha(1.0f);

gl.glEnable(GL11.GL_BLEND);

gl.glBlendFunc(GL11.GL_ONE, GL11.GL_ONE);

+ int selectedIndexInDrawnArray = (selectedSlotIndex - firstBufferedVisibleSlot)

+ * GridLayer.MAX_ITEMS_PER_SLOT;

+ DisplayItem selectedDisplayItem = displayItems[selectedIndexInDrawnArray];

+ MediaItem selectedItem = selectedDisplayItem.mItemRef;

+ if (selectedItem.isGifImage() && !slideshowMode) {

+ playGifMode = true;

+ mDisplayGifItem = selectedDisplayItem;

+ }

float backupImageTheta = 0.0f;

for (int i = -1; i <= 1; ++i) {

if (slideshowMode && timeElapsedSinceView > 1.0f && i != 0)

continue;

+ // Added check for GIF mode

+ if (playGifMode && i != 0) {

+ continue;

+ }

float viewAspect = camera.mAspectRatio;

int selectedSlotToUse = selectedSlotIndex + i;

if (selectedSlotToUse >= 0 && selectedSlotToUse <= lastBufferedVisibleSlot) {

DisplayItem displayItem = displayItems[indexInDrawnArray];

MediaItem item = displayItem.mItemRef;

final Texture thumbnailTexture = displayItem.getThumbnailImage(view.getContext(), sThumbnailConfig);

- Texture texture = displayItem.getScreennailImage(view.getContext());

- if (isCameraZAnimating && (texture == null || !texture.isLoaded())) {

+ // Get the next frame

+ Texture texture = displayItem.getScreennailImage(view);

+ Texture nextFrameTexture = displayItem.getNextFrame();

+ if (playGifMode) {

+ long now = SystemClock.uptimeMillis();

+ if ((now - mPreTime) > item.getFrameDuration() && nextFrameTexture != null

+ && nextFrameTexture.isLoaded()) {

+ item.displayNextFrame();

+ mPreTime = now;

+ }

+ view.requestRender();

+ }

+ // Added check for GIF mode

+ if (!playGifMode && isCameraZAnimating && (texture == null || !texture.isLoaded())) {

texture = thumbnailTexture;

mSelectedMixRatio.setValue(0f);

mSelectedMixRatio.animateValue(1f, 0.75f, view.getFrameTime());

}

- Texture hiRes = (zoomValue != 1.0f && i == 0 && item.getMediaType() != MediaItem.MEDIA_TYPE_VIDEO) ? displayItem

+ // Added check for GIF mode

+ Texture hiRes = (!playGifMode && zoomValue != 1.0f && i == 0 && item.getMediaType() != MediaItem.MEDIA_TYPE_VIDEO) ? displayItem

.getHiResImage(view.getContext())

: null;

if (App.PIXEL_DENSITY > 1.0f) {

}

}

texture = thumbnailTexture;

- if (i == 0) {

+ // Added check for GIF mode

+ if (i == 0 && !playGifMode) {

mSelectedMixRatio.setValue(0f);

mSelectedMixRatio.animateValue(1f, 0.75f, view.getFrameTime());

- if (i == 0) {

+ // Added check for GIF mode

+ if (i == 0 && !playGifMode) {

mSelectedMixRatio.setValue(0f);

- if (i == 0) {

- if (i == 0) {

+ // Added check for GIF mode

+ if (i == 0 && !playGifMode) {

mSelectedMixRatio.setValue(0f);

mSelectedMixRatio.animateValue(1f, 0.75f, view.getFrameTime());

+ // If the texture is already loaded, pre-decode the second frame

+ }

+ } else if (texture.isLoaded()) {

+ if (playGifMode && nextFrameTexture == null) {

+ mPreTime = SystemClock.uptimeMillis();

+ displayItem.preDecodeNextFrame(view);

}

}

if (mCamera.isAnimating() || slideshowMode) {

continue;

}

}

- int theta = (int) displayItem.getImageTheta();

+ // Take the absolute value

+ int theta = Math.abs((int) displayItem.getImageTheta());

// If it is in slideshow mode, we draw the previous item in

// the next item's position.

if (slideshowMode && timeElapsedSinceView < 1.0f && timeElapsedSinceView != 0) {

int vboIndex = i + 1;

float alpha = view.getAlpha();

float selectedMixRatio = mSelectedMixRatio.getValue(view.getFrameTime());

- if (selectedMixRatio != 1f) {

+ // Added check for GIF mode

+ if (selectedMixRatio != 1f && !playGifMode) {

texture = thumbnailTexture;

view.setAlpha(alpha * (1.0f - selectedMixRatio));

}

view.unbindMixed();

}

}

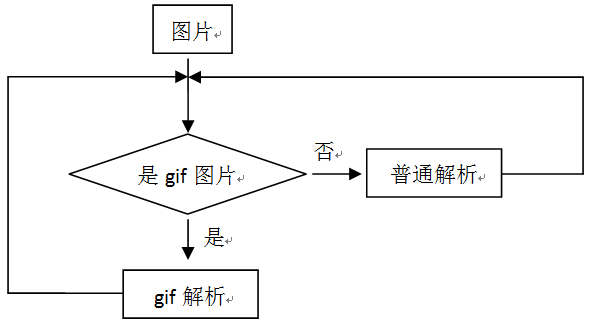

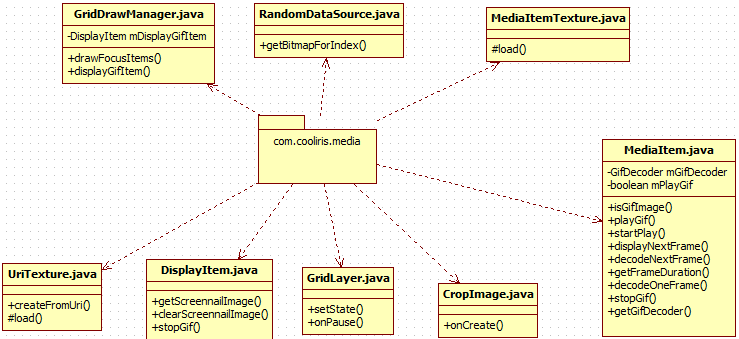

This is the GIF display logic depicted in Figure 2. Many other places in the APP layer also need changes, as shown in Figure 3:

+ // Check whether this is a GIF image

+ public boolean isGifImage() {

+ return ("image/gif".equals(mMimeType));

+ }

+ // Whether the GIF is currently playing

+ public boolean playGif() {

+ return mPlayGif;

+ }

+ // Get the display index

+ public int getDisplayIndex() {

+ return mDisplayIndex;

+ }

+ // Get the decode index

+ public int getDecodeIndex() {

+ return mDecodeIndex;

+ }

+ // Advance the display index to the next frame

+ public void displayNextFrame() {

+ if (mGifDecoder != null) {

+ int count = mGifDecoder.getTotalFrameCount() - 1;

+ if (mDisplayIndex < count) {

+ mDisplayIndex++;

+ } else if (mDisplayIndex == count) {

+ mDisplayIndex = 0;

+ }

+ mTextureNeedChange = true;

+ }

+ }

+ // Whether the texture needs to change

+ public boolean textureNeedChange() {

+ return mTextureNeedChange;

+ }

+ // Mark the texture as changed

+ public void textureChanged() {

+ mTextureNeedChange = false;

+ }

+ // Get the GIF decoder; every call into the lower layers goes through it

+ public synchronized GifDecoder getGifDecoder(Context context) {

+ if (mGifDecoder == null) {

+ InputStream inputStream = null;

+ ContentResolver cr = context.getContentResolver();

+ try {

+ inputStream = cr.openInputStream(Uri.parse(mContentUri));

+ mGifDecoder = new GifDecoder(inputStream);

+ inputStream.close();

+ } catch (FileNotFoundException e) {

+ // TODO Auto-generated catch block

+ e.printStackTrace();

+ } catch (IOException e) {

+ e.printStackTrace();

+ }

+ }

+ return mGifDecoder;

+ }

+ // Get the display time of a single frame

+ public int getFrameDuration() {

+ if (mGifDecoder != null) {

+ return mGifDecoder.getFrameDuration(mDisplayIndex);

+ }

+ return Shared.INFINITY;

+ }

+ // Decode one frame

+ public synchronized Bitmap decodeOneFrame() {

+ Bitmap bitmap = null;

+ if (mGifDecoder != null) {

+ int count = mGifDecoder.getTotalFrameCount() - 1;

+ bitmap = mGifDecoder.getFrameBitmap(mDecodeIndex);

+ // draw the bitmap to a white canvas

+ bitmap = Util.whiteBackground(bitmap);

+ if (mDecodeIndex < count) {

+ mDecodeIndex++;

+ } else if (mDecodeIndex == count) {

+ mDecodeIndex = 0;

+ }

+ }

+ return bitmap;

+ }

+ // Stop playback

+ public void stopGif() {

+ if (mGifDecoder != null) {

+ mGifDecoder.close();

+ mGifDecoder = null;

+ }

+ mPlayGif = false;

+ mDisplayIndex = 0;

+ mDecodeIndex = 0;

+ mTextureNeedChange = false;

+ }
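The index handling in displayNextFrame() and the timing check in drawFocusItems() can be condensed into one small state object. The following is a minimal sketch under stated assumptions, not the Gallery3D code itself: the class `GifPlayback` and its method names are hypothetical, and frame durations are passed in as a plain array instead of being queried from a decoder.

```java
/** Hypothetical condensed model of the display-index and timing logic. */
final class GifPlayback {
    private final int[] frameDurationsMs; // assumed to come from the decoder
    private int displayIndex;
    private long lastAdvanceMs;           // plays the role of mPreTime

    GifPlayback(int[] frameDurationsMs, long startMs) {
        this.frameDurationsMs = frameDurationsMs.clone();
        this.lastAdvanceMs = startMs;
    }

    /** Same wrap-around rule as displayNextFrame(): the last frame goes back to 0. */
    static int next(int index, int totalFrames) {
        int last = totalFrames - 1;
        if (index < last) {
            return index + 1;
        }
        if (index == last) {
            return 0;
        }
        return index; // empty or out-of-range input is left unchanged
    }

    /**
     * Advances only when the current frame has been shown for longer than its
     * duration and the next frame is ready. Returns true if the frame changed.
     */
    boolean tick(long nowMs, boolean nextFrameReady) {
        if (frameDurationsMs.length == 0) {
            return false;
        }
        if (nextFrameReady && (nowMs - lastAdvanceMs) > frameDurationsMs[displayIndex]) {
            displayIndex = next(displayIndex, frameDurationsMs.length);
            lastAdvanceMs = nowMs;
            return true;
        }
        return false;
    }

    int displayIndex() {
        return displayIndex;
    }
}
```

Gating the advance on `nextFrameReady` means a slow decode stretches the current frame instead of showing a half-loaded texture, which appears to be the intent of the `nextFrameTexture.isLoaded()` check.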

Framework layer

package android.graphics;

import java.io.InputStream;

import java.io.FileInputStream;

import java.io.BufferedInputStream;

import android.util.Log;

import android.graphics.Bitmap;

public class GifDecoder {

/**

* Specify the minimal frame duration in GIF file, unit is ms.

* With a value of 100, gif animation behaves much like it does in other gif viewers.

*/

private static final int MINIMAL_DURATION = 100;

/**

* Value returned by some member functions on failure, such as getWidth(),

* getTotalFrameCount(), getFrameDuration()

*/

public static final int INVALID_VALUE = 0;

/**

* Movie object maintained by GifDecoder. It contains raw GIF info

* such as graphic control information, the color table and pixel indexes.

* It holds the GIF frame bitmaps at 8 bits per pixel,

* using SkColorTable to specify the colors, which is much more

* memory efficient than the ARGB_8888 config. This is why we

* maintain a Movie object rather than a set of ARGB_8888 Bitmaps.

*/

private Movie mMovie;

/**

* Constructor of GifDecoder, which receives InputStream as

* parameter. Decode an InputStream into Movie object.

* If the InputStream is null, no decoding will be performed

*

* @param is InputStream representing the file to be decoded.

*/

public GifDecoder(InputStream is) {

if (is == null)

return;

mMovie = Movie.decodeStream(is);

}

public GifDecoder(byte[] data, int offset,int length) {

if (data == null)

return;

mMovie = Movie.decodeByteArray(data, offset, length);

}

/**

* Constructor of GifDecoder, which receives file path name as

* parameter. Decode a file path into Movie object.

* If the specified file name is null, no decoding will be performed

*

* @param pathName complete path name for the file to be decoded.

*/

public GifDecoder(String pathName) {

if (pathName == null)

return;

mMovie = Movie.decodeFile(pathName);

}

/**

* Close the gif file and release all information such as frame count,

* graphic control information, color table and pixel indexes.

* Call this when the application is no longer interested in the gif.

* It releases all the memory mMovie occupies. After close()

* is called, the GifDecoder should no longer be used.

*/

public synchronized void close(){

if (mMovie == null)

return;

mMovie.closeGif();

mMovie = null;

}

/**

* Get width of images in gif file.

* if member mMovie is null, returns INVALID_VALUE

*

* @return The width of the images in the gif file,

* or INVALID_VALUE if the mMovie is null

*/

public synchronized int getWidth() {

if (mMovie == null)

return INVALID_VALUE;

return mMovie.width();

}

/**

* Get height of images in gif file.

* if member mMovie is null, returns INVALID_VALUE

*

* @return The height of the images in the gif file,

* or INVALID_VALUE if the mMovie is null

*/

public synchronized int getHeight() {

if (mMovie == null)

return INVALID_VALUE;

return mMovie.height();

}

/**

* Get total duration of gif file.

* if member mMovie is null, returns INVALID_VALUE

*

* @return The total duration of gif file,

* or INVALID_VALUE if the mMovie is null

*/

public synchronized int getTotalDuration() {

if (mMovie == null)

return INVALID_VALUE;

return mMovie.duration();

}

/**

* Get total frame count of gif file.

* if member mMovie is null, returns INVALID_VALUE

*

* @return The total frame count of gif file,

* or INVALID_VALUE if the mMovie is null

*/

public synchronized int getTotalFrameCount() {

if (mMovie == null)

return INVALID_VALUE;

return mMovie.gifTotalFrameCount();

}

/**

* Get frame duration specified with frame index of gif file.

* if member mMovie is null, returns INVALID_VALUE

*

* @param frameIndex index of frame interested.

* @return The duration of the specified frame,

* or INVALID_VALUE if the mMovie is null

*/

public synchronized int getFrameDuration(int frameIndex) {

if (mMovie == null)

return INVALID_VALUE;

int duration = mMovie.gifFrameDuration(frameIndex);

if (duration < MINIMAL_DURATION)

duration = MINIMAL_DURATION;

return duration;

}

/**

* Get frame bitmap specified with frame index of gif file.

* if member mMovie is null, returns null

*

* @param frameIndex index of frame interested.

* @return The decoded bitmap, or null if the mMovie is null

*/

public synchronized Bitmap getFrameBitmap(int frameIndex) {

if (mMovie == null)

return null;

return mMovie.gifFrameBitmap(frameIndex);

}

}
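To make the role of MINIMAL_DURATION concrete: the GIF89a format stores each frame's delay in hundredths of a second as a little-endian 16-bit value inside the Graphic Control Extension, and many files set it to 0. The sketch below converts such a value to milliseconds and applies the same 100 ms floor as getFrameDuration(). The class `GifDelay` is hypothetical, and the 4-byte payload layout (packed flags, delay low byte, delay high byte, transparent index) is an assumption about how the caller hands over the data.

```java
/** Hypothetical helper mirroring the clamp in GifDecoder.getFrameDuration(). */
final class GifDelay {
    static final int MINIMAL_DURATION_MS = 100;

    /**
     * @param gce the 4 payload bytes of a Graphic Control Extension, assumed to be
     *            laid out as [packed flags, delay low, delay high, transparent index]
     * @return the frame delay in milliseconds, never below MINIMAL_DURATION_MS
     */
    static int delayMs(byte[] gce) {
        if (gce == null || gce.length < 4) {
            return MINIMAL_DURATION_MS; // no usable timing info: fall back to the floor
        }
        int hundredths = ((gce[2] & 0xFF) << 8) | (gce[1] & 0xFF);
        return Math.max(hundredths * 10, MINIMAL_DURATION_MS);
    }
}
```

Without the floor, a GIF that declares a delay of 0 would ask the renderer to switch frames on every redraw.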

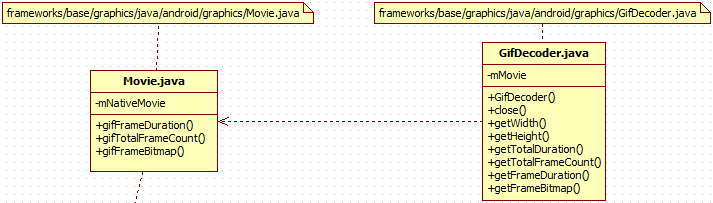

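The code is commented, so I will not go through it in detail. Reading it shows that GifDecoder is really a thin wrapper around Movie, that is, AndroidSource/frameworks/base/graphics/java/android/graphics/Movie.java. Why go through Movie.java? GifDecoder calls Movie's decodeStream method, but several of the interfaces down to the native layer that we need do not exist yet. Rather than start from scratch, we borrow Movie.java and declare the extra native methods there directly. We could of course write a class of our own, but why not use what is already available? The changes to Movie.java are as follows: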

public class Movie {

private final int mNativeMovie;

public native boolean setTime(int relativeMilliseconds);

public native void draw(Canvas canvas, float x, float y, Paint paint);

+ // Interfaces to the native layer are added here

+ //please see GifDecoder for information

+ public native int gifFrameDuration(int frameIndex);

+ public native int gifTotalFrameCount();

+ public native Bitmap gifFrameBitmap(int frameIndex);

+ public native void closeGif();

public void draw(Canvas canvas, float x, float y) {

draw(canvas, x, y, null);

}

- public static native Movie decodeStream(InputStream is);

+ // This change lets the decoder work properly

+

+ public static Movie decodeStream(InputStream is) {

+ // we need mark/reset to work properly

+

+ if (!is.markSupported()) {

+ //the size of Buffer is aligned with BufferedInputStream

+ // used in BitmapFactory of Android default version.

+ is = new BufferedInputStream(is, 8*1024);

+ }

+ is.mark(1024);

+

+ return decodeMarkedStream(is);

+ }

+

+// public static native Movie decodeStream(InputStream is);

+ private static native Movie decodeMarkedStream(InputStream is);
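The reason decodeStream() wraps the input is that a consumer can only rewind a stream that supports mark/reset. The following standalone sketch shows the same pattern with standard `java.io` classes only; the class `MarkResetDemo` and its methods are hypothetical names chosen for illustration.

```java
import java.io.BufferedInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.util.Arrays;

/** Hypothetical demo of the mark/reset pattern used in Movie.decodeStream(). */
final class MarkResetDemo {
    /** Wraps the stream in a BufferedInputStream only if it cannot be marked. */
    static InputStream ensureMarkable(InputStream is) {
        return is.markSupported() ? is : new BufferedInputStream(is, 8 * 1024);
    }

    /** Reads up to n bytes, then rewinds so the next reader starts at the same place. */
    static byte[] peek(InputStream is, int n) {
        try {
            is.mark(n); // the mark stays valid as long as at most n bytes are read
            byte[] header = new byte[n];
            int read = 0;
            while (read < n) {
                int r = is.read(header, read, n - read);
                if (r < 0) {
                    break; // end of stream
                }
                read += r;
            }
            is.reset(); // back to the marked position
            return Arrays.copyOf(header, read);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Calling `peek` twice on the same stream returns the same bytes, which is the behaviour a format sniffer followed by a real decoder relies on.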

The Java changes in the Framework layer mainly involve GifDecoder.java and Movie.java, as shown in Figure 4:

Native layer

+//please see Movie.java for information

+static int movie_gifFrameDuration(JNIEnv* env, jobject movie, int frameIndex) {

+ NPE_CHECK_RETURN_ZERO(env, movie);

+ SkMovie* m = J2Movie(env, movie);

+//LOGE("Movie:movie_gifFrameDuration: frame number %d, duration is %d", frameIndex,m->getGifFrameDuration(frameIndex));

+ return m->getGifFrameDuration(frameIndex);

+}

+

+static jobject movie_gifFrameBitmap(JNIEnv* env, jobject movie, int frameIndex) {

+ NPE_CHECK_RETURN_ZERO(env, movie);

+ SkMovie* m = J2Movie(env, movie);

+ int averTimePoint = 0;

+ int frameDuration = 0;

+ int frameCount = m->getGifTotalFrameCount();

+ if (frameIndex < 0 || frameIndex >= frameCount )

+ return NULL;

+ m->setCurrFrame(frameIndex);

+//then we get frameIndex Bitmap (the current frame of movie is frameIndex now)

+ SkBitmap *createdBitmap = m->createGifFrameBitmap();

+ if (createdBitmap != NULL)

+ {

+ return GraphicsJNI::createBitmap(env, createdBitmap, false, NULL);

+ }

+ else

+ {

+ return NULL;

+ }

+}

+

+static int movie_gifTotalFrameCount(JNIEnv* env, jobject movie) {

+ NPE_CHECK_RETURN_ZERO(env, movie);

+ SkMovie* m = J2Movie(env, movie);

+//LOGE("Movie:movie_gifTotalFrameCount: frame count %d", m->getGifTotalFrameCount());

+ return m->getGifTotalFrameCount();

+}

+

+static void movie_closeGif(JNIEnv* env, jobject movie) {

+ NPE_CHECK_RETURN_VOID(env, movie);

+ SkMovie* m = J2Movie(env, movie);

+//LOGE("Movie:movie_closeGif()");

+ delete m;

+}

+

static jobject movie_decodeStream(JNIEnv* env, jobject clazz, jobject istream) {

NPE_CHECK_RETURN_ZERO(env, istream);

{ "setTime", "(I)Z", (void*)movie_setTime },

{ "draw", "(Landroid/graphics/Canvas;FFLandroid/graphics/Paint;)V",

(void*)movie_draw },

- { "decodeStream", "(Ljava/io/InputStream;)Landroid/graphics/Movie;",

+//please see Movie.java for information

+ { "gifFrameDuration", "(I)I",

+ (void*)movie_gifFrameDuration },

+ { "gifFrameBitmap", "(I)Landroid/graphics/Bitmap;",

+ (void*)movie_gifFrameBitmap },

+ { "gifTotalFrameCount", "()I",

+ (void*)movie_gifTotalFrameCount },

+ { "closeGif", "()V",

+ (void*)movie_closeGif },

+ { "decodeMarkedStream", "(Ljava/io/InputStream;)Landroid/graphics/Movie;",

(void*)movie_decodeStream },

+// { "decodeStream", "(Ljava/io/InputStream;)Landroid/graphics/Movie;",

+// (void*)movie_decodeStream },

{ "decodeByteArray", "([BII)Landroid/graphics/Movie;",

(void*)movie_decodeByteArray },

};
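The signature strings in the method table, such as "(I)I" and "(I)Landroid/graphics/Bitmap;", follow the JNI type descriptor format: parameter types in parentheses, then the return type. As an aid for checking such strings by hand, the sketch below derives a descriptor from Java `Class` objects. The class `JniDescriptor` is a hypothetical helper written for this illustration and is not part of Android or the JDK.

```java
/** Hypothetical helper that builds JNI method descriptors from Class objects. */
final class JniDescriptor {
    /** Descriptor of a single type, e.g. int -> "I", String -> "Ljava/lang/String;". */
    static String of(Class<?> type) {
        if (type == void.class) return "V";
        if (type == boolean.class) return "Z";
        if (type == byte.class) return "B";
        if (type == char.class) return "C";
        if (type == short.class) return "S";
        if (type == int.class) return "I";
        if (type == long.class) return "J";
        if (type == float.class) return "F";
        if (type == double.class) return "D";
        if (type.isArray()) {
            return "[" + of(type.getComponentType());
        }
        // Reference types use the binary name with '/' instead of '.'
        return "L" + type.getName().replace('.', '/') + ";";
    }

    /** Descriptor of a method: "(" + parameter descriptors + ")" + return descriptor. */
    static String method(Class<?> returnType, Class<?>... params) {
        StringBuilder sb = new StringBuilder("(");
        for (Class<?> p : params) {
            sb.append(of(p));
        }
        return sb.append(')').append(of(returnType)).toString();
    }
}
```

For example, `JniDescriptor.method(int.class, int.class)` yields the "(I)I" used for gifFrameDuration, and a method taking `(byte[], int, int)` starts with "([BII)" like decodeByteArray.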

From the code above we can see that the calls end up in AndroidSource/external/skia/src/images/SkMovie.cpp and AndroidSource/external/skia/src/images/SkMovie_gif.cpp. First, the changes to SkMovie.cpp:

+int SkMovie::getGifFrameDuration(int frameIndex)

+{

+ return 0;

+}

+

+int SkMovie::getGifTotalFrameCount()

+{

+ return 0;

+}

+

+bool SkMovie::setCurrFrame(int frameIndex)

+{

+ return true;

+}

+

+SkBitmap* SkMovie::createGifFrameBitmap()

+{

+ //get default bitmap, create a new bitmap and returns.

+ SkBitmap *copyedBitmap = new SkBitmap();

+ bool copyDone = false;

+ if (fNeedBitmap)

+ {

+ if (!this->onGetBitmap(&fBitmap)) // failure

+ fBitmap.reset();

+ }

+ //now create a new bitmap from fBitmap

+ if (fBitmap.canCopyTo(SkBitmap::kARGB_8888_Config) )

+ {

+//LOGE("SkMovie:createGifFrameBitmap:fBitmap can copy to 8888 config, then copy...");

+ copyDone = fBitmap.copyTo(copyedBitmap, SkBitmap::kARGB_8888_Config);

+ }

+ else

+ {

+ copyDone = false;

+//LOGE("SkMovie:createGifFrameBitmap:fBitmap can NOT copy to 8888 config");

+ }

+

+ if (copyDone)

+ {

+ return copyedBitmap;

+ }

+ else

+ {

+ delete copyedBitmap; // free the unused bitmap so a failed copy does not leak

+ return NULL;

+ }

+}
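createGifFrameBitmap() returns the frame as an ARGB_8888 bitmap. For a palette-based format such as GIF, producing 32-bit pixels comes down to a palette lookup per pixel that skips the transparent index. The sketch below shows that lookup in Java for one scanline. It is an illustration under stated assumptions rather than Skia code: the class `PaletteExpander` is hypothetical, palette entries are assumed to be packed as 0xRRGGBB, and the output uses the 0xAARRGGBB layout of `android.graphics.Color`, which may differ from Skia's internal packing.

```java
/** Hypothetical illustration of expanding palette indices to 32-bit ARGB. */
final class PaletteExpander {
    /**
     * @param dst              destination scanline, one 0xAARRGGBB int per pixel
     * @param src              source scanline, one palette index per pixel
     * @param palette          color table, each entry packed as 0xRRGGBB (assumed)
     * @param transparentIndex index treated as transparent, or -1 if there is none
     */
    static void expandLine(int[] dst, byte[] src, int[] palette, int transparentIndex) {
        int n = Math.min(dst.length, src.length);
        for (int i = 0; i < n; i++) {
            int index = src[i] & 0xFF; // bytes are signed in Java
            if (index == transparentIndex) {
                continue; // keep whatever the previous frame left in dst
            }
            if (index >= palette.length) {
                continue; // defensive: ignore indices outside the color table
            }
            dst[i] = 0xFF000000 | (palette[index] & 0x00FFFFFF); // opaque pixel
        }
    }
}
```

Leaving transparent pixels untouched is what allows a frame to be drawn on top of the previous one.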

Next come the changes to SkMovie_gif.cpp (for platform reasons the full code is pasted here rather than a diff):

#include "SkMovie.h"

#include "SkColor.h"

#include "SkColorPriv.h"

#include "SkStream.h"

#include "SkTemplates.h"

#include "SkUtils.h"

#include <stdlib.h>

#include <stdio.h>

#include <string.h>

#include "gif_lib.h"

#include "utils/Log.h"

class SkGIFMovie : public SkMovie {

public:

SkGIFMovie(SkStream* stream);

virtual ~SkGIFMovie();

protected:

virtual bool onGetInfo(Info*);

virtual bool onSetTime(SkMSec);

virtual bool onGetBitmap(SkBitmap*);

//please see Movie.cpp for information

virtual int getGifFrameDuration(int frameIndex);

virtual int getGifTotalFrameCount();

virtual bool setCurrFrame(int frameIndex);

private:

bool checkGifStream(SkStream* stream);

bool getWordFromStream(SkStream* stream,int* word);

bool getRecordType(SkStream* stream,GifRecordType* Type);

bool checkImageDesc(SkStream* stream,char* buf);

bool skipExtension(SkStream* stream,char* buf);

bool skipComment(SkStream* stream,char* buf);

bool skipGraphics(SkStream* stream,char* buf);

bool skipPlaintext(SkStream* stream,char* buf);

bool skipApplication(SkStream* stream,char* buf);

bool skipSubblocksWithTerminator(SkStream* stream,char* buf);

private:

GifFileType* fGIF;

int fCurrIndex;

int fLastDrawIndex;

SkBitmap fBackup;

};

static int Decode(GifFileType* fileType, GifByteType* out, int size) {

SkStream* stream = (SkStream*) fileType->UserData;

return (int) stream->read(out, size);

}

SkGIFMovie::SkGIFMovie(SkStream* stream)

{

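//initialize members first so the early returns below leave the object in a safe state

fGIF = NULL;

fCurrIndex = -1;

fLastDrawIndex = -1;

int streamLength = stream->getLength();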

//if the length of the SkStream is zero or below, no further parsing is needed

if (streamLength <= 0) {

LOGE("SkGIFMovie:SkGIFMovie: GIF source file length is below 0");

return;

}

//allocate a buffer to hold content of SkStream

void * streamBuffer = malloc(streamLength+1);

if (streamBuffer == 0) {

LOGE("SkGIFMovie:SkGIFMovie: malloc Memory stream buffer failed");

return;

}

//Fetch SkStream content into buffer

if (streamLength != stream->read(streamBuffer, stream->getLength())) {

LOGE("SkGIFMovie:SkGIFMovie: read GIF source to Memory Buffer failed");

free(streamBuffer);

return;

}

//wrap the data in an SkMemoryStream, because

//rewinding it does not invalidate the mark

//on the Java InputStream.

SkStream* memStream = new SkMemoryStream(streamBuffer,streamLength);

bool bRewindable = memStream->rewind();

if (bRewindable)

{

//check if GIF file is valid to decode

bool bGifValid = checkGifStream(memStream);

if (! bGifValid)

{

free(streamBuffer);

fGIF = NULL;

return;

}

//GIF file stream seems to be OK,

// rewind stream for gif decoding

memStream->rewind();

}

fGIF = DGifOpen( memStream, Decode );

if (NULL == fGIF) {

free(streamBuffer);

return;

}

if (DGifSlurp(fGIF) != GIF_OK)

{

DGifCloseFile(fGIF);

fGIF = NULL;

}

fCurrIndex = -1;

fLastDrawIndex = -1;

//release stream buffer when decoding is done.

free(streamBuffer);

}

SkGIFMovie::~SkGIFMovie()

{

if (fGIF)

DGifCloseFile(fGIF);

}

static SkMSec savedimage_duration(const SavedImage* image)

{

for (int j = 0; j < image->ExtensionBlockCount; j++)

{

if (image->ExtensionBlocks[j].Function == GRAPHICS_EXT_FUNC_CODE)

{

int size = image->ExtensionBlocks[j].ByteCount;

SkASSERT(size >= 4);

const uint8_t* b = (const uint8_t*)image->ExtensionBlocks[j].Bytes;

return ((b[2] << 8) | b[1]) * 10;

}

}

return 0;

}

int SkGIFMovie::getGifFrameDuration(int frameIndex)

{

//for wrong frame index, return 0

if (frameIndex < 0 || NULL == fGIF || frameIndex >= fGIF->ImageCount)

return 0;

return savedimage_duration(&fGIF->SavedImages[frameIndex]);

}

int SkGIFMovie::getGifTotalFrameCount()

{

//if fGIF is not valid, return 0

if (NULL == fGIF)

return 0;

return fGIF->ImageCount < 0 ? 0 : fGIF->ImageCount;

}

bool SkGIFMovie::setCurrFrame(int frameIndex)

{

if (NULL == fGIF)

return false;

if (frameIndex >= 0 && frameIndex < fGIF->ImageCount)

fCurrIndex = frameIndex;

else

fCurrIndex = 0;

return true;

}

bool SkGIFMovie::getWordFromStream(SkStream* stream,int* word)

{

unsigned char buf[2];

if (stream->read(buf, 2) != 2) {

LOGE("SkGIFMovie:getWordFromStream: read from stream failed");

return false;

}

*word = (((unsigned int)buf[1]) << 8) + buf[0];

return true;

}

bool SkGIFMovie::getRecordType(SkStream* stream,GifRecordType* Type)

{

unsigned char buf;

//read a record type to buffer

if (stream->read(&buf, 1) != 1) {

LOGE("SkGIFMovie:getRecordType: read from stream failed");

return false;

}

//identify record type

switch (buf) {

case ',':

*Type = IMAGE_DESC_RECORD_TYPE;

break;

case '!':

*Type = EXTENSION_RECORD_TYPE;

break;

case ';':

*Type = TERMINATE_RECORD_TYPE;

break;

default:

*Type = UNDEFINED_RECORD_TYPE;

LOGE("SkGIFMovie:getRecordType: wrong gif record type");

return false;

}

return true;

}

/******************************************************************************

* calculate all image frame count without decode image frame.

* SkStream associated with GifFile and GifFile state is not

* affected

*****************************************************************************/

bool SkGIFMovie::checkGifStream(SkStream* stream)

{

char buf[16];

int screenWidth, screenHeight;

int BitsPerPixel = 0;

int frameCount = 0;

GifRecordType RecordType;

//maximum stream length is set to be 10M, if the stream is

//larger than that, no further action is needed

size_t length = stream->getLength();

if (length > 10*1024*1024) {

LOGE("SkGIFMovie:checkGifStream: stream length larger than 10M");

return false;

}

if (GIF_STAMP_LEN != stream->read(buf, GIF_STAMP_LEN)) {

LOGE("SkGIFMovie:checkGifStream: read GIF STAMP failed");

return false;

}

//Check whether the first three characters are "GIF", version

// number is ignored.

buf[GIF_STAMP_LEN] = 0;

if (strncmp(GIF_STAMP, buf, GIF_VERSION_POS) != 0) {

LOGE("SkGIFMovie:checkGifStream: check GIF stamp failed");

return false;

}

//read screen width and height from stream

screenWidth = 0;

screenHeight = 0;

if (! getWordFromStream(stream,&screenWidth) ||

! getWordFromStream(stream,&screenHeight)) {

LOGE("SkGIFMovie:checkGifStream: get screen dimension failed");

return false;

}

//check whether screen dimension is too large

//maximum pixels in a single frame is constrained to 1.5M

//which is aligned with SkImageDecoder_libgif.cpp

if (screenWidth*screenHeight > 1536*1024) {

LOGE("SkGIFMovie:checkGifStream: screen dimension is larger than 1.5M");

return false;

}

//read screen color resolution and color map information

if (3 != stream->read(buf, 3)) {

LOGE("SkGIFMovie:checkGifStream: read color info failed");

return false;

}

BitsPerPixel = (buf[0] & 0x07) + 1;

if (buf[0] & 0x80) {

//If we have global color table, skip it

unsigned int colorTableBytes = (unsigned)(1 << BitsPerPixel) * 3;

if (colorTableBytes != stream->skip(colorTableBytes)) {

LOGE("SkGIFMovie:checkGifStream: skip global color table failed");

return false;

}

} else {

}

//DGifOpen is over, now for DGifSlurp

do {

if (getRecordType(stream, &RecordType) == false)

return false;

switch (RecordType) {

case IMAGE_DESC_RECORD_TYPE:

if (checkImageDesc(stream,buf) == false)

return false;

frameCount ++;

if (1 != stream->skip(1)) {

LOGE("SkGIFMovie:checkGifStream: skip code size failed");

return false;

}

if (skipSubblocksWithTerminator(stream,buf) == false) {

LOGE("SkGIFMovie:checkGifStream: skip compressed image data failed");

return false;

}

break;

case EXTENSION_RECORD_TYPE:

if (skipExtension(stream,buf) == false) {

LOGE("SkGIFMovie:checkGifStream: skip extensions failed");

return false;

}

break;

case TERMINATE_RECORD_TYPE:

break;

default: /* Should be trapped by DGifGetRecordType */

break;

}

} while (RecordType != TERMINATE_RECORD_TYPE);

//maximum pixels in all gif frames is constrained to 5M

//although each frame has its own dimension, we estimate the total

//pixels of the decoded gif file as the screen dimension multiplied by

//the total image count, which should be the worst case

if (screenWidth * screenHeight * frameCount > 1024*1024*5) {

LOGE("SkGIFMovie:checkGifStream: total pixels is larger than 5M");

return false;

}

return true;

}

bool SkGIFMovie::checkImageDesc(SkStream* stream,char* buf)

{

int imageWidth,imageHeight;

int BitsPerPixel;

if (4 != stream->skip(4)) {

LOGE("SkGIFMovie:getImageDesc: skip image left-top position");

return false;

}

if (! getWordFromStream(stream,&imageWidth)||

! getWordFromStream(stream,&imageHeight)) {

LOGE("SkGIFMovie:getImageDesc: read image width & height");

return false;

}

if (1 != stream->read(buf, 1)) {

LOGE("SkGIFMovie:getImageDesc: read image info failed");

return false;

}

BitsPerPixel = (buf[0] & 0x07) + 1;

if (buf[0] & 0x80) {

//If this image have local color map, skip it

unsigned int colorTableBytes = (unsigned)(1 << BitsPerPixel) * 3;

if (colorTableBytes != stream->skip(colorTableBytes)) {

LOGE("SkGIFMovie:getImageDesc: skip local color table failed");

return false;

}

} else {

}

return true;

}

bool SkGIFMovie::skipExtension(SkStream* stream,char* buf)

{

int imageWidth,imageHeight;

int BitsPerPixel;

if (1 != stream->read(buf, 1)) {

LOGE("SkGIFMovie:skipExtension: read extension type failed");

return false;

}

switch (buf[0]) {

case COMMENT_EXT_FUNC_CODE:

if (skipComment(stream,buf)==false) {

LOGE("SkGIFMovie:skipExtension: skip comment failed");

return false;

}

break;

case GRAPHICS_EXT_FUNC_CODE:

if (skipGraphics(stream,buf)==false) {

LOGE("SkGIFMovie:skipExtension: skip graphics failed");

return false;

}

break;

case PLAINTEXT_EXT_FUNC_CODE:

if (skipPlaintext(stream,buf)==false) {

LOGE("SkGIFMovie:skipExtension: skip plaintext failed");

return false;

}

break;

case APPLICATION_EXT_FUNC_CODE:

if (skipApplication(stream,buf)==false) {

LOGE("SkGIFMovie:skipExtension: skip application failed");

return false;

}

break;

default:

LOGE("SkGIFMovie:skipExtension: wrong gif extension type");

return false;

}

return true;

}

bool SkGIFMovie::skipComment(SkStream* stream,char* buf)

{

return skipSubblocksWithTerminator(stream,buf);

}

bool SkGIFMovie::skipGraphics(SkStream* stream,char* buf)

{

return skipSubblocksWithTerminator(stream,buf);

}

bool SkGIFMovie::skipPlaintext(SkStream* stream,char* buf)

{

return skipSubblocksWithTerminator(stream,buf);

}

bool SkGIFMovie::skipApplication(SkStream* stream,char* buf)

{

return skipSubblocksWithTerminator(stream,buf);

}

bool SkGIFMovie::skipSubblocksWithTerminator(SkStream* stream,char* buf)

{

do {//skip the whole compressed image data.

//read sub-block size

if (1 != stream->read(buf,1)) {

LOGE("SkGIFMovie:skipSubblocksWithTerminator: read sub block size failed");

return false;

}

if (buf[0] > 0) {

if (buf[0] != stream->skip(buf[0])) {

LOGE("SkGIFMovie:skipSubblocksWithTerminator: skip sub block failed");

return false;

}

}

} while(buf[0]!=0);

return true;

}

bool SkGIFMovie::onGetInfo(Info* info)

{

if (NULL == fGIF)

return false;

SkMSec dur = 0;

for (int i = 0; i < fGIF->ImageCount; i++)

dur += savedimage_duration(&fGIF->SavedImages[i]);

info->fDuration = dur;

info->fWidth = fGIF->SWidth;

info->fHeight = fGIF->SHeight;

info->fIsOpaque = false;

return true;

}

bool SkGIFMovie::onSetTime(SkMSec time)

{

if (NULL == fGIF)

return false;

SkMSec dur = 0;

for (int i = 0; i < fGIF->ImageCount; i++)

{

dur += savedimage_duration(&fGIF->SavedImages[i]);

if (dur >= time)

{

fCurrIndex = i;

return fLastDrawIndex != fCurrIndex;

}

}

fCurrIndex = fGIF->ImageCount - 1;

return true;

}

static void copyLine(uint32_t* dst, const unsigned char* src, const ColorMapObject* cmap,

int transparent, int width)

{

for (; width > 0; width--, src++, dst++) {

if (*src != transparent) {

const GifColorType& col = cmap->Colors[*src];

*dst = SkPackARGB32(0xFF, col.Red, col.Green, col.Blue);

}

}

}

static void copyInterlaceGroup(SkBitmap* bm, const unsigned char*& src,

const ColorMapObject* cmap, int transparent, int copyWidth,

int copyHeight, const GifImageDesc& imageDesc, int rowStep,

int startRow)

{

int row;

// every 'rowStep'th row, starting with row 'startRow'

for (row = startRow; row < copyHeight; row += rowStep) {

uint32_t* dst = bm->getAddr32(imageDesc.Left, imageDesc.Top + row);

copyLine(dst, src, cmap, transparent, copyWidth);

src += imageDesc.Width;

}

// pad for rest height

src += imageDesc.Width * ((imageDesc.Height - row + rowStep - 1) / rowStep);

}

static void blitInterlace(SkBitmap* bm, const SavedImage* frame, const ColorMapObject* cmap,

int transparent)

{

int width = bm->width();

int height = bm->height();

GifWord copyWidth = frame->ImageDesc.Width;

if (frame->ImageDesc.Left + copyWidth > width) {

copyWidth = width - frame->ImageDesc.Left;

}

GifWord copyHeight = frame->ImageDesc.Height;

if (frame->ImageDesc.Top + copyHeight > height) {

copyHeight = height - frame->ImageDesc.Top;

}

// deinterlace

const unsigned char* src = (unsigned char*)frame->RasterBits;

// group 1 - every 8th row, starting with row 0

copyInterlaceGroup(bm, src, cmap, transparent, copyWidth, copyHeight, frame->ImageDesc, 8, 0);

// group 2 - every 8th row, starting with row 4

copyInterlaceGroup(bm, src, cmap, transparent, copyWidth, copyHeight, frame->ImageDesc, 8, 4);

// group 3 - every 4th row, starting with row 2

copyInterlaceGroup(bm, src, cmap, transparent, copyWidth, copyHeight, frame->ImageDesc, 4, 2);

// group 4 - every 2nd row, starting with row 1

copyInterlaceGroup(bm, src, cmap, transparent, copyWidth, copyHeight, frame->ImageDesc, 2, 1);

}

static void blitNormal(SkBitmap* bm, const SavedImage* frame, const ColorMapObject* cmap,

int transparent)

{

int width = bm->width();

int height = bm->height();

const unsigned char* src = (unsigned char*)frame->RasterBits;

uint32_t* dst = bm->getAddr32(frame->ImageDesc.Left, frame->ImageDesc.Top);

GifWord copyWidth = frame->ImageDesc.Width;

if (frame->ImageDesc.Left + copyWidth > width) {

copyWidth = width - frame->ImageDesc.Left;

}

GifWord copyHeight = frame->ImageDesc.Height;

if (frame->ImageDesc.Top + copyHeight > height) {

copyHeight = height - frame->ImageDesc.Top;

}

// copy row by row: src advances by the full frame width, dst by the full bitmap width

for (; copyHeight > 0; copyHeight--) {

copyLine(dst, src, cmap, transparent, copyWidth);

src += frame->ImageDesc.Width;

dst += width;

}

}

static void fillRect(SkBitmap* bm, GifWord left, GifWord top, GifWord width, GifWord height,

uint32_t col)

{

int bmWidth = bm->width();

int bmHeight = bm->height();

uint32_t* dst = bm->getAddr32(left, top);

GifWord copyWidth = width;

if (left + copyWidth > bmWidth) {

copyWidth = bmWidth - left;

}

GifWord copyHeight = height;

if (top + copyHeight > bmHeight) {

copyHeight = bmHeight - top;

}

for (; copyHeight > 0; copyHeight--) {

sk_memset32(dst, col, copyWidth);

dst += bmWidth;

}

}

static void drawFrame(SkBitmap* bm, const SavedImage* frame, const ColorMapObject* cmap)

{

int transparent = -1;

for (int i = 0; i < frame->ExtensionBlockCount; ++i) {

ExtensionBlock* eb = frame->ExtensionBlocks + i;

if (eb->Function == GRAPHICS_EXT_FUNC_CODE &&

eb->ByteCount == 4) {

bool has_transparency = ((eb->Bytes[0] & 1) == 1);

if (has_transparency) {

transparent = (unsigned char)eb->Bytes[3];

}

}

}

if (frame->ImageDesc.ColorMap != NULL) {

// use local color table

cmap = frame->ImageDesc.ColorMap;

}

if (cmap == NULL || cmap->ColorCount != (1 << cmap->BitsPerPixel)) {

SkASSERT(!"bad colortable setup");

return;

}

if (frame->ImageDesc.Interlace) {

blitInterlace(bm, frame, cmap, transparent);

} else {

blitNormal(bm, frame, cmap, transparent);

}

}

static bool checkIfWillBeCleared(const SavedImage* frame)

{

for (int i = 0; i < frame->ExtensionBlockCount; ++i) {

ExtensionBlock* eb = frame->ExtensionBlocks + i;

if (eb->Function == GRAPHICS_EXT_FUNC_CODE &&

eb->ByteCount == 4) {

// check disposal method

int disposal = ((eb->Bytes[0] >> 2) & 7);

if (disposal == 2 || disposal == 3) {

return true;

}

}

}

return false;

}

static void getTransparencyAndDisposalMethod(const SavedImage* frame, bool* trans, int* disposal)

{

*trans = false;

*disposal = 0;

for (int i = 0; i < frame->ExtensionBlockCount; ++i) {

ExtensionBlock* eb = frame->ExtensionBlocks + i;

if (eb->Function == GRAPHICS_EXT_FUNC_CODE &&

eb->ByteCount == 4) {

*trans = ((eb->Bytes[0] & 1) == 1);

*disposal = ((eb->Bytes[0] >> 2) & 7);

}

}

}

// return true if the area of 'target' completely covers the area of 'covered'

static bool checkIfCover(const SavedImage* target, const SavedImage* covered)

{

if (target->ImageDesc.Left <= covered->ImageDesc.Left

&& covered->ImageDesc.Left + covered->ImageDesc.Width <=

target->ImageDesc.Left + target->ImageDesc.Width

&& target->ImageDesc.Top <= covered->ImageDesc.Top

&& covered->ImageDesc.Top + covered->ImageDesc.Height <=

target->ImageDesc.Top + target->ImageDesc.Height) {

return true;

}

return false;

}

static void disposeFrameIfNeeded(SkBitmap* bm, const SavedImage* cur, const SavedImage* next,

SkBitmap* backup, SkColor color)

{

// We can skip disposal process if next frame is not transparent

// and completely covers current area

bool curTrans;

int curDisposal;

getTransparencyAndDisposalMethod(cur, &curTrans, &curDisposal);

bool nextTrans;

int nextDisposal;

getTransparencyAndDisposalMethod(next, &nextTrans, &nextDisposal);

if ((curDisposal == 2 || curDisposal == 3)

&& (nextTrans || !checkIfCover(next, cur))) {

switch (curDisposal) {

// restore to background color

// -> 'background' means background under this image.

case 2:

fillRect(bm, cur->ImageDesc.Left, cur->ImageDesc.Top,

cur->ImageDesc.Width, cur->ImageDesc.Height,

color);

break;

// restore to previous

case 3:

bm->swap(*backup);

break;

}

}

// Save current image if next frame's disposal method == 3

if (nextDisposal == 3) {

const uint32_t* src = bm->getAddr32(0, 0);

uint32_t* dst = backup->getAddr32(0, 0);

int cnt = bm->width() * bm->height();

memcpy(dst, src, cnt*sizeof(uint32_t));

}

}

bool SkGIFMovie::onGetBitmap(SkBitmap* bm)

{

const GifFileType* gif = fGIF;

if (NULL == gif)

return false;

if (gif->ImageCount < 1) {

return false;

}

const int width = gif->SWidth;

const int height = gif->SHeight;

if (width <= 0 || height <= 0) {

return false;

}

// no need to draw

if (fLastDrawIndex >= 0 && fLastDrawIndex == fCurrIndex) {

return true;

}

int startIndex = fLastDrawIndex + 1;

if (fLastDrawIndex < 0 || !bm->readyToDraw()) {

// first time

startIndex = 0;

// create bitmap

bm->setConfig(SkBitmap::kARGB_8888_Config, width, height, 0);

if (!bm->allocPixels(NULL)) {

return false;

}

// create bitmap for backup

fBackup.setConfig(SkBitmap::kARGB_8888_Config, width, height, 0);

if (!fBackup.allocPixels(NULL)) {

return false;

}

} else if (startIndex > fCurrIndex) {

// rewind to 1st frame for repeat

startIndex = 0;

}

int lastIndex = fCurrIndex;

if (lastIndex < 0) {

// first time

lastIndex = 0;

} else if (lastIndex > fGIF->ImageCount - 1) {

// this block must not be reached.

lastIndex = fGIF->ImageCount - 1;

}

SkColor bgColor = SkPackARGB32(0, 0, 0, 0);

if (gif->SColorMap != NULL) {

const GifColorType& col = gif->SColorMap->Colors[fGIF->SBackGroundColor];

bgColor = SkColorSetARGB(0xFF, col.Red, col.Green, col.Blue);

}

static SkColor paintingColor = SkPackARGB32(0, 0, 0, 0);

// draw every frame from startIndex to lastIndex in order (simple, but not the most efficient way)

for (int i = startIndex; i <= lastIndex; i++) {

const SavedImage* cur = &fGIF->SavedImages[i];

if (i == 0) {

bool trans;

int disposal;

getTransparencyAndDisposalMethod(cur, &trans, &disposal);

if (!trans && gif->SColorMap != NULL) {

paintingColor = bgColor;

} else {

paintingColor = SkColorSetARGB(0, 0, 0, 0);

}

bm->eraseColor(paintingColor);

fBackup.eraseColor(paintingColor);

} else {

// Dispose of the previous frame before moving to the next frame.

const SavedImage* prev = &fGIF->SavedImages[i-1];

disposeFrameIfNeeded(bm, prev, cur, &fBackup, paintingColor);

}

// Draw frame

// We can skip this process if this index is not last and disposal

// method == 2 or method == 3

if (i == lastIndex || !checkIfWillBeCleared(cur)) {

drawFrame(bm, cur, gif->SColorMap);

}

}

// save index

fLastDrawIndex = lastIndex;

return true;

}

///////////////////////////////////////////////////////////////////////////////

#include "SkTRegistry.h"

SkMovie* Factory(SkStream* stream) {

char buf[GIF_STAMP_LEN];

if (stream->read(buf, GIF_STAMP_LEN) == GIF_STAMP_LEN) {

if (memcmp(GIF_STAMP, buf, GIF_STAMP_LEN) == 0 ||

memcmp(GIF87_STAMP, buf, GIF_STAMP_LEN) == 0 ||

memcmp(GIF89_STAMP, buf, GIF_STAMP_LEN) == 0) {

// must rewind here, since our constructor wants to re-read the data

stream->rewind();

return SkNEW_ARGS(SkGIFMovie, (stream));

}

}

return NULL;

}

static SkTRegistry<SkMovie*, SkStream*> gReg(Factory);

With the native-layer changes above in place, don't forget to also update AndroidSourceCode/external/skia/include/images/SkMovie.h. The changes are as follows:

bool setTime(SkMSec);

+ virtual int getGifFrameDuration(int frameIndex);

+ virtual int getGifTotalFrameCount();

+ SkBitmap* createGifFrameBitmap();

+ virtual bool setCurrFrame(int frameIndex);

const SkBitmap& bitmap();

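The next step is to call the concrete decoder to do the actual decoding. The system already takes care of that part, so at this point adding GIF support is essentially complete.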