libsvm Code Reading: Analyzing the Solver Class (Part 1)

update: 2014-2-27 LinJM @HQU (libsvm column: http://blog.csdn.net/column/details/libsvm.html)

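The Solver class we turn to now implements SMO (sequential minimal optimization), a technique for solving the SVM optimization problem. The original Solver in libsvm is more than six hundred lines of code, and with its variants the total runs past a thousand, so to make it easier to follow we look at the theory first.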

The code opens with the following comment:

    // An SMO algorithm in Fan et al., JMLR 6(2005), p. 1889--1918
    // Solves:
    //
    //     min 0.5(\alpha^T Q \alpha) + p^T \alpha
    //
    //         y^T \alpha = \delta
    //         y_i = +1 or -1
    //         0 <= alpha_i <= Cp for y_i = 1
    //         0 <= alpha_i <= Cn for y_i = -1

In other words, the implementation mainly follows this paper:

Fan R E, Chen P H, Lin C J. Working set selection using second order information for training support vector machines[J]. The Journal of Machine Learning Research, 2005, 6: 1889-1918.

So let's read through this paper:

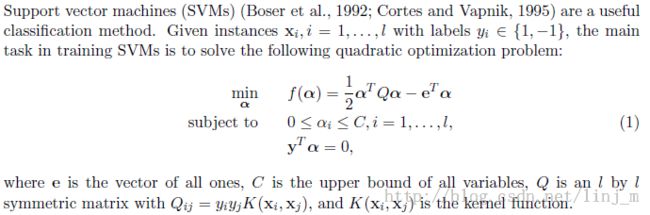

The SVM optimization problem is as follows:

The core goal is to find the optimal solution alpha*.
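For reference, the problem stated in the Solver comment quoted earlier can be written out in LaTeX. This is only a transcription of that comment; the remark that the pseudocode later in this post corresponds to the special case p = -e, delta = 0 with a single bound C is read off from its initialization (alpha all zero, gradient all -1) and is not a statement about every libsvm formulation.

```latex
\begin{aligned}
\min_{\alpha}\quad & f(\alpha) = \tfrac{1}{2}\,\alpha^{T} Q\,\alpha + p^{T}\alpha \\
\text{subject to}\quad & y^{T}\alpha = \delta, \qquad y_i \in \{+1, -1\}, \\
& 0 \le \alpha_i \le C_p \quad \text{for } y_i = +1, \\
& 0 \le \alpha_i \le C_n \quad \text{for } y_i = -1 .
\end{aligned}
```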

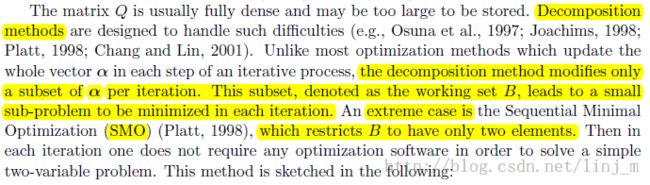

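A decomposition method updates only a fixed-size subset of the Lagrange multipliers alpha_i (the working set) in each iteration and keeps the others unchanged. So whenever a new point is added to the working set, another point has to be removed. The aim is to optimize the global problem on a small subset of the data at each step.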

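SMO pushes the decomposition idea to its extreme: each iteration optimizes the smallest possible subset, consisting of just two points. Two is the minimum because the equality constraint y^T alpha = delta ties the multipliers together, so a single alpha_i cannot change on its own.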
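The two-variable step can be worked out by hand. The derivation below is a sketch that uses the quantities a and b from the pseudocode later in this post, with G denoting the gradient of f; the step length d is a symbol introduced here for illustration, and the box constraints are ignored until the final clipping stage.

```latex
\begin{aligned}
\alpha_i^{\text{new}} &= \alpha_i + y_i d, \qquad
\alpha_j^{\text{new}} = \alpha_j - y_j d
\quad\Longrightarrow\quad
y_i\alpha_i^{\text{new}} + y_j\alpha_j^{\text{new}} = y_i\alpha_i + y_j\alpha_j, \\
f(\alpha^{\text{new}}) - f(\alpha) &= \tfrac{1}{2}\,a_{ij}\,d^{2} - b_{ij}\,d, \\
a_{ij} &= Q_{ii} + Q_{jj} - 2\,y_i y_j Q_{ij}, \qquad
b_{ij} = -y_i G_i + y_j G_j, \\
d^{\star} &= \frac{b_{ij}}{a_{ij}}, \qquad
f(\alpha^{\text{new}}) - f(\alpha)\Big|_{d = d^{\star}} = -\frac{b_{ij}^{2}}{2\,a_{ij}} .
\end{aligned}
```

The equality constraint is preserved for any d because y_i^2 = y_j^2 = 1, which is why the pseudocode only has to clip against the bounds 0 and C afterwards.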

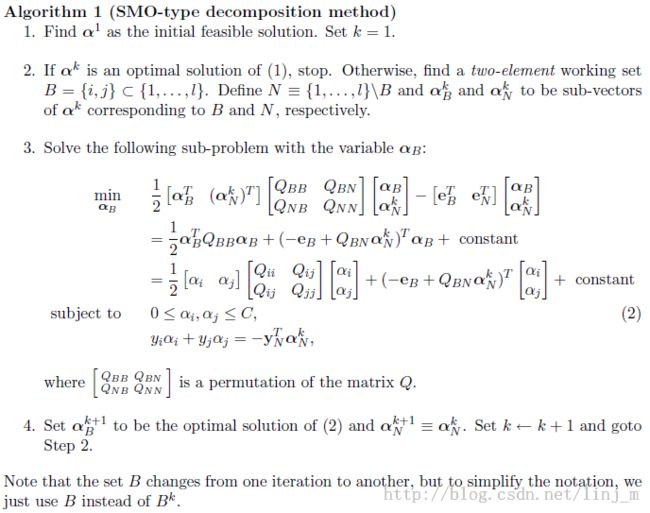

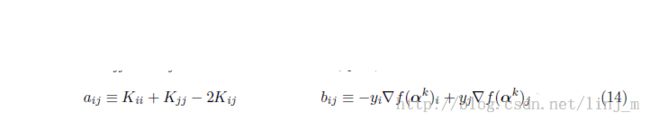

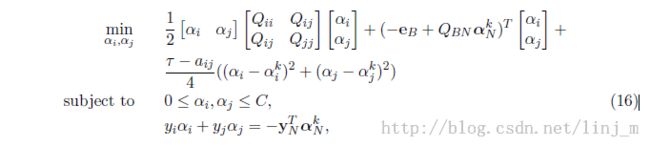

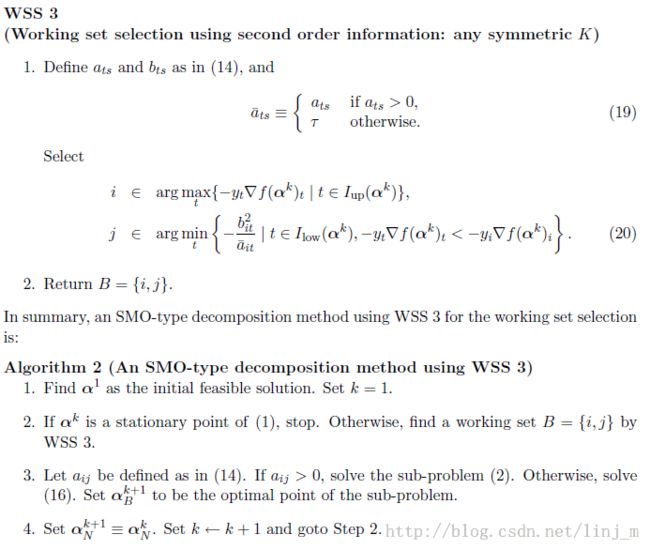

The flow of the algorithm is shown above. However, it does not say how to find a two-element working set B. Here is the Select_B method from the paper:
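Written as formulas, the selection rule reads as follows. This is a sketch transcribed from the selectB procedure in the pseudocode further down; the set names I_up and I_low are notation assumed here for the two index conditions, and G is the gradient array.

```latex
\begin{aligned}
I_{\text{up}} &= \{\, t : (y_t = +1,\ \alpha_t < C) \ \text{or}\ (y_t = -1,\ \alpha_t > 0) \,\}, \\
I_{\text{low}} &= \{\, t : (y_t = +1,\ \alpha_t > 0) \ \text{or}\ (y_t = -1,\ \alpha_t < C) \,\}, \\
i &\in \arg\max_{t \in I_{\text{up}}} \; -y_t G_t, \qquad
G_{\max} = -y_i G_i, \qquad
G_{\min} = \min_{t \in I_{\text{low}}} \; -y_t G_t, \\
j &\in \arg\min_{t \in I_{\text{low}},\ b_{it} > 0} \; -\frac{b_{it}^{2}}{a_{it}}, \qquad
b_{it} = G_{\max} + y_t G_t, \qquad
a_{it} = Q_{ii} + Q_{tt} - 2\,y_i y_t Q_{it}, \\
&\text{with } a_{it} \text{ replaced by } \tau \text{ when } a_{it} \le 0,
\qquad \text{stop when } G_{\max} - G_{\min} < \epsilon .
\end{aligned}
```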

The flow of the algorithm above is fairly clear. Next, let's look at the pseudocode for SMO (Algorithm 2):

    Inputs:
        y: array of {+1, -1}: class of the i-th instance
        Q: Q[i][j] = y[i]*y[j]*K[i][j]; K: kernel matrix
        len: number of instances
        C: upper bound on every A[t] (used below in the box constraint)

    // parameters
    eps = 1e-3    // stopping tolerance
    tau = 1e-12

    // main routine
    initialize alpha array A to all zero
    initialize gradient array G to all -1
    while (1)
    {
        (i,j) = selectB()
        if (j == -1)
            break

        // working set is (i,j)
        a = Q[i][i]+Q[j][j]-2*y[i]*y[j]*Q[i][j]
        if (a <= 0)
            a = tau
        b = -y[i]*G[i]+y[j]*G[j]

        // update alpha
        oldAi = A[i], oldAj = A[j]
        A[i] += y[i]*b/a
        A[j] -= y[j]*b/a

        // project alpha back to the feasible region
        sum = y[i]*oldAi + y[j]*oldAj
        if A[i] > C
            A[i] = C
        if A[i] < 0
            A[i] = 0
        A[j] = y[j]*(sum - y[i]*A[i])
        if A[j] > C
            A[j] = C
        if A[j] < 0
            A[j] = 0
        A[i] = y[i]*(sum - y[j]*A[j])

        // update gradient
        deltaAi = A[i] - oldAi, deltaAj = A[j] - oldAj
        for t = 1 to len
            G[t] += Q[t][i]*deltaAi + Q[t][j]*deltaAj
    }

    procedure selectB
        // select i
        i = -1
        G_max = -inf
        G_min = inf
        for t = 1 to len
        {
            if (y[t]==+1 and A[t] < C) or (y[t]==-1 and A[t] > 0)
            {
                if (-y[t]*G[t] >= G_max)
                {
                    i = t
                    G_max = -y[t]*G[t]
                }
            }
        }

        // select j
        j = -1
        obj_min = inf
        for t = 1 to len
        {
            if (y[t]==+1 and A[t] > 0) or (y[t]==-1 and A[t] < C)
            {
                b = G_max + y[t]*G[t]
                if (-y[t]*G[t] <= G_min)
                    G_min = -y[t]*G[t]
                if (b > 0)
                {
                    a = Q[i][i]+Q[t][t]-2*y[i]*y[t]*Q[i][t]
                    if (a <= 0)
                        a = tau
                    if (-(b*b)/a <= obj_min)
                    {
                        j = t
                        obj_min = -(b*b)/a
                    }
                }
            }
        }

        if (G_max-G_min < eps)
            return (-1,-1)
        return (i,j)
    end procedure
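To make the pseudocode concrete, here is a minimal pure-Python sketch of it. It is not libsvm's Solver (which adds kernel caching, shrinking and separate Cp/Cn bounds); the function names `select_b` and `smo_solve`, the `max_iter` safety cap and the 0-based indexing are assumptions made for this illustration.

```python
import math


def select_b(y, A, G, Q, C, eps=1e-3, tau=1e-12):
    """Working set selection, mirroring the selectB pseudocode (0-based)."""
    n = len(y)
    # Select i: largest -y[t]*G[t] among indices allowed to move "up".
    i, g_max, g_min = -1, -math.inf, math.inf
    for t in range(n):
        if (y[t] == 1 and A[t] < C) or (y[t] == -1 and A[t] > 0):
            if -y[t] * G[t] >= g_max:
                i, g_max = t, -y[t] * G[t]
    # Select j: best second-order decrease among indices allowed to move "down".
    j, obj_min = -1, math.inf
    for t in range(n):
        if (y[t] == 1 and A[t] > 0) or (y[t] == -1 and A[t] < C):
            b = g_max + y[t] * G[t]
            g_min = min(g_min, -y[t] * G[t])
            if b > 0:
                a = Q[i][i] + Q[t][t] - 2 * y[i] * y[t] * Q[i][t]
                if a <= 0:
                    a = tau
                if -(b * b) / a <= obj_min:
                    j, obj_min = t, -(b * b) / a
    if g_max - g_min < eps:
        return -1, -1  # stopping condition reached
    return i, j


def smo_solve(K, y, C, eps=1e-3, tau=1e-12, max_iter=100000):
    """Solve min 0.5*a^T Q a - sum(a), y^T a = 0, 0 <= a <= C.

    K is the kernel matrix as a list of lists; max_iter is a safety cap
    added for this sketch and is not part of the pseudocode.
    """
    n = len(y)
    Q = [[y[r] * y[c] * K[r][c] for c in range(n)] for r in range(n)]
    A = [0.0] * n   # alpha starts at zero
    G = [-1.0] * n  # gradient Q*alpha - e at alpha = 0
    for _ in range(max_iter):
        i, j = select_b(y, A, G, Q, C, eps, tau)
        if j == -1:
            break
        a = Q[i][i] + Q[j][j] - 2 * y[i] * y[j] * Q[i][j]
        if a <= 0:
            a = tau
        b = -y[i] * G[i] + y[j] * G[j]
        # Unconstrained two-variable update.
        old_ai, old_aj = A[i], A[j]
        A[i] += y[i] * b / a
        A[j] -= y[j] * b / a
        # Project back onto the box while keeping y_i*a_i + y_j*a_j fixed.
        s = y[i] * old_ai + y[j] * old_aj
        A[i] = min(max(A[i], 0.0), C)
        A[j] = y[j] * (s - y[i] * A[i])
        A[j] = min(max(A[j], 0.0), C)
        A[i] = y[i] * (s - y[j] * A[j])
        # Incremental gradient update.
        d_ai, d_aj = A[i] - old_ai, A[j] - old_aj
        for t in range(n):
            G[t] += Q[t][i] * d_ai + Q[t][j] * d_aj
    return A, G


if __name__ == "__main__":
    # Two 1-D points x = +1 (class +1) and x = -1 (class -1), linear kernel.
    K = [[1.0, -1.0], [-1.0, 1.0]]
    alpha, grad = smo_solve(K, [1, -1], C=10.0)
    print(alpha)  # prints [0.5, 0.5]
```

On the two-point example a single iteration already reaches the optimum: both multipliers move to 0.5, the gradient becomes zero, and the next call to `select_b` returns (-1, -1).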

Article URL: http://blog.csdn.net/linj_m/article/details/19698463

Weibo: 林建民-机器视觉