[OpenStack] rebuild and evacuate in Nova

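Notice:

You are welcome to repost this blog, but please keep the original author information.

Sina Weibo: @孔令贤HW

Blog: http://blog.csdn.net/lynn_kong

The content reflects my own study, research, and summary. Any resemblance to other work is an honor.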

Update history:

2013.06.24 Added an example of running rebuild in a real environment

2013.06.26 Rebuild has no effect on instances booted from a volume

Version: Grizzly 2013.06.15

Hypervisor: KVM

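1. Concepts

rebuild: if you are tired of an instance running Windows XP and want to switch it to a Linux operating system, you can use rebuild.

evacuate: when the host running an instance goes down, evacuate can start the instance on another host. Combined with a host monitoring tool, this API can be used to give instances high availability (HA).

Why discuss the two together? Because underneath, both APIs map to the same operation.

2. rebuild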

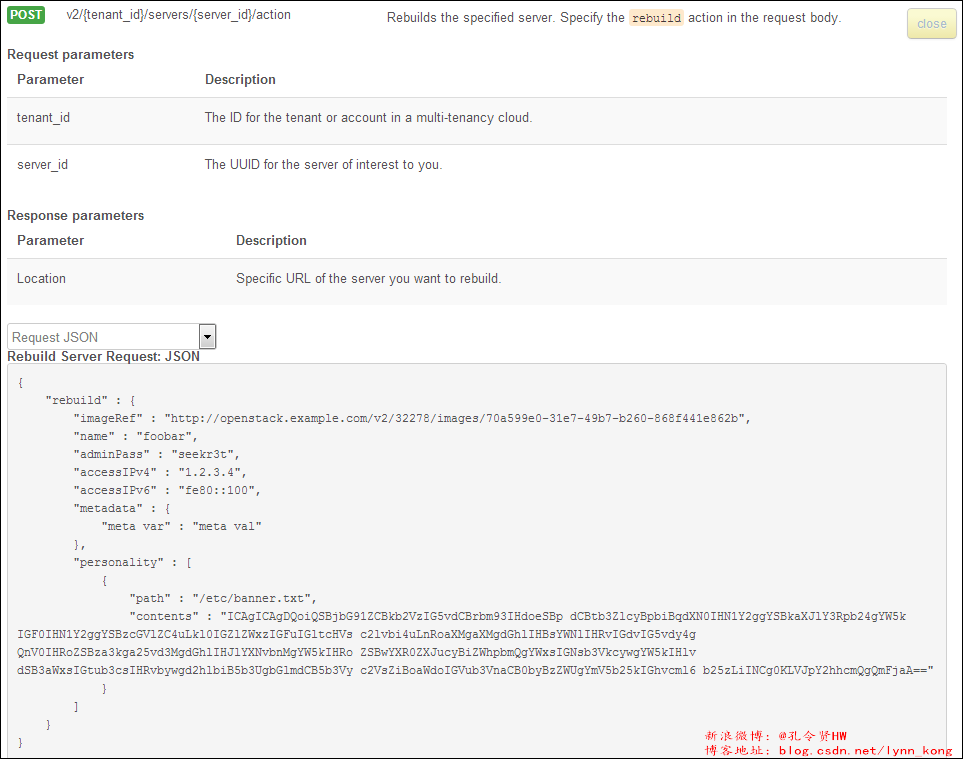

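Here is the description from the official API documentation:

Under the hood, on the host where the instance resides, the original instance is destroyed and a new one is created from the new image. This replaces the instance's system disk, while the data on user disks is unchanged (installed software and its configuration are lost). The instance's network information also stays the same. The accessIPv4 and accessIPv6 parameters in the API have no effect when Quantum is used.

Currently rebuild only supports instances in the active or stopped state. In addition, for an instance booted from a volume, the system disk does not change after a rebuild; see the experiment section below.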
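To make the request shape concrete, here is a minimal Python sketch of the body a rebuild call sends to the server action endpoint. The `imageRef` field and the `/servers/{id}/action` path come from the Compute API v2. The helper name `build_rebuild_request` and the client-side state check are assumptions made for illustration only (Nova does its own validation on the server side), and the IDs are the ones used in the experiment in section 4.

```python
import json

# States in which the article says rebuild is accepted (Grizzly).
REBUILDABLE_STATES = {"active", "stopped"}


def build_rebuild_request(tenant_id, server_id, image_ref, vm_state):
    """Return (path, body) for a rebuild action.

    Hypothetical helper: the local state check only mirrors the rule
    described in the text; it is not Nova's own code.
    """
    if vm_state not in REBUILDABLE_STATES:
        raise ValueError("rebuild is not supported in state %r" % vm_state)
    path = "/v2/%s/servers/%s/action" % (tenant_id, server_id)
    body = json.dumps({"rebuild": {"imageRef": image_ref}})
    return path, body


path, body = build_rebuild_request(
    "6fbe9263116a4b68818cf1edce16bc4f",      # tenant_id from the experiment
    "03774415-d9ce-4b34-b012-6891d248b767",  # instance rebuild-test2
    "1f7f5763-33a1-4282-92b3-53366bf7c695",  # Ubuntu image
    "active",
)
print(path)
print(body)
```

Passing a state such as `paused` makes the helper raise `ValueError`, which matches the restriction described above.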

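3. evacuate

Here is the description from the official API documentation:

This API presupposes that the host running the instance is down.

The onSharedStorage parameter lets the caller state whether the compute nodes use shared storage. In fact, the compute node is able to determine this by itself (and it does check again). My guess is that the parameter is part of the API so that a check can be made at the API layer.

Only with shared storage is this HA in the true sense: the instance's software and data are not lost. Otherwise, only the data on the instance's user disks survives, and the system disk is a brand-new one.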
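As a rough illustration of how onSharedStorage appears in a request, the following Python sketch builds an evacuate action body. The field names `host`, `onSharedStorage`, and `adminPass` follow the evacuate API extension as I understand it. The helper name, the target host name `compute02`, and the rule of sending `adminPass` only when storage is not shared are assumptions for this sketch, not confirmed Nova behavior.

```python
import json


def build_evacuate_body(target_host, on_shared_storage, admin_pass=None):
    """Build the JSON body for an evacuate action (hypothetical helper)."""
    body = {
        "host": target_host,
        "onSharedStorage": bool(on_shared_storage),
    }
    # Assumption: a new password only makes sense when the system disk
    # is recreated, i.e. when the compute nodes do not share storage.
    if admin_pass is not None and not on_shared_storage:
        body["adminPass"] = admin_pass
    return json.dumps({"evacuate": body}, sort_keys=True)


# Shared storage: the existing disk is reused, so no password is sent.
print(build_evacuate_body("compute02", True, admin_pass="secret"))

# No shared storage: the system disk is new, so a password can be set.
print(build_evacuate_body("compute02", False, admin_pass="secret"))
```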

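As mentioned above, rebuild and evacuate share the same underlying implementation. That makes sense, since both need to recreate the instance. The only differences are:

1. rebuild needs one extra step to delete the existing instance, whereas evacuate creates the new instance directly.

2. rebuild uses the new image specified in the request (which may be the same as the old one), whereas evacuate uses the instance's original image.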
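The two differences can be summarized in a toy Python model. This is not Nova's code: the function name `recreate_steps` and the step descriptions are made up for illustration, and the model only encodes what the list above says.

```python
def recreate_steps(action, original_image, new_image=None):
    """Return the high-level steps for 'rebuild' or 'evacuate' (toy model)."""
    if action == "rebuild":
        if new_image is None:
            raise ValueError("rebuild needs an image (it may equal the old one)")
        return [
            # Difference 1: rebuild first removes the existing instance.
            "destroy the old instance on its current host",
            # Difference 2: rebuild uses the image given in the request.
            "spawn a new instance from image %s" % new_image,
        ]
    if action == "evacuate":
        # The old host is down, so there is nothing to destroy, and the
        # instance's original image is used.
        return ["spawn a new instance from image %s on the target host" % original_image]
    raise ValueError("unknown action %r" % action)


print(recreate_steps("rebuild", "cirros", "ubuntu"))
print(recreate_steps("evacuate", "cirros"))
```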

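Once more, here is the evacuate flow chart from the wiki:

evacuate also has some shortcomings. For example, the user experience would probably be better if the system could select the target host automatically. Also, like rebuild, evacuate currently supports only instances in the active or stopped state. Instances in other states (paused, suspended, and so on) are not supported, which means they cannot be recovered when their host fails.

Incidentally, only the libvirt driver currently supports rebuild and evacuate.

4. Practice

4.1 rebuild

The steps are as follows:

1. Create a cirros instance with a keypair and associate a floating IP. Once it is created, log in over ssh and confirm that it works normally.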

root@controller231:~# nova show rebuild-test2

+-------------------------------------+----------------------------------------------------------+

| Property | Value |

+-------------------------------------+----------------------------------------------------------+

| status | ACTIVE |

| updated | 2013-06-24T08:14:45Z |

| OS-EXT-STS:task_state | None |

| OS-EXT-SRV-ATTR:host | controller231 |

| key_name | mykey |

| image | cirros (4851d2f2-ef75-4a80-91c6-f0fcbcd7276a) |

| hostId | 083729f2f8f664fffd4cffb8c3e76615d7abc1e11efc993528dd88b9 |

| OS-EXT-STS:vm_state | active |

| OS-EXT-SRV-ATTR:instance_name | instance-0000000e |

| OS-EXT-SRV-ATTR:hypervisor_hostname | controller231.openstack.org |

| flavor | m1.small (2) |

| id | 03774415-d9ce-4b34-b012-6891d248b767 |

| security_groups | [{u'name': u'default'}] |

| user_id | f882feb345064e7d9392440a0f397c25 |

| name | rebuild-test2 |

| created | 2013-06-24T08:14:38Z |

| tenant_id | 6fbe9263116a4b68818cf1edce16bc4f |

| OS-DCF:diskConfig | MANUAL |

| metadata | {} |

| accessIPv4 | |

| accessIPv6 | |

| testnet01 network | 10.1.1.20, 192.150.73.3 |

| progress | 0 |

| OS-EXT-STS:power_state | 1 |

| OS-EXT-AZ:availability_zone | nova |

| config_drive | |

+-------------------------------------+----------------------------------------------------------+

root@network232:~# ssh -i mykey.pem -l cirros 192.150.73.3

OpenSSH_5.9p1 Debian-5ubuntu1.1, OpenSSL 1.0.1 14 Mar 2012

Authenticated to 192.150.73.3 ([192.150.73.3]:22).

$ sudo passwd

Changing password for root

New password:

Retype password:

Password for root changed by root

2. Run rebuild from the command line, specifying the Ubuntu image. Note that the instance's image field has already changed at this point:

root@controller231:~# nova rebuild rebuild-test2 1f7f5763-33a1-4282-92b3-53366bf7c695

+-------------------------------------+-------------------------------------------------------------------+

| Property | Value |

+-------------------------------------+-------------------------------------------------------------------+

| status | REBUILD |

| updated | 2013-06-24T08:34:47Z |

| OS-EXT-STS:task_state | rebuilding |

| OS-EXT-SRV-ATTR:host | controller231 |

| key_name | mykey |

| image | Ubuntu 12.04 cloudimg i386 (1f7f5763-33a1-4282-92b3-53366bf7c695) |

| hostId | 083729f2f8f664fffd4cffb8c3e76615d7abc1e11efc993528dd88b9 |

| OS-EXT-STS:vm_state | active |

| OS-EXT-SRV-ATTR:instance_name | instance-0000000e |

| OS-EXT-SRV-ATTR:hypervisor_hostname | controller231.openstack.org |

| flavor | m1.small (2) |

| id | 03774415-d9ce-4b34-b012-6891d248b767 |

| security_groups | [{u'name': u'default'}] |

| user_id | f882feb345064e7d9392440a0f397c25 |

| name | rebuild-test2 |

| created | 2013-06-24T08:14:38Z |

| tenant_id | 6fbe9263116a4b68818cf1edce16bc4f |

| OS-DCF:diskConfig | MANUAL |

| metadata | {} |

| accessIPv4 | |

| accessIPv6 | |

| testnet01 network | 10.1.1.20, 192.150.73.3 |

| progress | 0 |

| OS-EXT-STS:power_state | 1 |

| OS-EXT-AZ:availability_zone | nova |

| config_drive | |

+-------------------------------------+-------------------------------------------------------------------+

3. Wait for the instance status to become ACTIVE, then log in to the instance again:

root@network232:~# ssh -i mykey.pem 192.150.73.3

Welcome to Ubuntu 12.04.1 LTS (GNU/Linux 3.2.0-35-virtual i686)

* Documentation: https://help.ubuntu.com/

System information as of Mon Jun 24 08:47:49 UTC 2013

System load: 0.0 Processes: 60

Usage of /: 2.9% of 19.67GB Users logged in: 0

Memory usage: 1% IP address for eth0: 10.1.1.20

Swap usage: 0%

Graph this data and manage this system at https://landscape.canonical.com/

0 packages can be updated.

0 updates are security updates.

Get cloud support with Ubuntu Advantage Cloud Guest

http://www.ubuntu.com/business/services/cloud

Last login: Mon Jun 24 08:46:09 2013 from 192.168.82.232

root@rebuild-test2:~#

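The system disk has now become an Ubuntu system.

4. Rebuilding an instance booted from a volume

For example, take an instance that was booted from a volume, where the volume holds the cirros image: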

root@controller231:~# nova show kong2

+-------------------------------------+----------------------------------------------------------+

| Property | Value |

+-------------------------------------+----------------------------------------------------------+

| status | ACTIVE |

| updated | 2013-06-26T10:01:29Z |

| OS-EXT-STS:task_state | None |

| OS-EXT-SRV-ATTR:host | controller231 |

| key_name | mykey |

| image | Attempt to boot from volume - no image supplied |

| hostId | 083729f2f8f664fffd4cffb8c3e76615d7abc1e11efc993528dd88b9 |

| OS-EXT-STS:vm_state | active |

| OS-EXT-SRV-ATTR:instance_name | instance-00000021 |

| OS-EXT-SRV-ATTR:hypervisor_hostname | controller231.openstack.org |

| flavor | kong_flavor (6) |

| id | 8989a10b-5a89-4f87-9b59-83578eabb997 |

| security_groups | [{u'name': u'default'}] |

| user_id | f882feb345064e7d9392440a0f397c25 |

| name | kong2 |

| created | 2013-06-26T10:00:51Z |

| tenant_id | 6fbe9263116a4b68818cf1edce16bc4f |

| OS-DCF:diskConfig | MANUAL |

| metadata | {} |

| accessIPv4 | |

| accessIPv6 | |

| testnet01 network | 10.1.1.6 |

| progress | 0 |

| OS-EXT-STS:power_state | 1 |

| OS-EXT-AZ:availability_zone | nova |

| config_drive | |

+-------------------------------------+----------------------------------------------------------+

Note that the image field shows that this instance was booted from a volume.

Run rebuild on this instance, specifying the Ubuntu image:

root@controller231:~# nova rebuild kong2 1f7f5763-33a1-4282-92b3-53366bf7c695

+-------------------------------------+-------------------------------------------------------------------+

| Property | Value |

+-------------------------------------+-------------------------------------------------------------------+

| status | REBUILD |

| updated | 2013-06-26T10:25:03Z |

| OS-EXT-STS:task_state | rebuilding |

| OS-EXT-SRV-ATTR:host | controller231 |

| key_name | mykey |

| image | Ubuntu 12.04 cloudimg i386 (1f7f5763-33a1-4282-92b3-53366bf7c695) |

| hostId | 083729f2f8f664fffd4cffb8c3e76615d7abc1e11efc993528dd88b9 |

| OS-EXT-STS:vm_state | active |

| OS-EXT-SRV-ATTR:instance_name | instance-00000021 |

| OS-EXT-SRV-ATTR:hypervisor_hostname | controller231.openstack.org |

| flavor | kong_flavor (6) |

| id | 8989a10b-5a89-4f87-9b59-83578eabb997 |

| security_groups | [{u'name': u'default'}] |

| user_id | f882feb345064e7d9392440a0f397c25 |

| name | kong2 |

| created | 2013-06-26T10:00:51Z |

| tenant_id | 6fbe9263116a4b68818cf1edce16bc4f |

| OS-DCF:diskConfig | MANUAL |

| metadata | {} |

| accessIPv4 | |

| accessIPv6 | |

| testnet01 network | 10.1.1.6, 192.150.73.16 |

| progress | 0 |

| OS-EXT-STS:power_state | 1 |

| OS-EXT-AZ:availability_zone | nova |

| config_drive | |

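+-------------------------------------+-------------------------------------------------------------------+

Once the instance is active, log in through VNC. The instance has not changed: it is still cirros.

This is because, at the nova driver layer, rebuild still calls the spawn function to create the new instance, and an instance booted from a volume never talks to Glance. It simply attaches the existing volume as its system disk.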
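The behavior can be pictured with a small Python sketch. It is a toy model of the decision described above, not the libvirt driver's spawn code: the function name `root_disk_source`, the `root_volume_id` key, and the volume ID `vol-cirros` are all hypothetical.

```python
def root_disk_source(instance, image_ref):
    """Decide where the root disk comes from when spawning (toy model)."""
    root_volume = instance.get("root_volume_id")
    if root_volume is not None:
        # Boot from volume: the volume is attached as the system disk
        # and the image passed to rebuild is never fetched from Glance.
        return "volume:%s" % root_volume
    # Image-backed instance: the system disk is created from the image.
    return "image:%s" % image_ref


ubuntu = "1f7f5763-33a1-4282-92b3-53366bf7c695"

# rebuild-test2 is image-backed, so the new image takes effect.
print(root_disk_source({"name": "rebuild-test2"}, ubuntu))

# kong2 boots from a volume, so the system disk stays the same.
print(root_disk_source({"name": "kong2", "root_volume_id": "vol-cirros"}, ubuntu))
```

In this model the image reference still changes on the instance record (as the nova show output above suggests), but it has no influence on what the instance actually boots.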