Android iLBC Voice Chat Demo (Part 5): Receiver-Side Processing

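This series has been delayed for a very long time, and many people have emailed me asking why the fifth article never appeared. I am sorry about that. I am still a student: I spent the whole summer interning at a company, and lately I have been busy with recruiting and job hunting, so I had no time to write it properly. I have now found some time to finish the last article. My knowledge is limited, so if anything here is wrong, please point it out~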

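The previous four articles covered the "code structure", the "program flow" and the "sender-side processing". This article describes how the receiver side works.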

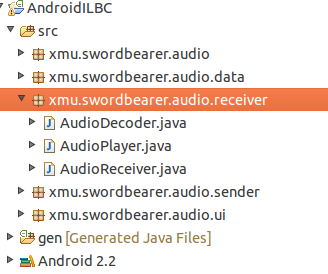

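As the figure above shows, the receiver side consists of three classes: AudioDecoder (decodes the audio), AudioPlayer (plays the decoded audio) and AudioReceiver (receives audio packets from the server). Part 3 describes in detail how data flows between these three classes.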
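The three classes form a chain. Each stage fills the next stage's list, and the next stage's own thread drains that list. The classes below do this by polling a `Collections.synchronizedList(new LinkedList<...>())`. The same producer/consumer hand-off can also be written with `java.util.concurrent.BlockingQueue`, whose `poll(timeout, unit)` waits for data instead of spinning. The plain-Java sketch below illustrates that alternative under stated assumptions. `PipelineSketch`, its methods and the `decode` function are hypothetical placeholders, not the project's `AudioCodec` or `AudioTrack`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.UnaryOperator;

/**
 * Hypothetical sketch of the receive -> decode -> play chain using blocking queues.
 * "decode" and the "played" list stand in for AudioCodec and AudioTrack.write.
 */
class PipelineSketch {
    private final BlockingQueue<byte[]> encoded = new LinkedBlockingQueue<>(); // receiver -> decoder
    private final BlockingQueue<byte[]> decoded = new LinkedBlockingQueue<>(); // decoder -> player
    private volatile boolean running = true;
    final List<byte[]> played = new ArrayList<>(); // placeholder for the audio output

    /** Called by the receiving thread for every datagram payload. */
    void onPacket(byte[] data) {
        encoded.add(data);
    }

    /** Decoder stage: waits up to 100 ms for a packet instead of busy-looping. */
    Thread startDecoder(UnaryOperator<byte[]> decode) {
        Thread t = new Thread(() -> {
            try {
                while (running) {
                    byte[] frame = encoded.poll(100, TimeUnit.MILLISECONDS);
                    if (frame != null) {
                        decoded.add(decode.apply(frame));
                    }
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        t.start();
        return t;
    }

    /** Player stage: consumes decoded PCM in arrival order. */
    Thread startPlayer() {
        Thread t = new Thread(() -> {
            try {
                while (running) {
                    byte[] pcm = decoded.poll(100, TimeUnit.MILLISECONDS);
                    if (pcm != null) {
                        synchronized (played) {
                            played.add(pcm);
                        }
                    }
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        t.start();
        return t;
    }

    /** Both stage threads notice this within one poll timeout. */
    void stop() {
        running = false;
    }
}
```

A stage written this way stays idle while no packets arrive. Stopping it only requires clearing the flag, because each `poll` returns within 100 ms.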

1. AudioReceiver code:

AudioReceiver receives audio data from the server over UDP. The process is quite simple, so here is the code:

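```java
package xmu.swordbearer.audio.receiver;

import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.SocketException;

import xmu.swordbearer.audio.MyConfig;

import android.util.Log;

public class AudioReceiver implements Runnable {

    private static final String LOG = "NET Receiver ";

    private final int port = MyConfig.CLIENT_PORT; // port to receive on

    private DatagramSocket socket;
    private DatagramPacket packet;

    // volatile: written by the caller's thread, read by the receiving thread
    private volatile boolean isRunning = false;

    private final byte[] packetBuf = new byte[1024];

    /*
     * Start receiving data
     */
    public void startRecieving() {
        if (isRunning) {
            return; // already receiving
        }
        if (socket == null) {
            try {
                socket = new DatagramSocket(port);
                packet = new DatagramPacket(packetBuf, packetBuf.length);
            } catch (SocketException e) {
                // Without a socket, run() would fail with a NullPointerException
                Log.e(LOG, LOG + "cannot open port " + port, e);
                return;
            }
        }
        isRunning = true;
        new Thread(this).start();
    }

    /*
     * Stop receiving data
     */
    public void stopRecieving() {
        isRunning = false;
        // receive() blocks until a packet arrives; closing the socket makes it
        // throw immediately so the receiving thread can exit
        DatagramSocket s = socket;
        if (s != null) {
            s.close();
        }
    }

    /*
     * Release resources
     */
    private void release() {
        packet = null;
        if (socket != null) {
            socket.close();
            socket = null;
        }
    }

    public void run() {
        // Start the decoder before receiving
        AudioDecoder decoder = AudioDecoder.getInstance();
        decoder.startDecoding();
        try {
            while (isRunning) {
                // receive() overwrites the packet length with the size of the
                // last datagram, so restore the full buffer length each time
                packet.setLength(packetBuf.length);
                socket.receive(packet);
                // Hand every UDP packet to the decoder (addData copies the bytes)
                decoder.addData(packet.getData(), packet.getLength());
            }
        } catch (IOException e) {
            if (isRunning) { // an exception after stopRecieving() is the normal shutdown path
                Log.e(LOG, LOG + "RECEIVE ERROR!", e);
            }
        }
        // Receiving finished: stop the decoder and release resources
        decoder.stopDecoding();
        release();
        Log.d(LOG, LOG + "stop receiving");
    }
}
```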
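`DatagramSocket.receive()` blocks until a datagram arrives, so a stop flag by itself is not re-checked while the line is quiet. One way to unblock it is to close the socket from another thread. Another is to set a receive timeout with `setSoTimeout`, so that the loop wakes up periodically and re-checks the flag. The plain-Java sketch below shows the timeout variant. It is not the author's code: `StoppableUdpReceiver` and its `Consumer<byte[]>` hand-off are hypothetical, and the consumer stands in for `AudioDecoder.addData`.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.SocketException;
import java.net.SocketTimeoutException;
import java.util.Arrays;
import java.util.function.Consumer;

/** Hypothetical sketch: a UDP receive loop that can be stopped within one timeout period. */
class StoppableUdpReceiver implements Runnable {
    private final DatagramSocket socket;
    private final Consumer<byte[]> sink;   // hypothetical hand-off, e.g. to a decoder
    private volatile boolean running = true;

    StoppableUdpReceiver(DatagramSocket socket, Consumer<byte[]> sink) throws SocketException {
        this.socket = socket;
        this.sink = sink;
        socket.setSoTimeout(200);          // receive() gives up after 200 ms of silence
    }

    void stop() {
        running = false;
    }

    @Override
    public void run() {
        byte[] buf = new byte[1024];
        DatagramPacket packet = new DatagramPacket(buf, buf.length);
        while (running) {
            packet.setLength(buf.length);  // restore full capacity before each receive
            try {
                socket.receive(packet);
                // Copy only the valid bytes; buf is reused for the next datagram
                sink.accept(Arrays.copyOf(packet.getData(), packet.getLength()));
            } catch (SocketTimeoutException e) {
                // Nothing arrived within the timeout: loop and re-check running
            } catch (IOException e) {
                break;                     // socket closed or a real I/O error
            }
        }
        socket.close();
    }
}
```

The 200 ms timeout bounds how long `stop()` can take. Closing the socket from `stop()` would end the loop immediately, but the resulting `SocketException` then has to be treated as a normal shutdown.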

2. AudioDecoder code:

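Decoding is also simple. The receiver passes the audio data it receives to the decoder. The decoder's main job is therefore to take that data out and decode it, and, if decoding succeeds, to pass the decoded data on to AudioPlayer for playback. Data moves through the three classes in order:

AudioReceiver ----> AudioDecoder ----> AudioPlayer

The code below has a `List<AudioData> dataList` that stores the data waiting to be decoded. Each decoding step calls `dataList.remove(0)`, which takes the oldest item from the front of the list and decodes it: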

```java
package xmu.swordbearer.audio.receiver;

import java.util.Collections;
import java.util.LinkedList;
import java.util.List;

import xmu.swordbearer.audio.AudioCodec;
import xmu.swordbearer.audio.data.AudioData;

import android.util.Log;

public class AudioDecoder implements Runnable {

    private static final String LOG = "CODEC Decoder ";

    private static AudioDecoder decoder;

    private static final int MAX_BUFFER_SIZE = 2048;

    // Output buffer for decoded PCM. It can be reused because
    // AudioPlayer.addData() copies the bytes it is given.
    private final byte[] decodedData = new byte[MAX_BUFFER_SIZE];

    private volatile boolean isDecoding = false;

    private final List<AudioData> dataList;

    public static synchronized AudioDecoder getInstance() {
        if (decoder == null) {
            decoder = new AudioDecoder();
        }
        return decoder;
    }

    private AudioDecoder() {
        this.dataList = Collections.synchronizedList(new LinkedList<AudioData>());
    }

    /*
     * Add data to be decoded
     *
     * @param data the data received from the server
     * @param size number of valid bytes in data
     */
    public void addData(byte[] data, int size) {
        AudioData adata = new AudioData();
        adata.setSize(size);
        byte[] tempData = new byte[size];
        System.arraycopy(data, 0, tempData, 0, size);
        adata.setRealData(tempData);
        dataList.add(adata);
    }

    /*
     * Start decoding iLBC data
     */
    public synchronized void startDecoding() {
        if (isDecoding) {
            return;
        }
        // Set the flag before starting the thread so that a second call
        // cannot start a second decoding thread
        isDecoding = true;
        new Thread(this).start();
    }

    public void run() {
        // Start the player first
        AudioPlayer player = AudioPlayer.getInstance();
        player.startPlaying();

        // Initialize iLBC; 30 selects the 30 ms frame mode (iLBC also has a 20 ms mode)
        AudioCodec.audio_codec_init(30);
        Log.d(LOG, LOG + "initialized decoder");

        while (isDecoding) {
            if (dataList.isEmpty()) {
                // Nothing queued: sleep briefly instead of spinning at 100% CPU
                try {
                    Thread.sleep(10);
                } catch (InterruptedException e) {
                    break;
                }
                continue;
            }
            // Only this thread removes items, so isEmpty() followed by remove(0) is safe
            AudioData encodedData = dataList.remove(0);
            int decodeSize = AudioCodec.audio_decode(encodedData.getRealData(), 0,
                    encodedData.getSize(), decodedData, 0);
            if (decodeSize > 0) {
                // Pass the decoded audio to the player
                player.addData(decodedData, decodeSize);
            }
        }
        isDecoding = false;
        dataList.clear(); // drop frames left over from this session

        Log.d(LOG, LOG + "stop decoder");
        // Stop audio playback
        player.stopPlaying();
    }

    public void stopDecoding() {
        this.isDecoding = false;
    }
}
```
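The decoder's buffer sizes follow from iLBC's fixed frame sizes. iLBC (RFC 3951) has two modes. The 20 ms mode encodes 160 samples into 38 bytes, and the 30 ms mode encodes 240 samples into 50 bytes. At 8 kHz, 16-bit mono, one decoded 30 ms frame is therefore 240 * 2 = 480 bytes. The earlier sending-side article decides whether each UDP packet carries one frame or several, and that detail is not visible here. The hypothetical `IlbcFrames` helper below (plain Java) only makes the arithmetic explicit. One use is checking whether a packet's decoded output fits in `MAX_BUFFER_SIZE = 2048`.

```java
/** Hypothetical helper: iLBC frame-size arithmetic at 8 kHz, 16-bit mono PCM (sizes from RFC 3951). */
final class IlbcFrames {
    static final int SAMPLE_RATE = 8000;
    static final int BYTES_PER_SAMPLE = 2; // ENCODING_PCM_16BIT

    private IlbcFrames() {}

    /** Encoded bytes per frame: 38 for the 20 ms mode, 50 for the 30 ms mode. */
    static int encodedBytes(int modeMs) {
        switch (modeMs) {
            case 20: return 38;
            case 30: return 50;
            default: throw new IllegalArgumentException("iLBC modes are 20 or 30 ms");
        }
    }

    /** Decoded PCM bytes per frame, e.g. 30 ms -> 240 samples -> 480 bytes. */
    static int decodedBytes(int modeMs) {
        encodedBytes(modeMs); // rejects unsupported modes
        return SAMPLE_RATE * modeMs / 1000 * BYTES_PER_SAMPLE;
    }

    /** Decoded PCM size for a packet assumed to hold only whole frames. */
    static int decodedBytesForPacket(int packetLength, int modeMs) {
        int frameLen = encodedBytes(modeMs);
        if (packetLength % frameLen != 0) {
            throw new IllegalArgumentException("packet is not a whole number of frames");
        }
        return packetLength / frameLen * decodedBytes(modeMs);
    }
}
```

For example, a 200-byte packet in 30 ms mode holds four frames and decodes to 1920 bytes, which still fits in 2048. A 250-byte packet (five frames) would need 2400 bytes.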

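3. AudioPlayer code:

The player works exactly like the decoder. It starts a thread that keeps taking data out of its own dataList.

Note, however, that some of the player's parameters are critical. The sample rate, channel configuration and sample format must match what the decoder produces: 8 kHz, mono, 16-bit PCM.

Playback uses Android's built-in AudioTrack class. Its method `public int write(byte[] audioData, int offsetInBytes, int sizeInBytes)` writes PCM data to the track for playback.

The complete player code is as follows: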

```java
package xmu.swordbearer.audio.receiver;

import java.util.Collections;
import java.util.LinkedList;
import java.util.List;

import xmu.swordbearer.audio.data.AudioData;

import android.media.AudioFormat;
import android.media.AudioManager;
import android.media.AudioTrack;
import android.util.Log;

public class AudioPlayer implements Runnable {

    private static final String LOG = "AudioPlayer ";

    private static AudioPlayer player;

    private final List<AudioData> dataList;

    private volatile boolean isPlaying = false;

    private AudioTrack audioTrack;

    private static final int sampleRate = 8000;

    // Note the parameters: playback needs an OUTPUT channel mask
    // (CHANNEL_OUT_*), not an input mask such as CHANNEL_IN_MONO
    private static final int channelConfig = AudioFormat.CHANNEL_OUT_MONO;
    private static final int audioFormat = AudioFormat.ENCODING_PCM_16BIT;

    private AudioPlayer() {
        dataList = Collections.synchronizedList(new LinkedList<AudioData>());
    }

    public static synchronized AudioPlayer getInstance() {
        if (player == null) {
            player = new AudioPlayer();
        }
        return player;
    }

    public void addData(byte[] rawData, int size) {
        AudioData decodedData = new AudioData();
        decodedData.setSize(size);
        byte[] tempData = new byte[size];
        System.arraycopy(rawData, 0, tempData, 0, size);
        decodedData.setRealData(tempData);
        dataList.add(decodedData);
    }

    /*
     * Initialize the player parameters
     */
    private boolean initAudioTrack() {
        // Ask AudioTrack (not AudioRecord) for its minimum playback buffer
        int bufferSize = AudioTrack.getMinBufferSize(sampleRate, channelConfig, audioFormat);
        if (bufferSize <= 0) { // ERROR or ERROR_BAD_VALUE
            Log.e(LOG, LOG + "initialize error!");
            return false;
        }
        audioTrack = new AudioTrack(AudioManager.STREAM_MUSIC, sampleRate,
                channelConfig, audioFormat, bufferSize, AudioTrack.MODE_STREAM);
        if (audioTrack.getState() != AudioTrack.STATE_INITIALIZED) {
            audioTrack.release();
            audioTrack = null;
            return false;
        }
        // Set the playback volume (setStereoVolume is deprecated since API 21 in favour of setVolume)
        audioTrack.setStereoVolume(1.0f, 1.0f);
        audioTrack.play();
        return true;
    }

    private void playFromList() {
        while (!dataList.isEmpty() && isPlaying) {
            AudioData playData = dataList.remove(0);
            audioTrack.write(playData.getRealData(), 0, playData.getSize());
        }
    }

    public synchronized void startPlaying() {
        if (isPlaying) {
            return;
        }
        isPlaying = true; // set before starting so a second call cannot start a second thread
        new Thread(this).start();
    }

    public void run() {
        if (!initAudioTrack()) {
            Log.e(LOG, LOG + "initialized player error!");
            isPlaying = false; // allow a later retry
            return;
        }
        while (isPlaying) {
            if (!dataList.isEmpty()) {
                playFromList();
            } else {
                try {
                    Thread.sleep(20);
                } catch (InterruptedException e) {
                    break;
                }
            }
        }
        isPlaying = false;
        if (audioTrack != null) {
            if (audioTrack.getPlayState() == AudioTrack.PLAYSTATE_PLAYING) {
                audioTrack.stop();
            }
            // Always release; otherwise the native AudioTrack resources leak
            audioTrack.release();
            audioTrack = null;
        }
        dataList.clear();
        Log.d(LOG, LOG + "end playing");
    }

    public void stopPlaying() {
        this.isPlaying = false;
    }
}
```
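AudioTrack interprets the bytes it receives only through the sample rate, channel configuration and encoding it was created with, which is why these parameters matter. For 8 kHz, mono, 16-bit PCM, one second is 8000 * 1 * 2 = 16000 bytes, or 16 bytes per millisecond. The small plain-Java helper below converts between byte counts and playback time. `PcmTiming` is a hypothetical name, not an Android API. It can, for instance, estimate how much latency the device-dependent value returned by `getMinBufferSize` adds.

```java
/** Hypothetical helper: converts between PCM byte counts and playback time. */
final class PcmTiming {
    private PcmTiming() {}

    /** Bytes of PCM needed for the given duration. */
    static int bytesFor(int millis, int sampleRate, int channels, int bytesPerSample) {
        return (int) ((long) sampleRate * channels * bytesPerSample * millis / 1000);
    }

    /** Playback time of a PCM buffer in milliseconds (rounded down). */
    static int millisFor(int bytes, int sampleRate, int channels, int bytesPerSample) {
        return (int) (1000L * bytes / ((long) sampleRate * channels * bytesPerSample));
    }
}
```

With the player's settings, a 480-byte decoded 30 ms frame lasts exactly 30 ms, and a 1024-byte buffer holds 64 ms of audio. If the same bytes were declared as two channels, they would last only 15 ms, which is heard as sped-up, distorted speech.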

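4. Test server:

To make testing easier, I wrote a simple UDP server in plain Java. It does very little: it receives each packet and forwards it to the other party: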

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.net.SocketException;
import java.net.UnknownHostException;

public class AudioServer implements Runnable {

    DatagramSocket socket;
    DatagramPacket packet;  // UDP packet received from a client
    DatagramPacket sendPkt; // UDP packet forwarded to the other client

    byte[] pktBuffer = new byte[1024];
    int bufferSize = 1024;

    volatile boolean isRunning = false;

    int myport = 5656;

    // Client that receives the forwarded audio
    String clientIpStr = "192.168.1.104";
    InetAddress clientIp;
    int clientPort = 5757;

    public AudioServer() {
        try {
            clientIp = InetAddress.getByName(clientIpStr);
        } catch (UnknownHostException e1) {
            e1.printStackTrace();
        }
        try {
            socket = new DatagramSocket(myport);
            packet = new DatagramPacket(pktBuffer, bufferSize);
        } catch (SocketException e) {
            e.printStackTrace();
        }
        System.out.println("Server initialized");
    }

    public void startServer() {
        this.isRunning = true;
        new Thread(this).start();
    }

    public void run() {
        try {
            while (isRunning) {
                packet.setLength(bufferSize); // restore full capacity before each receive
                socket.receive(packet);
                // Forward to the configured peer. Using packet.getAddress() here
                // instead would echo the audio back to the sender, which is only
                // useful for a single-phone loopback test.
                sendPkt = new DatagramPacket(packet.getData(),
                        packet.getLength(), clientIp, clientPort);
                // Forward immediately; sleeping between packets only adds latency
                socket.send(sendPkt);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // main
    public static void main(String[] args) {
        new AudioServer().startServer();
    }
}
```
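The forwarding step can be checked on one machine over the loopback interface before two phones are pointed at the server. The sketch below performs a single receive-and-forward using the same `DatagramSocket.receive`/`send` calls as `AudioServer.run()`. `RelayOnce.forwardOne` is a hypothetical helper, not part of the project. Because the destination is passed in explicitly, a test can bind the sender, relay and receiver to three ephemeral ports on 127.0.0.1.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;

/** Hypothetical helper: one receive-and-forward step of a UDP relay. */
class RelayOnce {
    /** Receives one datagram on relay, forwards it to peer:peerPort, returns its length. */
    static int forwardOne(DatagramSocket relay, InetAddress peer, int peerPort) throws IOException {
        byte[] buf = new byte[1024];
        DatagramPacket in = new DatagramPacket(buf, buf.length);
        relay.receive(in);
        // Send only the bytes actually received, to the explicit destination
        relay.send(new DatagramPacket(in.getData(), in.getLength(), peer, peerPort));
        return in.getLength();
    }
}
```

On the LAN, the destination would instead be the configured `clientIp` and `clientPort` (5757).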

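5. Conclusion:

That covers the overall process of making voice calls on Android with iLBC. This series only introduces the principles of using iLBC. A real voice call system needs much more work, and this demo still has many shortcomings:

1. It only supports one-way calls. Two-way or one-to-many calls would need additional processing, and the server would also have to do much more.

2. Real-time performance: on a LAN the latency is fairly low, but over a WAN quality may drop. The program also has no timestamp handling, so with a poor network or delayed packets, audio may be played out of order.

3. The server is very weak: a real streaming media server needs much more capability to process the data, but for convenience I wrote a simple one. I plan to port live555 as a dedicated streaming server and encapsulate the data with RTP, which should work much better.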
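Point 2 can be illustrated with a small sketch, under one assumption: the sender would have to prefix each packet with an increasing sequence number. That format is hypothetical, since the demo sends bare iLBC data. RTP, mentioned in point 3, carries a 16-bit sequence number and a 32-bit timestamp for this purpose. With such a number, the receiver could hold out-of-order packets briefly, release them in order, and skip a packet that never arrives. `ReorderBuffer` and `maxPending` are illustrative names. A real jitter buffer would also use timestamps and handle sequence-number wrap-around.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

/**
 * Hypothetical minimal reorder buffer keyed by a per-packet sequence number.
 * Assumes sequence numbers start at 0 and never wrap around.
 */
class ReorderBuffer {
    private final TreeMap<Integer, byte[]> pending = new TreeMap<>();
    private final int maxPending; // how many packets to hold while waiting for a gap
    private int nextSeq = 0;

    ReorderBuffer(int maxPending) {
        this.maxPending = maxPending;
    }

    /** Adds a packet and returns every payload that can now be played, in order. */
    List<byte[]> add(int seq, byte[] payload) {
        List<byte[]> ready = new ArrayList<>();
        if (seq < nextSeq) {
            return ready; // duplicate, or too late after a skip: drop it
        }
        pending.put(seq, payload);
        // Too many packets waiting: give up on the missing one and skip ahead
        if (pending.size() > maxPending) {
            nextSeq = pending.firstKey();
        }
        while (pending.containsKey(nextSeq)) {
            ready.add(pending.remove(nextSeq));
            nextSeq++;
        }
        return ready;
    }
}
```

For example, with `maxPending = 3`, packet 1 is held until packet 0 arrives, after which both are released. If packet 2 is lost, packets 3 to 5 wait. Packet 6 then exceeds the limit, so 3 to 6 are released and a late packet 2 is dropped.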

The code for the whole project is now complete, and the full source can be downloaded here: http://download.csdn.net/detail/ranxiedao/4923759

By: http://blog.csdn.net/ranxiedao