2014 in Computing: Breakthroughs in Artificial Intelligence

The most striking research results in AI came from the field of deep learning, which involves using crude simulated neurons to process data.

Work in deep learning often focuses on images, which are easy for humans to understand but very difficult for software to decipher. Researchers at Facebook used that approach to make a system that can tell almost as well as a human whether two different photos depict the same person. Google showed off a system that can describe scenes using short sentences.

Results like these have led leading computing companies to compete fiercely for AI researchers. Google paid more than $600 million for a machine learning startup called DeepMind at the start of the year. When MIT Technology Review caught up with the company’s founder, Demis Hassabis, later in the year, he explained how DeepMind’s work was shaped by groundbreaking research into the human brain.

The search company Baidu, nicknamed “China’s Google,” also spent big on artificial intelligence. It set up a lab in Silicon Valley to expand its existing research into deep learning, and to compete with Google and others for talent. Stanford AI researcher and onetime Google collaborator Andrew Ng was hired to lead that effort. In our feature-length profile, he explained how artificial intelligence could turn people who have never been on the Web into users of Baidu’s Web search and other services.

Machine learning was also a source of new products this year from computing giants, small startups, and companies outside the computer industry.

Microsoft drew on its research into speech recognition and language comprehension to create its virtual assistant Cortana, which is built into the mobile version of Windows. The app tries to enter a back-and-forth dialogue with people. That’s intended both to make it more endearing and to help it learn what went wrong when it makes a mistake.

Startups launched products that used machine learning for tasks as varied as helping you get pregnant, letting you control home appliances with your voice, and making plans via text message.

Some of the most interesting applications of artificial intelligence came in health care. IBM is now close to seeing a version of its Jeopardy!-winning Watson software help cancer doctors use genomic data to choose personalized treatment plans for patients. Applying machine learning to a genetic database enabled one biotech company to invent a noninvasive test that prevents unnecessary surgery.

Using artificial intelligence techniques on genetic data is likely to get a lot more common now that Google, Amazon, and other large computing companies are getting into the business of storing digitized genomes.

However, the most advanced machine learning software must be trained with large data sets, something that is very energy intensive, even for companies with sophisticated infrastructure. That’s motivating work on a new type of “neuromorphic” chip modeled loosely on ideas from neuroscience. Those chips can run machine learning algorithms more efficiently.

This year, IBM began producing a prototype brain-inspired chip it says could be used in large numbers to build a kind of supercomputer specialized for learning. A more compact neuromorphic chip, developed by General Motors and the Boeing-owned research lab HRL, took flight in a tiny drone aircraft.

All this rapid progress in artificial intelligence led some people to ponder the possible downsides and long-term implications of the technology. One software engineer who has since joined Google cautioned that our instincts about privacy must change now that machines can decipher images.

Looking further ahead, biotech and satellite entrepreneur Martine Rothblatt predicted that our personal data could be used to create intelligent digital doppelgangers with a kind of life of their own. And neuroscientist Christof Koch, chief scientific officer of the Allen Institute for Brain Science in Seattle, warned that although intelligent software could never be conscious, it could still harm us if not designed correctly.

Meanwhile, a more benign view of the far future came from science fiction author Greg Egan. In a thoughtful response to the sci-fi movie Her, he suggested that conversational AI companions could make us better at interacting with other humans.

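This year brought many breakthroughs in artificial intelligence. In particular, a range of machine learning software, the core of AI, can now improve its own performance by learning from experience. From biotechnology to cloud computing, machine learning software has won over many enterprise users.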

Deep learning: computers can finally read images!

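Among these advances, the most notable research results came from deep learning, which builds neural networks that simulate how the human brain analyzes and learns, so that data can be interpreted by brain-like mechanisms.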

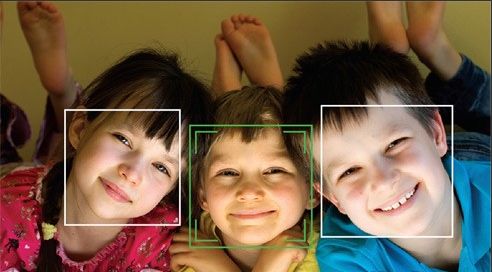

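Deep learning is most often applied to images, which are easy for humans to read but, for traditional software, as hard to decode as a book written in an unknown script. Facebook put great effort into building an artificial intelligence lab last year, and its researchers have now developed software that compares images and infers whether two photos show the same person. In addition, Google added a “short phrase description” feature to its search engine: when a user searches for a place, for example, they see automatically generated phrases explaining what that place is known for.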

Companies scramble for AI talent

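With artificial intelligence this hot, the emerging field was bound to become contested ground for the giants. At the start of the year Google spent a hefty $600 million to acquire the AI startup DeepMind, bringing its renowned founder, Demis Hassabis, on board, and it has been busy recruiting AI talent ever since.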

On the right: deep learning expert Andrew Ng

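Any discussion of search giants has to include Baidu (scoff at it if you like, or call it “China’s Google”). With big brother Google immersed in AI research, Baidu could hardly afford to fall behind. It expanded its deep learning research team in Silicon Valley and entered a talent war with Google. For example, the deep learning expert and Stanford University AI researcher Andrew Ng left Google to join Baidu. He is one of the few deep learning specialists in the world and previously led “Google Brain,” a flagship project at the Google X lab. Google’s search engine is clearly more popular than Baidu’s worldwide, but Baidu’s skill at poaching talent is undeniably formidable.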

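This year, everyone from giant corporations to small startup teams, and even companies outside the computer industry, rushed to build products that capitalize on artificial intelligence.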

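Microsoft drew on its speech recognition and language understanding technology to develop its intelligent assistant Cortana, a personal assistant for Windows phones that tries to mimic a person’s tone of voice and way of thinking as closely as possible when talking with users. Through back-and-forth exchanges with its “owner,” Cortana can learn from accumulated experience and correct the mistakes it has made.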

Health care remains an essential need

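2014 saw the birth of many excellent AI applications, including ones that guide users who are trying to get pregnant, control home appliances by voice, and automatically solve math problems.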

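The most interesting of these, and the most popular with users, were health care applications. For example, IBM’s Jeopardy!-winning Watson, offered as a cloud service, can help cancer doctors analyze genomic data in the cloud to draw up tailored treatment plans for patients. It can also let biotech companies run noninvasive tests that avoid unnecessary surgery.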

The biggest breakthrough product of 2014: neuromorphic chips

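It must be noted, however, that for machine learning software to reach its full potential, it has to be trained and tested on massive amounts of data, which is undoubtedly time-consuming and laborious for companies. But one of this year’s breakthrough technologies, the neuromorphic chip, gives computers cognitive abilities: it can detect and predict regularities and patterns in complex data, greatly improving the efficiency of machine learning software.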

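IBM produced a prototype brain-inspired chip aimed at supercomputers specialized for learning. In addition, Narayan Srinivasa, principal research scientist at HRL Laboratories, invented a chip that can be embedded in the body of a bird-sized aircraft and lets it fly according to its indoor surroundings. Notably, IBM’s lab and HRL have already spent $100 million developing neuromorphic chips for the U.S. Defense Advanced Research Projects Agency (DARPA). Qualcomm’s neuromorphic chip is expected to reach the market in 2015.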