A Closer Look at MapReduce (Part 2): Serialization

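1. The concept of serialization

- Serialization converts a structured object into a byte stream.

- Deserialization is the reverse of serialization: it converts a byte stream back into a structured object.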

- Java's built-in serialization (java.io.Serializable)
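
The round trip described above can be sketched with the JDK's built-in mechanism. This is only a minimal illustration: the `JavaSerializationDemo` and `Reading` classes and their sample values are hypothetical and are not part of the weather job in this post.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical demo class, used only to illustrate the round trip.
class JavaSerializationDemo {

    // A small structured object that opts in to Java serialization.
    static class Reading implements Serializable {
        private static final long serialVersionUID = 1L;
        final String station;
        final double temp;

        Reading(String station, double temp) {
            this.station = station;
            this.temp = temp;
        }
    }

    // Serialization: structured object -> byte stream.
    static byte[] serialize(Reading reading) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
            out.writeObject(reading);
        }
        return buffer.toByteArray();
    }

    // Deserialization: byte stream -> structured object.
    static Reading deserialize(byte[] bytes) throws IOException, ClassNotFoundException {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            return (Reading) in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        byte[] bytes = serialize(new Reading("S001", 31.5));
        Reading copy = deserialize(bytes);
        System.out.println(copy.station + " " + copy.temp + " (" + bytes.length + " bytes)");
    }
}
```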

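2. Characteristics of Hadoop serialization

- Compact: uses storage space efficiently

- Fast: little extra overhead when reading and writing data

- Extensible: data written in an older format can still be read transparently

- Interoperable: supports interaction across multiple languages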
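
The "compact" point can be illustrated without Hadoop on the classpath. Hadoop's `Writable.write(DataOutput)` writes only the field values, and the JDK's `DataOutputStream` implements the same `DataOutput` interface. The sketch below compares that style of encoding with built-in Java serialization for the same record. The `CompactnessDemo` and `Reading` names and the sample values are assumptions made for this example.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical demo class: compares two encodings of the same record.
class CompactnessDemo {

    // Hypothetical record with made-up sample values.
    static class Reading implements Serializable {
        private static final long serialVersionUID = 1L;
        String station = "S001";
        double temp = 31.5;
        double humi = 60.0;
    }

    // Writable-style encoding: field values only, no class metadata.
    static byte[] writeFieldsOnly(Reading r) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.writeUTF(r.station);   // 2-byte length prefix + string bytes
            out.writeDouble(r.temp);   // 8 bytes
            out.writeDouble(r.humi);   // 8 bytes
        }
        return buffer.toByteArray();
    }

    // Built-in Java serialization: also writes a stream header and class descriptor.
    static byte[] writeWithJavaSerialization(Reading r) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
            out.writeObject(r);
        }
        return buffer.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        Reading r = new Reading();
        System.out.println("fields only:        " + writeFieldsOnly(r).length + " bytes");
        System.out.println("Java serialization: " + writeWithJavaSerialization(r).length + " bytes");
    }
}
```

The field-only encoding of this record takes 22 bytes: 6 for the station string and 8 for each double. The built-in format is larger because it also describes the class.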

========================================================================================

3. Weather data analysis example

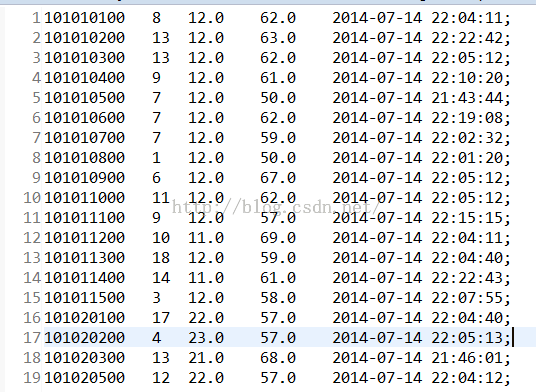

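Source data format: [station, temperature, , , , observation time, current time]. The mapper reads the humidity from the third field.

Final output: [station, number of times the maximum temperature occurred, maximum temperature, humidity, time of the last occurrence of the maximum temperature]

First, define a SelBean class: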
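
The mapper for this job splits each line on commas and drops the first and last character of every field, which suggests that the fields are quoted. The sketch below shows that parsing step on its own. The sample lines and the `LineParseDemo` class are made up for illustration, and the quoting is an assumption drawn from the mapper code rather than from the data itself.

```java
// Hypothetical demo class: parses one input line the way the mapper does.
class LineParseDemo {

    // Drops the first and last character of a field (assumed to be quotes).
    static String unquote(String field) {
        return field.substring(1, field.length() - 1);
    }

    // Returns {station, temperature, humidity, time}.
    // The time is taken from the sixth field only when the line has 7 fields.
    static String[] parse(String line) {
        String[] fields = line.split(",");
        String time = fields.length == 7 ? unquote(fields[5]) : "";
        return new String[] {
            unquote(fields[0]), unquote(fields[1]), unquote(fields[2]), time
        };
    }

    public static void main(String[] args) {
        // Made-up sample line in the assumed 7-field layout.
        String line = "\"S001\",\"31.5\",\"60.0\",\"\",\"\",\"2016-07-01 12:00\",\"2016-07-02 08:00\"";
        String[] parsed = parse(line);
        double temp = Double.parseDouble(parsed[1]);
        double humi = Double.parseDouble(parsed[2]);
        System.out.println(parsed[0] + " " + temp + " " + humi + " " + parsed[3]);
    }
}
```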

<pre class="java" name="code">
package Test;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

public class SelBean implements WritableComparable<SelBean> {

    // Station, temperature, humidity and time; getters and setters are at the bottom
    private String station;
    private double temp;
    private double humi;
    private String time;

    // No-argument constructor; Hadoop creates Writable instances by reflection
    public SelBean() {
    }

    // Sets all fields at once, so one instance can be reused for every record
    public void set(String station, double temp, double humi, String time) {
        this.station = station;
        this.temp = temp;
        this.humi = humi;
        this.time = time;
    }

    // Deserialization: read the fields from the byte stream into this object
    @Override
    public void readFields(DataInput in) throws IOException {
        this.station = in.readUTF();
        this.temp = in.readDouble();
        this.humi = in.readDouble();
        this.time = in.readUTF();
    }

    // Serialization: write the in-memory fields to the byte stream
    // Note: write and readFields must use the same field order and types
    @Override
    public void write(DataOutput out) throws IOException {
        out.writeUTF(station);
        out.writeDouble(temp);
        out.writeDouble(humi);
        out.writeUTF(time);
    }

    // toString produces the text that is written as the output value
    @Override
    public String toString() {
        return this.station + "\t" + this.temp + "\t" + this.humi + "\t" + this.time + ";";
    }

    // Ordering: higher temperature first; for equal temperatures, lower humidity first
    @Override
    public int compareTo(SelBean o) {
        int byTemp = Double.compare(o.getTemp(), this.temp);
        return byTemp != 0 ? byTemp : Double.compare(this.humi, o.getHumi());
    }

    public String getStation() {
        return station;
    }

    public void setStation(String station) {
        this.station = station;
    }

    public double getTemp() {
        return temp;
    }

    public void setTemp(double temp) {
        this.temp = temp;
    }

    public double getHumi() {
        return humi;
    }

    public void setHumi(double humi) {
        this.humi = humi;
    }

    public String getTime() {
        return time;
    }

    public void setTime(String time) {
        this.time = time;
    }
}
</pre>

Main class:

<pre class="java" name="code">
package Test;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class DataSelection {

    public static class DSelMapper extends Mapper<LongWritable, Text, Text, SelBean> {
        private Text k = new Text();
        private SelBean v = new SelBean();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Read one line of input
            String line = value.toString();
            // Split the line into fields
            String[] fields = line.split(",");
            // Station, temperature and humidity; substring drops the first and last character of each field
            String s = fields[0].substring(1, fields[0].length() - 1);
            String te = fields[1].substring(1, fields[1].length() - 1);
            String h = fields[2].substring(1, fields[2].length() - 1);
            // Lines do not all have the same number of fields, so the time is read only from 7-field lines
            String ti = fields.length == 7 ? fields[5].substring(1, fields[5].length() - 1) : "";
            // Convert temperature and humidity to double
            double te0 = Double.parseDouble(te);
            double h0 = Double.parseDouble(h);
            // Key is the station; value is [station, temperature, humidity, time]
            k.set(s);
            v.set(s, te0, h0, ti);
            context.write(k, v);
        }
    }

    public static class DSelReducer extends Reducer<Text, SelBean, Text, SelBean> {
        private SelBean v = new SelBean();

        // Note the shape of the input:
        // <station1, {SelBean(station1,temp1,h1,t1), SelBean(station1,temp2,h2,t2), SelBean(station1,temp3,h3,t3), ...}>
        @Override
        protected void reduce(Text key, Iterable<SelBean> values, Context context)
                throws IOException, InterruptedException {
            // Maximum temperature, its humidity and time, and how many times it occurred.
            // Start from negative infinity: Double.MIN_VALUE is the smallest positive double,
            // so it would give a wrong maximum for stations whose temperatures are all negative.
            double maxValue = Double.NEGATIVE_INFINITY;
            double h = 0;
            String t = "";
            int count = 0;
            List<Double> data = new ArrayList<Double>();
            // Find the maximum temperature for this station
            for (SelBean bean : values) {
                maxValue = Math.max(maxValue, bean.getTemp());
                data.add(bean.getTemp());
                // Keep the humidity and time of the latest reading that reaches the maximum
                if (bean.getTemp() >= maxValue) {
                    h = bean.getHumi();
                    t = bean.getTime();
                }
            }
            // Count how many times the maximum temperature occurred
            for (double temp : data) {
                if (temp == maxValue) {
                    count++;
                }
            }
            // The station field of the output bean carries the count; the station itself is the key
            v.set(Integer.toString(count), maxValue, h, t);
            context.write(key, v);
        }
    }

    public static void main(String[] args)
            throws IOException, InterruptedException, ClassNotFoundException {
        Job job = Job.getInstance(new Configuration());
        job.setJarByClass(DataSelection.class);

        job.setMapperClass(DSelMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(SelBean.class);

        job.setReducerClass(DSelReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(SelBean.class);

        FileInputFormat.addInputPath(job, new Path("hdfs://10.2.173.15:9000/user/guest/input01"));
        FileOutputFormat.setOutputPath(job, new Path("hdfs://10.2.173.15:9000/user/guest/0data3"));

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
</pre>
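
The aggregation done in the reducer can be tried without a cluster. Below is a plain-Java sketch of the same idea in a single pass: it tracks the maximum temperature, how many times it occurred, and the humidity and time of its last occurrence. The `MaxTempDemo`, `Reading` and `Summary` classes and the sample readings are hypothetical stand-ins, not part of the job itself.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical demo class: the reducer's aggregation as plain Java.
class MaxTempDemo {

    // Stand-in for SelBean, holding only what the aggregation needs.
    static class Reading {
        final double temp;
        final double humi;
        final String time;

        Reading(double temp, double humi, String time) {
            this.temp = temp;
            this.humi = humi;
            this.time = time;
        }
    }

    // Aggregated result for one station.
    static class Summary {
        double maxTemp = Double.NEGATIVE_INFINITY;
        int count = 0;
        double humi = 0;
        String time = "";
    }

    static Summary summarize(List<Reading> readings) {
        Summary s = new Summary();
        for (Reading r : readings) {
            if (r.temp > s.maxTemp) {
                // New maximum: restart the count from this reading.
                s.maxTemp = r.temp;
                s.count = 1;
                s.humi = r.humi;
                s.time = r.time;
            } else if (r.temp == s.maxTemp) {
                // Maximum seen again: count it and keep the later reading.
                s.count++;
                s.humi = r.humi;
                s.time = r.time;
            }
        }
        return s;
    }

    public static void main(String[] args) {
        // Made-up readings for one station, all below zero.
        List<Reading> readings = Arrays.asList(
                new Reading(-5.0, 40.0, "t1"),
                new Reading(-3.0, 50.0, "t2"),
                new Reading(-3.0, 55.0, "t3"),
                new Reading(-8.0, 30.0, "t4"));
        Summary s = summarize(readings);
        System.out.println(s.count + "\t" + s.maxTemp + "\t" + s.humi + "\t" + s.time);
    }
}
```

The starting value matters when every reading is negative. `Double.MIN_VALUE` is the smallest positive double, so a maximum initialised with it would never be replaced by a sub-zero temperature.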