Spark Development: Setup, Build, and Run

Environment: CentOS 6.3, Hadoop 1.1.2, JDK 1.6, Spark 1.0.0, Scala 2.10.3, Eclipse (installing Hadoop is optional)

One thing to understand up front: Spark itself is a Scala project, meaning Spark is written in Scala.

1. Make sure Spark is installed correctly and that you have run it at least once. If not, follow the post linked below to install it.

http://blog.csdn.net/zlcd1988/article/details/21177187

2. Create a new Scala project

Follow the blog post below to create a Scala project.

http://blog.csdn.net/zlcd1988/article/details/31366589

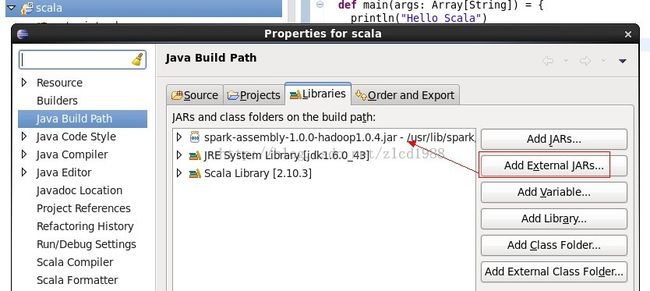

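3. Import the Spark jar so that you can use the Spark API (this is the jar produced by the build in step 2)

In the imported project, right-click and choose Build Path -> Configure Build Path to add the jar.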

4. Configure the sbt build file (similar to Maven's pom.xml)

In the project root directory, create a build.sbt file with the following content:

name := "Simple Project"

version := "1.0"

scalaVersion := "2.10.3"

libraryDependencies += "org.apache.spark" %% "spark-core" % "1.0.0"

resolvers += "Akka Repository" at "http://repo.akka.io/releases/"

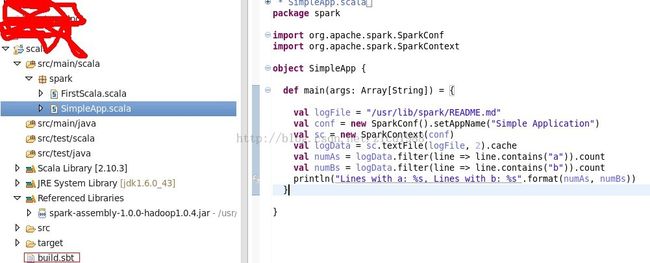
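A side note on the `%%` operator in the dependency line: sbt appends the project's Scala binary version to the artifact name, so with scalaVersion 2.10.3 the dependency resolves to the `spark-core_2.10` artifact. Here is a sketch of the equivalent explicit form, which would go in build.sbt in place of the `%%` line rather than alongside it:

```scala
// build.sbt fragment (not a standalone program).
// Equivalent to "org.apache.spark" %% "spark-core" % "1.0.0" when scalaVersion is 2.10.x:
// the Scala binary version suffix (_2.10) is written out by hand and a single % is used.
libraryDependencies += "org.apache.spark" % "spark-core_2.10" % "1.0.0"
```

The `%%` form is usually the safer choice, since the suffix then follows scalaVersion automatically.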

5. Write a Spark application

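package spark

import org.apache.spark.SparkConf
import org.apache.spark.SparkContext

object SimpleApp {
  def main(args: Array[String]) = {
    // Any readable text file works; this path assumes Spark is installed under /usr/lib/spark
    val logFile = "/usr/lib/spark/README.md"
    val conf = new SparkConf().setAppName("Simple Application")
    val sc = new SparkContext(conf)
    // Read the file with at least 2 partitions and cache it, because it is scanned twice below
    val logData = sc.textFile(logFile, 2).cache
    // Count the lines containing "a" and the lines containing "b" (case-sensitive)
    val numAs = logData.filter(line => line.contains("a")).count
    val numBs = logData.filter(line => line.contains("b")).count
    println("Lines with a: %s, Lines with b: %s".format(numAs, numBs))
  }
}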
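To make clear what the two filter/count calls in SimpleApp compute, the same logic can be sketched on an ordinary in-memory collection without Spark. The object name `CountSketch`, the helper `countLinesContaining`, and the sample lines are made up for illustration. The Spark version applies the same case-sensitive substring test to each line of the file, only spread across partitions.

```scala
object CountSketch {
  // Counts the lines that contain the given substring (case-sensitive),
  // mirroring logData.filter(line => line.contains(s)).count in SimpleApp.
  def countLinesContaining(lines: Seq[String], s: String): Long =
    lines.count(line => line.contains(s)).toLong

  def main(args: Array[String]): Unit = {
    // Hypothetical sample input standing in for the README file.
    val lines = Seq("Apache Spark", "build with sbt", "README", "Scala")
    val numAs = countLinesContaining(lines, "a")
    val numBs = countLinesContaining(lines, "b")
    // "README" matches neither test because only lowercase letters are searched for.
    println("Lines with a: %s, Lines with b: %s".format(numAs, numBs))
  }
}
```

With this sample input the program prints `Lines with a: 2, Lines with b: 1`.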

6. Build and package

Change to the project root directory and run sbt package:

$ cd scala

$ sbt package

[info] Loading global plugins from /home/hadoop/.sbt/0.13/plugins

[info] Set current project to Simple Project (in build file:/home/hadoop/workspace/scala/)

[info] Compiling 1 Scala source to /home/hadoop/workspace/scala/target/scala-2.10/classes...

[info] Packaging /home/hadoop/workspace/scala/target/scala-2.10/simple-project_2.10-1.0.jar ...

[info] Done packaging.

[success] Total time: 5 s, completed Jun 15, 2014 10:32:48 PM

7. Run

$ $SPARK_HOME/bin/spark-submit --class "spark.SimpleApp" --master local[4] target/scala-2.10/simple-project_2.10-1.0.jar

Output:

Lines with a: 73, Lines with b: 35

14/06/15 22:34:05 INFO TaskSetManager: Finished TID 3 in 24 ms on localhost (progress: 2/2)

14/06/15 22:34:05 INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool
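The `--master local[4]` flag in the command above runs the job locally with 4 worker threads. When launching the application directly from Eclipse rather than through spark-submit, one option is to set the master in code instead. This is a minimal sketch, assuming the same Spark 1.0.0 jar on the build path and the same README path as above. The object name `SimpleAppLocal` and the helper `buildConf` are hypothetical.

```scala
package spark

import org.apache.spark.SparkConf
import org.apache.spark.SparkContext

object SimpleAppLocal {
  // Builds a configuration with the master URL set in code
  // instead of being passed through spark-submit's --master flag.
  def buildConf(master: String): SparkConf =
    new SparkConf().setAppName("Simple Application").setMaster(master)

  def main(args: Array[String]): Unit = {
    // "local[4]" means: run inside this JVM with 4 worker threads.
    val sc = new SparkContext(buildConf("local[4]"))
    try {
      val logData = sc.textFile("/usr/lib/spark/README.md", 2).cache()
      val numAs = logData.filter(line => line.contains("a")).count()
      println("Lines with a: %s".format(numAs))
    } finally {
      // Release the local executor threads even if the job fails.
      sc.stop()
    }
  }
}
```

Values set on SparkConf in code take precedence over flags passed to spark-submit, so a hard-coded master like this is probably best kept to local development runs.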