Android SoundRecorder, Part 2: From the Application Layer to the HAL

When reposting, please credit the original: http://blog.csdn.net/uranus_wm/article/details/12748559

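The previous article covered how Linux ALSA is initialized and how device nodes are created from each dai_link, giving upper-layer applications an interface to access.

This article covers the code framework of Android SoundRecorder from the application layer down to the HAL.

The next article will focus on the Linux side, then explain how audio data flows through memory and some related debugging methods.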

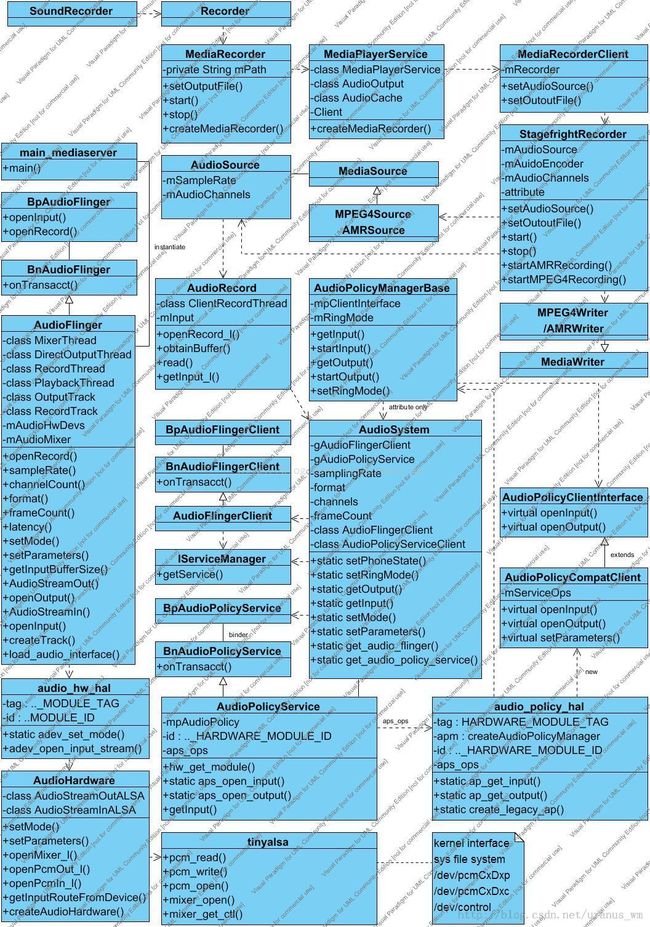

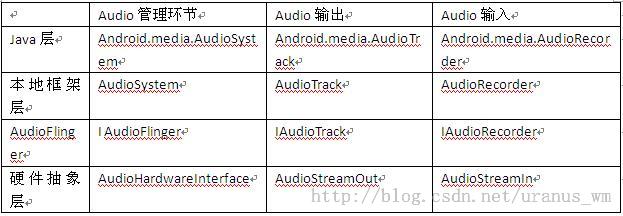

首先还是看图:

更正说明:tinyalsa访问设备节点应该是/dev/snd/pcmCxDxp, /dev/snd/pcmCxDxc和/dev/snd/controlCx,画图时没有确认造成笔误

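The diagram roughly sketches the class diagram of SoundRecorder from the app, through the framework, down to the HAL.

Some subclass/superclass inheritance relationships are not shown. From SoundRecorder to AudioRecord it is essentially a single straight line.

I did not read that part of the code in detail: stagefright is huge and implements codecs for many MIME formats.

Writing the audio data into a file on the SD card is presumably done in MPEG4Writer; I did not look at it closely.

Let's focus on AudioSystem, AudioPolicyService, AudioFlinger and AudioHardware.

Taking openRecord_l() and getInput_l() as examples, let's look at how these classes call one another.

We start from AudioRecord.cpp, file location: frameworks\base\media\libmedia\AudioRecord.cpp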

```cpp
status_t AudioRecord::openRecord_l(
        uint32_t sampleRate,
        uint32_t format,
        uint32_t channelMask,
        int frameCount,
        uint32_t flags,
        audio_io_handle_t input)
{
    status_t status;
    const sp<IAudioFlinger>& audioFlinger = AudioSystem::get_audio_flinger();
    sp<IAudioRecord> record = audioFlinger->openRecord(getpid(), input,
                                                       sampleRate, format,
                                                       channelMask,
                                                       frameCount,
                                                       ((uint16_t)flags) << 16,
                                                       &mSessionId,
                                                       &status);
    ...
}

audio_io_handle_t AudioRecord::getInput_l()
{
    mInput = AudioSystem::getInput(mInputSource,
                                   mCblk->sampleRate,
                                   mFormat,
                                   mChannelMask,
                                   (audio_in_acoustics_t)mFlags,
                                   mSessionId);
    return mInput;
}
```

As you can see, openRecord_l() obtains the AudioFlinger service directly through AudioSystem, a class made up of static methods:

```cpp
const sp<IAudioFlinger>& AudioSystem::get_audio_flinger()
{
    Mutex::Autolock _l(gLock);
    if (gAudioFlinger.get() == 0) {
        sp<IServiceManager> sm = defaultServiceManager();
        sp<IBinder> binder;
        do {
            binder = sm->getService(String16("media.audio_flinger"));
            if (binder != 0)
                break;  // service found: leave the retry loop
            LOGW("AudioFlinger not published, waiting...");
            usleep(500000); // 0.5 s
        } while (true);
        if (gAudioFlingerClient == NULL) {
            gAudioFlingerClient = new AudioFlingerClient();
        }
        binder->linkToDeath(gAudioFlingerClient);
        gAudioFlinger = interface_cast<IAudioFlinger>(binder);
        gAudioFlinger->registerClient(gAudioFlingerClient);
    }
    return gAudioFlinger;
}
```

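I ran into a question here: get_audio_flinger() fetches the audio_flinger service from ServiceManager, but after searching for a long time I could not find where this service is added.

If you find it, please let me know.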
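For what it's worth, in the ICS sources I have looked at, the registration appears to happen inside mediaserver: main() in frameworks/base/media/mediaserver/main_mediaserver.cpp calls AudioFlinger::instantiate(). AudioFlinger derives from the BinderService<AudioFlinger> template (binder/BinderService.h), whose instantiate() calls defaultServiceManager()->addService() with the name returned by AudioFlinger::getServiceName(), i.e. "media.audio_flinger". Please verify this against your own tree. The sketch below models that pattern with a plain in-process map instead of binder; ServiceRegistry, BinderServiceSketch, AudioFlingerSketch and getAudioFlinger() are hypothetical names, and unlike the real get_audio_flinger() the lookup does not retry.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>

// Hypothetical common base so one registry can hold different service types.
struct IServiceSketch {
    virtual ~IServiceSketch() = default;
};

// Hypothetical stand-in for the binder ServiceManager: a name -> service map.
class ServiceRegistry {
public:
    static ServiceRegistry &instance() {
        static ServiceRegistry registry;
        return registry;
    }
    void addService(const std::string &name, std::shared_ptr<IServiceSketch> service) {
        mServices[name] = std::move(service);
    }
    std::shared_ptr<IServiceSketch> getService(const std::string &name) const {
        auto it = mServices.find(name);
        if (it == mServices.end()) return nullptr;
        return it->second;
    }
private:
    std::map<std::string, std::shared_ptr<IServiceSketch>> mServices;
};

// Models the idea of BinderService<SERVICE>: instantiate() registers a new SERVICE
// under whatever name SERVICE::getServiceName() returns.
template <typename SERVICE>
struct BinderServiceSketch {
    static void instantiate() {
        ServiceRegistry::instance().addService(SERVICE::getServiceName(),
                                               std::make_shared<SERVICE>());
    }
};

// Server side: the service name string appears only here, not next to any addService() call.
class AudioFlingerSketch : public IServiceSketch,
                           public BinderServiceSketch<AudioFlingerSketch> {
public:
    static const char *getServiceName() { return "media.audio_flinger"; }
};

// Client side: look the service up and cache it once found, like
// AudioSystem::get_audio_flinger() (which additionally retries until it is published).
std::shared_ptr<AudioFlingerSketch> getAudioFlinger() {
    static std::shared_ptr<AudioFlingerSketch> cached;
    if (!cached) {
        cached = std::dynamic_pointer_cast<AudioFlingerSketch>(
            ServiceRegistry::instance().getService("media.audio_flinger"));
    }
    return cached;
}
```

The template is what makes the registration hard to grep for: addService() is called from generic template code, and the name string lives only in getServiceName().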

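The other one, getInput_l(), selects the input path. Android maintains this with a service of its own as well: AudioPolicyService.

This service corresponds to a virtual hardware device accessed through the audio_policy_hal interface. I suspect this was done to keep the structure clean, but it makes the code rather convoluted to read.

Following the class diagram above, the call sequence of getInput_l() is:

AudioSystem -> AudioPolicyService -> audio_policy_hal -> AudioPolicyManagerBase -> AudioPolicyCompatClient -> AudioPolicyService -> AudioFlinger

File location: frameworks\base\media\libmedia\AudioSystem.cpp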

```cpp
audio_io_handle_t AudioSystem::getInput(int inputSource,
                                        uint32_t samplingRate,
                                        uint32_t format,
                                        uint32_t channels,
                                        audio_in_acoustics_t acoustics,
                                        int sessionId)
{
    const sp<IAudioPolicyService>& aps = AudioSystem::get_audio_policy_service();
    if (aps == 0) return 0;
    return aps->getInput(inputSource, samplingRate, format, channels, acoustics, sessionId);
}
```

File location: frameworks\base\services\audioflinger\AudioPolicyService.cpp

```cpp
audio_io_handle_t AudioPolicyService::getInput(int inputSource,
                                               uint32_t samplingRate,
                                               uint32_t format,
                                               uint32_t channels,
                                               audio_in_acoustics_t acoustics,
                                               int audioSession)
{
    if (mpAudioPolicy == NULL) {
        return 0;
    }
    Mutex::Autolock _l(mLock);
    audio_io_handle_t input = mpAudioPolicy->get_input(mpAudioPolicy, inputSource, samplingRate,
                                                       format, channels, acoustics);
    ...
    return input;
}
```

File location: hardware\libhardware_legacy\audio\audio_policy_hal.cpp

```cpp
static audio_io_handle_t ap_get_input(struct audio_policy *pol, int inputSource,
                                      uint32_t sampling_rate,
                                      uint32_t format,
                                      uint32_t channels,
                                      audio_in_acoustics_t acoustics)
{
    struct legacy_audio_policy *lap = to_lap(pol);
    return lap->apm->getInput(inputSource, sampling_rate, format, channels,
                              (AudioSystem::audio_in_acoustics)acoustics);
}
```

File location: hardware\libhardware_legacy\audio\AudioPolicyManagerBase.cpp

```cpp
audio_io_handle_t AudioPolicyManagerBase::getInput(int inputSource,
                                                   uint32_t samplingRate,
                                                   uint32_t format,
                                                   uint32_t channels,
                                                   AudioSystem::audio_in_acoustics acoustics)
{
    audio_io_handle_t input = 0;
    ...
    AudioInputDescriptor *inputDesc = new AudioInputDescriptor();
    ...
    input = mpClientInterface->openInput(&inputDesc->mDevice,
                                         &inputDesc->mSamplingRate,
                                         &inputDesc->mFormat,
                                         &inputDesc->mChannels,
                                         inputDesc->mAcoustics);
    ...
    return input;
}
```

File location: hardware\libhardware_legacy\audio\AudioPolicyCompatClient.cpp

```cpp
audio_io_handle_t AudioPolicyCompatClient::openInput(uint32_t *pDevices,
                                                     uint32_t *pSamplingRate,
                                                     uint32_t *pFormat,
                                                     uint32_t *pChannels,
                                                     uint32_t acoustics)
{
    return mServiceOps->open_input(mService, pDevices, pSamplingRate, pFormat,
                                   pChannels, acoustics);
}
```

File location: frameworks\base\services\audioflinger\AudioPolicyService.cpp

```cpp
static audio_io_handle_t aps_open_input(void *service,
                                        uint32_t *pDevices,
                                        uint32_t *pSamplingRate,
                                        uint32_t *pFormat,
                                        uint32_t *pChannels,
                                        uint32_t acoustics)
{
    sp<IAudioFlinger> af = AudioSystem::get_audio_flinger();
    if (af == NULL) {
        LOGW("%s: could not get AudioFlinger", __func__);
        return 0;
    }
    return af->openInput(pDevices, pSamplingRate, pFormat, pChannels,
                         acoustics);
}
```

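The key is that AudioPolicyService passes an aps_ops struct (defined in AudioPolicyService.cpp) down layer by layer to AudioPolicyCompatClient, and the call finally comes back into AudioPolicyService. This part is rather roundabout: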

```cpp
namespace {
    struct audio_policy_service_ops aps_ops = {
        open_output           : aps_open_output,
        open_duplicate_output : aps_open_dup_output,
        close_output          : aps_close_output,
        suspend_output        : aps_suspend_output,
        restore_output        : aps_restore_output,
        open_input            : aps_open_input,
        close_input           : aps_close_input,
        set_stream_volume     : aps_set_stream_volume,
        set_stream_output     : aps_set_stream_output,
        set_parameters        : aps_set_parameters,
        get_parameters        : aps_get_parameters,
        start_tone            : aps_start_tone,
        stop_tone             : aps_stop_tone,
        set_voice_volume      : aps_set_voice_volume,
        move_effects          : aps_move_effects,
    };
}; // namespace <unnamed>
```
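To make the round trip easier to follow, here is a stripped-down sketch of the same callback-table pattern. All names (service_ops_sketch, PolicyServiceSketch, CompatClientSketch, sketch_open_input) are hypothetical and only a single callback is kept. The point it shows: the lower layer stores only an ops table and an opaque void* to the service object, and a C-style trampoline casts that pointer back so the call lands in the upper layer again. (In the real code the void* is the AudioPolicyService instance; aps_open_input itself then goes straight to AudioFlinger.)

```cpp
#include <cassert>
#include <cstdint>

typedef int audio_io_handle_t;  // assumption: a plain int handle is enough for the sketch

// Hypothetical, trimmed-down audio_policy_service_ops with a single callback.
struct service_ops_sketch {
    audio_io_handle_t (*open_input)(void *service, uint32_t *pDevices, uint32_t *pSamplingRate);
};

// Plays the role of the upper-layer service object that finally does the work.
struct PolicyServiceSketch {
    audio_io_handle_t nextHandle = 1;
    audio_io_handle_t openInput(uint32_t *pDevices, uint32_t *pSamplingRate) {
        (void)pDevices;  // device selection is not modelled here
        if (*pSamplingRate == 0) {
            *pSamplingRate = 44100;  // hypothetical default written back through the pointer
        }
        return nextHandle++;
    }
};

// C-style trampoline: recover the object from the opaque pointer and forward the call.
static audio_io_handle_t sketch_open_input(void *service, uint32_t *pDevices,
                                           uint32_t *pSamplingRate) {
    assert(service != nullptr);
    return static_cast<PolicyServiceSketch *>(service)->openInput(pDevices, pSamplingRate);
}

static const service_ops_sketch kOpsSketch = { sketch_open_input };

// Plays the role of AudioPolicyCompatClient: it only knows the ops table and a void*.
class CompatClientSketch {
public:
    CompatClientSketch(const service_ops_sketch *ops, void *service)
        : mServiceOps(ops), mService(service) {}
    audio_io_handle_t openInput(uint32_t *pDevices, uint32_t *pSamplingRate) {
        return mServiceOps->open_input(mService, pDevices, pSamplingRate);
    }
private:
    const service_ops_sketch *mServiceOps;
    void *mService;
};
```

The indirection exists because the policy HAL is a C interface: it cannot hold a C++ sp<AudioPolicyService>, so the service hands down plain function pointers plus a cookie.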

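Back to AudioFlinger.cpp: it defines several nested thread classes:

- class MixerThread is the mixing thread. It mixes several playback tracks (for example an MP3 that is playing plus a notification sound) into one output stream; recording does not go through it.
- class DirectOutputThread outputs a track directly, without mixing.
- class RecordThread is the thread our SoundRecorder uses; the recording client is represented inside it by a RecordTrack.
- class PlaybackThread is the common base class of the playback threads; MixerThread and DirectOutputThread both derive from it.

AudioFlinger can also apply audio effects and is very capable overall. Since I only spent a short time debugging it, I have not covered all of it yet.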
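As a rough illustration of what "mixing" means for 16-bit PCM, here is a minimal sketch: samples from each track are summed in a wider accumulator and clamped to the int16 range. This is a deliberate simplification; the real AudioMixer used by MixerThread also handles per-track volume, resampling and channel conversion, and mixTracks() is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Sum int16 samples from several playback tracks into one output buffer.
// A 32-bit accumulator avoids wrap-around; the result is clamped to int16.
std::vector<int16_t> mixTracks(const std::vector<std::vector<int16_t>> &tracks, size_t frames) {
    std::vector<int16_t> out(frames, 0);
    for (size_t i = 0; i < frames; ++i) {
        int32_t acc = 0;
        for (const auto &track : tracks) {
            if (i < track.size()) acc += track[i];  // a track that ran out contributes silence
        }
        if (acc > INT16_MAX) acc = INT16_MAX;
        if (acc < INT16_MIN) acc = INT16_MIN;
        out[i] = static_cast<int16_t>(acc);
    }
    return out;
}
```

Clamping is the simplest way to handle overflow; real mixers usually also scale each track by its volume before summing, which makes clipping less likely.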

AudioFlinger can move audio data in and out because audio_hw_hal provides the hardware abstraction layer interface:

File location: hardware\libhardware_legacy\audio\audio_hw_hal.cpp

```cpp
static int legacy_adev_open(const hw_module_t* module, const char* name,
                            hw_device_t** device)
{
    struct legacy_audio_device *ladev;
    int ret;

    ladev = (struct legacy_audio_device *)calloc(1, sizeof(*ladev));
    if (!ladev)
        return -ENOMEM;

    ladev->device.common.tag = HARDWARE_DEVICE_TAG;
    ladev->device.common.version = 0;
    ladev->device.common.module = const_cast<hw_module_t*>(module);
    ladev->device.common.close = legacy_adev_close;

    ladev->device.get_supported_devices = adev_get_supported_devices;
    ladev->device.init_check = adev_init_check;
    ladev->device.set_voice_volume = adev_set_voice_volume;
    ladev->device.set_master_volume = adev_set_master_volume;
    ladev->device.set_mode = adev_set_mode;
    ladev->device.set_mic_mute = adev_set_mic_mute;
    ladev->device.get_mic_mute = adev_get_mic_mute;
    ladev->device.set_parameters = adev_set_parameters;
    ladev->device.get_parameters = adev_get_parameters;
    ladev->device.get_input_buffer_size = adev_get_input_buffer_size;
    ladev->device.open_output_stream = adev_open_output_stream;
    ladev->device.close_output_stream = adev_close_output_stream;
    ladev->device.open_input_stream = adev_open_input_stream;
    ladev->device.close_input_stream = adev_close_input_stream;
    ladev->device.dump = adev_dump;

    ladev->hwif = createAudioHardware();
    if (!ladev->hwif) {
        ret = -EIO;
        goto err_create_audio_hw;
    }

    *device = &ladev->device.common;

    return 0;

err_create_audio_hw:
    // free the half-initialized device if the platform AudioHardware could not be created
    free(ladev);
    return ret;
}

static struct hw_module_methods_t legacy_audio_module_methods = {
    open: legacy_adev_open
};

struct legacy_audio_module HAL_MODULE_INFO_SYM = {
    module: {
        common: {
            tag: HARDWARE_MODULE_TAG,
            version_major: 1,
            version_minor: 0,
            id: AUDIO_HARDWARE_MODULE_ID,
            name: "LEGACY Audio HW HAL",
            author: "The Android Open Source Project",
            methods: &legacy_audio_module_methods,
            dso : NULL,
            reserved : {0},
        },
    },
};
```
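The essential pattern in audio_hw_hal.cpp: the module exports one well-known symbol (HAL_MODULE_INFO_SYM) whose open() method allocates a device struct and fills it with function pointers, and the framework then calls only through those pointers. Below is a self-contained sketch of that pattern with hypothetical, heavily trimmed types (sketch_module, sketch_device). The "audio_hw_if" name check mirrors the AUDIO_HARDWARE_INTERFACE string as I recall it; treat it as an assumption.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdlib>
#include <cstring>

struct sketch_module;
struct sketch_device;

// Hypothetical mirror of hw_module_methods_t: only open().
struct sketch_module_methods {
    int (*open)(const sketch_module *module, const char *name, sketch_device **device);
};

// Hypothetical mirror of hw_module_t: an id plus the methods table.
struct sketch_module {
    const char *id;
    const sketch_module_methods *methods;
};

// Hypothetical mirror of audio_hw_device: a table of function pointers plus private state.
struct sketch_device {
    const sketch_module *module;
    int (*init_check)(const sketch_device *dev);
    int (*set_mic_mute)(sketch_device *dev, bool state);
    int (*close)(sketch_device *dev);
    bool micMute;
};

static int dev_init_check(const sketch_device *) { return 0; }
static int dev_set_mic_mute(sketch_device *dev, bool state) { dev->micMute = state; return 0; }
static int dev_close(sketch_device *dev) { free(dev); return 0; }

static int sketch_open(const sketch_module *module, const char *name, sketch_device **device) {
    if (strcmp(name, "audio_hw_if") != 0) return -EINVAL;  // assumption: interface name check
    sketch_device *dev = static_cast<sketch_device *>(calloc(1, sizeof(*dev)));
    if (!dev) return -ENOMEM;
    dev->module = module;
    dev->init_check = dev_init_check;
    dev->set_mic_mute = dev_set_mic_mute;
    dev->close = dev_close;
    *device = dev;
    return 0;
}

static const sketch_module_methods kMethods = { sketch_open };

// In a real HAL this object is exported as HAL_MODULE_INFO_SYM and located with dlsym().
const sketch_module SKETCH_MODULE_INFO = { "audio", &kMethods };
```

Because the framework only ever sees the function pointers, each platform can plug in its own AudioHardware behind the same table, which is exactly what createAudioHardware() does in the legacy wrapper.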

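Each platform has its own concrete AudioHardware implementation.

I am borrowing a block diagram from an Android training book (by Han Chao, if I remember correctly) that shows the layered architecture of AudioHardware.

As it shows, the input stream and the output stream each have a dedicated class at every layer, from the Java layer down to the HAL.

Inside AudioHardware, recording uses the AudioStreamIn side (here AudioStreamInALSA), for example in the following method: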

```cpp
status_t AudioHardware::AudioStreamInALSA::getNextBuffer(struct resampler_buffer *buffer)
{
    if (mInputFramesIn == 0) {
        TRACE_DRIVER_IN(DRV_PCM_READ)
        mReadStatus = pcm_read(mPcm, (void*) mInputBuf, AUDIO_HW_IN_PERIOD_SZ * frameSize());
        TRACE_DRIVER_OUT
        if (mReadStatus != 0) {
            buffer->raw = NULL;
            buffer->frame_count = 0;
            return mReadStatus;
        }
        mInputFramesIn = AUDIO_HW_IN_PERIOD_SZ;
    }

    buffer->frame_count = (buffer->frame_count > mInputFramesIn) ? mInputFramesIn : buffer->frame_count;
    buffer->i16 = mInputBuf + (AUDIO_HW_IN_PERIOD_SZ - mInputFramesIn) * mChannelCount;

    return mReadStatus;
}
```
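getNextBuffer() only calls pcm_read() once the previous period has been fully consumed; otherwise it hands out the unread tail of the period buffer. In the AOSP versions I have seen, the matching releaseBuffer() (not shown above) simply subtracts the frames the resampler actually consumed from mInputFramesIn. The sketch below models that bookkeeping; PeriodReaderSketch and BufferSketch are hypothetical names, and a callback stands in for pcm_read().

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Only the two resampler_buffer fields this logic touches.
struct BufferSketch {
    int16_t *i16;
    size_t frame_count;
};

class PeriodReaderSketch {
public:
    // readPeriod stands in for pcm_read(): fills `bytes` bytes, returns 0 on success.
    PeriodReaderSketch(size_t periodFrames, size_t channels,
                       std::function<int(int16_t *, size_t)> readPeriod)
        : mPeriodFrames(periodFrames), mChannels(channels),
          mBuf(periodFrames * channels), mRead(std::move(readPeriod)) {}

    int getNextBuffer(BufferSketch *buffer) {
        if (mFramesIn == 0) {  // previous period fully consumed: read a new one
            int status = mRead(mBuf.data(), mBuf.size() * sizeof(int16_t));
            if (status != 0) {
                buffer->i16 = nullptr;
                buffer->frame_count = 0;
                return status;
            }
            mFramesIn = mPeriodFrames;
        }
        if (buffer->frame_count > mFramesIn) buffer->frame_count = mFramesIn;
        // Point at the first unread frame (interleaved samples, so scale by channels).
        buffer->i16 = mBuf.data() + (mPeriodFrames - mFramesIn) * mChannels;
        return 0;
    }

    // The consumer reports how many frames it actually used.
    void releaseBuffer(const BufferSketch *buffer) { mFramesIn -= buffer->frame_count; }

private:
    size_t mPeriodFrames;
    size_t mChannels;
    size_t mFramesIn = 0;
    std::vector<int16_t> mBuf;
    std::function<int(int16_t *, size_t)> mRead;
};
```

Reading a whole period at a time keeps the number of kernel calls low, while the resampler can pull whatever frame count it needs.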

The pcm_read() call here comes from tinyalsa, an adaptation layer Google wrote specifically to drive the file interface that Linux ALSA exposes.
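Which node tinyalsa ends up opening is determined by the card number, the device number and the direction, following the naming given in the correction at the top of this article. Here is a small hypothetical helper that builds the same names, for example to check which node pcm_open(0, 1, PCM_OUT, ...) in the I2S build of openPcmOut_l() should touch. pcmNodePath() and controlNodePath() are illustration-only names, not tinyalsa functions.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Builds /dev/snd/pcmC<card>D<device><p|c>: 'p' for playback, 'c' for capture.
std::string pcmNodePath(unsigned card, unsigned device, bool capture) {
    char path[64];
    std::snprintf(path, sizeof(path), "/dev/snd/pcmC%uD%u%c", card, device, capture ? 'c' : 'p');
    return path;
}

// Builds /dev/snd/controlC<card>, the node behind mixer_open(card).
std::string controlNodePath(unsigned card) {
    char path[64];
    std::snprintf(path, sizeof(path), "/dev/snd/controlC%u", card);
    return path;
}
```

If pcm_open() fails, comparing the expected path against `ls /dev/snd` on the target is a quick first check that the dai_link you expect was actually registered as that device number.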

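The main tasks handled from AudioHardware down to tinyalsa are:

- Setting the current system audio mode: in-call, recording or playback
- Configuring the DAPM path: main_mic or aux_mic; speaker, receiver or headset; AIF1, AIF2 or another AIFx
- Configuring audio effects, volume and other parameters
- Reading captured PCM data and delivering PCM data for playback

On Android 4.0 the AudioSystem code is essentially complete, so bringing up a codec mostly comes down to three things:

- BSP configuration, such as I2C and micbias
- The kernel dai_link implementation and registration of snd_pcm_hardware
- The DAPM configuration, which is by far the largest amount of code

Below are some configuration tables for the Wolfson WM8994 on the 4412; they have been tested and work correctly.

DAPM stands for Dynamic Audio Power Management. Its main job here is to configure the muxes and mixers inside the codec so as to switch audio paths.

It really deserves a chapter of its own; for now, here is the code: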

```cpp
typedef struct AudioMixer_tag {
    const char *ctl;
    const int val;
} AudioMixer;

const AudioMixer device_out_SPK[] = {
#if defined (USES_I2S_AUDIO) || defined (USES_PCM_AUDIO)
    /*AIF1 to DAC1*/
    {"DAC1L Mixer AIF1.1 Switch", 1}, // "OFF", "ON"
    {"DAC1R Mixer AIF1.1 Switch", 1}, // "OFF", "ON"
    {"DAC1 Switch", 1}, // "OFF OFF", "ON ON"
    {"DAC1 Volume", 96}, // [0,1..96]:[MUTE,-71.62db..0db] 0610h_0611h
    /*DAC1 to SPK*/
    {"SPKL DAC1 Switch", 1}, // "OFF", "ON"
    {"SPKL DAC1 Volume", 1}, // [0..1]:[-3db..0db] 0022h
    {"SPKR DAC1 Switch", 1}, // "OFF", "ON"
    {"SPKR DAC1 Volume", 1}, // [0..1]:[-3db..0db] 0023h
    {"Speaker Mixer Volume", 3}, // [0..3]:[MUTE,-12db,-6db,0db] 0022h_0023h
    {"Speaker Volume", 63}, // [0..63]:[-57db..+6db] 0026h_0027h
    {"SPKL Boost SPKL Switch", 1}, // "OFF OFF", "ON ON"
    {"SPKR Boost SPKR Switch", 1}, // "OFF OFF", "ON ON"
    {"Speaker Boost Volume", 7}, // [0..7]:[0db..+12db] 0025h
    /*DAC1 to Headphone*/
    {"Right Headphone Mux", 1}, // "Mixer","DAC"
    {"Left Headphone Mux", 1}, // "Mixer","DAC"
    {"Headphone Switch", 1}, // "HP MUTE","UNMUTE"
#elif defined(USES_SPDIF_AUDIO)
#endif
    {NULL, 0}  // terminator
};

const AudioMixer device_out_RING_SPK[] = {
    /*AIF1 to DAC1*/
    {"DAC1L Mixer AIF1.1 Switch", 1}, // "OFF", "ON"
    {"DAC1R Mixer AIF1.1 Switch", 1}, // "OFF", "ON"
    {"DAC1 Switch", 1}, // "OFF OFF", "ON ON"
    {"DAC1 Volume", 96}, // [0,1..96]:[MUTE,-71.62db..0db] 0610h_0611h
    /*DAC1 to SPK*/
    {"SPKL DAC1 Switch", 1}, // "OFF", "ON"
    {"SPKL DAC1 Volume", 1}, // [0..1]:[-3db..0db] 0022h
    {"SPKR DAC1 Switch", 1}, // "OFF", "ON"
    {"SPKR DAC1 Volume", 1}, // [0..1]:[-3db..0db] 0023h
    {"Speaker Mixer Volume", 3}, // [0..3]:[MUTE,-12db,-6db,0db] 0022h_0023h
    {"Speaker Volume", 63}, // [0..63]:[-57db..+6db] 0026h_0027h
    {"SPKL Boost SPKL Switch", 1}, // "OFF OFF", "ON ON"
    {"SPKR Boost SPKR Switch", 1}, // "OFF OFF", "ON ON"
    {"Speaker Boost Volume", 7}, // [0..7]:[0db..+12db] 0025h
    {NULL, 0}  // terminator
};

const AudioMixer device_voice_RCV[] = {
#if defined (USES_I2S_AUDIO) || defined (USES_PCM_AUDIO)
    //Main_MIC(IN1L N_VMID) to MIXIN to ADC to DAC2 Mixer to AIF2(ADCDAT2)
    //Main_MIC(IN1L N_VMID) to MIXINL
    {"IN1L PGA IN1LN Switch", 1}, //OFF,IN1LN
    {"IN1L PGA IN1LP Switch", 1}, //VMID,IN1LP
    {"IN1L Switch", 1}, //[0..1]:[MUTE..UNMUTE] ,0018h:b7
    {"IN1L Volume", 12}, //[1..31]:[-16.5db..+30db],0018h
    {"MIXINL IN1L Switch", 1},
    {"MIXINL IN1L Volume", 1}, //[0..1]:[0db..+30db],0029h
    /*ADCL to AIF2ADC*/
    {"ADCL Mux", 0}, //ADC,DMIC
    {"Left Sidetone", 0}, //_D__DMIC1,DMIC2
    {"AIF2DAC2L Mixer Left Sidetone Switch", 1}, //,0604h
    //{"AIF2DAC2R Mixer Left Sidetone Switch", 1}, //,0605h
    {"DAC2 Left Sidetone Volume", 12}, //[0..12]:[-36db..0db],0603h
    {"DAC2 Right Sidetone Volume", 12}, //[0..12]:[-36db..0db],0603h
    //{"AIF2DAC2L Mixer AIF2 Switch", 1}, //,0604h  ++ test
    //{"AIF2DAC2R Mixer AIF2 Switch", 1}, //,0604h  ++ test
    {"AIF2ADCL Source", 0}, //Left,Right
    //{"AIF2ADCR Source", 0}, //Left,Right
    {"AIF2ADC Mux", 0}, //AIF2ADCDAT,AIF3DACDAT
    //{"AIF2ADC Volume", 119}, //[0,1..119]:[MUTE,-71.625db..+17.625db],0500h_0501h default:C0 eq 0db
    {"DAC2 Switch", 1}, //[0..1]:[UNMUTE..MUTE] 0612h_0613h
    {"DAC2 Volume", 96}, //[0,1..96]:[MUTE,-71.62db..0db] 0612h_0613h
    //BB_in AIF2DAC(DACDAT2) to DAC1_R to MIXOUTL_R to SPKMIXL_R to SPK
    /*MIXIN to MIXOUT for mic to hp test*/
    //{"Left Output Mixer Left Input Switch", 1}, // "OFF", "ON" ,002dh
    //{"Right Output Mixer Left Input Switch", 1}, // "OFF", "ON" ,002eh
    /*ADCL to DAC1 for sidetone*/
    {"DAC1L Mixer Left Sidetone Switch", 1},
    {"DAC1R Mixer Left Sidetone Switch", 1},
    {"DAC1 Left Sidetone Volume", 0}, //[0..12]:[-36db..0db],0603h
    {"DAC1 Right Sidetone Volume", 0}, //[0..12]:[-36db..0db],0603h
    /*AIF2DAC to DAC1*/
    {"AIF2DAC Mux", 0}, // AIF2DACDAT,AIF3DACDAT
    {"DAC1L Mixer AIF2 Switch", 1}, // "OFF", "ON" ,0601h
    {"DAC1R Mixer AIF2 Switch", 1}, // "OFF", "ON" ,0602h
    {"DAC1 Switch", 1}, // "OFF OFF", "ON ON"
    /*DAC1 to HP*/
    {"Left Output Mixer DAC Switch", 1}, // "OFF OFF", "ON ON"
    {"Right Output Mixer DAC Switch", 1}, // "OFF OFF", "ON ON"
    {"Output Switch", 1}, // "OFF OFF", "ON ON"
    {"Right Headphone Mux", 0}, // "Mixer","DAC"
    {"Left Headphone Mux", 0}, // "Mixer","DAC"
    {"Headphone Switch", 1}, // "HP MUTE","UNMUTE"
#elif defined(USES_SPDIF_AUDIO)
#endif
    {NULL, 0}  // terminator
};

const AudioMixer device_input_Main_Mic[] = {
#if defined (USES_I2S_AUDIO) || defined (USES_PCM_AUDIO)
    //Main_MIC(IN1L N_VMID) to MIXIN to ADC to DAC2 Mixer to AIF2(ADCDAT2)
    //Main_MIC(IN1L N_VMID) to MIXINL
    {"IN1L PGA IN1LN Switch", 1}, //OFF,IN1LN
    {"IN1L PGA IN1LP Switch", 1}, //VMID,IN1LP
    {"IN1L Switch", 1}, //[0..1]:[MUTE..UNMUTE] ,0018h:b7
    {"IN1L Volume", 12}, //[1..31]:[-16.5db..+30db],0018h
    {"MIXINL IN1L Switch", 1},
    {"MIXINL IN1L Volume", 1}, //[0..1]:[0db..+30db],0029h
    /*ADCL to AIF1ADC*/
    {"ADCL Mux", 0}, //ADC,DMIC
    {"AIF1ADCL Source", 0}, //Left,Right
    {"AIF1ADCR Source", 0}, //Left,Right
    {"AIF1ADC1 Volume", 119}, //[0,1..119]:[MUTE,-71.625db..+17.625db],0400h_0401h default:C0 eq 0db
    {"AIF1ADC1L DRC Switch", 1},
    {"AIF1ADC1R DRC Switch", 1},
    {"AIF1ADC1L Mixer ADC/DMIC Switch", 1},
    {"AIF1ADC1R Mixer ADC/DMIC Switch", 1},
#elif defined(USES_SPDIF_AUDIO)
#endif
    {NULL, 0}  // terminator
};
```

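Each {} entry holds two values separated by a comma. The first is actually made of two parts, the destination widget plus the control name ("dest ctrl + control ctrl"), with the source omitted: "DAC1L Mixer AIF1.1 Switch", for example, is the "AIF1.1 Switch" control on the "DAC1L Mixer" widget. The second value is usually a number.

Note that the number 1 here does not mean "true". It is an index value: the position within the control's list of options (see the comments, e.g. for "Right Headphone Mux" 1 selects "DAC"), while for volume controls it is a step within the listed range. You need to look up the corresponding definitions in the wm8994 driver.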
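A route table is applied by walking it until the {NULL, 0} terminator, looking up each control by name and writing its value. On the device this goes through tinyalsa (mixer_get_ctl_by_name(), as used in setMode() below, plus a value setter); the sketch swaps in a hypothetical in-memory MockMixer so the lookup logic can run anywhere. applyRoute() is a hypothetical name, not the HAL's actual function.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

// Same layout as the AudioMixer tables above.
typedef struct AudioMixer_tag {
    const char *ctl;
    const int val;
} AudioMixer;

// Hypothetical stand-in for the ALSA control device: control name -> current value.
struct MockMixer {
    std::map<std::string, int> controls;
};

// Apply a {NULL, 0}-terminated route table. Returns how many entries named a
// control the "driver" does not export (the real HAL would just log these).
int applyRoute(MockMixer &mixer, const AudioMixer *route) {
    int missing = 0;
    for (const AudioMixer *e = route; e->ctl != NULL; ++e) {
        auto it = mixer.controls.find(e->ctl);
        if (it == mixer.controls.end()) {
            ++missing;  // name typo, or a control this codec driver never registered
            continue;
        }
        it->second = e->val;  // for enums this is the option index, for volumes the step
    }
    return missing;
}
```

Counting misses rather than aborting matches the practical need during bring-up: one misspelled control name should not stop the rest of the route from being programmed, but you do want to see it.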

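Now back to AudioHardware.

File location: \device\samsung\smdk_common\lib_audio\AudioHardware.cpp

Pay particular attention to the functions below. There are many more, of course, so you will still need to work through the code yourself.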

```cpp
struct mixer *AudioHardware::openMixer_l()
{
    LOGV("openMixer_l() mMixerOpenCnt: %d", mMixerOpenCnt);
    if (mMixerOpenCnt++ == 0) {
        if (mMixer != NULL) {
            LOGE("openMixer_l() mMixerOpenCnt == 0 and mMixer == %p\n", mMixer);
            mMixerOpenCnt--;
            return NULL;
        }
        TRACE_DRIVER_IN(DRV_MIXER_OPEN)
        mMixer = mixer_open(0);
        TRACE_DRIVER_OUT
        if (mMixer == NULL) {
            LOGE("openMixer_l() cannot open mixer");
            mMixerOpenCnt--;
            return NULL;
        }
    }
    return mMixer;
}
```

```cpp
struct pcm *AudioHardware::openPcmOut_l()
{
    LOGD("openPcmOut_l() mPcmOpenCnt: %d", mPcmOpenCnt);
    if (mPcmOpenCnt++ == 0) {
        if (mPcm != NULL) {
            LOGE("openPcmOut_l() mPcmOpenCnt == 0 and mPcm == %p\n", mPcm);
            mPcmOpenCnt--;
            return NULL;
        }
        unsigned flags = PCM_OUT;

        struct pcm_config config = {
            channels : 2,
            rate : AUDIO_HW_OUT_SAMPLERATE,
            period_size : AUDIO_HW_OUT_PERIOD_SZ,
            period_count : AUDIO_HW_OUT_PERIOD_CNT,
            format : PCM_FORMAT_S16_LE,
            start_threshold : 0,
            stop_threshold : 0,
            silence_threshold : 0,
        };

        TRACE_DRIVER_IN(DRV_PCM_OPEN)
#if defined(USES_PCM_AUDIO) || defined(USES_SPDIF_AUDIO)
        mPcm = pcm_open(0, 0, flags, &config);
#elif defined(USES_I2S_AUDIO)
        mPcm = pcm_open(0, 1, flags, &config);
#endif
        TRACE_DRIVER_OUT
        if (!pcm_is_ready(mPcm)) {
            LOGE("openPcmOut_l() cannot open pcm_out driver: %s\n", pcm_get_error(mPcm));
            TRACE_DRIVER_IN(DRV_PCM_CLOSE)
            pcm_close(mPcm);
            TRACE_DRIVER_OUT
            mPcmOpenCnt--;
            mPcm = NULL;
        }
    }
    return mPcm;
}
```
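openMixer_l() and openPcmOut_l() share one pattern: a reference count guards a single handle, the first caller actually opens it, later callers reuse it, and a failed open rolls the count back. Below is a hypothetical model of that bookkeeping; RefCountedHandleSketch is an illustration-only name, the open function stands in for mixer_open()/pcm_open(), and close() stands in for the matching close path.

```cpp
#include <cassert>

class RefCountedHandleSketch {
public:
    // openFn stands in for mixer_open()/pcm_open(); returns true on success.
    explicit RefCountedHandleSketch(bool (*openFn)()) : mOpenFn(openFn) {}

    bool open() {
        if (mOpenCnt++ == 0) {   // first user: really open the device
            if (!mOpenFn()) {
                mOpenCnt--;      // roll back, as openMixer_l() does on failure
                return false;
            }
            mIsOpen = true;
        }
        return mIsOpen;          // later users share the existing handle
    }

    void close() {
        assert(mOpenCnt > 0);
        if (--mOpenCnt == 0) {
            mIsOpen = false;     // last user: the real code closes the device here
        }
    }

    int openCount() const { return mOpenCnt; }
    bool isOpen() const { return mIsOpen; }

private:
    bool (*mOpenFn)();
    int mOpenCnt = 0;
    bool mIsOpen = false;
};
```

The count matters because setMode() and the output stream can both need the PCM and mixer at the same time; whoever finishes first must not close them under the other.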

```cpp
const AudioMixer *AudioHardware::getInputRouteFromDevice(uint32_t device)
{
    if (mMicMute) {
        return device_input_MIC_OFF;
    }

    switch (device) {
    case AudioSystem::DEVICE_IN_BUILTIN_MIC:
        return device_input_Main_Mic;
    case AudioSystem::DEVICE_IN_WIRED_HEADSET:
        return device_input_Hands_Free_Mic;
    case AudioSystem::DEVICE_IN_BLUETOOTH_SCO_HEADSET:
        return device_input_BT_Sco_Mic;
    default:
        return device_input_MIC_OFF;
    }
}
```

```cpp
status_t AudioHardware::setMode(int mode)
{
    sp<AudioStreamOutALSA> spOut;
    sp<AudioStreamInALSA> spIn;
    status_t status;

    // Mutex acquisition order is always out -> in -> hw
    AutoMutex lock(mLock);

    spOut = mOutput;
    // spOut is not 0 here only if the output is active

    spIn = getActiveInput_l();
    while (spIn != 0) {
        int cnt = spIn->prepareLock();
        mLock.unlock();
        spIn->lock();
        mLock.lock();
        // make sure that another thread did not change input state while the
        // mutex is released
        if ((spIn == getActiveInput_l()) && (cnt == spIn->standbyCnt())) {
            break;
        }
        spIn->unlock();
        spIn = getActiveInput_l();
    }
    // spIn is not 0 here only if the input is active

    int prevMode = mMode;
    status = AudioHardwareBase::setMode(mode);
    LOGV("setMode() : new %d, old %d", mMode, prevMode);
    if (status == NO_ERROR) {
        bool modeNeedsCPActive = mMode == AudioSystem::MODE_IN_CALL ||
                                 mMode == AudioSystem::MODE_RINGTONE;
        // activate call clock in radio when entering in call or ringtone mode
        if (modeNeedsCPActive)
        {
            if ((!mActivatedCP) && (mSecRilLibHandle) && (connectRILDIfRequired() == OK)) {
                setCallClockSync(mRilClient, SOUND_CLOCK_START);
                mActivatedCP = true;
            }
        }

        if (mMode == AudioSystem::MODE_IN_CALL && !mInCallAudioMode) {
            if (spOut != 0) {
                LOGV("setMode() in call force output standby");
                spOut->doStandby_l();
            }
            if (spIn != 0) {
                LOGV("setMode() in call force input standby");
                spIn->doStandby_l();
            }

            LOGV("setMode() openPcmOut_l()");
            openPcmOut_l();
            openMixer_l();
            setInputSource_l(AUDIO_SOURCE_DEFAULT);
            setVoiceVolume_l(mVoiceVol);
            mInCallAudioMode = true;
        }
        if (mMode != AudioSystem::MODE_IN_CALL && mInCallAudioMode) {
            setInputSource_l(mInputSource);
            if (mMixer != NULL) {
                TRACE_DRIVER_IN(DRV_MIXER_GET)
                struct mixer_ctl *ctl = mixer_get_ctl_by_name(mMixer, "Playback Path");
                TRACE_DRIVER_OUT
                if (ctl != NULL) {
                    LOGV("setMode() reset Playback Path to RCV");
                    TRACE_DRIVER_IN(DRV_MIXER_SEL)
                    mixer_ctl_set_enum_by_string(ctl, "RCV");
                    TRACE_DRIVER_OUT
                }
            }
            LOGV("setMode() closePcmOut_l()");
            closeMixer_l();
            closePcmOut_l();

            if (spOut != 0) {
                LOGV("setMode() off call force output standby");
                spOut->doStandby_l();
            }
            if (spIn != 0) {
                LOGV("setMode() off call force input standby");
                spIn->doStandby_l();
            }

            mInCallAudioMode = false;
        }

        if (!modeNeedsCPActive) {
            if (mActivatedCP)
                mActivatedCP = false;
        }
    }

    // release the input stream lock taken in the loop above
    if (spIn != 0) {
        spIn->unlock();
    }

    return status;
}
```

setMode() in particular affects the state of the whole audio system, so make sure you understand it thoroughly.
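Stripped of locking and RIL details, the in-call part of setMode() boils down to two edge-triggered transitions guarded by mInCallAudioMode, plus a flag for the modem call clock. The sketch below models only that state logic; ModeStateSketch is a hypothetical name, the mode values are assumed to follow AudioSystem's ordering (NORMAL, RINGTONE, IN_CALL), and the strings simply record which side effects the real function performs.

```cpp
#include <cassert>
#include <string>
#include <vector>

enum ModeSketch { MODE_NORMAL = 0, MODE_RINGTONE = 1, MODE_IN_CALL = 2 };

class ModeStateSketch {
public:
    std::vector<std::string> log;  // side effects, in the order setMode() would perform them

    void setMode(ModeSketch mode) {
        mMode = mode;
        bool needsCP = (mMode == MODE_IN_CALL || mMode == MODE_RINGTONE);
        if (needsCP && !mActivatedCP) {
            log.push_back("start call clock");
            mActivatedCP = true;
        }
        if (mMode == MODE_IN_CALL && !mInCallAudioMode) {  // entering a call
            log.push_back("standby streams");
            log.push_back("open pcm out + mixer");
            mInCallAudioMode = true;
        }
        if (mMode != MODE_IN_CALL && mInCallAudioMode) {   // leaving a call
            log.push_back("close mixer + pcm out");
            log.push_back("standby streams");
            mInCallAudioMode = false;
        }
        if (!needsCP) mActivatedCP = false;
    }

    bool inCallAudio() const { return mInCallAudioMode; }

private:
    ModeSketch mMode = MODE_NORMAL;
    bool mInCallAudioMode = false;
    bool mActivatedCP = false;
};
```

Because both transitions are keyed on mInCallAudioMode rather than on the previous mode, calling setMode() twice with the same value is harmless, and going RINGTONE -> IN_CALL starts the clock only once.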

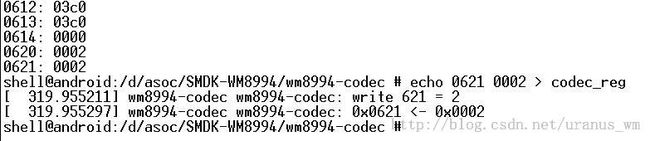

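Next comes the mixer configuration. Fortunately Wolfson implemented debugfs support in the driver, so the codec state can be inspected from a tty console:

```sh
# mount -t debugfs none /d
```

This mounts debugfs; then change into the codec's directory:

`cat codec_reg` shows every WM8994 register. The registers can also be modified at runtime, and changes take effect immediately.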

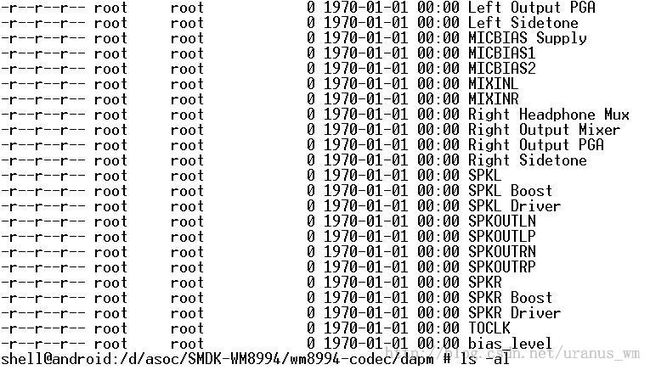

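The dapm directory lists all the mixers (and other widgets). Every DAPM entry we configure must show up here, otherwise it will not be recognized.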

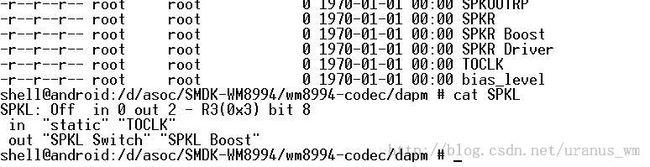

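You can cat each mixer to check its state:

Note that SPKL is currently Off. This means it is not on any enabled route; in other words, the route containing SPKL that you intended to enable is not active yet.

You then need to check whether that route is complete. An audio signal travels through a route the way current flows through a wire: it needs both an input and an output, and every branch must close the circuit.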
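The "every branch must close the circuit" rule can be stated as a graph check: a widget is powered only when it is reachable through connected paths from an active input endpoint and can also reach an active output endpoint. This is a simplified model of how DAPM decides power, not the kernel's implementation; PathSketch and poweredWidgets() are hypothetical names, and the widget names in the usage are illustrative rather than the real WM8994 graph.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// One edge in the audio graph; `connected` models the mixer switch or mux selection.
struct PathSketch {
    std::string source, sink;
    bool connected;
};

// Collect every widget reachable from `start` along connected edges,
// forward (source -> sink) or backward (sink -> source).
static void reach(const std::vector<PathSketch> &paths, const std::string &start,
                  bool forward, std::set<std::string> &seen) {
    if (!seen.insert(start).second) return;  // already visited
    for (const auto &p : paths) {
        if (!p.connected) continue;
        if (forward && p.source == start) reach(paths, p.sink, true, seen);
        if (!forward && p.sink == start) reach(paths, p.source, false, seen);
    }
}

// Powered = reachable from some input endpoint AND able to reach some output endpoint.
std::set<std::string> poweredWidgets(const std::vector<PathSketch> &paths,
                                     const std::vector<std::string> &inputs,
                                     const std::vector<std::string> &outputs) {
    std::set<std::string> fromIn, toOut, powered;
    for (const auto &in : inputs) reach(paths, in, true, fromIn);
    for (const auto &out : outputs) reach(paths, out, false, toOut);
    for (const auto &w : fromIn) {
        if (toOut.count(w)) powered.insert(w);
    }
    return powered;
}
```

This is why a single missing switch anywhere along the chain leaves every widget on it Off, not just the one after the gap.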

More on DAPM later. One more key point here: the config written for pcm_open() in AudioHardware:

```cpp
struct pcm_config config = {
    channels : 2,
    rate : AUDIO_HW_OUT_SAMPLERATE,
    period_size : AUDIO_HW_OUT_PERIOD_SZ,
    period_count : AUDIO_HW_OUT_PERIOD_CNT,
    format : PCM_FORMAT_S16_LE,
    start_threshold : 0,
    stop_threshold : 0,
    silence_threshold : 0,
};
```

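When I first started debugging I wasted a lot of time here. The key is how this config is set: it has to agree with codec_dai, cpu_dai and snd_pcm_hardware.

Check it carefully: a series of rule checks is applied to it when the PCM is opened with snd_pcm_open. I'll cover this in detail in the next chapter!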
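As a back-of-the-envelope aid: period_size is counted in frames, so one period occupies period_size * channels * bytes-per-sample bytes, and the whole ring buffer is that times period_count. Those sizes must fall within the limits the platform driver declares in snd_pcm_hardware (channels_min/max, period_bytes_min/max, periods_min/max, buffer_bytes_max). The constraints are enforced when the hw params are set, which tinyalsa's pcm_open() does right after opening the node. Below is a simplified, hypothetical single-pass version of that check: the structs only mirror a few fields, the kernel actually refines parameter ranges with rules, and the numbers in the usage are made up.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical mirror of a few pcm_config fields (bytes_per_sample = 2 for S16_LE).
struct PcmConfigSketch {
    unsigned channels, rate, period_size, period_count, bytes_per_sample;
};

// Hypothetical mirror of some snd_pcm_hardware limits registered by the platform driver.
struct HwLimitsSketch {
    unsigned channels_min, channels_max;
    size_t buffer_bytes_max, period_bytes_min, period_bytes_max;
    unsigned periods_min, periods_max;
};

// period_size is in frames; one frame = channels * bytes_per_sample bytes.
size_t periodBytes(const PcmConfigSketch &c) {
    return static_cast<size_t>(c.period_size) * c.channels * c.bytes_per_sample;
}

size_t bufferBytes(const PcmConfigSketch &c) { return periodBytes(c) * c.period_count; }

// Single-pass approximation of the kernel's hw_params constraint checks.
bool fitsHardware(const PcmConfigSketch &c, const HwLimitsSketch &hw) {
    return c.channels >= hw.channels_min && c.channels <= hw.channels_max &&
           periodBytes(c) >= hw.period_bytes_min && periodBytes(c) <= hw.period_bytes_max &&
           c.period_count >= hw.periods_min && c.period_count <= hw.periods_max &&
           bufferBytes(c) <= hw.buffer_bytes_max;
}
```

Working the numbers out like this before touching the device usually shows immediately which of AUDIO_HW_OUT_PERIOD_SZ or AUDIO_HW_OUT_PERIOD_CNT falls outside what the DMA driver declared.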