Installing and Configuring LZO on Hadoop 2.x (YARN)

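Today I tried to install and configure LZO on Hadoop 2.x (YARN) and ran into many pitfalls. Nearly all the material online is written for Hadoop 1.x, and there is almost nothing on using LZO with Hadoop 2.x, so I am recording the whole installation and configuration process here.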

1. Install LZO

Download LZO 2.06, build the 64-bit version, and sync it to the cluster.

```shell
wget http://www.oberhumer.com/opensource/lzo/download/lzo-2.06.tar.gz
tar -zxvf lzo-2.06.tar.gz
cd lzo-2.06
export CFLAGS=-m64
./configure --enable-shared --prefix=/usr/local/hadoop/lzo/
make && make test && make install
```

Sync /usr/local/hadoop/lzo/ to every node in the cluster.
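Because every node needs identical files, it can help to check each node after syncing. Below is a minimal Java sketch, not part of LZO or Hadoop; the class name `LzoInstallCheck` and the two expected paths are assumptions based on a default `make install` into the prefix above: the shared library named in the error log later in this post, and one header from the `lzo/` include directory that the hadoop-lzo build is pointed at via `C_INCLUDE_PATH`.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

class LzoInstallCheck {
    // Assumed layout after "make install" with --prefix=/usr/local/hadoop/lzo/.
    // Adjust these entries if your build installs different file names.
    static final String[] EXPECTED = {
        "lib/liblzo2.so.2",      // loaded at runtime via dlopen by libgplcompression
        "include/lzo/lzo1x.h"    // needed only when compiling hadoop-lzo
    };

    /** Returns the expected files that do not exist under the given prefix. */
    static List<String> missingFiles(Path prefix) {
        List<String> missing = new ArrayList<>();
        for (String rel : EXPECTED) {
            if (!Files.exists(prefix.resolve(rel))) {
                missing.add(rel);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        Path prefix = Paths.get(args.length > 0 ? args[0] : "/usr/local/hadoop/lzo");
        List<String> missing = missingFiles(prefix);
        if (missing.isEmpty()) {
            System.out.println("LZO install looks complete under " + prefix);
        } else {
            System.out.println("Missing under " + prefix + ": " + missing);
        }
    }
}
```

Running it on every node (for example over ssh) quickly shows a node the sync missed.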

2. Install Hadoop-LZO

Note: on Hadoop 1.x we followed Cloudera's documentation and built from a clone of https://github.com/kevinweil/hadoop-lzo.git, which is a fork of https://github.com/twitter/hadoop-lzo.

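However, the kevinweil version has not been updated in a long time and is built against Hadoop 1.x, so it cannot be used with Hadoop 2.x. Three months ago twitter/hadoop-lzo switched its build from Ant to Maven, and the Hadoop jars in its default dependencies are already 2.x. So clone twitter's hadoop-lzo and use Maven to build the jar and the native library.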

Before building, change the versions of hadoop-common and hadoop-mapreduce-client-core in the pom to 2.1.0-beta.

```shell
git clone https://github.com/twitter/hadoop-lzo.git
cd hadoop-lzo
export CFLAGS=-m64
export CXXFLAGS=-m64
export C_INCLUDE_PATH=/usr/local/hadoop/lzo/include
export LIBRARY_PATH=/usr/local/hadoop/lzo/lib
mvn clean package -Dmaven.test.skip=true
tar -cBf - -C target/native/Linux-amd64-64/lib . | tar -xBvf - -C /usr/local/hadoop/hadoop-2.1.0-beta/lib/native/
cp target/hadoop-lzo-0.4.18-SNAPSHOT.jar /usr/local/hadoop/hadoop-2.1.0-beta/share/hadoop/common/
```

After copying, lib/native/ contains:

```
-rw-r--r-- 1 hadoop hadoop 104206 Sep 2 10:44 libgplcompression.a
-rw-rw-r-- 1 hadoop hadoop 1121 Sep 2 10:44 libgplcompression.la
lrwxrwxrwx 1 hadoop hadoop 26 Sep 2 10:47 libgplcompression.so -> libgplcompression.so.0.0.0
lrwxrwxrwx 1 hadoop hadoop 26 Sep 2 10:47 libgplcompression.so.0 -> libgplcompression.so.0.0.0
-rwxrwxr-x 1 hadoop hadoop 67833 Sep 2 10:44 libgplcompression.so.0.0.0
-rw-rw-r-- 1 hadoop hadoop 835968 Aug 29 17:12 libhadoop.a
-rw-rw-r-- 1 hadoop hadoop 1482132 Aug 29 17:12 libhadooppipes.a
lrwxrwxrwx 1 hadoop hadoop 18 Aug 29 17:12 libhadoop.so -> libhadoop.so.1.0.0
-rwxrwxr-x 1 hadoop hadoop 465801 Aug 29 17:12 libhadoop.so.1.0.0
-rw-rw-r-- 1 hadoop hadoop 580384 Aug 29 17:12 libhadooputils.a
-rw-rw-r-- 1 hadoop hadoop 273642 Aug 29 17:12 libhdfs.a
lrwxrwxrwx 1 hadoop hadoop 16 Aug 29 17:12 libhdfs.so -> libhdfs.so.0.0.0
-rwxrwxr-x 1 hadoop hadoop 181171 Aug 29 17:12 libhdfs.so.0.0.0
```

Sync hadoop-lzo-0.4.18-SNAPSHOT.jar and /usr/local/hadoop/hadoop-2.1.0-beta/lib/native/ to the whole cluster.
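The libraries in lib/native/ are loaded through JNI. Assuming hadoop-lzo's GPLNativeCodeLoader calls `System.loadLibrary("gplcompression")` (consistent with the "Loaded native gpl library" log line shown later), the JVM maps that name to a platform file name and looks for it in the directories listed in `java.library.path`. The hypothetical `JniLibraryLookup` helper below reproduces that lookup as a diagnostic sketch; it is not part of Hadoop or hadoop-lzo.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

class JniLibraryLookup {
    /** Returns every file on the given library path that matches the JNI name libName. */
    static List<File> findOnPath(String libName, String libraryPath) {
        // On Linux, "gplcompression" maps to "libgplcompression.so".
        String fileName = System.mapLibraryName(libName);
        List<File> hits = new ArrayList<>();
        for (String dir : libraryPath.split(File.pathSeparator)) {
            if (dir.isEmpty()) continue;
            File candidate = new File(dir, fileName);
            // isFile() follows symlinks, so libgplcompression.so -> .so.0.0.0 counts.
            if (candidate.isFile()) hits.add(candidate);
        }
        return hits;
    }

    public static void main(String[] args) {
        String path = System.getProperty("java.library.path", "");
        System.out.println("Looking for " + System.mapLibraryName("gplcompression") + " in " + path);
        System.out.println("Found: " + findOnPath("gplcompression", path));
    }
}
```

Start it with `-Djava.library.path=/usr/local/hadoop/hadoop-2.1.0-beta/lib/native` to confirm the copied library is visible on that node.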

3. Set environment variables

Add to hadoop-env.sh:

```shell
export LD_LIBRARY_PATH=/usr/local/hadoop/lzo/lib
```

Add to core-site.xml:

```xml
<property>
  <name>io.compression.codecs</name>
  <value>org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.DefaultCodec,com.hadoop.compression.lzo.LzoCodec,com.hadoop.compression.lzo.LzopCodec,org.apache.hadoop.io.compress.BZip2Codec</value>
</property>
<property>
  <name>io.compression.codec.lzo.class</name>
  <value>com.hadoop.compression.lzo.LzoCodec</value>
</property>
```
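`io.compression.codecs` is one comma-separated string of codec class names, so a typo or a dropped comma silently unregisters a codec. A small Java sketch that parses such a value and reports which hadoop-lzo codecs are missing; `CodecListCheck` is a hypothetical helper, and trimming whitespace around names is this sketch's own choice, not a claim about how Hadoop parses the property.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

class CodecListCheck {
    static final List<String> LZO_CODECS = Arrays.asList(
        "com.hadoop.compression.lzo.LzoCodec",
        "com.hadoop.compression.lzo.LzopCodec");

    /** Splits a comma-separated codec list into trimmed, non-empty class names. */
    static Set<String> parse(String value) {
        Set<String> codecs = new LinkedHashSet<>();
        for (String s : value.split(",")) {
            String name = s.trim();
            if (!name.isEmpty()) codecs.add(name);
        }
        return codecs;
    }

    /** Returns the hadoop-lzo codec classes that the value does not register. */
    static List<String> missingLzoCodecs(String value) {
        Set<String> codecs = parse(value);
        List<String> missing = new ArrayList<>();
        for (String c : LZO_CODECS) {
            if (!codecs.contains(c)) missing.add(c);
        }
        return missing;
    }

    public static void main(String[] args) {
        String value = "org.apache.hadoop.io.compress.GzipCodec,"
            + "org.apache.hadoop.io.compress.DefaultCodec,"
            + "com.hadoop.compression.lzo.LzoCodec,"
            + "com.hadoop.compression.lzo.LzopCodec,"
            + "org.apache.hadoop.io.compress.BZip2Codec";
        System.out.println("Missing LZO codecs: " + missingLzoCodecs(value));
    }
}
```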

Add to mapred-site.xml:

```xml
<property>
  <name>mapred.compress.map.output</name>
  <value>true</value>
</property>
<property>
  <name>mapred.map.output.compression.codec</name>
  <value>com.hadoop.compression.lzo.LzoCodec</value>
</property>
<property>
  <name>mapred.child.env</name>
  <value>LD_LIBRARY_PATH=/usr/local/hadoop/lzo/lib</value>
</property>
```

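Setting LD_LIBRARY_PATH through mapred.child.env in mapred-site.xml is essential. hadoop-lzo loads libgplcompression.so through JNI (searching java.library.path), and libgplcompression.so then loads liblzo2.so with the dlopen() library function, which looks in the directories listed in the LD_LIBRARY_PATH environment variable, among other locations. The container therefore needs LD_LIBRARY_PATH set at launch so that LzoCodec can load the native-lzo library. Without it, the container's syslog reports the following errors: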

```
2013-09-02 11:20:12,004 INFO [main] com.hadoop.compression.lzo.GPLNativeCodeLoader: Loaded native gpl library
2013-09-02 11:20:12,006 WARN [main] com.hadoop.compression.lzo.LzoCompressor: java.lang.UnsatisfiedLinkError: Cannot load liblzo2.so.2 (liblzo2.so.2: cannot open shared object file: No such file or directory)!
2013-09-02 11:20:12,006 ERROR [main] com.hadoop.compression.lzo.LzoCodec: Failed to load/initialize native-lzo library
```

Sync hadoop-env.sh, core-site.xml, and mapred-site.xml to the cluster.
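To see why a missing LD_LIBRARY_PATH produces that UnsatisfiedLinkError, here is a simplified Java model of only the LD_LIBRARY_PATH step of the dynamic loader's search. It is an illustration under stated assumptions, not how glibc is implemented: the real loader also consults RPATH/RUNPATH, /etc/ld.so.cache and the default directories such as /lib and /usr/lib, and `LdLibraryPathLookup` is a hypothetical name.

```java
import java.io.File;
import java.util.Map;
import java.util.Optional;

class LdLibraryPathLookup {
    /**
     * Returns the first directory in env's LD_LIBRARY_PATH that contains soName.
     * Models only the LD_LIBRARY_PATH step of the real loader's search order.
     */
    static Optional<File> resolve(String soName, Map<String, String> env) {
        String path = env.get("LD_LIBRARY_PATH");
        if (path == null) return Optional.empty();  // the situation in the container error above
        for (String dir : path.split(":")) {
            // glibc treats an empty entry as the current directory; this sketch skips it.
            if (dir.isEmpty()) continue;
            File candidate = new File(dir, soName);
            if (candidate.isFile()) return Optional.of(candidate);
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Check the environment this JVM was started with.
        Optional<File> hit = resolve("liblzo2.so.2", System.getenv());
        System.out.println(hit.map(f -> "found " + f)
            .orElse("liblzo2.so.2 not on LD_LIBRARY_PATH"));
    }
}
```

Running it with and without `LD_LIBRARY_PATH=/usr/local/hadoop/lzo/lib` exported shows the difference the mapred.child.env setting makes.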

LzoCodec loads the gplcompression and LZO native libraries in its static initializer:

```java
static {
    if (GPLNativeCodeLoader.isNativeCodeLoaded()) {
        nativeLzoLoaded = LzoCompressor.isNativeLzoLoaded() &&
                          LzoDecompressor.isNativeLzoLoaded();
        if (nativeLzoLoaded) {
            LOG.info("Successfully loaded & initialized native-lzo library [hadoop-lzo rev " + getRevisionHash() + "]");
        } else {
            LOG.error("Failed to load/initialize native-lzo library");
        }
    } else {
        LOG.error("Cannot load native-lzo without native-hadoop");
    }
}
```

LzoCompressor and LzoDecompressor call the native method initIDs. In impl/lzo/LzoCompressor.c, that method loads liblzo2.so:

```c
Java_com_hadoop_compression_lzo_LzoCompressor_initIDs(
    JNIEnv *env, jclass class
) {
    // Load liblzo2.so. HADOOP_LZO_LIBRARY is a bare file name (liblzo2.so.2 in
    // this build), so dlopen searches LD_LIBRARY_PATH among other locations.
    liblzo2 = dlopen(HADOOP_LZO_LIBRARY, RTLD_LAZY | RTLD_GLOBAL);
    if (!liblzo2) {
        char* msg = (char*)malloc(1000);
        snprintf(msg, 1000, "%s (%s)!", "Cannot load " HADOOP_LZO_LIBRARY, dlerror());
        THROW(env, "java/lang/UnsatisfiedLinkError", msg);
        return;
    }

    LzoCompressor_clazz = (*env)->GetStaticFieldID(env, class, "clazz",
                                                   "Ljava/lang/Class;");
    LzoCompressor_finish = (*env)->GetFieldID(env, class, "finish", "Z");
    LzoCompressor_finished = (*env)->GetFieldID(env, class, "finished", "Z");
    LzoCompressor_uncompressedDirectBuf = (*env)->GetFieldID(env, class,
                                                             "uncompressedDirectBuf",
                                                             "Ljava/nio/ByteBuffer;");
    LzoCompressor_uncompressedDirectBufLen = (*env)->GetFieldID(env, class,
                                                                "uncompressedDirectBufLen", "I");
    LzoCompressor_compressedDirectBuf = (*env)->GetFieldID(env, class,
                                                           "compressedDirectBuf",
                                                           "Ljava/nio/ByteBuffer;");
    LzoCompressor_directBufferSize = (*env)->GetFieldID(env, class,
                                                        "directBufferSize", "I");
    LzoCompressor_lzoCompressor = (*env)->GetFieldID(env, class,
                                                     "lzoCompressor", "J");
    LzoCompressor_lzoCompressionLevel = (*env)->GetFieldID(env, class,
                                                           "lzoCompressionLevel", "I");
    LzoCompressor_workingMemoryBufLen = (*env)->GetFieldID(env, class,
                                                           "workingMemoryBufLen", "I");
    LzoCompressor_workingMemoryBuf = (*env)->GetFieldID(env, class,
                                                        "workingMemoryBuf",
                                                        "Ljava/nio/ByteBuffer;");

    // record lzo library version
    void* lzo_version_ptr = NULL;
    LOAD_DYNAMIC_SYMBOL(lzo_version_ptr, env, liblzo2, "lzo_version");
    liblzo2_version = (NULL == lzo_version_ptr) ? 0
        : (jint) ((unsigned (__LZO_CDECL *)())lzo_version_ptr)();
}
```

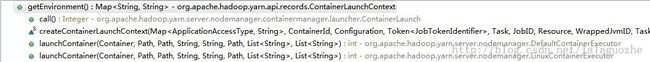

When the container launch context is created, mapred.child.env is read and added to the child process's environment variables.
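The `mapred.child.env` value is a comma-separated list of `NAME=value` pairs. Below is a simplified Java sketch of turning that string into entries of a container's environment map. `ChildEnvParser` is a hypothetical name, not Hadoop's class. Hadoop's real handling also supports forms such as `B=$B:c` to extend an inherited variable, which this sketch does not expand, and because it splits on commas, values cannot contain commas.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class ChildEnvParser {
    /** Parses a comma-separated "NAME=value" list, as used by mapred.child.env. */
    static Map<String, String> parse(String spec) {
        Map<String, String> env = new LinkedHashMap<>();
        if (spec == null) return env;
        for (String entry : spec.split(",")) {
            int eq = entry.indexOf('=');
            if (eq <= 0) continue;  // skip malformed entries that have no variable name
            env.put(entry.substring(0, eq).trim(), entry.substring(eq + 1).trim());
        }
        return env;
    }

    public static void main(String[] args) {
        Map<String, String> containerEnv = new LinkedHashMap<>();
        containerEnv.put("JAVA_HOME", "/usr/java/default");  // hypothetical pre-existing entry
        containerEnv.putAll(parse("LD_LIBRARY_PATH=/usr/local/hadoop/lzo/lib"));
        System.out.println(containerEnv);
    }
}
```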

4. Test reading LZO with MapReduce

Create a table lzo_test in Hive:

```sql
CREATE TABLE lzo_test(
  col String
)
STORED AS INPUTFORMAT "com.hadoop.mapred.DeprecatedLzoTextInputFormat"
OUTPUTFORMAT "org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat";
```

Download the lzop tool and load an .lzo file into the lzo_test table. Both "select * from lzo_test" and "select count(1) from lzo_test" return correct results.

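You can also generate the .lzo.index files with either a distributed MapReduce job (DistributedLzoIndexer) or a single local process (LzoIndexer):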

```shell
hadoop jar /usr/local/hadoop/hadoop-2.1.0-beta/share/hadoop/common/hadoop-lzo-0.4.18-SNAPSHOT.jar com.hadoop.compression.lzo.DistributedLzoIndexer /user/hive/warehouse/lzo_test/
hadoop jar /usr/local/hadoop/hadoop-2.1.0-beta/share/hadoop/common/hadoop-lzo-0.4.18-SNAPSHOT.jar com.hadoop.compression.lzo.LzoIndexer /user/hive/warehouse/lzo_test/
```
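The index exists to make LZO files splittable. As I understand hadoop-lzo, an .lzo file without an index is read by a single mapper. With an .lzo.index file, which records the byte offsets where compressed blocks start, the input format can cut the file into splits and move each split's start forward to the next block boundary so decompression begins at a valid block. The Java sketch below illustrates only that alignment step. `LzoSplitAlign`, `alignToBlock`, and the offsets are hypothetical, and reading the real index file is left out.

```java
import java.util.Arrays;

class LzoSplitAlign {
    /**
     * Given sorted block-start offsets from an index, returns the first offset >= pos,
     * or -1 if no block starts at or after pos (such a split has nothing to read).
     */
    static long alignToBlock(long[] blockOffsets, long pos) {
        int i = Arrays.binarySearch(blockOffsets, pos);
        if (i < 0) i = -i - 1;  // convert "not found" to the insertion point
        return i < blockOffsets.length ? blockOffsets[i] : -1;
    }

    public static void main(String[] args) {
        long[] index = {42L, 262_186L, 524_330L};  // hypothetical block-start offsets
        long[] splitStarts = {0L, 100_000L, 300_000L, 600_000L};
        for (long start : splitStarts) {
            System.out.println("split at " + start + " -> starts reading at " + alignToBlock(index, start));
        }
    }
}
```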

Original link: http://blog.csdn.net/lalaguozhe/article/details/10912527. Please credit the source when reposting.