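WebRTC VideoEngine: Local Video Data Processing (Encode)

As analyzed in the previous post, video data is passed from VideoCaptureInput to ViEEncoder through the DeliverFrame function for processing.

This post looks in detail at how ViEEncoder processes that video data: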

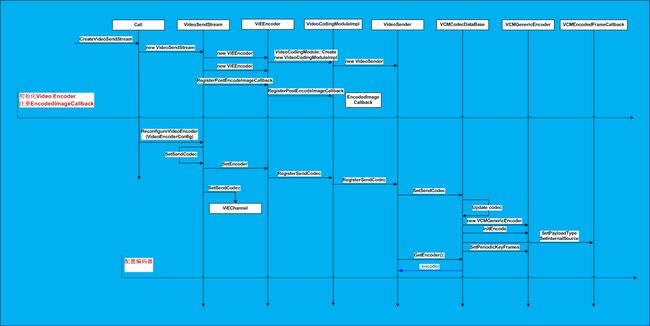

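ViEEncoder object initialization

RunTest() in End_to_End_tests creates a VideoSendStream::Config send_config and defines pre_encode_callback_, which preprocesses frames before they are sent to the encoder. The callback reaches ViEEncoder through this call sequence: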

Call::CreateVideoSendStream(send_config, ..)

new VideoSendStream(.., config,..)

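new ViEEncoder(.., config.pre_encode_callback, ..)

For example, for an object a of a custom class A to use this feature, implement it as follows:

- A must inherit from the I420FrameCallback class

- Override the FrameCallback method to do the required preprocessing; you can write it yourself or easily integrate an existing image processing library (OpenCV, etc.)

- Create a VideoSendStream::Config send_config and initialize pre_encode_callback_

- Create a VideoSendStream object from send_config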
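The steps in this list can be sketched as follows. This is a minimal illustration, not WebRTC code: VideoFrame, I420FrameCallback and SendConfig are simplified stand-ins that only mirror the shape of the real types, and the luma inversion is an arbitrary placeholder for whatever preprocessing you need.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Stand-in for webrtc::VideoFrame: only a luma plane, for illustration.
struct VideoFrame {
  int width = 0;
  int height = 0;
  std::vector<uint8_t> y_plane;  // width * height luma samples
};

// Stand-in mirroring the shape of webrtc::I420FrameCallback.
class I420FrameCallback {
 public:
  virtual ~I420FrameCallback() = default;
  // Called with each frame before encoding; may modify it in place.
  virtual void FrameCallback(VideoFrame* video_frame) = 0;
};

// The custom class A from the list: here it inverts the luma plane.
class A : public I420FrameCallback {
 public:
  void FrameCallback(VideoFrame* video_frame) override {
    for (uint8_t& sample : video_frame->y_plane) {
      sample = static_cast<uint8_t>(255 - sample);
    }
    ++frames_seen_;
  }
  size_t frames_seen() const { return frames_seen_; }

 private:
  size_t frames_seen_ = 0;
};

// Stand-in for the relevant field of VideoSendStream::Config.
struct SendConfig {
  I420FrameCallback* pre_encode_callback = nullptr;  // not owned
};
```

In this sketch the config only stores a raw pointer, so the object a has to outlive whatever stream is created from the config.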

ViEEncoder::DeliverFrame

Delivers video data to the encoder.

// Pass frame via preprocessor.

const int ret = vpm_->PreprocessFrame(video_frame, &decimated_frame);

VideoProcessingModuleImpl::PreprocessFrame

VPMFramePreprocessor::PreprocessFrame

- resize if needed (VPMSimpleSpatialResampler::ResampleFrame(frame, &resampled_frame_))

- content analysis (VPMContentAnalysis::ComputeContentMetrics)

Preprocess the data before it enters the encoder; the existing code does not ship an implementation of this hook:

if (pre_encode_callback_) {

....

pre_encode_callback_->FrameCallback(decimated_frame);

}

Send the prepared data to the encoder:

vcm_->AddVideoFrame(*output_frame);

Call chain:

VideoCodingModule::AddVideoFrame

VideoCodingModuleImpl::AddVideoFrame

VideoSender::AddVideoFrame
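The three stages of ViEEncoder::DeliverFrame described above (preprocess, optional pre-encode hook, hand-off to the encoder) can be condensed into a toy pipeline. Everything here is hypothetical: Frame and Pipeline are not WebRTC types, the resize only rewrites dimensions, and the real code has extra branches (frame dropping, error handling) that this sketch leaves out.

```cpp
#include <functional>
#include <vector>

// Hypothetical minimal frame: just dimensions.
struct Frame {
  int width;
  int height;
};

// Hypothetical stand-in for the send path; records what reaches the encoder.
struct Pipeline {
  int target_width;
  int target_height;
  std::function<void(Frame*)> pre_encode_callback;  // may be empty
  std::vector<Frame> encoder_input;                 // frames handed to the encoder

  void DeliverFrame(const Frame& captured) {
    // 1. Preprocess: resample to the target size if the sizes differ.
    Frame frame = captured;
    if (frame.width != target_width || frame.height != target_height) {
      frame.width = target_width;
      frame.height = target_height;
    }
    // 2. Optional user hook, run just before encoding.
    if (pre_encode_callback) {
      pre_encode_callback(&frame);
    }
    // 3. Hand the prepared frame to the encoder.
    encoder_input.push_back(frame);
  }
};
```

The hook runs on the already resampled frame, which matches the order of the calls quoted in this section.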

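Encoder and encoding parameter configuration

Creating the config:

Encoder selection, encoder parameters, and the callback for encoded output data are all configured here.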

VideoSendStream::Config send_config

- EncoderSettings(payload_name, payload_type, VideoEncoder object (selects the encoder type, e.g. H264))

- Rtp(max_packet_size, RtpExtension, NackConfig, FecConfig, Rtx())

- send_transport //Transport for outgoing packets.

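- LoadObserver* overuse_callback: reports the current system load, computed from the jitter of incoming capture frames

- I420FrameCallback* pre_encode_callback: preprocessing hook applied to frames before encoding

- EncodedFrameObserver* post_encode_callback: handles the encoded output frames

- VideoRenderer* local_renderer: renderer for local preview

- VideoCaptureInput* Input(): video data input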

VideoEncoderConfig encoder_config

- vector<VideoStream> streams: general parameters of each video stream (width, height, framerate, bitrate (min/max/target), qp)

Creating the encoder, following the implementation in EndToEndTest:
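To make the constraints on these per-stream parameters concrete, here is a small sketch of the sanity checks a stream has to pass. The VideoStream struct is a simplified stand-in for the WebRTC type with the same field names, and IsValidStream is a hypothetical helper; the conditions themselves are the RTC_DCHECK conditions that appear in ReconfigureVideoEncoder later in this post.

```cpp
// Simplified stand-in for webrtc::VideoStream (bitrates in bits per second).
struct VideoStream {
  int width;
  int height;
  int max_framerate;
  int min_bitrate_bps;
  int target_bitrate_bps;
  int max_bitrate_bps;
  int max_qp;
};

// Hypothetical helper: true when the stream satisfies
// positive size and framerate, and 0 <= min <= target <= max bitrate.
bool IsValidStream(const VideoStream& s) {
  return s.width > 0 && s.height > 0 && s.max_framerate > 0 &&
         s.min_bitrate_bps >= 0 &&
         s.target_bitrate_bps >= s.min_bitrate_bps &&
         s.max_bitrate_bps >= s.target_bitrate_bps &&
         s.max_qp >= 0;
}
```

A stream whose target bitrate is below its minimum bitrate, for instance, fails this check.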

rtc::scoped_ptr< VideoEncoder > encoder(

VideoEncoder::Create(VideoEncoder::kVp8));

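// Pass a different argument to create a different encoder as needed

//VP8Encoder::Create();

send_config_.encoder_settings.encoder = encoder.get();

This creates a VideoEncoder object and stores it in VideoSendStream::Config. The encoder is then registered through the following call sequence: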

CreateVideoSendStream(send_config_, ...);

VideoSendStream::VideoSendStream(..., config, ...);

vie_encoder_->RegisterExternalEncoder(

config.encoder_settings.encoder, ...);

ViEEncoder::RegisterExternalEncoder(encoder, ...);

VideoCodingModuleImpl::RegisterExternalEncoder(externalEncoder, ...);

VideoSender::RegisterExternalEncoder(externalEncoder, ...);

VCMCodecDataBase::RegisterExternalEncoder(external_encoder, ...);

The encoder is finally stored in the external_encoder_ member variable of VCMCodecDataBase.
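The end of this registration chain amounts to storing a raw pointer. The sketch below shows that idea with stand-in types: VideoEncoder, FakeH264Encoder and CodecDatabase are hypothetical simplifications, and std::unique_ptr plays the role that rtc::scoped_ptr has in the snippets above. It assumes, as the encoder.get() call suggests, that the caller keeps ownership of the encoder.

```cpp
#include <cstdint>
#include <memory>
#include <string>

// Stand-in for webrtc::VideoEncoder.
class VideoEncoder {
 public:
  virtual ~VideoEncoder() = default;
  virtual std::string Name() const = 0;
};

// Hypothetical encoder used only for this illustration.
class FakeH264Encoder : public VideoEncoder {
 public:
  std::string Name() const override { return "H264"; }
};

// Hypothetical, simplified codec database: keeps a non-owning pointer.
class CodecDatabase {
 public:
  void RegisterExternalEncoder(VideoEncoder* encoder, uint8_t payload_type) {
    external_encoder_ = encoder;
    payload_type_ = payload_type;
  }
  VideoEncoder* external_encoder() const { return external_encoder_; }
  uint8_t payload_type() const { return payload_type_; }

 private:
  VideoEncoder* external_encoder_ = nullptr;  // owned by the caller
  uint8_t payload_type_ = 0;
};
```

Because the database does not own the encoder in this sketch, the smart pointer that created it must stay alive for as long as the database may use it.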

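Initializing the VideoSendStream object

This object is important: every operation on outgoing (uplink) data starts here:

- ViEEncoder initialization

- ViEChannel initialization

- VideoCaptureInput initialization

- EncodedFrameObserver: handling of the encoded output bitstream

The constructor implementation: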

VideoSendStream::VideoSendStream(

int num_cpu_cores,

ProcessThread* module_process_thread,

CallStats* call_stats,

CongestionController* congestion_controller,

const VideoSendStream::Config& config,

const VideoEncoderConfig& encoder_config,

const std::map<uint32_t, RtpState>& suspended_ssrcs)

: stats_proxy_(Clock::GetRealTimeClock(), config),

transport_adapter_(config.send_transport),

encoded_frame_proxy_(config.post_encode_callback),

config_(config),

suspended_ssrcs_(suspended_ssrcs),

module_process_thread_(module_process_thread),

call_stats_(call_stats),

congestion_controller_(congestion_controller),

encoder_feedback_(new EncoderStateFeedback()),

use_config_bitrate_(true) {

// Set up Call-wide sequence numbers, if configured for this send stream.

TransportFeedbackObserver* transport_feedback_observer = nullptr;

for (const RtpExtension& extension : config.rtp.extensions) {

if (extension.name == RtpExtension::kTransportSequenceNumber) {

transport_feedback_observer =

congestion_controller_->GetTransportFeedbackObserver();

break;

}

}

// SSRC list for this stream

const std::vector<uint32_t>& ssrcs = config.rtp.ssrcs;

// Initialize ViEEncoder

vie_encoder_.reset(new ViEEncoder(

num_cpu_cores, module_process_thread_, &stats_proxy_,

config.pre_encode_callback, congestion_controller_->pacer(),

congestion_controller_->bitrate_allocator()));

RTC_CHECK(vie_encoder_->Init());

// Initialize ViEChannel

vie_channel_.reset(new ViEChannel(

num_cpu_cores, config.send_transport, module_process_thread_,

encoder_feedback_->GetRtcpIntraFrameObserver(),

congestion_controller_->GetBitrateController()->

CreateRtcpBandwidthObserver(),

transport_feedback_observer,

congestion_controller_->GetRemoteBitrateEstimator(false),

call_stats_->rtcp_rtt_stats(), congestion_controller_->pacer(),

congestion_controller_->packet_router(), ssrcs.size(), true));

// Observe call statistics

call_stats_->RegisterStatsObserver(vie_channel_->GetStatsObserver());

// Start the encoder threads and share the channel's payload router and protection callback

vie_encoder_->StartThreadsAndSetSharedMembers(

vie_channel_->send_payload_router(),

vie_channel_->vcm_protection_callback());

// Set the first SSRC on the encoder

std::vector<uint32_t> first_ssrc(1, ssrcs[0]);

vie_encoder_->SetSsrcs(first_ssrc);

// Configure RTP header extensions (timestamp offset, CVO, etc.)

for (size_t i = 0; i < config_.rtp.extensions.size(); ++i) {

const std::string& extension = config_.rtp.extensions[i].name;

int id = config_.rtp.extensions[i].id;

// One-byte-extension local identifiers are in the range 1-14 inclusive.

RTC_DCHECK_GE(id, 1);

RTC_DCHECK_LE(id, 14);

if (extension == RtpExtension::kTOffset) {

RTC_CHECK_EQ(0, vie_channel_->SetSendTimestampOffsetStatus(true, id));

} else if (extension == RtpExtension::kAbsSendTime) {

RTC_CHECK_EQ(0, vie_channel_->SetSendAbsoluteSendTimeStatus(true, id));

} else if (extension == RtpExtension::kVideoRotation) {

//CVO

RTC_CHECK_EQ(0, vie_channel_->SetSendVideoRotationStatus(true, id));

} else if (extension == RtpExtension::kTransportSequenceNumber) {

RTC_CHECK_EQ(0, vie_channel_->SetSendTransportSequenceNumber(true, id));

} else {

RTC_NOTREACHED() << "Registering unsupported RTP extension.";

}

}

// Configure ViEChannel and ViEEncoder

vie_channel_->SetProtectionMode(…);

vie_encoder_->UpdateProtectionMethod(…);

// Configure SSRCs

ConfigureSsrcs();

vie_channel_->SetRTCPCName(config_.rtp.c_name.c_str());

// Initialize the VideoCaptureInput object

input_.reset(new internal::VideoCaptureInput(

module_process_thread_, vie_encoder_.get(), config_.local_renderer,

&stats_proxy_, this, config_.encoding_time_observer));

// Set the MTU: max RTP packet size plus 28 bytes of packet overhead

vie_channel_->SetMTU(static_cast<uint16_t>(config_.rtp.max_packet_size + 28));

// Register the encoder (the "external" in the name is rather misleading)

RTC_CHECK_EQ(0, vie_encoder_->RegisterExternalEncoder(

config.encoder_settings.encoder,

config.encoder_settings.payload_type,

config.encoder_settings.internal_source));

// Configure the encoder

RTC_CHECK(ReconfigureVideoEncoder(encoder_config));

// Observe send-side delay

vie_channel_->RegisterSendSideDelayObserver(&stats_proxy_);

// Register the callback for encoded output data

if (config_.post_encode_callback)

vie_encoder_->RegisterPostEncodeImageCallback(&encoded_frame_proxy_);

// Suspend the stream when the available bitrate falls below the minimum

if (config_.suspend_below_min_bitrate)

vie_encoder_->SuspendBelowMinBitrate();

// Register the encoder with the congestion controller and the encoder feedback

congestion_controller_->AddEncoder(vie_encoder_.get());

encoder_feedback_->AddEncoder(ssrcs, vie_encoder_.get());

// Register callbacks that report RTP/RTCP send statistics

vie_channel_->RegisterSendChannelRtcpStatisticsCallback(&stats_proxy_);

vie_channel_->RegisterSendChannelRtpStatisticsCallback(&stats_proxy_);

vie_channel_->RegisterRtcpPacketTypeCounterObserver(&stats_proxy_);

vie_channel_->RegisterSendBitrateObserver(&stats_proxy_);

vie_channel_->RegisterSendFrameCountObserver(&stats_proxy_);

}

Key points in the code above:

- VideoSendStream::Config& config is stored in the config_ member of the VideoSendStream object

- VideoEncoderConfig& encoder_config is passed as the argument to the ReconfigureVideoEncoder method

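ReconfigureVideoEncoder

bool VideoSendStream::ReconfigureVideoEncoder(const VideoEncoderConfig& config)

Using the VideoEncoderConfig encoder_config prepared earlier, ReconfigureVideoEncoder initializes a VideoCodec object (codec type, resolution, bitrate, and so on). Do not be misled by the name: it is not only used for reconfiguration, the first configuration goes through the same interface.

Note: VideoSendStream::Config config_ is a member variable, so ReconfigureVideoEncoder can use it directly.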

bool VideoSendStream::ReconfigureVideoEncoder(

const VideoEncoderConfig& config) {

TRACE_EVENT0("webrtc", "VideoSendStream::(Re)configureVideoEncoder");

LOG(LS_INFO) << "(Re)configureVideoEncoder: " << config.ToString();

const std::vector<VideoStream>& streams = config.streams;

RTC_DCHECK(!streams.empty());

RTC_DCHECK_GE(config_.rtp.ssrcs.size(), streams.size());

// Create a VideoCodec object and initialize it from the config

VideoCodec video_codec;

memset(&video_codec, 0, sizeof(video_codec));

if (config_.encoder_settings.payload_name == "VP8") {

video_codec.codecType = kVideoCodecVP8;

} else if (config_.encoder_settings.payload_name == "VP9") {

video_codec.codecType = kVideoCodecVP9;

} else if (config_.encoder_settings.payload_name == "H264") {

video_codec.codecType = kVideoCodecH264;

} else {

video_codec.codecType = kVideoCodecGeneric;

}

switch (config.content_type) {

case VideoEncoderConfig::ContentType::kRealtimeVideo:

video_codec.mode = kRealtimeVideo;

break;

case VideoEncoderConfig::ContentType::kScreen:

video_codec.mode = kScreensharing;

if (config.streams.size() == 1 &&

config.streams[0].temporal_layer_thresholds_bps.size() == 1) {

video_codec.targetBitrate =

config.streams[0].temporal_layer_thresholds_bps[0] / 1000;

}

break;

}

// Codec-specific settings (H264)

if (video_codec.codecType == kVideoCodecH264) {

video_codec.codecSpecific.H264 = VideoEncoder::GetDefaultH264Settings();

}

if (video_codec.codecType == kVideoCodecH264) {

if (config.encoder_specific_settings != nullptr) {

video_codec.codecSpecific.H264 = *reinterpret_cast<const VideoCodecH264*>(

config.encoder_specific_settings);

}

}

// Set the payload name and type

strncpy(video_codec.plName,

config_.encoder_settings.payload_name.c_str(),

kPayloadNameSize - 1);

video_codec.plName[kPayloadNameSize - 1] = '\0';

video_codec.plType = config_.encoder_settings.payload_type;

video_codec.numberOfSimulcastStreams =

static_cast<unsigned char>(streams.size());

video_codec.minBitrate = streams[0].min_bitrate_bps / 1000;

RTC_DCHECK_LE(streams.size(), static_cast<size_t>(kMaxSimulcastStreams));

// Fill in the settings of each stream (width, height, bitrate, qp)

for (size_t i = 0; i < streams.size(); ++i) {

SimulcastStream* sim_stream = &video_codec.simulcastStream[i];

RTC_DCHECK_GT(streams[i].width, 0u);

RTC_DCHECK_GT(streams[i].height, 0u);

RTC_DCHECK_GT(streams[i].max_framerate, 0);

// Different framerates not supported per stream at the moment.

RTC_DCHECK_EQ(streams[i].max_framerate, streams[0].max_framerate);

RTC_DCHECK_GE(streams[i].min_bitrate_bps, 0);

RTC_DCHECK_GE(streams[i].target_bitrate_bps, streams[i].min_bitrate_bps);

RTC_DCHECK_GE(streams[i].max_bitrate_bps, streams[i].target_bitrate_bps);

RTC_DCHECK_GE(streams[i].max_qp, 0);

sim_stream->width = static_cast<unsigned short>(streams[i].width);

sim_stream->height = static_cast<unsigned short>(streams[i].height);

sim_stream->minBitrate = streams[i].min_bitrate_bps / 1000;

sim_stream->targetBitrate = streams[i].target_bitrate_bps / 1000;

sim_stream->maxBitrate = streams[i].max_bitrate_bps / 1000;

sim_stream->qpMax = streams[i].max_qp;

sim_stream->numberOfTemporalLayers = static_cast<unsigned char>(

streams[i].temporal_layer_thresholds_bps.size() + 1);

video_codec.width = std::max(video_codec.width,

static_cast<unsigned short>(streams[i].width));

video_codec.height = std::max(

video_codec.height, static_cast<unsigned short>(streams[i].height));

video_codec.minBitrate =

std::min(video_codec.minBitrate,

static_cast<unsigned int>(streams[i].min_bitrate_bps / 1000));

video_codec.maxBitrate += streams[i].max_bitrate_bps / 1000;

video_codec.qpMax = std::max(video_codec.qpMax,

static_cast<unsigned int>(streams[i].max_qp));

}

ViEEncoder::SetEncoder

Initializes the encoder.

ViEChannel::SetSendCodec

Associates the encoder with the data channel (the data stream).

encoder_params_

Parameters are passed to the encoder through SetEncoderParameters.
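The per-stream loop in ReconfigureVideoEncoder also derives codec-wide values from the individual streams: the largest width, height and qp, the smallest minimum bitrate, and the sum of the maximum bitrates, all converted from bps to kbps. The sketch below reproduces just that aggregation. VideoStream and CodecSummary are simplified stand-ins and Summarize is a hypothetical helper, not a WebRTC function.

```cpp
#include <algorithm>
#include <vector>

// Simplified stand-in for webrtc::VideoStream (bitrates in bits per second).
struct VideoStream {
  int width;
  int height;
  int max_framerate;
  int min_bitrate_bps;
  int target_bitrate_bps;
  int max_bitrate_bps;
  int max_qp;
};

// Stand-in for the codec-wide fields of VideoCodec (bitrates in kbps).
struct CodecSummary {
  int width = 0;
  int height = 0;
  int min_bitrate_kbps = 0;
  int max_bitrate_kbps = 0;
  int qp_max = 0;
};

// Mirrors the aggregation in the loop. Assumes streams is non-empty,
// which the original code enforces with RTC_DCHECK(!streams.empty()).
CodecSummary Summarize(const std::vector<VideoStream>& streams) {
  CodecSummary codec;
  codec.min_bitrate_kbps = streams[0].min_bitrate_bps / 1000;
  for (const VideoStream& s : streams) {
    codec.width = std::max(codec.width, s.width);
    codec.height = std::max(codec.height, s.height);
    codec.min_bitrate_kbps =
        std::min(codec.min_bitrate_kbps, s.min_bitrate_bps / 1000);
    codec.max_bitrate_kbps += s.max_bitrate_bps / 1000;
    codec.qp_max = std::max(codec.qp_max, s.max_qp);
  }
  return codec;
}
```

For two simulcast streams of 320x180 (50 to 200 kbps) and 640x360 (150 to 700 kbps), this yields a 640x360 codec with a minimum of 50 kbps and a maximum of 900 kbps.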

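Encoding: VCMGenericEncoder::Encode

Inside VideoSender::AddVideoFrame:

int32_t ret = _encoder->Encode(converted_frame, codecSpecificInfo, _nextFrameTypes);

where:

- converted_frame is the input video data

- codecSpecificInfo carries codec-specific information; it is non-null only when encoding with VP8

The actual encoding is performed by the VCMGenericEncoder object.

VCMGenericEncoder initialization

As shown in the flow chart at the beginning of this post:

Initializing the encoder

Encoder parameters are configured during ReconfigureVideoEncoder: the VideoSender object's SetSendCodec() function creates a new VCMGenericEncoder object and calls InitEncode to initialize the encoder, and VideoSender obtains the encoder object through VCMGenericEncoder's GetEncoder method.

Following the analysis above, if we need an H264 encoder, we first initialize an H264 encoder object: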

rtc::scoped_ptr< VideoEncoder > encoder(

VideoEncoder::Create(VideoEncoder::kH264));

// Pass a different argument to create a different encoder as needed

//H264Encoder::Create();

//new H264VideoToolboxEncoder();

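send_config_.encoder_settings.encoder = encoder.get();

Then create a VideoSendStream::Config object and store the H264 encoder in it;

use that config to initialize a VideoSendStream, which passes the encoder on to VideoSender;

create a VideoEncoderConfig object, whose encoder parameters end up in a VideoCodec;

call ReconfigureVideoEncoder to configure the H264 encoder's parameters;

finally, call the Encode method to encode.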

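Getting the encoded output data

The encoded data is obtained through a callback. The upper layer implements the method that processes encoded data (call it ProcessMethod) but does not know when to call it, so it passes a pointer to that function to the encoder module, and the encoder calls ProcessMethod once encoding is done. Because ProcessMethod lives in the same layer as the data handling but is invoked from the encode module, it is called a callback. Let's look at how WebRTC uses a callback to process encoded data, taking H264VideoToolboxEncoder as an example: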

int H264VideoToolboxEncoder::Encode(

const VideoFrame& input_image,

const CodecSpecificInfo* codec_specific_info,

const std::vector<FrameType>* frame_types) {

/* Check callback_ and compression_session_. Both matter: the first delivers the encoded data, the second does the actual encoding. */

if (!callback_ || !compression_session_) {

return WEBRTC_VIDEO_CODEC_UNINITIALIZED;

}

// Get a pixel buffer from the pool and copy frame data over.

CVPixelBufferPoolRef pixel_buffer_pool =

VTCompressionSessionGetPixelBufferPool(compression_session_);

CVPixelBufferRef pixel_buffer = nullptr;

CVReturn ret = CVPixelBufferPoolCreatePixelBuffer(nullptr, pixel_buffer_pool, &pixel_buffer);

if (!internal::CopyVideoFrameToPixelBuffer(input_image, pixel_buffer)) {

LOG(LS_ERROR) << "Failed to copy frame data.";

CVBufferRelease(pixel_buffer);

return WEBRTC_VIDEO_CODEC_ERROR;

}

// Check if we need a keyframe.

bool is_keyframe_required = false;

if (frame_types) {

for (auto frame_type : *frame_types) {

if (frame_type == kVideoFrameKey) {

is_keyframe_required = true;

break;

}

}

}

// Set the presentation timestamp

CMTime presentation_time_stamp =

CMTimeMake(input_image.render_time_ms(), 1000);

CFDictionaryRef frame_properties = nullptr;

if (is_keyframe_required) {

CFTypeRef keys[] = { kVTEncodeFrameOptionKey_ForceKeyFrame };

CFTypeRef values[] = { kCFBooleanTrue };

frame_properties = internal::CreateCFDictionary(keys, values, 1);

}

// Set up the per-frame encode parameters

rtc::scoped_ptr<internal::FrameEncodeParams> encode_params;

encode_params.reset(new internal::FrameEncodeParams(

callback_, codec_specific_info, width_, height_,

input_image.render_time_ms(), input_image.timestamp()));

/* Hand the frame to the actual encoder. Note the callback stored in encode_params: it tells the encoder where to deliver the video data once encoding is done. */

VTCompressionSessionEncodeFrame(

compression_session_, pixel_buffer, presentation_time_stamp,

kCMTimeInvalid, frame_properties, encode_params.release(), nullptr);

...

return WEBRTC_VIDEO_CODEC_OK;

}

// This is the callback function that VideoToolbox calls when encode is

// complete.

void VTCompressionOutputCallback(void* encoder,

void* params,

OSStatus status,

VTEncodeInfoFlags info_flags,

CMSampleBufferRef sample_buffer) {

rtc::scoped_ptr<FrameEncodeParams> encode_params(

reinterpret_cast<FrameEncodeParams*>(params));

// Convert the sample buffer into a buffer suitable for RTP packetization.

rtc::scoped_ptr<rtc::Buffer> buffer(new rtc::Buffer());

rtc::scoped_ptr<webrtc::RTPFragmentationHeader> header;

if (!H264CMSampleBufferToAnnexBBuffer(sample_buffer,

is_keyframe,

buffer.get(),

header.accept())) {

return;

}

webrtc::EncodedImage frame(buffer->data(), buffer->size(), buffer->size());

frame._encodedWidth = encode_params->width;

frame._encodedHeight = encode_params->height;

frame._completeFrame = true;

frame._frameType = is_keyframe ? webrtc::kVideoFrameKey : webrtc::kVideoFrameDelta;

frame.capture_time_ms_ = encode_params->render_time_ms;

frame._timeStamp = encode_params->timestamp;

// Hand the encoded bitstream back for sending; you could also feed it to a decoder to build a loopback

int result = encode_params->callback->Encoded(

frame, &(encode_params->codec_specific_info), header.get());

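}

Note that VideoToolbox is the H264 encoding framework on iOS. To use H264 encoding on Android you have to integrate an encoder yourself; WebRTC's OpenH264 integration is a useful reference. I plan to try integrating an H264 encoder on Android in a later post.

Sending the data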
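Stripped of the VideoToolbox details, the callback mechanism in this section comes down to the pattern below. This is a toy sketch with stand-in types: EncodedImageCallback is simplified to take a byte vector, Sender and ToyEncoder are hypothetical, and the "encoding" is just a copy. Only the control flow is meant to match: the upper layer registers itself, and the encoder calls back when the data is ready.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Simplified stand-in for webrtc::EncodedImageCallback.
class EncodedImageCallback {
 public:
  virtual ~EncodedImageCallback() = default;
  virtual int Encoded(const std::vector<uint8_t>& bitstream) = 0;
};

// Upper layer: knows how to handle encoded data, not when it is ready.
class Sender : public EncodedImageCallback {
 public:
  int Encoded(const std::vector<uint8_t>& bitstream) override {
    bytes_received += bitstream.size();
    return 0;
  }
  size_t bytes_received = 0;
};

// Hypothetical encoder: "encodes" by copying, then invokes the callback.
class ToyEncoder {
 public:
  void RegisterEncodeCompleteCallback(EncodedImageCallback* callback) {
    callback_ = callback;
  }
  // Returns -1 when no callback is registered, similar in spirit to the
  // uninitialized check at the top of H264VideoToolboxEncoder::Encode.
  int Encode(const std::vector<uint8_t>& raw_frame) {
    if (!callback_) return -1;
    std::vector<uint8_t> bitstream(raw_frame);  // placeholder for real encoding
    return callback_->Encoded(bitstream);
  }

 private:
  EncodedImageCallback* callback_ = nullptr;  // not owned
};
```

Swapping Sender for a class that feeds a decoder would give the loopback setup mentioned in the code comment above.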

VideoSender::RegisterTransportCallback