An Analysis of FileInputFormat

I. Overview

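At the start of a MapReduce job, Hadoop has to split the files to be processed and read them according to a defined format. Both of these steps are carried out by InputFormat.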

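InputFormat is an abstract class with two abstract methods, getSplits() and createRecordReader(), which every subclass must implement. These are the two basic methods of InputFormat: getSplits() determines how the input is split, and createRecordReader() supplies a reader that reads the corresponding data in a given format.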
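To make the division of labour between the two methods concrete, here is a minimal sketch in plain Java. It does not use the Hadoop API: `ToyInputFormat`, `WordFormat`, and `ToyInputFormatDemo` are hypothetical names, splits are modelled as `{offset, length}` pairs over an in-memory string, and the example assumes that split boundaries happen to fall on record boundaries.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

// Hypothetical, simplified model of the InputFormat contract (not the Hadoop class).
abstract class ToyInputFormat {
    // Decides how the input is cut into {offset, length} ranges.
    abstract List<int[]> getSplits(String input);

    // Supplies a reader that turns one range into records.
    abstract Iterator<String> createRecordReader(String input, int[] split);
}

// Cuts the input into fixed-size ranges and reads comma-terminated records.
class WordFormat extends ToyInputFormat {
    private final int splitSize;

    WordFormat(int splitSize) {
        this.splitSize = splitSize;
    }

    @Override
    List<int[]> getSplits(String input) {
        List<int[]> splits = new ArrayList<>();
        for (int off = 0; off < input.length(); off += splitSize) {
            splits.add(new int[] {off, Math.min(splitSize, input.length() - off)});
        }
        return splits;
    }

    @Override
    Iterator<String> createRecordReader(String input, int[] split) {
        String chunk = input.substring(split[0], split[0] + split[1]);
        // Assumption: the chunk starts and ends on a record boundary.
        return Arrays.asList(chunk.split(",")).iterator();
    }
}

class ToyInputFormatDemo {
    // Plays the role of the framework: split first, then read each split.
    static List<String> run(ToyInputFormat format, String input) {
        List<String> records = new ArrayList<>();
        for (int[] split : format.getSplits(input)) {
            Iterator<String> reader = format.createRecordReader(input, split);
            while (reader.hasNext()) {
                records.add(split[0] + ":" + reader.next());
            }
        }
        return records;
    }

    public static void main(String[] args) {
        // Prints [0:a, 0:b, 4:c, 4:d]
        System.out.println(run(new WordFormat(4), "a,b,c,d,"));
    }
}
```

The driver only ever talks to the two abstract methods, so swapping in a different splitting rule or record format means writing another subclass.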

II. Detailed Analysis

FileInputFormat implements getSplits() as follows:

```java
public List<InputSplit> getSplits(JobContext job) throws IOException {
    long minSize = Math.max(getFormatMinSplitSize(), getMinSplitSize(job));
    long maxSize = getMaxSplitSize(job);

    // generate splits
    List<InputSplit> splits = new ArrayList<InputSplit>();
    for (FileStatus file : listStatus(job)) {
        Path path = file.getPath();
        FileSystem fs = path.getFileSystem(job.getConfiguration());
        long length = file.getLen();
        BlockLocation[] blkLocations = fs.getFileBlockLocations(file, 0, length);
        if ((length != 0) && isSplitable(job, path)) {
            long blockSize = file.getBlockSize();
            long splitSize = computeSplitSize(blockSize, minSize, maxSize);

            long bytesRemaining = length;
            while (((double) bytesRemaining) / splitSize > SPLIT_SLOP) {
                int blkIndex = getBlockIndex(blkLocations, length - bytesRemaining);
                splits.add(new FileSplit(path, length - bytesRemaining, splitSize,
                                         blkLocations[blkIndex].getHosts()));
                bytesRemaining -= splitSize;
            }

            if (bytesRemaining != 0) {
                splits.add(new FileSplit(path, length - bytesRemaining, bytesRemaining,
                                         blkLocations[blkLocations.length - 1].getHosts()));
            }
        } else if (length != 0) {
            splits.add(new FileSplit(path, 0, length, blkLocations[0].getHosts()));
        } else {
            // Create empty hosts array for zero length files
            splits.add(new FileSplit(path, 0, length, new String[0]));
        }
    }
    LOG.debug("Total # of splits: " + splits.size());
    return splits;
}
```

Reading the code, we can see that it splits each input file in turn. How a file is split depends on three parameters: maxSize, blockSize, and minSize.

```java
// minSize is configured through mapred.min.split.size
long minSize = Math.max(getFormatMinSplitSize(), getMinSplitSize(job));
// maxSize is configured through mapred.max.split.size
long maxSize = getMaxSplitSize(job);
long blockSize = file.getBlockSize();

// These three parameters determine the size of each split
long splitSize = computeSplitSize(blockSize, minSize, maxSize);
```

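In short, the rule is that splitSize is never smaller than minSize and never larger than maxSize (assuming minSize does not exceed maxSize). If blockSize already satisfies both constraints, blockSize is used; otherwise the split size is taken from maxSize or minSize, whichever bound blockSize violates.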
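The rule above amounts to clamping blockSize into the range [minSize, maxSize]. The following is a small standalone sketch of that clamping; `SplitSizeDemo` is a hypothetical class name, and the method body is written from the rule described here rather than copied from any particular Hadoop release.

```java
// Illustrative stand-in; not the Hadoop class itself.
class SplitSizeDemo {
    // Clamp blockSize into [minSize, maxSize].
    static long computeSplitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        // blockSize already lies inside the range, so it is used unchanged.
        System.out.println(computeSplitSize(64 * mb, 1, Long.MAX_VALUE) / mb);        // 64
        // maxSize is smaller than blockSize, so the split shrinks to maxSize.
        System.out.println(computeSplitSize(64 * mb, 1, 32 * mb) / mb);               // 32
        // minSize is larger than blockSize, so the split grows to minSize.
        System.out.println(computeSplitSize(64 * mb, 128 * mb, Long.MAX_VALUE) / mb); // 128
    }
}
```

Note that when minSize is set larger than maxSize, this formula returns minSize, so the lower bound wins.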

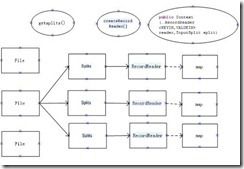

Once the split size has been determined, the file can be split, as shown in the figure below:
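The splitting loop in getSplits() can be tried out in isolation. The sketch below reproduces only the offset arithmetic for a non-empty, splittable file, and returns `{offset, length}` pairs instead of FileSplit objects. `SplitLoopDemo` is a hypothetical name, and the value 1.1 for SPLIT_SLOP is an assumption based on the Hadoop source as I recall it, since the listing above does not show the constant's definition.

```java
import java.util.ArrayList;
import java.util.List;

class SplitLoopDemo {
    // Assumed value: lets the final split be up to 10% larger than splitSize.
    static final double SPLIT_SLOP = 1.1;

    // Returns {offset, length} pairs for a file of the given length.
    static List<long[]> split(long length, long splitSize) {
        List<long[]> splits = new ArrayList<>();
        long bytesRemaining = length;
        // Cut full-size splits while clearly more than one split's worth remains.
        while (((double) bytesRemaining) / splitSize > SPLIT_SLOP) {
            splits.add(new long[] {length - bytesRemaining, splitSize});
            bytesRemaining -= splitSize;
        }
        // Whatever is left becomes the last split.
        if (bytesRemaining != 0) {
            splits.add(new long[] {length - bytesRemaining, bytesRemaining});
        }
        return splits;
    }

    public static void main(String[] args) {
        // 130 bytes with splitSize 64: the 66-byte tail is within the slop,
        // so it stays in one split instead of becoming 64 + 2.
        for (long[] s : split(130, 64)) {
            System.out.println("offset=" + s[0] + " length=" + s[1]);
        }
    }
}
```

With these inputs the sketch yields two splits, (0, 64) and (64, 66), which shows why the loop compares against SPLIT_SLOP rather than 1: it avoids producing a tiny trailing split.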

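The RecordReader encapsulates how a split is read. For example, for a typical file in which rows are separated by line breaks and columns by commas, those parsing rules are defined in the RecordReader.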
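As an illustration of what such a reader encapsulates, here is a minimal sketch that walks one split of an in-memory string and yields one record per line, keyed by the line's starting offset, with the columns separated at commas. It is a stand-in and does not extend Hadoop's RecordReader: `CsvLineReaderDemo` is a hypothetical class, the method names merely echo the style of the new API, and the sketch assumes ASCII data and splits that begin on a line boundary.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for a RecordReader; it does not use the Hadoop API.
class CsvLineReaderDemo {
    private final String data;
    private final int end;         // first offset past the split
    private int pos;               // offset of the next unread character
    private long currentKey;       // offset at which the current line starts
    private String[] currentValue; // columns of the current line

    CsvLineReaderDemo(String data, int start, int length) {
        this.data = data;
        this.pos = start;
        this.end = start + length;
    }

    // Advances to the next line; returns false once the split is exhausted.
    boolean nextKeyValue() {
        if (pos >= end) {
            return false;
        }
        int newline = data.indexOf('\n', pos);
        int lineEnd = (newline < 0 || newline >= end) ? end : newline;
        currentKey = pos;
        currentValue = data.substring(pos, lineEnd).split(",", -1);
        pos = lineEnd + 1; // skip the line break
        return true;
    }

    long getCurrentKey() {
        return currentKey;
    }

    String[] getCurrentValue() {
        return currentValue;
    }

    // Convenience helper: formats every record in the split as "offset -> [columns]".
    static List<String> readAll(String data, int start, int length) {
        CsvLineReaderDemo reader = new CsvLineReaderDemo(data, start, length);
        List<String> out = new ArrayList<>();
        while (reader.nextKeyValue()) {
            out.add(reader.getCurrentKey() + " -> " + Arrays.toString(reader.getCurrentValue()));
        }
        return out;
    }

    public static void main(String[] args) {
        String data = "1,alice\n2,bob\n";
        for (String record : readAll(data, 0, data.length())) {
            System.out.println(record);
        }
    }
}
```

Reading the whole string as one split prints `0 -> [1, alice]` and `8 -> [2, bob]`; reading only the range starting at offset 8 with length 6 yields just the second record.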

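After a file has been split, each split is passed to the Mapper class together with the RecordReader. Using these two pieces, the Mapper converts the file into a source data structure that MapReduce can read, after which the map and reduce operations are carried out.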

III. Another Example: NLineInputFormat

NLineInputFormat extends FileInputFormat and splits files by number of lines rather than by size.

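It overrides the getSplits() and createRecordReader() methods of FileInputFormat. Because NLineInputFormat was written for the old MapReduce framework, a version for the new framework is written here. The code is as follows: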

```java
package com.yuankang.hadoop;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;
import org.apache.hadoop.mapreduce.InputSplit;
import org.apache.hadoop.mapreduce.JobContext;
import org.apache.hadoop.mapreduce.lib.input.LineRecordReader;
import org.apache.hadoop.mapreduce.RecordReader;
import org.apache.hadoop.util.LineReader;
import org.apache.hadoop.mapreduce.TaskAttemptContext;

public class NLineInputFormat extends FileInputFormat<LongWritable, Text> {
    // Number of lines per split; overwritten from the job configuration in getSplits()
    private int N = 1;

    @Override
    public RecordReader<LongWritable, Text> createRecordReader(InputSplit split,
                                                               TaskAttemptContext context) {
        return new LineRecordReader();
    }

    /**
     * Logically splits the set of input files for the job, splits N lines
     * of the input as one split.
     *
     * @see org.apache.hadoop.mapred.FileInputFormat#getSplits(JobConf, int)
     */
    public List<InputSplit> getSplits(JobContext job) throws IOException {
        List<InputSplit> splits = new ArrayList<InputSplit>();
        for (FileStatus file : listStatus(job)) {
            Path path = file.getPath();
            FileSystem fs = path.getFileSystem(job.getConfiguration());
            LineReader lr = null;
            try {
                FSDataInputStream in = fs.open(path);
                Configuration conf = job.getConfiguration();
                lr = new LineReader(in, conf);
                N = conf.getInt("mapred.line.input.format.linespermap", 1);
                Text line = new Text();
                int numLines = 0;
                long begin = 0;   // byte offset where the current split starts
                long length = 0;  // bytes accumulated in the current split
                int num = -1;     // bytes consumed by the last readLine() call
                while ((num = lr.readLine(line)) > 0) {
                    numLines++;
                    length += num;
                    if (numLines == N) {
                        // N lines collected: emit a split and start the next one
                        splits.add(new FileSplit(path, begin, length, new String[]{}));
                        begin += length;
                        length = 0;
                        numLines = 0;
                    }
                }
                if (numLines != 0) {
                    // Remaining lines (fewer than N) form the last split
                    splits.add(new FileSplit(path, begin, length, new String[]{}));
                }
            } finally {
                if (lr != null) {
                    lr.close();
                }
            }
        }
        System.out.println("Total # of splits: " + splits.size());
        return splits;
    }
}
```
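The line-counting logic in getSplits() above can be checked without a cluster. The sketch below applies the same begin/length bookkeeping to an in-memory string and returns `{offset, length}` pairs. `NLineSplitDemo` is a hypothetical name, and the sketch assumes ASCII text with `\n` line endings so that character offsets equal byte offsets.

```java
import java.util.ArrayList;
import java.util.List;

class NLineSplitDemo {
    // Groups every n lines of data into one {offset, length} split.
    static List<long[]> splitByLines(String data, int n) {
        List<long[]> splits = new ArrayList<>();
        long begin = 0;   // offset where the current split starts
        long length = 0;  // characters accumulated in the current split
        int numLines = 0;
        int pos = 0;
        while (pos < data.length()) {
            int newline = data.indexOf('\n', pos);
            int next = (newline < 0) ? data.length() : newline + 1;
            length += next - pos; // line length including its line break
            pos = next;
            numLines++;
            if (numLines == n) {
                splits.add(new long[] {begin, length});
                begin += length;
                length = 0;
                numLines = 0;
            }
        }
        // Fewer than n lines left over: they form the last split.
        if (numLines != 0) {
            splits.add(new long[] {begin, length});
        }
        return splits;
    }

    public static void main(String[] args) {
        // Five lines of 2, 3, 4, 5 and 6 characters (line breaks included).
        String data = "a\nbb\nccc\ndddd\neeeee\n";
        for (long[] s : splitByLines(data, 2)) {
            System.out.println("offset=" + s[0] + " length=" + s[1]);
        }
    }
}
```

With two lines per split, the five-line input produces three splits, (0, 5), (5, 9), and (14, 6). Unlike size-based splitting, the split lengths vary with the line lengths, and computing them requires reading the whole file once up front.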