Hive Metastore Thrift Server Partition压力测试

测试环境:

虚拟机10.1.77.84

四核Intel(R) Xeon(R) CPU E5649 @ 2.53GHz,8G内存

起单台metastore server

JVM args : -Xmx7000m -XX:ParallelGCThreads=8 -XX:+UseConcMarkSweepGC

测试表Schema:

table name: metastore_stress_testing

# col_name data_type comment

a string None

# Partition Information

# col_name data_type comment

pt string None

# Detailed Table Information

Database: default

Owner: hadoop

CreateTime: Wed May 29 11:29:30 CST 2013

LastAccessTime: UNKNOWN

Protect Mode: None

Retention: 0

Location: hdfs://10.1.77.86/user/hive9/warehouse/metastore_stress_testing

Table Type: MANAGED_TABLE

Table Parameters:

transient_lastDdlTime1369798170

# Storage Information

SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

InputFormat: org.apache.hadoop.mapred.TextInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Compressed: No

Num Buckets: -1

Bucket Columns: []

Sort Columns: []

Storage Desc Params:

serialization.format1

测试方法:

Hive在解析一条query的时候,如果不加table partition predicate(比如直接select * from dpdw_traffic_base),会在生成物理执行计划,物理计划优化环节PartitionPruner 中调用Hive Metastore Client的getPartition(Table)方法,获取整张表的的List<Partition>,由于每个Partition 可以看成一张大表下的子表,里面包含了各自独立的InputFormatClass, OutputFormatClass, Deserializer, Column Schema , StorageDescriptor,Parameters等等,如果表结构比较复杂,column比较多,Partitions又比较多,会造成返回的对象非常大,而且会对metastore server 造成很大压力。

我们通过模拟HiveMetastoreClient的listPartitions方法来测试性能表现,HiveConf中默认设置metastore client socket timeout超时为20秒(hive.metastore.client.socket.timeout)。为了防止测试过程中read timeout ,我们先将这个值设得很大

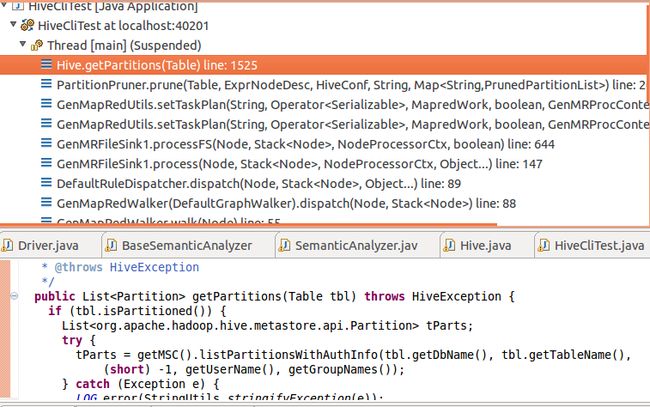

生成物理执行计划的call stack:

测试代码:

public class MetastoreTestConcurrent {

public long timeTasks(int nThread) throws Exception {

final CountDownLatch startGate = new CountDownLatch(1);

final CountDownLatch endGate = new CountDownLatch(nThread);

for (int i = 0; i < nThread; i++) {

Thread t = new Thread(new Runnable() {

@Override

public void run() {

try {

startGate.await();

try {

HiveConf hConf = new HiveConf();

hConf.setIntVar(ConfVars.METASTORE_CLIENT_SOCKET_TIMEOUT, 100000);

HiveMetaStoreClient hmsc = new HiveMetaStoreClient(hConf);

long start = System.currentTimeMillis();

List<Partition> parts = hmsc.listPartitions("default", "metastore_stress_testing", (short) -1);

long end = System.currentTimeMillis();

System.out.println(Thread.currentThread().getName() + " partition_number:" +

parts.size() +" duration:" + (end - start));

} catch (Exception e) {}

finally {

endGate.countDown();

}

} catch (InterruptedException e) {}

}

});

t.start();

}

long start = System.currentTimeMillis();

startGate.countDown();

endGate.await();

long end = System.currentTimeMillis();

return end - start;

}

public static void main(String[] args) throws Exception {

MetastoreTestConcurrent mtc = new MetastoreTestConcurrent();

System.out.println("total duration :" + mtc.timeTasks(20));

}

}

测试结果:

hive metastore server 默认启动的时候会启最小minWorkerThreads = 200, 最大maxWorkerThreads = 100000 的ThreadPoolExecutor,并开启TCP keepalive = true

单个Thread:

duration=3500 millis

partition number=1000

duration=16874 millis

partition number=5000

duration=53697 millis

partition number=20000

Partition List RamUsage: 46264576 bytes

duration:75809 millis

partition number:27092

duration :245805 millis

partition number:50000

三个thread

Thread Thread-2 partition_number:26596 duration:96902 millis

Thread Thread-0 partition_number:26596 duration:97135 millis

Thread Thread-1 partition_number:26596 duration:97193 millis

total duration :100235 millis

十个thread

Thread Thread-4 partition_number:27952 duration:255172 millis

Thread Thread-2 partition_number:27952 duration:257144 millis

Thread Thread-0 partition_number:27952 duration:257424 millis

Thread Thread-7 partition_number:27952 duration:258388 millis

Thread Thread-5 partition_number:27952 duration:260496 millis

Thread Thread-1 partition_number:27952 duration:261106 millis

Thread Thread-3 partition_number:27952 duration:261173 millis

Thread Thread-6 partition_number:28386 duration:324847 millis

Thread Thread-8 partition_number:28506 duration:356391 millis

Thread Thread-9 partition_number:28507 duration:356861 millis

total duration: 361216 millis

五个Thread:

Thread-1 partition_number:50000 duration: 555805 millis

Thread-3 partition_number:50000 duration:557810 millis

Thread-2 partition_number:50000 duration:558112 millis

Thread-0 partition_number:50000 duration:558480 millis

Thread-4 partition_number:50000 duration:558897 millis

total duration :560753 millis

结论

Hive在单线程环境下调用listPartitions时间基本成线性增长,在Partition数为20000的时候,返回的结果已经将近46MB了,这还是只有一个实体column和一个virutal column的情况下,由于前面提到partition之间是相互独立的,所以如果partition非常多,会crash metastore server,也会crach本地hive client。

在多线程环境下,调用时间明显增加,因为metastore server没有query cache,每一次调用都会变成对于底层数据库的一次查询。

综合来看,partition比较多的话,一个是在compile环节上会一直block在获取partition schema环节上,从而无法提交job,另一个是即使获得了全表下所有partition信息,会生成job splits file,这个文件也会非常大,会撑满jvm heap,也有可能撑满本地磁盘(会先serialize到本地再提交到hdfs上)

ps:

大前天晚上部署的hive metastore server宕机了

从日志上看应该是用户获取partition信息(get_partitions_with_auth_result方法)的时候size太大导致的OOM,看来还是得限制下partition个数

Exception in thread "pool-1-thread-196" java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOf(Arrays.java:2786)

at java.io.ByteArrayOutputStream.write(ByteArrayOutputStream.java:94)

at org.apache.thrift.transport.TSaslTransport.write(TSaslTransport.java:445)

at org.apache.thrift.transport.TSaslServerTransport.write(TSaslServerTransport.java:40)

at org.apache.thrift.protocol.TBinaryProtocol.writeI16(TBinaryProtocol.java:154)

at org.apache.thrift.protocol.TBinaryProtocol.writeFieldBegin(TBinaryProtocol.java:109)

at org.apache.hadoop.hive.metastore.api.FieldSchema.write(FieldSchema.java:421)

at org.apache.hadoop.hive.metastore.api.StorageDescriptor.write(StorageDescriptor.java:1086)

at org.apache.hadoop.hive.metastore.api.Partition.write(Partition.java:897)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$get_partitions_with_auth_result.write(ThriftHiveMetastore.java:33814)

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:34)

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:34)

本文链接http://blog.csdn.net/lalaguozhe/article/details/9070203,转载请注明