Python: Fetching Web Page Source with Requests (Web Scraping Basics)

1 Download and Installation

See other tutorials.

2 Introduction to Requests

Requests is an Apache2 Licensed HTTP library, written in Python, for human beings.

Python's standard urllib2 module provides most of the HTTP capabilities you need, but the API is thoroughly broken. It was built for a different time, and a different web. It requires an enormous amount of work (even method overrides) to perform the simplest of tasks.

Requests takes all of the work out of Python HTTP/1.1, making your integration with web services seamless. There's no need to manually add query strings to your URLs, or to form-encode your POST data. Keep-alive and HTTP connection pooling are 100% automatic, powered by urllib3, which is embedded within Requests. (Source: http://www.python-requests.org/en/latest/)
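The claim about query strings can be checked without touching the network. The sketch below builds a request object and asks Requests to prepare it, which assembles the final URL locally. The endpoint `http://example.com/search` and the parameter names are placeholders chosen for illustration; they do not come from the original text.

```python
import requests

# Hypothetical endpoint, used only to show how the URL is assembled.
BASE_URL = 'http://example.com/search'

# Request(...).prepare() builds the final URL and headers locally;
# no connection is opened until the prepared request is actually sent.
prepared = requests.Request(
    'GET',
    BASE_URL,
    params={'q': 'requests', 'page': 2},
).prepare()

# The params dictionary has been appended to the URL as a query string.
print(prepared.url)
```

Passing the same `params` dictionary to `requests.get` does the same encoding and then sends the request.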

3 Fetching Page Source (GET Method)

- Fetch the source directly

- Fetch the source with modified HTTP headers

Fetching directly:

<pre name="code" class="python">import requests

html = requests.get('http://www.baidu.com')
print(html.text)
</pre>
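In practice a request can fail or hang, so it may help to wrap the call. The following is a minimal sketch, not part of the original tutorial: `fetch_html` is a hypothetical helper name, and the 10-second timeout is an arbitrary choice.

```python
import requests


def fetch_html(url, timeout=10):
    """Return the page source as text, or None if the request fails.

    fetch_html is a hypothetical helper; the timeout value is an assumption.
    """
    try:
        response = requests.get(url, timeout=timeout)
        # Turn 4xx/5xx status codes into exceptions instead of
        # silently returning an error page.
        response.raise_for_status()
    except requests.RequestException as exc:
        print('Request failed:', exc)
        return None
    return response.text


if __name__ == '__main__':
    source = fetch_html('http://www.baidu.com')
    if source is not None:
        print(source[:200])  # show only the beginning of the page
```

`requests.RequestException` is the base class for the errors Requests raises, so the single `except` clause covers connection errors, timeouts, invalid URLs, and bad status codes.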

With modified HTTP headers:

<pre name="code" class="python">import requests

# hea is a dictionary we build ourselves; it holds the User-Agent.
# It makes the target site treat this program as a browser rather than a crawler.
# Copy the value from the site's Request Headers (browser: Inspect Element).
hea = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.118 Safari/537.36'}

html = requests.get('http://jp.tingroom.com/yuedu/yd300p/', headers=hea)
html.encoding = 'utf-8'  # Decode the response as UTF-8; otherwise the Chinese text is garbled.

print(html.text)
</pre>
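Two details of the code above can be inspected offline. The sketch below first shows the User-Agent that Requests sends when none is supplied, and how a custom header replaces it. It then shows why decoding UTF-8 bytes with the wrong codec produces garbled text. The sample string and the `http://example.com/` address are illustrative assumptions. ISO-8859-1 is used as the wrong codec because, as far as I know, Requests falls back to it for text responses whose headers declare no charset.

```python
import requests

# 1) What Requests sends by default, and what it sends with a custom header.
default_ua = requests.utils.default_user_agent()
print(default_ua)  # identifies the client as python-requests

hea = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.118 Safari/537.36'}
prepared = requests.Request('GET', 'http://example.com/', headers=hea).prepare()
print(prepared.headers['User-Agent'])  # now looks like a browser

# 2) Why the encoding matters: the same bytes decoded with two codecs.
raw = '日本語の文章'.encode('utf-8')   # illustrative sample, stands in for a response body
garbled = raw.decode('iso-8859-1')     # wrong codec: every byte becomes one character
correct = raw.decode('utf-8')          # right codec: the original text comes back
print(garbled)
print(correct)
```

Setting `html.encoding = 'utf-8'` before reading `html.text` tells Requests to take the second path.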

4 Extraction with Regular Expressions

<pre name="code" class="python">import re

import requests

# hea is a dictionary we build ourselves; it holds the User-Agent.
# It makes the target site treat this program as a browser rather than a crawler.
# Copy the value from the site's Request Headers (browser: Inspect Element).
hea = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.118 Safari/537.36'}

html = requests.get('http://jp.tingroom.com/yuedu/yd300p/', headers=hea)
html.encoding = 'utf-8'  # Decode the response as UTF-8; otherwise the Chinese text is garbled.

# The regular-expression part: once you spot the pattern in the page source,
# a regex pulls the content out.
title = re.findall(r'color:#666666;">(.*?)</span>', html.text, re.S)
for each in title:
    print(each)

chinese = re.findall(r'color: #039;">(.*?)</a>', html.text, re.S)
for each in chinese:
    print(each)
</pre>

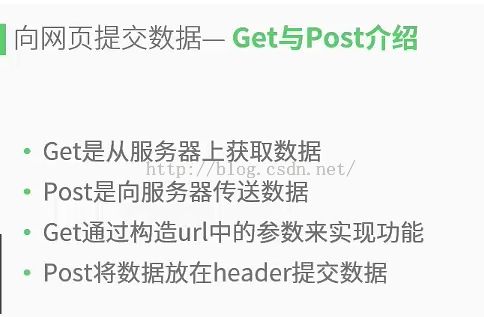
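The two patterns can be tried out without fetching the page. The sketch below runs them against a small hand-written HTML fragment. The fragment is an assumption about the general shape of the markup, not a copy of the real page. It also contrasts the non-greedy `(.*?)` with a greedy `(.*)` to show why the question mark matters.

```python
import re

# Hypothetical fragment shaped like the markup the patterns target.
sample_html = '''
<p><span style="color:#666666;">こんにちは</span>
<a href="#" style="color: #039;">你好</a></p>
<p><span style="color:#666666;">ありがとう
ございます</span>
<a href="#" style="color: #039;">谢谢</a></p>
'''

# re.S lets "." match newlines, so a match may span several lines.
title_pattern = re.compile(r'color:#666666;">(.*?)</span>', re.S)
chinese_pattern = re.compile(r'color: #039;">(.*?)</a>', re.S)

titles = title_pattern.findall(sample_html)
chinese = chinese_pattern.findall(sample_html)

for jp, zh in zip(titles, chinese):
    print(jp.replace('\n', ''), '->', zh)

# A greedy group swallows everything up to the LAST closing tag,
# so both entries collapse into a single oversized match.
greedy = re.findall(r'color:#666666;">(.*)</span>', sample_html, re.S)
print(len(titles), 'non-greedy matches vs', len(greedy), 'greedy match')
```

Regular expressions work here because the markup is very regular. For less predictable pages an HTML parser is usually the more robust choice.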

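5 Submitting Data to a Web Page (POST Method)

Second figure:

Here we construct the form, which is the data part of the code below, using a dictionary. Why change the page number in the dictionary? The target site loads its content asynchronously rather than loading everything you want to scrape at once, so the content has to be scraped one page at a time (by changing the number).

The code scrapes the company names (title) from the target site.

Code (with an explanation of how it works):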
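Before the full listing, it may help to see what the form dictionary turns into on the wire. The sketch below prepares POST requests for three page numbers and prints each encoded body; nothing is sent. `build_form` is a hypothetical helper introduced for illustration. The URL and field names are the ones used in this section.

```python
import requests

url = 'https://www.crowdfunder.com/browse/deals&template=false'


def build_form(page):
    """Return the form fields for one page (hypothetical helper)."""
    return {'entities_only': 'true', 'page': str(page)}


bodies = []
for page in (1, 2, 3):
    # prepare() form-encodes the dictionary locally; no request is sent.
    prepared = requests.Request('POST', url, data=build_form(page)).prepare()
    bodies.append(prepared.body)
    print(prepared.headers['Content-Type'], '|', prepared.body)
```

Only the `page` field changes between requests, which is what "changing the number" amounts to.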

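<pre name="code" class="python"># -*- coding: utf-8 -*-
import re

import requests

# Use Chrome's Inspect Element -> Network panel.
# Much of the information (URL, page, request method, etc.) has to be read from there.

# The original target address, which cannot be used as the request URL:
# url = 'https://www.crowdfunder.com/browse/deals'

# The POST form is submitted to this URL.
url = 'https://www.crowdfunder.com/browse/deals&template=false'

# For comparison, the GET method:
# html = requests.get(url).text
# print(html)

# Note that the page number is written inside quotes here.
# The page value can be changed.
data = {
    'entities_only': 'true',
    'page': '2'
}

html_post = requests.post(url, data=data)
title = re.findall(r'"card-title">(.*?)</div>', html_post.text, re.S)
for each in title:
    print(each)
</pre>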
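Scraping page by page can be sketched as a loop over the `page` field. The version below is a minimal sketch under several assumptions that are not in the original: the function name `scrape_titles`, the rule "stop at the first page with no titles", the 10-second timeout, and the one-second pause between requests. Whether the site still answers this request the way it did when the tutorial was written is not something the sketch verifies.

```python
import re
import time

import requests

URL = 'https://www.crowdfunder.com/browse/deals&template=false'
TITLE_PATTERN = re.compile(r'"card-title">(.*?)</div>', re.S)


def scrape_titles(max_pages, post=requests.post, delay=1.0):
    """Collect card titles from pages 1..max_pages.

    Hypothetical helper. `post` is injectable so the loop can be
    exercised without network access.
    """
    titles = []
    for page in range(1, max_pages + 1):
        data = {'entities_only': 'true', 'page': str(page)}
        response = post(URL, data=data, timeout=10)
        found = [t.strip() for t in TITLE_PATTERN.findall(response.text)]
        if not found:
            # Assumption: a page without titles means there are no more results.
            break
        titles.extend(found)
        time.sleep(delay)  # pause between requests to go easy on the server
    return titles


if __name__ == '__main__':
    for each in scrape_titles(3):
        print(each)
```

Keeping the request function as a parameter means the regex and the stopping rule can be checked against canned HTML before pointing the loop at a live site.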

Video: http://www.jikexueyuan.com/course/821_3.html?ss=1

Material excerpted from Jikexueyuan (极客学院).