Installing RAC 11.2.0.3 on RHEL 5.6

Operating system and storage environment

Linux version:

[root@rac1 ~]# lsb_release -a

LSB Version: :core-4.0-amd64:core-4.0-ia32:core-4.0-noarch:graphics-4.0-amd64:graphics-4.0-ia32:graphics-4.0-noarch:printing-4.0-amd64:printing-4.0-ia32:printing-4.0-noarch

Distributor ID: RedHatEnterpriseServer

Description: Red Hat Enterprise Linux Server release 5.6 (Tikanga)

Release: 5.6

Codename: Tikanga

Memory:

[root@rac1 Server]# free

total used free shared buffers cached

Mem: 4043728 714236 3329492 0 38784 431684

-/+ buffers/cache: 243768 3799960

Swap: 33551744 0 33551744

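Storage

Several LUNs are carved out on Openfiler: three 1 GB partitions for OCR and voting disks, three 100 GB partitions for data files, and three 60 GB partitions for the flash recovery area.

Next we install a two-node RAC. The 11.2.0.3 patch set can be used directly as the installation media, since 11.2 patch sets are full installations.

Check that the required packages are installed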

rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' binutils \
    compat-libstdc++-33 \
    elfutils-libelf \
    elfutils-libelf-devel \
    gcc \
    gcc-c++ \
    glibc \
    glibc-common \
    glibc-devel \
    glibc-headers \
    ksh \
    libaio \
    libaio-devel \
    libgcc \
    libstdc++ \
    libstdc++-devel \
    make \
    sysstat \
    unixODBC \
    unixODBC-devel
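The same check can be scripted so that only the missing packages are reported. The following is a minimal sketch rather than part of the original procedure: `pkg_installed` and `list_missing` are hypothetical helper names, and the check relies on `rpm -q` returning a non-zero status for a package that is not installed.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when the named package is installed.
# rpm -q exits non-zero for a package that is not installed.
pkg_installed() {
    rpm -q "$1" >/dev/null 2>&1
}

# Hypothetical helper: print every package from the argument list
# that is not installed.
list_missing() {
    for pkg in "$@"; do
        pkg_installed "$pkg" || echo "$pkg"
    done
}

# Example call on a node (commented out so the file can be sourced safely):
# list_missing binutils compat-libstdc++-33 gcc gcc-c++ ksh libaio-devel sysstat
```

An empty result means every listed package is present. Note that this checks by name only, so it does not tell a 32-bit build apart from a 64-bit one.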

Create users and groups

Create the groups

groupadd -g 1000 oinstall

groupadd -g 1020 asmadmin

groupadd -g 1021 asmdba

groupadd -g 1031 dba

groupadd -g 1022 asmoper

Create the users

useradd -u 1100 -g oinstall -G asmadmin,asmdba,dba grid

useradd -u 1101 -g oinstall -G dba,asmdba oracle

passwd oracle

passwd grid
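Once the accounts exist, the group memberships can be double-checked. The sketch below assumes the group list is obtained with `id -Gn`; `missing_groups` is a hypothetical helper name, not something from the original steps.

```shell
#!/bin/sh
# Hypothetical helper: given the space-separated group list of a user
# (as printed by `id -Gn USER`) followed by the required group names,
# print each required group that is absent from the list.
missing_groups() {
    have=" $1 "
    shift
    for grp in "$@"; do
        case "$have" in
            *" $grp "*) ;;          # group present, nothing to report
            *) echo "$grp" ;;       # group missing
        esac
    done
}

# Example calls on a cluster node (commented out):
# missing_groups "$(id -Gn grid)"   oinstall asmadmin asmdba dba
# missing_groups "$(id -Gn oracle)" oinstall dba asmdba
```

No output means the user belongs to every group that was listed.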

Environment variables for the grid user

if [ -t 0 ]; then

stty intr ^C

fi

export ORACLE_BASE=/opt/app/oracle

export ORACLE_HOME=/opt/app/11.2.0/grid

export ORACLE_SID=+ASM1

export PATH=$ORACLE_HOME/bin:$PATH

umask 022

Environment variables for the oracle user

if [ -t 0 ]; then

stty intr ^C

fi

export ORACLE_BASE=/opt/app/oracle

export ORACLE_HOME=/opt/app/oracle/product/11.2.0/db_1

export ORACLE_SID=oradb_1

export PATH=$ORACLE_HOME/bin:$PATH

umask 022

Environment variables for the root user

export PATH=/opt/app/11.2.0/grid/bin:/opt/app/oracle/product/11.2.0/db_1/bin:$PATH

Configure the network

Edit /etc/hosts

# Do not remove the following line, or various programs

# that require network functionality will fail.

127.0.0.1 localhost.localdomain localhost

# Public Network - (eth0,eth1---bond0)

192.168.106.241 rac1 rac1.wildwave.com

192.168.106.242 rac2 rac2.wildwave.com

# Private Interconnect - (eth2,eth3-bond1)

10.10.10.241 rac1-priv

10.10.10.242 rac2-priv

# Public Virtual IP (VIP) addresses for - (eth0,eth1---bond0)

192.168.106.243 rac1-vip rac1-vip.wildwave.com

192.168.106.244 rac2-vip rac2-vip.wildwave.com

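Configure DNS

A DNS server is configured on each of the two nodes, so that each node resolves the SCAN and VIP names through its own local server. Only the configuration for one node is shown here; on rac2, the same steps apply with a few corresponding changes in the forward and reverse zone files.

First edit /var/named/chroot/etc/named.conf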

options {

listen-on port 53 { any; };

listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

// Those options should be used carefully because they disable port

// randomization

// query-source port 53;

// query-source-v6 port 53;

allow-query { 192.168.106.0/24; };

};

logging {

channel default_debug {

file "data/named.run";

severity dynamic;

};

};

view localhost_resolver {

match-clients { 192.168.106.0/24; };

match-destinations { any; };

recursion yes;

include "/etc/named.rfc1912.zones";

};

controls {

inet 127.0.0.1 allow { localhost; } keys { "rndckey"; };

};

include "/etc/rndc.key";

Edit /var/named/chroot/etc/named.rfc1912.zones

zone "wildwave.com" IN {

type master;

file "wildwave.zone";

allow-update { none; };

};

zone "106.168.192.in-addr.arpa" IN {

type master;

file "named.wildwave";

allow-update { none; };

};
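The reverse zone name above is simply the first three octets of the public network in reverse order, followed by in-addr.arpa. As an illustration only, the sketch below derives it from an address; `reverse_zone_24` and `ptr_label` are hypothetical helper names, and a /24 network is assumed.

```shell
#!/bin/sh
# Hypothetical helper: derive the reverse zone name for the /24 network
# that contains the given IPv4 address (first three octets, reversed).
reverse_zone_24() {
    echo "$1" | awk -F. '{ print $3 "." $2 "." $1 ".in-addr.arpa" }'
}

# Hypothetical helper: the PTR owner label inside that zone is the last octet.
ptr_label() {
    echo "$1" | awk -F. '{ print $4 }'
}

reverse_zone_24 192.168.106.241   # prints 106.168.192.in-addr.arpa
ptr_label 192.168.106.241         # prints 241
```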

The database servers do not need to reach external networks, so named.ca is not configured here.

Configure the forward zone file /var/named/chroot/var/named/wildwave.zone

$TTL 86400

@ IN SOA rac1.wildwave.com. root.wildwave.com. (

2010022101 ; serial (d. adams)

3H ; refresh

15M ; retry

1W ; expiry

1D ) ; minimum

@ IN NS rac1.wildwave.com.

rac1 IN A 192.168.106.241

rac2 IN A 192.168.106.242

rac1-vip IN A 192.168.106.243

rac2-vip IN A 192.168.106.244

rac-scan IN A 192.168.106.245

rac-scan IN A 192.168.106.246

rac-scan IN A 192.168.106.247

Configure the reverse zone file /var/named/chroot/var/named/named.wildwave

$TTL 86400

@ IN SOA rac1.wildwave.com. root.wildwave.com. (

2010022101 ; Serial

28800 ; Refresh

14400 ; Retry

3600000 ; Expire

86400 ) ; Minimum

@ IN NS rac1.wildwave.com.

241 IN PTR rac1.wildwave.com.

242 IN PTR rac2.wildwave.com.

243 IN PTR rac1-vip.wildwave.com.

244 IN PTR rac2-vip.wildwave.com.

245 IN PTR rac-scan.wildwave.com.

246 IN PTR rac-scan.wildwave.com.

247 IN PTR rac-scan.wildwave.com.

Then enable named at boot and restart it, which completes the server-side configuration:

chkconfig named on

service named restart

Next, configure the DNS client

Set the DNS server address in /etc/resolv.conf

nameserver 192.168.106.241

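Note that the address differs on each node: each node points at its own address and uses its local DNS server.

Then edit /etc/nsswitch.conf, changing

hosts: files dns

to

hosts: dns files

so that names are resolved through DNS first and /etc/hosts is consulted only afterwards.

Test that DNS is configured correctly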

[root@rac2 ~]# nslookup 192.168.106.243

Server: 192.168.106.242

Address: 192.168.106.242#53

243.106.168.192.in-addr.arpa name = rac1-vip.wildwave.com.

[root@rac2 ~]# nslookup rac1-vip.wildwave.com

Server: 192.168.106.242

Address: 192.168.106.242#53

Name: rac1-vip.wildwave.com

Address: 192.168.106.243
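The SCAN name deserves the same test, since it should resolve to the three addresses defined in the forward zone. A rough sketch, assuming nslookup output shaped like the sample above (`answer_addresses` is a hypothetical helper name):

```shell
#!/bin/sh
# Hypothetical helper: read nslookup output on stdin and print the answer
# addresses, skipping the "Address: <server>#53" line that names the resolver.
answer_addresses() {
    awk '/^Address:/ && $2 !~ /#/ { print $2 }'
}

# Example on a node (commented out): the SCAN name should yield three lines.
# nslookup rac-scan.wildwave.com | answer_addresses | wc -l
```

If the count is not three, the rac-scan records in the zone file are the first place to look.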

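Time synchronization

CTSS (Cluster Time Synchronization Service) is used for time synchronization here, so NTP is stopped, disabled at boot, and its configuration file moved aside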

/etc/init.d/ntpd stop

chkconfig ntpd off

mv /etc/ntp.conf /etc/ntp.conf.org

Kernel parameters (set in /etc/sysctl.conf, then apply with sysctl -p)

kernel.shmmax = 4294967295

kernel.shmall = 2097152

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

fs.file-max = 6815744

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

fs.aio-max-nr = 1048576

Set the shmmax value according to the actual amount of physical memory.
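One commonly cited guideline is to size shmmax at half of physical memory. That guideline is an assumption here, not something this walkthrough states, so check it against the installation guide for your release. Under that assumption, the value can be derived as follows (`shmmax_from_kb` is a hypothetical helper name):

```shell
#!/bin/sh
# Hypothetical helper: half of physical memory in bytes, given MemTotal in kB.
# The "half of RAM" rule is an assumed guideline, not taken from this document.
shmmax_from_kb() {
    echo $(( $1 * 1024 / 2 ))
}

# On a node, MemTotal can be read from /proc/meminfo (commented out):
# shmmax_from_kb "$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)"

shmmax_from_kb 4043728   # uses the 4043728 kB total reported by free earlier
```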

Resource limits

Add the following to /etc/security/limits.conf

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536
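To confirm that the entries were added as intended, the file can be queried per user and item. This is only a sketch: `limit_value` is a hypothetical helper name, and it assumes the four-column layout (domain, type, item, value) used above.

```shell
#!/bin/sh
# Hypothetical helper: print the value configured for USER / TYPE / ITEM
# in a limits.conf-style file (columns: domain type item value).
# Usage: limit_value FILE USER TYPE ITEM
limit_value() {
    awk -v u="$2" -v t="$3" -v i="$4" \
        '$1 == u && $2 == t && $3 == i { print $4 }' "$1"
}

# Example on a node (commented out):
# limit_value /etc/security/limits.conf grid hard nofile
```

Commented-out entries are ignored, because their first field is `#` rather than the user name.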

Edit /etc/pam.d/login and add

session required pam_limits.so

Edit /etc/profile and add

if [ "$USER" = "oracle" ] || [ "$USER" = "grid" ]; then

if [ "$SHELL" = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

Create the required directories

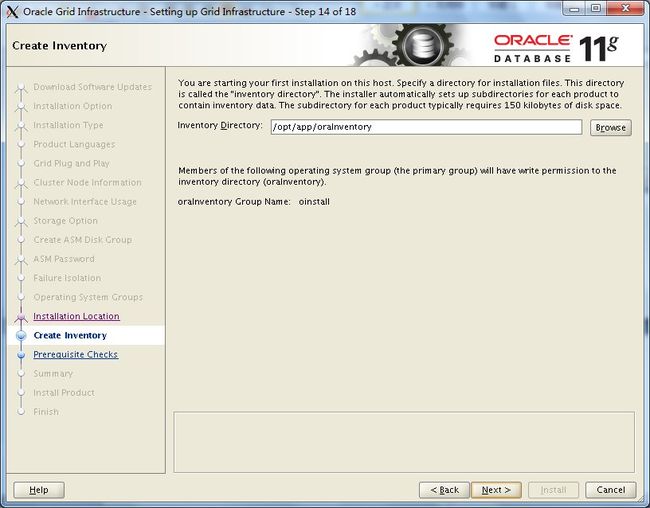

mkdir -p /opt/app/oraInventory

chown -R grid:oinstall /opt/app/oraInventory

chmod -R 775 /opt/app/oraInventory

mkdir -p /opt/app/11.2.0/grid

chown -R grid:oinstall /opt/app/11.2.0/grid

chmod -R 775 /opt/app/11.2.0/grid

mkdir -p /opt/app/oracle

mkdir /opt/app/oracle/cfgtoollogs

chown -R oracle:oinstall /opt/app/oracle

chmod -R 775 /opt/app/oracle

mkdir -p /opt/app/oracle/product/11.2.0/db_1

chown -R oracle:oinstall /opt/app/oracle/product/11.2.0/db_1

chmod -R 775 /opt/app/oracle/product/11.2.0/db_1

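Install and configure ASMLib

Choose the package versions that match your environment; in particular, the oracleasm driver package must match the running kernel version (uname -r). The official download page is http://www.oracle.com/technetwork/server-storage/linux/downloads/rhel5-084877.html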

rpm -ivh oracleasm-support-2.1.7-1.el5.x86_64.rpm \
    oracleasmlib-2.0.4-1.el5.x86_64.rpm \
    oracleasm-2.6.18-238.el5-2.0.5-1.el5.x86_64.rpm

Configure ASMLib

/etc/init.d/oracleasm configure

Default user to own the driver interface []: grid

Default group to own the driver interface []: asmadmin

Start Oracle ASM library driver on boot (y/n) [n]: y

Scan for Oracle ASM disks on boot (y/n) [y]: y

Writing Oracle ASM library driver configuration: done

Initializing the Oracle ASMLib driver: [ OK ]

Scanning the system for Oracle ASMLib disks: [ OK ]

Create the ASM disks

Again, adapt these commands to the actual storage layout

/usr/sbin/oracleasm createdisk DISK1 /dev/sdc1

/usr/sbin/oracleasm createdisk DISK2 /dev/sdd1

/usr/sbin/oracleasm createdisk DISK3 /dev/sde1

/usr/sbin/oracleasm createdisk DISK4 /dev/sdf1

/usr/sbin/oracleasm createdisk DISK5 /dev/sdf2

/usr/sbin/oracleasm createdisk DISK6 /dev/sdf3

/usr/sbin/oracleasm createdisk DISK7 /dev/sdg1

/usr/sbin/oracleasm createdisk DISK8 /dev/sdg2

/usr/sbin/oracleasm createdisk DISK9 /dev/sdg3
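Because the device names differ from one environment to the next, it can help to generate the command list from the partitions you actually have and review it before running anything. A minimal sketch (`gen_createdisk` is a hypothetical helper name that only prints commands and does not execute them):

```shell
#!/bin/sh
# Hypothetical helper: print (not run) one createdisk command per partition,
# labelling them DISK1, DISK2, ... in the order given.
gen_createdisk() {
    n=1
    for dev in "$@"; do
        echo "/usr/sbin/oracleasm createdisk DISK$n $dev"
        n=$((n + 1))
    done
}

# Review the printed commands before running them as root.
gen_createdisk /dev/sdc1 /dev/sdd1 /dev/sde1
```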

List the ASM disks that were created

/usr/sbin/oracleasm scandisks

/usr/sbin/oracleasm listdisks

Install the cvuqdisk package

The package is in the rpm directory under the grid installation media; install it from there

[root@rac rpm]# rpm -ivh cvuqdisk-1.0.9-1.rpm

Preparing... ########################################### [100%]

Using default group oinstall to install package

1:cvuqdisk ########################################### [100%]

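If the group that should own cvuqdisk is not oinstall, set the CVUQDISK_GRP environment variable to that group before installing the package.

Configure SSH between the two nodes for the grid user

Switch to the grid user and run the following on both nodes: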

ssh-keygen -t dsa

ssh-keygen -t rsa

Then, on rac1, run

[grid@rac1 ~]$ cat ~/.ssh/id_dsa.pub >>~/.ssh/authorized_keys

[grid@rac1 ~]$ cat ~/.ssh/id_rsa.pub >>~/.ssh/authorized_keys

[grid@rac1 ~]$ ssh rac2 "cat ~/.ssh/id_dsa.pub">>~/.ssh/authorized_keys

[grid@rac1 ~]$ ssh rac2 "cat ~/.ssh/id_rsa.pub">>~/.ssh/authorized_keys

[grid@rac1 ~]$ scp ~/.ssh/authorized_keys rac2:~/.ssh/

Then ssh back and forth between rac1 and rac2 so that both hosts are recorded in each node's known_hosts file.
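After those first interactive logins, passwordless access can be verified non-interactively. The sketch below is an illustration, not part of the original steps: `unreachable_hosts` is a hypothetical helper name, and the `SSH` variable exists only so that the client can be swapped out for a dry run.

```shell
#!/bin/sh
# SSH can be overridden for a dry run; by default the real client is used.
SSH=${SSH:-ssh}

# Hypothetical helper: try a non-interactive login to each host and print
# the hosts that fail. BatchMode=yes makes ssh fail instead of prompting
# for a password, passphrase, or host key confirmation.
unreachable_hosts() {
    for host in "$@"; do
        $SSH -o BatchMode=yes "$host" true </dev/null || echo "$host"
    done
}

# Example as the grid user, on each node in turn (commented out):
# unreachable_hosts rac1 rac2
```

No output means every listed host accepted a key-based login without prompting.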

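Check prerequisites

(For usage details, see Oracle Clusterware Administration and Deployment Guide 11g Release 2 (11.2).)

Log in as the grid user and locate runcluvfy.sh in the root directory of the grid installation media

Check the hardware and operating system setup, including the network: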

[grid@rac2 grid]$ ./runcluvfy.sh stage -post hwos -n rac1,rac2 -verbose

Check the prerequisites for installing CRS

[grid@rac2 grid]$ ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -verbose

If a check does not pass, resolve it according to the message shown for each failed item.
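The verbose output is long, so saving it to a file and filtering for failures makes the problem items easier to find. A small sketch, assuming failed checks contain the word "failed" in some letter case (`failed_lines` is a hypothetical helper name, and the log path is only an example):

```shell
#!/bin/sh
# Hypothetical helper: print the lines of a saved cluvfy log that mention
# "failed" (case-insensitive), prefixed with their line numbers.
failed_lines() {
    grep -in 'failed' "$1"
}

# Example (commented out): save the verbose output, then list the failures.
# ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -verbose > /tmp/crsinst.log 2>&1
# failed_lines /tmp/crsinst.log
```

The line numbers make it easy to jump back into the full log for the surrounding context.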

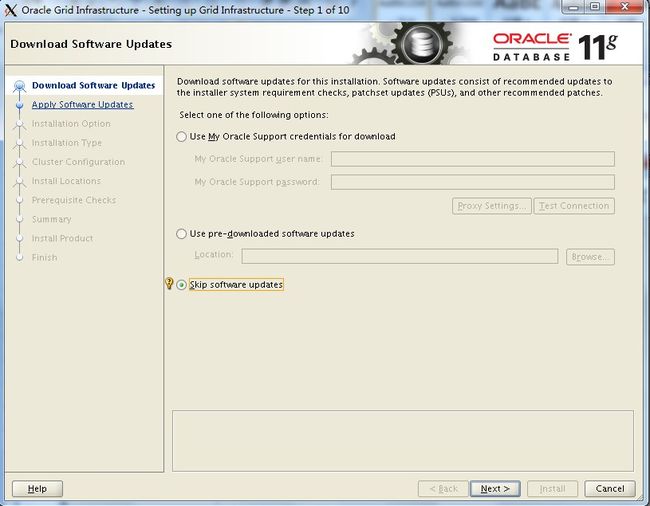

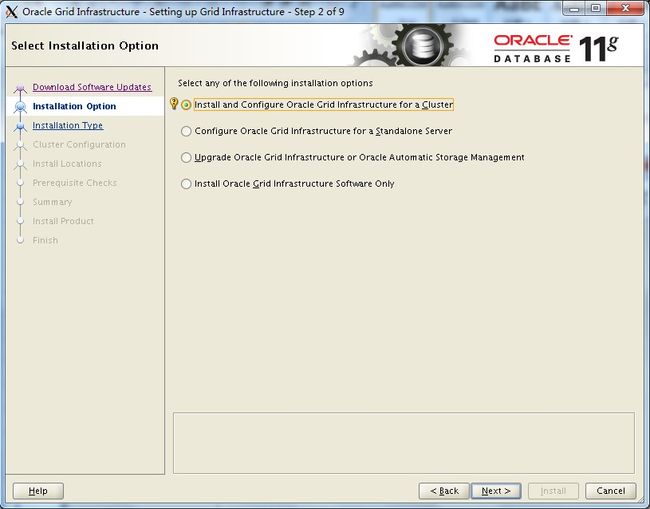

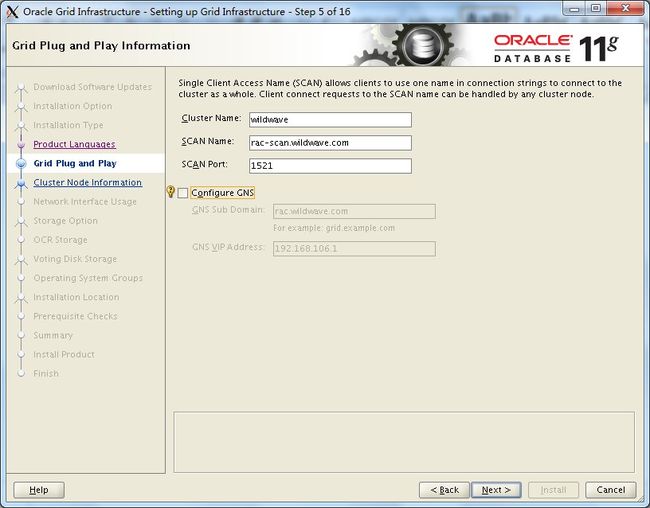

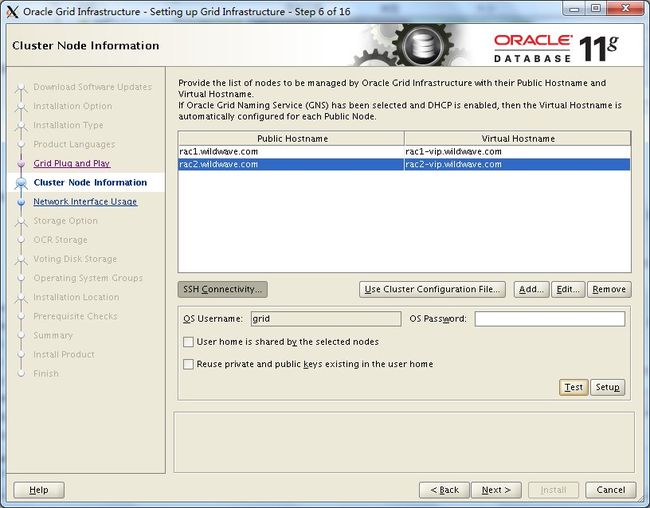

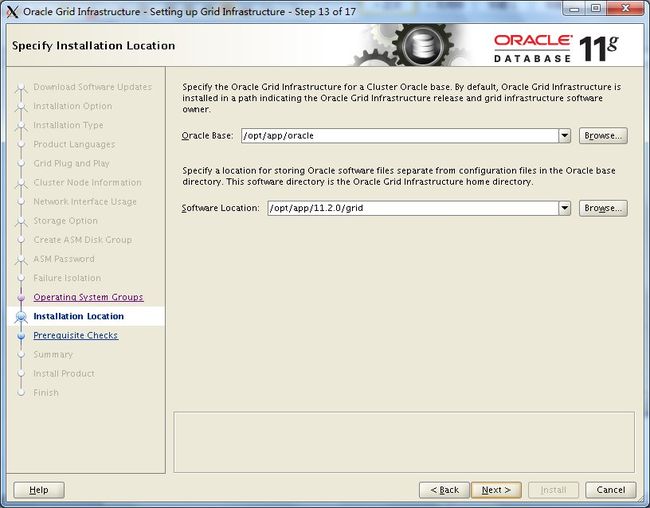

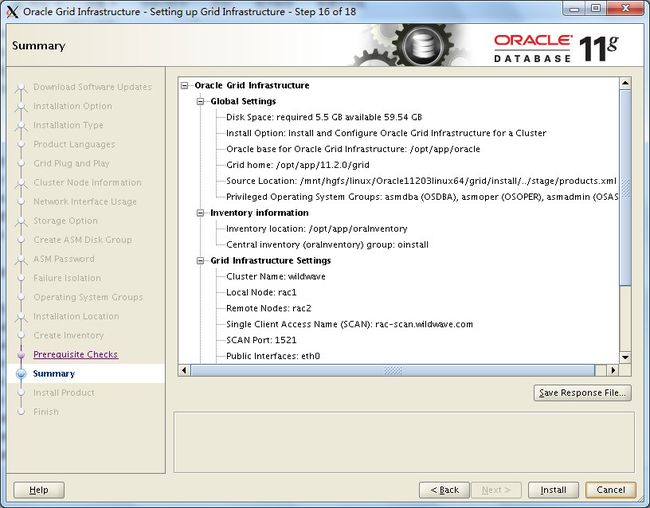

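Install Grid Infrastructure

Mark the interface that serves clients as Public and the interface used for the interconnect as Private; set all other interfaces to Do Not Use.

Oracle recommends using ASM.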

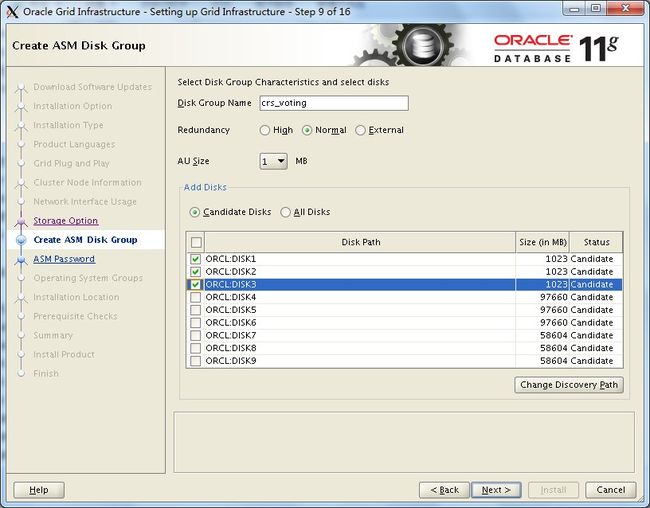

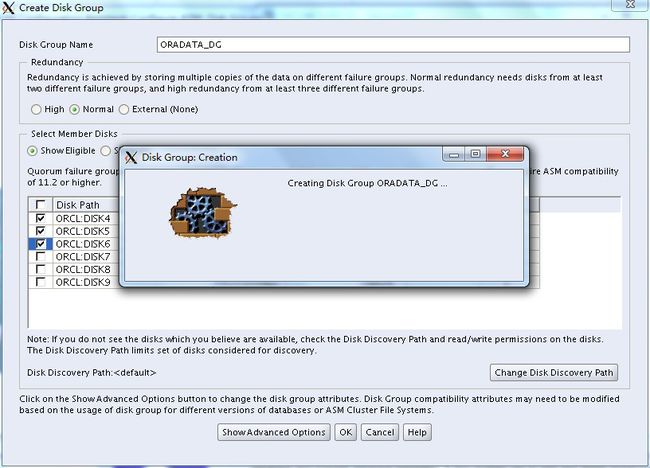

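Configure the disk group used for OCR and the voting disks. Normal redundancy is selected here, which requires three ASM disks, two of which are used as failure groups.

Set the ASM password. 11g recommends passwords that meet length, letter/digit, and mixed-case requirements. Since this is only a test environment, the warning can be ignored.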

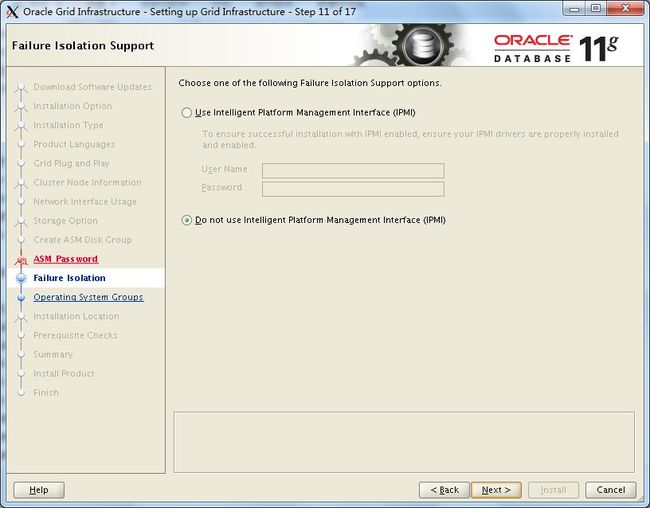

No IPMI-capable device is available, so select Do not use ...

Once verification passes, select Install to start the installation.

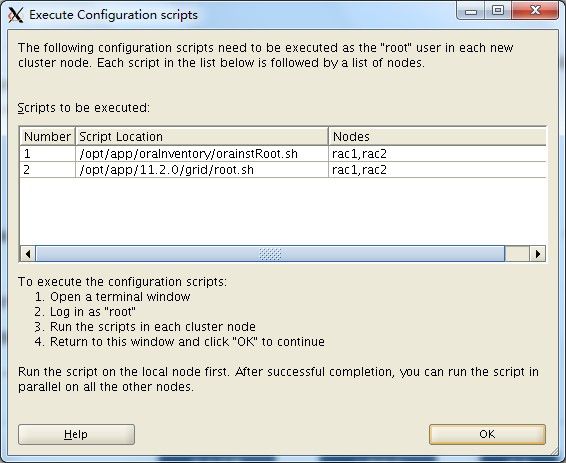

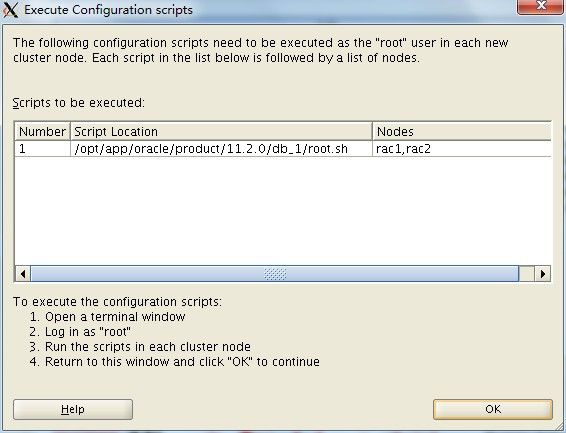

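At the end, the installer asks you to run the scripts it lists as root, on each of the two nodes.

Grid Infrastructure is now installed successfully.

Check the current state of the cluster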

[grid@rac1 grid]$ crs_stat -t

All resources except gsd should be ONLINE. (crs_stat is deprecated in 11.2; crsctl stat res -t gives the equivalent view.)
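With many resources listed, a quick filter helps spot anything unexpected. The sketch below assumes the usual `crs_stat -t` layout (two header lines, then Name, Type, Target, State, Host columns); `not_online` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `crs_stat -t` output on stdin and print the
# resources whose State column is not ONLINE, ignoring gsd resources.
# Assumes the layout: Name Type Target State Host, after two header lines.
not_online() {
    awk 'NR > 2 && NF >= 4 && $1 !~ /gsd/ && $4 != "ONLINE" { print $1 }'
}

# Example as the grid user (commented out):
# crs_stat -t | not_online
```

No output means everything other than gsd reports ONLINE.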

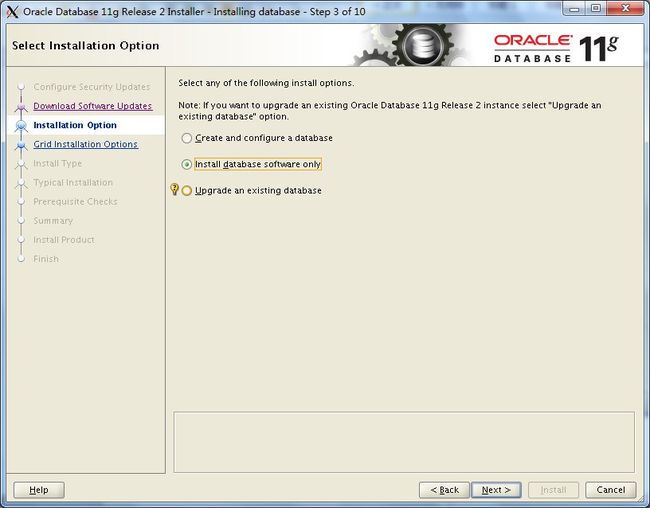

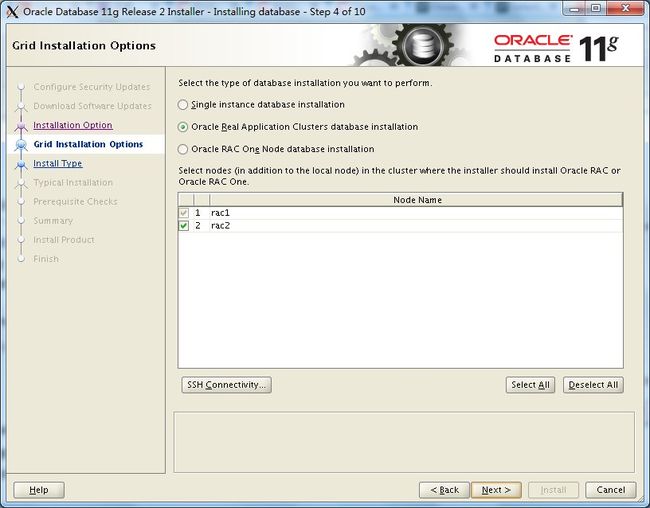

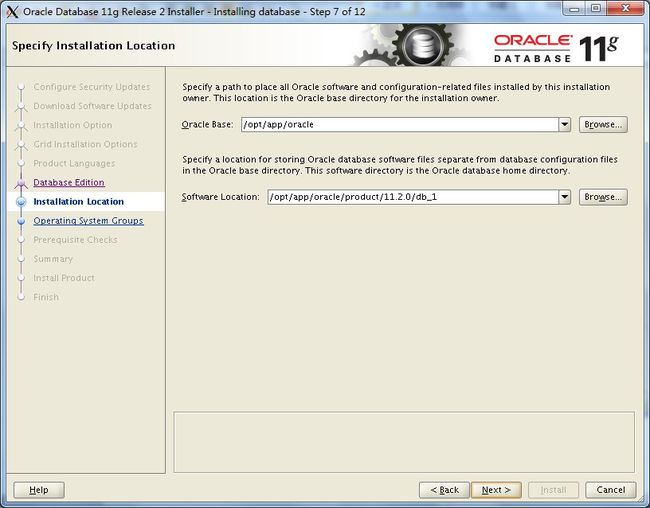

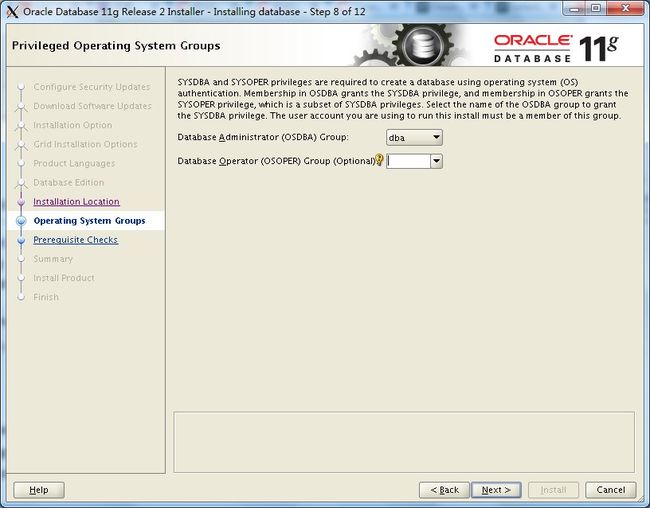

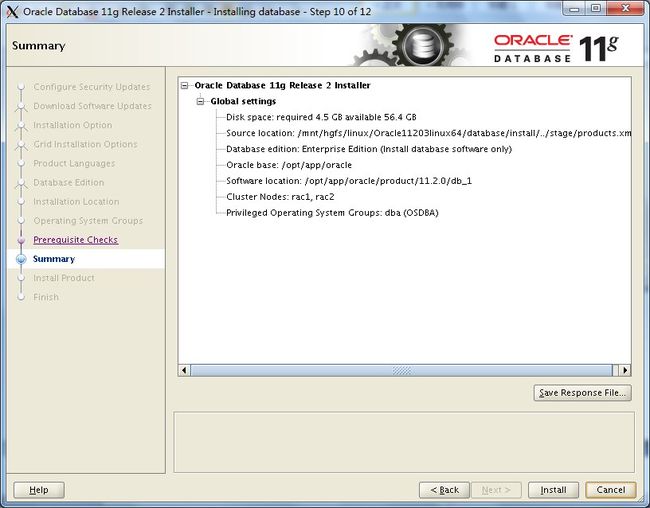

Install the RDBMS

Check prerequisites

[oracle@rac1 bin]$ /opt/app/11.2.0/grid/bin/cluvfy stage -pre dbinst -n rac1,rac2 -verbose

Once the checks pass, start the installation

[oracle@rac1 database]$ ./runInstaller

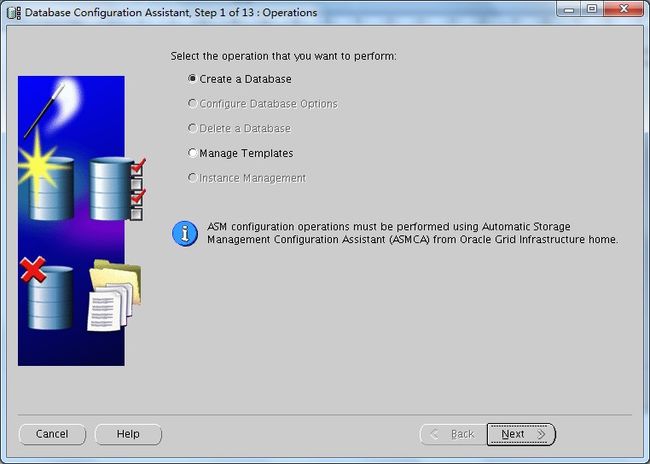

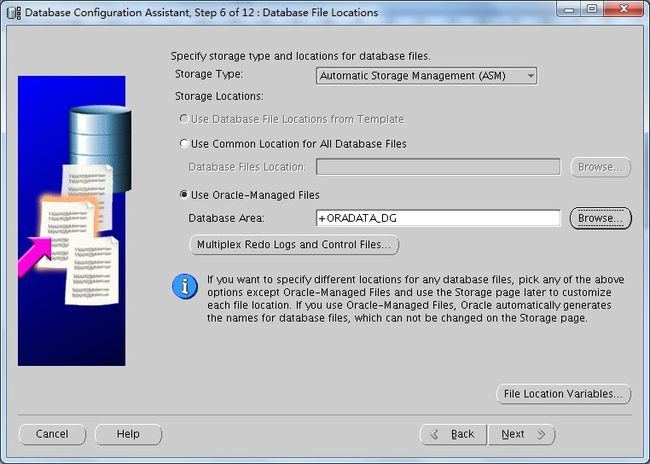

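Create the database. Afterwards, both instances should report OPEN; the output below is consistent with a query against gv$instance: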

INST_ID INSTANCE_NAME STATUS

---------- ---------------- ------------

1 oradb_1 OPEN

2 oradb_2 OPEN