Android multimedia local playback flow: video playback (based on Jelly Bean), part 3

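The previous article covered the overall multimedia framework. In this article we start with local playback of media files, which is a basic feature of any phone.

Phones on the market keep getting more powerful, and supporting HD video (1920x1080, i.e. 1080p) is no longer a challenge. So which media formats do mainstream Android players support? Popular formats such as mp3, mp4, m4a, m4v and amr are generally supported. How well they are supported depends on the handset and chip vendors. For the full list of formats, see frameworks/base/media/java/android/media/MediaFile.java.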

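Now to the main topic: local playback of media files (video playback). The tools used are:

- Source Insight
- astah (a free diagramming tool)
- the Android 4.1 source code, which can be downloaded from Google's source site: http://source.android.com/source/downloading.html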

In general, an upper-layer application that wants to play a media file locally goes through the following steps:

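```java
MediaPlayer mMediaPlayer = new MediaPlayer();
mMediaPlayer.setDataSource(mContext, mUri);
mMediaPlayer.setDisplay(mSurfaceHolder);
mMediaPlayer.setAudioStreamType(AudioManager.STREAM_MUSIC);
// prepareAsync() returns immediately. start() is only legal once preparation
// has finished, so it is called from the OnPreparedListener callback.
mMediaPlayer.setOnPreparedListener(new MediaPlayer.OnPreparedListener() {
    @Override
    public void onPrepared(MediaPlayer mp) {
        mp.start();
    }
});
mMediaPlayer.prepareAsync();
```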

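Let's start with setDataSource. This method does two things:

1. It obtains a player appropriate to the file type.
2. It creates a MediaExtractor for that file type, which parses the media file and records the main metadata.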

The code is as follows:

frameworks/av/media/libmedia/mediaplayer.cpp

```cpp
status_t MediaPlayer::setDataSource(
        const char *url, const KeyedVector<String8, String8> *headers)
{
    ALOGV("setDataSource(%s)", url);
    status_t err = BAD_VALUE;
    if (url != NULL) {
        const sp<IMediaPlayerService>& service(getMediaPlayerService());
        if (service != 0) {
            sp<IMediaPlayer> player(service->create(getpid(), this, mAudioSessionId));
            if ((NO_ERROR != doSetRetransmitEndpoint(player)) ||
                (NO_ERROR != player->setDataSource(url, headers))) {
                player.clear();
            }
            err = attachNewPlayer(player);
        }
    }
    return err;
}
```

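First, let's look at how setDataSource obtains the player. The overall flow is shown in the diagram below.

MediaPlayerService handles requests from outside. For each app-side player, MediaPlayerService creates a dedicated Client to serve it.

Client is defined as follows: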

frameworks/av/media/libmediaplayerservice/MediaPlayerService.h

```cpp
class MediaPlayerService : public BnMediaPlayerService
{
    ...
    class Client : public BnMediaPlayer {
        ...
    private:
        friend class MediaPlayerService;
        Client(const sp<MediaPlayerService>& service,
               pid_t pid,
               int32_t connId,
               const sp<IMediaPlayerClient>& client,
               int audioSessionId,
               uid_t uid);
        ...
    };
    ...
};
```

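As the code shows, Client is a subclass of BnMediaPlayer, i.e. a local binder. Since there is a BnMediaPlayer, the app side needs a corresponding BpMediaPlayer. Obtaining it takes two steps:

1. Obtain a BpMediaPlayerService.
2. Call `sp<IMediaPlayer> player(service->create(getpid(), this, mAudioSessionId));` in setDataSource. This call returns a BpMediaPlayer.

To obtain BpMediaPlayerService, the app first asks ServiceManager for the media player service, which is registered as "media.player". The logic is:

- If a proxy has already been obtained, return the existing BpXX.
- Otherwise, look the service up (waiting until it has been published) and create the BpXX proxy for it.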
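The lookup-then-cache step can be modeled outside Android with a toy registry. This is a minimal sketch, not the AOSP code. `ToyServiceManager`, `Binder` and `ServiceProxy` are hypothetical stand-ins for ServiceManager, the raw IBinder handle and BpMediaPlayerService. Only the service name "media.player" comes from Android.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <mutex>
#include <string>

// Hypothetical stand-ins: Binder plays the raw IBinder handle returned by the
// registry; ServiceProxy plays BpMediaPlayerService wrapping that handle.
struct Binder { std::string name; };
struct ServiceProxy {
    std::shared_ptr<Binder> remote;  // the handle this proxy forwards calls to
};

// Toy registry: services are published under a name such as "media.player".
class ToyServiceManager {
public:
    void addService(const std::string& name) {
        services_[name] = std::make_shared<Binder>(Binder{name});
    }
    std::shared_ptr<Binder> getService(const std::string& name) const {
        auto it = services_.find(name);
        return it == services_.end() ? nullptr : it->second;
    }
private:
    std::map<std::string, std::shared_ptr<Binder>> services_;
};

// Return the cached proxy if one exists. Otherwise look the service up and
// wrap it in a new proxy. Returns nullptr while the service is unpublished
// (the real client keeps waiting instead of giving up).
std::shared_ptr<ServiceProxy> getMediaPlayerService(const ToyServiceManager& sm) {
    static std::mutex lock;
    static std::shared_ptr<ServiceProxy> cached;
    std::lock_guard<std::mutex> guard(lock);
    if (!cached) {
        std::shared_ptr<Binder> binder = sm.getService("media.player");
        if (!binder) return nullptr;
        cached = std::make_shared<ServiceProxy>(ServiceProxy{binder});
    }
    return cached;
}
```

Every later call returns the same proxy object, so the lookup cost is paid only once per process.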

With BpMediaPlayerService we can talk to MediaPlayerService. MediaPlayerService then creates a Client to serve the corresponding BpMediaPlayer on the app side:

frameworks/av/media/libmediaplayerservice/MediaPlayerService.cpp

```cpp
sp<IMediaPlayer> MediaPlayerService::create(pid_t pid, const sp<IMediaPlayerClient>& client,
        int audioSessionId)
{
    int32_t connId = android_atomic_inc(&mNextConnId);
    sp<Client> c = new Client(
            this, pid, connId, client, audioSessionId,
            IPCThreadState::self()->getCallingUid());
    ALOGV("Create new client(%d) from pid %d, uid %d, ", connId, pid,
         IPCThreadState::self()->getCallingUid());
    wp<Client> w = c;
    {
        Mutex::Autolock lock(mLock);
        mClients.add(w);
    }
    return c;
}
```
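The bookkeeping in create() follows a general pattern: allocate a unique connection id atomically, then keep only a weak reference to each client. The service therefore never keeps a client alive on its own.

Below is a minimal sketch of that pattern with standard C++ types. `ToyPlayerService` and `Client` are hypothetical. `std::atomic::fetch_add` and `std::weak_ptr` stand in for android_atomic_inc and wp<>. The starting id of 1 is an assumption of the sketch.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <memory>
#include <mutex>
#include <vector>

// Hypothetical stand-in for MediaPlayerService::Client.
struct Client {
    int pid;
    int connId;
};

class ToyPlayerService {
public:
    // Same shape as create(): unique id, new Client, weak registration,
    // strong reference returned to the caller.
    std::shared_ptr<Client> create(int pid) {
        int connId = nextConnId_.fetch_add(1);  // returns the previous value
        auto c = std::make_shared<Client>(Client{pid, connId});
        std::lock_guard<std::mutex> guard(lock_);
        clients_.push_back(c);  // stored as weak_ptr, does not own the Client
        return c;
    }

    // Count registered clients that someone still holds a strong reference to.
    std::size_t liveClients() {
        std::lock_guard<std::mutex> guard(lock_);
        std::size_t n = 0;
        for (const auto& w : clients_) {
            if (!w.expired()) ++n;
        }
        return n;
    }

private:
    std::atomic<int> nextConnId_{1};  // starting value is an assumption
    std::mutex lock_;
    std::vector<std::weak_ptr<Client>> clients_;
};
```

Once the app side drops its last reference to a client, that client's weak entry simply expires.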

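At this point, on the service side, `sp<IMediaPlayer> player(service->create(getpid(), this, mAudioSessionId));` has effectively become `sp<IMediaPlayer> player(Client);`.

So how does the app process end up with the BpMediaPlayer it needs? The sequence is:

1. The Client is written into the reply Parcel as a binder object.
2. On the app side, BpMediaPlayerService::create() reads that binder back.
3. It converts the binder with interface_cast<IMediaPlayer>(), which calls IMediaPlayer::asInterface().

Look at the declaration below, where INTERFACE is MediaPlayer. Substitute it into the macros and the picture becomes clear: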

frameworks/av/include/media/IMediaPlayer.h

```cpp
class IMediaPlayer: public IInterface
{
public:
    DECLARE_META_INTERFACE(MediaPlayer);
    ...
};
```

The DECLARE_META_INTERFACE macro is defined as follows, in frameworks/native/include/binder/IInterface.h:

```cpp
#define DECLARE_META_INTERFACE(INTERFACE)                               \
    static const android::String16 descriptor;                          \
    static android::sp<I##INTERFACE> asInterface(                       \
            const android::sp<android::IBinder>& obj);                  \
    virtual const android::String16& getInterfaceDescriptor() const;    \
    I##INTERFACE();                                                     \
    virtual ~I##INTERFACE();
```

Every DECLARE in the header has a matching IMPLEMENT. IMediaPlayer.cpp invokes `IMPLEMENT_META_INTERFACE(MediaPlayer, "android.media.IMediaPlayer")`, and the macro is defined as:

```cpp
#define IMPLEMENT_META_INTERFACE(INTERFACE, NAME)                       \
    const android::String16 I##INTERFACE::descriptor(NAME);             \
    const android::String16&                                            \
            I##INTERFACE::getInterfaceDescriptor() const {              \
        return I##INTERFACE::descriptor;                                \
    }                                                                   \
    android::sp<I##INTERFACE> I##INTERFACE::asInterface(                \
            const android::sp<android::IBinder>& obj)                   \
    {                                                                   \
        android::sp<I##INTERFACE> intr;                                 \
        if (obj != NULL) {                                              \
            intr = static_cast<I##INTERFACE*>(                          \
                obj->queryLocalInterface(                               \
                        I##INTERFACE::descriptor).get());               \
            if (intr == NULL) {                                         \
                intr = new Bp##INTERFACE(obj);                          \
            }                                                           \
        }                                                               \
        return intr;                                                    \
    }                                                                   \
    I##INTERFACE::I##INTERFACE() { }                                    \
    I##INTERFACE::~I##INTERFACE() { }                                   \
```
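The key branch in asInterface() is the following:

- If the binder lives in the caller's own process and implements the descriptor, queryLocalInterface() returns the object itself.
- Otherwise a new proxy (Bp##INTERFACE) is wrapped around the remote handle.

Below is a self-contained model of that branch. All type names here (`BinderLike`, `LocalPlayer`, `RemoteHandle`, `ProxyPlayer`) are hypothetical simplifications rather than libbinder classes. Only the descriptor string is taken from IMediaPlayer.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

const std::string kDescriptor = "android.media.IMediaPlayer";

struct IMediaPlayerLike {
    virtual ~IMediaPlayerLike() = default;
    virtual std::string side() const = 0;  // "local" or "proxy"
};

struct BinderLike {
    virtual ~BinderLike() = default;
    // Remote handles have no local interface.
    virtual IMediaPlayerLike* queryLocalInterface(const std::string&) { return nullptr; }
};

// Like BnMediaPlayer/Client: an in-process object that is both a binder
// and the interface itself.
struct LocalPlayer : BinderLike, IMediaPlayerLike {
    IMediaPlayerLike* queryLocalInterface(const std::string& d) override {
        return d == kDescriptor ? this : nullptr;
    }
    std::string side() const override { return "local"; }
};

// Like a BpBinder handle that refers to an object in another process.
struct RemoteHandle : BinderLike {};

// Like BpMediaPlayer: forwards calls through the remote handle.
struct ProxyPlayer : IMediaPlayerLike {
    explicit ProxyPlayer(std::shared_ptr<BinderLike> r) : remote(std::move(r)) {}
    std::string side() const override { return "proxy"; }
    std::shared_ptr<BinderLike> remote;
};

// Same decision as the macro's asInterface(): local object if available,
// otherwise a new proxy; null in, null out.
std::shared_ptr<IMediaPlayerLike> asInterface(const std::shared_ptr<BinderLike>& obj) {
    std::shared_ptr<IMediaPlayerLike> intr;
    if (obj != nullptr) {
        if (IMediaPlayerLike* local = obj->queryLocalInterface(kDescriptor)) {
            // Aliasing constructor: share ownership with obj, point at the interface.
            intr = std::shared_ptr<IMediaPlayerLike>(obj, local);
        } else {
            intr = std::make_shared<ProxyPlayer>(obj);
        }
    }
    return intr;
}
```

In the app process, the binder read from the reply Parcel is a remote handle. asInterface() therefore takes the proxy branch, and that branch is where the app's BpMediaPlayer comes from.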

Through the method above we obtain a BpMediaPlayer, which wraps a remote binder. We can then talk to BnMediaPlayer through it, and the two sides interact via IBinder.

Where is BpMediaPlayer implemented?

frameworks/av/media/libmedia/IMediaPlayer.cpp:

```cpp
class BpMediaPlayer: public BpInterface<IMediaPlayer>
{
public:
    BpMediaPlayer(const sp<IBinder>& impl)
        : BpInterface<IMediaPlayer>(impl)
    {
    }

    // disconnect from media player service
    void disconnect()
    {
        Parcel data, reply;
        data.writeInterfaceToken(IMediaPlayer::getInterfaceDescriptor());
        remote()->transact(DISCONNECT, data, &reply);
    }

    status_t setDataSource(const char* url,
            const KeyedVector<String8, String8>* headers)
    {
        Parcel data, reply;
        data.writeInterfaceToken(IMediaPlayer::getInterfaceDescriptor());
        data.writeCString(url);
        if (headers == NULL) {
            data.writeInt32(0);
        } else {
            // serialize the headers
            data.writeInt32(headers->size());
            for (size_t i = 0; i < headers->size(); ++i) {
                data.writeString8(headers->keyAt(i));
                data.writeString8(headers->valueAt(i));
            }
        }
        remote()->transact(SET_DATA_SOURCE_URL, data, &reply);
        return reply.readInt32();
    }
    ...
};
```
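The header serialization in setDataSource() is a simple length-prefixed layout: an int32 count, followed by alternating key and value strings. The receiving side reads the fields back in the same order.

Below is a minimal round-trip sketch of that layout. `ToyParcel` is a hypothetical ordered stream, not android::Parcel, and `std::map` stands in for KeyedVector<String8, String8>.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Hypothetical ordered stream: values are read back in the order written.
class ToyParcel {
public:
    void writeInt32(int32_t v) { items_.push_back(std::to_string(v)); }
    void writeString(const std::string& s) { items_.push_back(s); }
    int32_t readInt32() { return static_cast<int32_t>(std::stol(items_.at(pos_++))); }
    std::string readString() { return items_.at(pos_++); }
private:
    std::vector<std::string> items_;
    std::size_t pos_ = 0;
};

// Writer side: a count of 0 means "no headers", as in BpMediaPlayer.
void writeHeaders(ToyParcel& data, const std::map<std::string, std::string>* headers) {
    if (headers == nullptr) {
        data.writeInt32(0);
        return;
    }
    data.writeInt32(static_cast<int32_t>(headers->size()));
    for (const auto& kv : *headers) {
        data.writeString(kv.first);   // key
        data.writeString(kv.second);  // value
    }
}

// Reader side: read the count, then that many key/value pairs.
std::map<std::string, std::string> readHeaders(ToyParcel& data) {
    std::map<std::string, std::string> out;
    int32_t n = data.readInt32();
    for (int32_t i = 0; i < n; ++i) {
        std::string key = data.readString();  // separate statements keep the
        std::string value = data.readString();  // read order well defined
        out[key] = value;
    }
    return out;
}
```

A missing header map and an empty one both serialize to a count of 0, so the receiver does not need to tell them apart.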

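`remote()` returns an IBinder (a BpBinder on the app side). Its transact() method calls

```cpp
IPCThreadState::self()->transact(
        mHandle, code, data, reply, flags);
```

to tell the corresponding BnMediaPlayer (the Client) to handle the request. How the binder driver is opened, and how the request reaches MediaPlayerService::Client, are not covered here. Interested readers can trace the code further.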

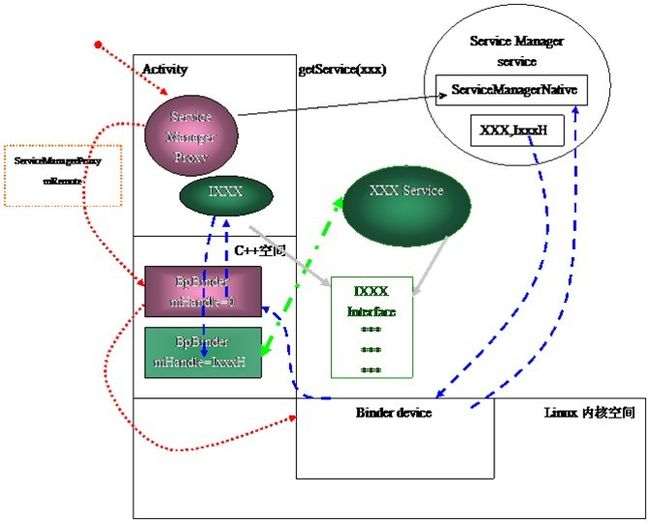

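The steps above rely on the Binder IPC mechanism. If you are not familiar with it, see:

"Android系统进程间通信(IPC)机制Binder中的Server和Client获得Service Manager接口之路" (how the Server and Client in Android's Binder IPC mechanism obtain the Service Manager interface).

With BpMediaPlayer in hand, calling setDataSource reaches Client::setDataSource on the service side, which creates the player: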

```cpp
status_t MediaPlayerService::Client::setDataSource(
        const char *url, const KeyedVector<String8, String8> *headers)
{
    ...
    // A content:// URL must first be converted into a file descriptor.
    if (strncmp(url, "content://", 10) == 0) {
        ...
        int fd = android::openContentProviderFile(url16);
        ...
        setDataSource(fd, 0, 0x7fffffffffLL); // this sets mStatus
        close(fd);
        return mStatus;
    } else {
        // Determine the player type before creating the player.
        player_type playerType = getPlayerType(url);
        ...
        LOGV("player type = %d", playerType);

        // create the right type of player
        sp<MediaPlayerBase> p = createPlayer(playerType);
        mStatus = p->setDataSource(url, headers);
        ...
        return mStatus;
    }
}
```

getPlayerType() decides the player type from the URL's file extension, and the default is Stagefright. Since we are studying local playback, the player here is StagefrightPlayer:

```cpp
player_type getPlayerType(const char* url)
{
    if (TestPlayerStub::canBeUsed(url)) {
        return TEST_PLAYER;
    }

    // use MidiFile for MIDI extensions
    int lenURL = strlen(url);
    for (int i = 0; i < NELEM(FILE_EXTS); ++i) {
        int len = strlen(FILE_EXTS[i].extension);
        int start = lenURL - len;
        if (start > 0) {
            if (!strncasecmp(url + start, FILE_EXTS[i].extension, len)) {
                return FILE_EXTS[i].playertype;
            }
        }
    }
    ...
    return getDefaultPlayerType();
}
```
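The extension check is a case-insensitive suffix match against a table. It requires at least one character before the extension (`start > 0`) and falls back to a default player.

Below is a portable sketch of that logic. The enum, the table and its three entries are hypothetical. The table is an illustrative subset of the MIDI-style extensions, not the real FILE_EXTS contents, and a hand-written helper replaces POSIX strncasecmp.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <cstring>

// Hypothetical player types for the sketch.
enum ToyPlayerType { kStagefrightPlayer, kMidiPlayer };

struct ExtEntry {
    const char* extension;
    ToyPlayerType type;
};

// Illustrative subset only; the real table lists more extensions.
const ExtEntry kFileExts[] = {
    {".mid", kMidiPlayer},
    {".midi", kMidiPlayer},
    {".xmf", kMidiPlayer},
};

// Compare n characters ignoring case (portable stand-in for strncasecmp).
static bool equalsIgnoreCase(const char* a, const char* b, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i) {
        if (std::tolower(static_cast<unsigned char>(a[i])) !=
            std::tolower(static_cast<unsigned char>(b[i]))) {
            return false;
        }
    }
    return true;
}

ToyPlayerType getPlayerType(const char* url) {
    std::size_t lenURL = std::strlen(url);
    for (const ExtEntry& e : kFileExts) {
        std::size_t len = std::strlen(e.extension);
        // lenURL > len corresponds to start > 0 in the original.
        if (lenURL > len && equalsIgnoreCase(url + lenURL - len, e.extension, len)) {
            return e.type;
        }
    }
    return kStagefrightPlayer;  // default for everything else, e.g. local .mp4
}
```

Matching on the extension alone is cheap, but it means a file with a misleading extension is routed to the wrong player.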

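We now have the player we wanted. The key topic in this part is Binder communication, and the Binder flow is explained by the diagram below, which is worth studying carefully.

With the player obtained, we move on to the second step of setDataSource: obtaining the matching MediaExtractor and storing the relevant data.

I also drew a sequence diagram for this step.

When the player is obtained, instantiating a StagefrightPlayer also instantiates an AwesomePlayer. AwesomePlayer does the real work; StagefrightPlayer is only the interface exposed to the outside.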

The player is created by createPlayer() in frameworks/av/media/libmediaplayerservice/MediaPlayerService.cpp:

```cpp
static sp<MediaPlayerBase> createPlayer(player_type playerType, void* cookie,
        notify_callback_f notifyFunc)
{
    ...
        case STAGEFRIGHT_PLAYER:
            ALOGV(" create StagefrightPlayer");
            p = new StagefrightPlayer;
            break;
    ...
}
```

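Creating the StagefrightPlayer instance also creates an AwesomePlayer (mPlayer), as frameworks/av/media/libmediaplayerservice/StagefrightPlayer.cpp shows:

```cpp
StagefrightPlayer::StagefrightPlayer()
    : mPlayer(new AwesomePlayer) {
    LOGV("StagefrightPlayer");
    mPlayer->setListener(this);
}
```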
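StagefrightPlayer and AwesomePlayer form a facade-and-engine pair:

- The outer object owns the engine and forwards calls to it.
- The outer object registers itself as the engine's listener, so events flow back through it.

Below is a minimal sketch of that relationship. `FacadePlayer`, `Engine`, `Listener` and the event code are hypothetical names, not the AOSP classes.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical listener interface the engine reports events to.
struct Listener {
    virtual ~Listener() = default;
    virtual void sendEvent(int msg) = 0;
};

// Plays the role of AwesomePlayer: does the actual work.
class Engine {
public:
    static constexpr int kEventSourceSet = 1;  // hypothetical event code

    void setListener(Listener* l) { listener_ = l; }
    int setDataSource(const std::string& url) {
        url_ = url;
        if (listener_) listener_->sendEvent(kEventSourceSet);
        return 0;  // 0 plays the role of OK
    }
    const std::string& url() const { return url_; }

private:
    Listener* listener_ = nullptr;
    std::string url_;
};

// Plays the role of StagefrightPlayer: the externally visible player.
class FacadePlayer : public Listener {
public:
    FacadePlayer() : engine_(std::make_unique<Engine>()) {
        engine_->setListener(this);  // like mPlayer->setListener(this)
    }
    // Forward the call unchanged to the engine.
    int setDataSource(const std::string& url) { return engine_->setDataSource(url); }
    // Events from the engine arrive here.
    void sendEvent(int msg) override { lastEvent = msg; }
    const Engine& engine() const { return *engine_; }

    int lastEvent = 0;

private:
    std::unique_ptr<Engine> engine_;
};
```

Because callers only see the facade, the engine behind it can be replaced without changing the player interface that MediaPlayerService uses.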

Since AwesomePlayer does the real work, let's go straight into it:

frameworks/av/media/libstagefright/AwesomePlayer.cpp

```cpp
status_t AwesomePlayer::setDataSource_l(
        const sp<DataSource> &dataSource) {
    // Create the extractor that matches the container format.
    sp<MediaExtractor> extractor = MediaExtractor::Create(dataSource);
    ...
    return setDataSource_l(extractor);
}

status_t AwesomePlayer::setDataSource_l(const sp<MediaExtractor> &extractor) {
    ...
    for (size_t i = 0; i < extractor->countTracks(); ++i) {
        sp<MetaData> meta = extractor->getTrackMetaData(i); // metadata of this track

        int32_t bitrate;
        if (!meta->findInt32(kKeyBitRate, &bitrate)) {
            const char *mime;
            CHECK(meta->findCString(kKeyMIMEType, &mime));
            ALOGV("track of type '%s' does not publish bitrate", mime);

            totalBitRate = -1;
            break;
        }

        totalBitRate += bitrate;
    }
    ...
        if (!haveVideo && !strncasecmp(mime, "video/", 6)) {
            setVideoSource(extractor->getTrack(i)); // stored in mVideoTrack
            haveVideo = true;
        } else if (!haveAudio && !strncasecmp(mime, "audio/", 6)) {
            setAudioSource(extractor->getTrack(i)); // stored in mAudioTrack
            haveAudio = true;
        }
    ...
    return OK;
}
```
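Two rules in the excerpt above are worth isolating:

- The total bitrate is the sum of per-track bitrates, but it becomes -1 (unknown) as soon as one track does not publish a bitrate.
- Only the first track whose MIME type starts with "video/" and the first starting with "audio/" are selected, compared case-insensitively.

Below is a minimal sketch of both rules. `TrackMeta`, `Selection` and `selectTracks` are hypothetical, with `std::optional` standing in for a missing kKeyBitRate entry. C++17 is assumed.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <optional>
#include <string>
#include <vector>

struct TrackMeta {
    std::string mime;
    std::optional<int32_t> bitrate;  // empty if the track publishes no bitrate
};

struct Selection {
    int64_t totalBitRate = 0;  // -1 if any track lacks a bitrate
    int videoTrack = -1;       // index of the first video/* track, -1 if none
    int audioTrack = -1;       // index of the first audio/* track, -1 if none
};

// Case-insensitive prefix test, like strncasecmp(mime, "video/", 6) == 0.
static bool hasPrefixIgnoreCase(const std::string& s, const char* prefix) {
    std::size_t n = std::strlen(prefix);
    if (s.size() < n) return false;
    for (std::size_t i = 0; i < n; ++i) {
        if (std::tolower(static_cast<unsigned char>(s[i])) !=
            std::tolower(static_cast<unsigned char>(prefix[i]))) {
            return false;
        }
    }
    return true;
}

Selection selectTracks(const std::vector<TrackMeta>& tracks) {
    Selection sel;
    // Pass 1: sum bitrates; one missing value makes the total unknown.
    for (const TrackMeta& t : tracks) {
        if (!t.bitrate) {
            sel.totalBitRate = -1;
            break;
        }
        sel.totalBitRate += *t.bitrate;
    }
    // Pass 2: keep only the first video track and the first audio track.
    for (std::size_t i = 0; i < tracks.size(); ++i) {
        if (sel.videoTrack < 0 && hasPrefixIgnoreCase(tracks[i].mime, "video/")) {
            sel.videoTrack = static_cast<int>(i);
        } else if (sel.audioTrack < 0 && hasPrefixIgnoreCase(tracks[i].mime, "audio/")) {
            sel.audioTrack = static_cast<int>(i);
        }
    }
    return sel;
}
```

"First match wins" is why an additional audio track, such as a second language or a karaoke track, is ignored unless the player is extended to switch tracks.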

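MediaExtractor involves many details of media container formats, such as how tracks are structured and how many kinds of track exist. We will cover these in detail in the videoRecorder article. For now it is enough to know that the extractor pulls out the relevant information.

Once setDataSource completes successfully, the player enters the MEDIA_PLAYER_INITIALIZED state. The player has the following states: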

MEDIA_PLAYER_STATE_ERROR

MEDIA_PLAYER_IDLE

MEDIA_PLAYER_INITIALIZED

MEDIA_PLAYER_PREPARING

MEDIA_PLAYER_PREPARED

MEDIA_PLAYER_STARTED

MEDIA_PLAYER_PAUSED

MEDIA_PLAYER_STOPPED

MEDIA_PLAYER_PLAYBACK_COMPLETE
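To the best of our knowledge, mediaplayer.h encodes these states as bit flags. That lets MediaPlayer validate a call with a single mask test. Roughly:

- start() accepts PREPARED, PAUSED or PLAYBACK_COMPLETE.
- prepare accepts INITIALIZED or STOPPED.

Below is a standalone sketch of that idea. Treat the exact flag values and the two helper functions as assumptions of the sketch, not a copy of the header.

```cpp
#include <cassert>

// Bit-flag encoding of the player states (values assumed for this sketch).
enum media_player_states {
    MEDIA_PLAYER_STATE_ERROR        = 0,
    MEDIA_PLAYER_IDLE               = 1 << 0,
    MEDIA_PLAYER_INITIALIZED        = 1 << 1,
    MEDIA_PLAYER_PREPARING          = 1 << 2,
    MEDIA_PLAYER_PREPARED           = 1 << 3,
    MEDIA_PLAYER_STARTED            = 1 << 4,
    MEDIA_PLAYER_PAUSED             = 1 << 5,
    MEDIA_PLAYER_STOPPED            = 1 << 6,
    MEDIA_PLAYER_PLAYBACK_COMPLETE  = 1 << 7,
};

// Hypothetical helper: is start() legal in this state?
bool canStart(int state) {
    return (state & (MEDIA_PLAYER_PREPARED | MEDIA_PLAYER_PLAYBACK_COMPLETE |
                     MEDIA_PLAYER_PAUSED)) != 0;
}

// Hypothetical helper: is prepare()/prepareAsync() legal in this state?
bool canPrepare(int state) {
    return (state & (MEDIA_PLAYER_INITIALIZED | MEDIA_PLAYER_STOPPED)) != 0;
}
```

This is also why an app must not call start() right after prepareAsync(). While preparation is still running the player is in MEDIA_PLAYER_PREPARING, which is not in the start() mask.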

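That completes setDataSource. The point of walking through the flow is to become familiar with the architecture, so it is worth studying carefully. When a project needs it, you can adapt the same pattern to your own media formats, for example supporting multiple tracks and track switching to implement KTV (karaoke) features.

In the next article we will cover the prepare process, which mainly consists of matching and initializing the codecs.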