Detailed analysis of ARMv8 (AArch64) page table setup

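Contents

1 ARMv8 memory management

1.1 Memory layout in AArch64 Linux

1.2 AArch64 virtual address format

1.2.1 Virtual address with 4K pages

1.2.2 Virtual address with 64K pages

2 Analysis of page table setup in head.S

2.1 The page table setup function __create_page_tables

2.1.1 Analysis of pgtbl x25, x26, x24

2.1.2 MM_MMUFLAGS explained

2.1.3 The create_pgd_entry and create_block_map macros explained

3 Questions and answers

3.1 Before the TLB is enabled, how is the physical memory size communicated to the OS kernel?

3.2 How the PGD and PTE entries are filled in

3.2.1 map_mem()

3.2.2 create_mapping()

3.2.3 alloc_init_pud()

3.2.4 alloc_init_pmd()

3.2.5 set_pmd()

3.2.6 alloc_init_pte()

3.2.7 set_pte()

3.3 With three-level ARMv8 page tables, the full set of page tables (1GB in size) will not fit in memory; is part of it kept on disk?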

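1 ARMv8 memory management

1.1 Memory layout in AArch64 Linux

The ARMv8 architecture supports 48-bit virtual addresses, configured as 4-level page tables (4K pages) or 3-level page tables (64K pages). The Linux kernel analyzed here uses only 39-bit virtual addresses (512GB for the kernel, 512GB for user space), configured as 3-level page tables (4K pages) or 2-level page tables (64K pages).

User-space addresses have bits 63:39 all set to 0, and kernel-space addresses have bits 63:39 all set to 1, so bit 63 of a virtual address can be used to select TTBRx. swapper_pg_dir contains only kernel (global) mappings, while a user pgd contains only user (non-global) mappings. The address of swapper_pg_dir is written to TTBR1 and never to TTBR0.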
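The rule for bits 63:39 can be sketched in C as follows. This is only an illustration under the 39-bit configuration described above; the names classify_va and va_kind are hypothetical and do not come from the kernel.

```c
#include <stdint.h>

#define VA_BITS 39  /* assumption: the 39-bit configuration described above */

enum va_kind { VA_USER_TTBR0, VA_KERNEL_TTBR1, VA_INVALID };

/* Classify a virtual address by its upper bits 63:39 (hypothetical helper). */
enum va_kind classify_va(uint64_t va)
{
    uint64_t upper = va >> VA_BITS;                           /* bits 63:39 */
    uint64_t all_ones = (UINT64_C(1) << (64 - VA_BITS)) - 1;  /* 25 one bits */

    if (upper == 0)
        return VA_USER_TTBR0;     /* translated through TTBR0 */
    if (upper == all_ones)
        return VA_KERNEL_TTBR1;   /* translated through TTBR1 */
    return VA_INVALID;            /* in neither range */
}
```

An address such as 0x0000008000000000 has only bit 39 set, so it belongs to neither range.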

AArch64 Linux memory layout:

    Start             End               Size     Use
    -----------------------------------------------------------------------
    0000000000000000  0000007fffffffff  512GB    user
    ffffff8000000000  ffffffbbfffcffff  ~240GB   vmalloc
    ffffffbbfffd0000  ffffffbbfffdffff  64KB     [guard page]
    ffffffbbfffe0000  ffffffbbfffeffff  64KB     PCI I/O space
    ffffffbbffff0000  ffffffbbffffffff  64KB     [guard page]
    ffffffbc00000000  ffffffbdffffffff  8GB      vmemmap
    ffffffbe00000000  ffffffbffbffffff  ~8GB     [guard, future vmemmap]
    ffffffbffc000000  ffffffbfffffffff  64MB     modules
    ffffffc000000000  ffffffffffffffff  256GB    memory

1.2 AArch64 virtual address format

1.2.1 Virtual address with 4K pages

1.2.2 Virtual address with 64K pages

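2 Analysis of page table setup in head.S

2.1 The page table setup function __create_page_tables

This function runs at kernel boot and creates the minimal page tables required to start up, covering the FDT (device tree) and the kernel image. Once the kernel is running normally, create_mapping must still be run to create page tables for all physical memory, which overrides the page tables created by __create_page_tables.

Source file that creates the page tables when the kernel starts running: arm64/kernel/head.S, line 345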

    /*
     * Setup the initial page tables. We only setup the barest amount which is
     * required to get the kernel running. The following sections are required:
     *   - identity mapping to enable the MMU (low address, TTBR0)
     *   - first few MB of the kernel linear mapping to jump to once the MMU has
     *     been enabled, including the FDT blob (TTBR1)
     */
    __create_page_tables:
        pgtbl   x25, x26, x24               // idmap_pg_dir and swapper_pg_dir addresses

        /*
         * Clear the two newly created page tables (for TTBR0 and TTBR1).
         */
        mov     x0, x25
        add     x6, x26, #SWAPPER_DIR_SIZE
    1:  stp     xzr, xzr, [x0], #16
        stp     xzr, xzr, [x0], #16
        stp     xzr, xzr, [x0], #16
        stp     xzr, xzr, [x0], #16
        cmp     x0, x6
        b.lo    1b

        ldr     x7, =MM_MMUFLAGS

        /*
         * Create the identity mapping.
         */
        add     x0, x25, #PAGE_SIZE         // section table address
        adr     x3, __turn_mmu_on           // virtual/physical address
        create_pgd_entry x25, x0, x3, x5, x6    // expansion: see 2.1.3
        create_block_map x0, x7, x3, x5, x5, idmap=1

        /*
         * Map the kernel image (starting with PHYS_OFFSET).
         */
        add     x0, x26, #PAGE_SIZE         // section table address
        mov     x5, #PAGE_OFFSET
        create_pgd_entry x26, x0, x5, x3, x6
        ldr     x6, =KERNEL_END - 1
        mov     x3, x24                     // phys offset
        create_block_map x0, x7, x3, x5, x6

        /*
         * Map the FDT blob (maximum 2MB; must be within 512MB of
         * PHYS_OFFSET).
         */
        mov     x3, x21                     // FDT phys address
        and     x3, x3, #~((1 << 21) - 1)   // 2MB aligned
        mov     x6, #PAGE_OFFSET
        sub     x5, x3, x24                 // subtract PHYS_OFFSET
        tst     x5, #~((1 << 29) - 1)       // within 512MB?
        csel    x21, xzr, x21, ne           // zero the FDT pointer
        b.ne    1f
        add     x5, x5, x6                  // __va(FDT blob)
        add     x6, x5, #1 << 21            // 2MB for the FDT blob
        sub     x6, x6, #1                  // inclusive range
        create_block_map x0, x7, x3, x5, x6
    1:
        ret
    ENDPROC(__create_page_tables)

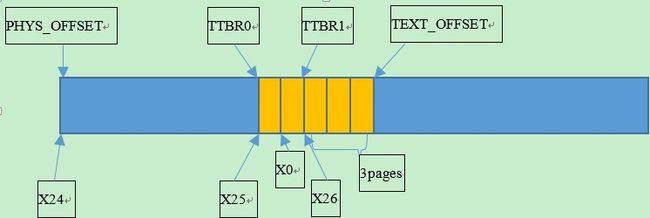

2.1.1 Analysis of pgtbl x25, x26, x24

pgtbl is a macro, defined as follows:

arm64/kernel/head.S, line 55

    .macro  pgtbl, ttb0, ttb1, phys
    add     \ttb1, \phys, #TEXT_OFFSET - SWAPPER_DIR_SIZE
    sub     \ttb0, \ttb1, #IDMAP_DIR_SIZE
    .endm

pgtbl x25, x26, x24 expands to:

    add     x26, x24, #TEXT_OFFSET - SWAPPER_DIR_SIZE
    sub     x25, x26, #IDMAP_DIR_SIZE

The constants involved are defined as follows:

    #define SWAPPER_DIR_SIZE    (3 * PAGE_SIZE)
    #define IDMAP_DIR_SIZE      (2 * PAGE_SIZE)

Notes:

1. For an introduction to TTBR0 and TTBR1, see page B4-1708 of the ARM ARM.

2. x25 holds the table address destined for TTBR0 (TTBR0 holds the base address of translation table 0).

3. x26 holds the table address destined for TTBR1 (TTBR1 holds the base address of translation table 1).

4. x24 holds PHYS_OFFSET, the physical address of the start of memory:

    #define PHYS_OFFSET     ({ memstart_addr; })

5. TEXT_OFFSET is the offset, relative to the start of RAM, at which the bootloader loads the kernel Image.

6. PAGE_OFFSET: the virtual address of the start of the kernel image.

Figure 1: Analysis of the pgtbl macro
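The two instructions of pgtbl amount to simple address arithmetic, which the following C sketch reproduces. The 4K page size and the TEXT_OFFSET value 0x80000 are assumptions chosen for illustration, and struct boot_tables is a hypothetical name.

```c
#include <stdint.h>

#define PAGE_SIZE        UINT64_C(4096)     /* assumption: 4K pages */
#define SWAPPER_DIR_SIZE (3 * PAGE_SIZE)
#define IDMAP_DIR_SIZE   (2 * PAGE_SIZE)
#define TEXT_OFFSET      UINT64_C(0x80000)  /* assumption: illustrative value */

struct boot_tables {
    uint64_t idmap_pg_dir;    /* x25, destined for TTBR0 */
    uint64_t swapper_pg_dir;  /* x26, destined for TTBR1 */
};

/* Mirror of the add/sub pair in pgtbl; phys plays the role of x24. */
struct boot_tables pgtbl(uint64_t phys)
{
    struct boot_tables t;
    t.swapper_pg_dir = phys + TEXT_OFFSET - SWAPPER_DIR_SIZE;
    t.idmap_pg_dir   = t.swapper_pg_dir - IDMAP_DIR_SIZE;
    return t;
}
```

With a hypothetical PHYS_OFFSET of 0x80000000, the swapper tables end exactly where the kernel image begins (0x80080000), and the idmap tables sit directly below them.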

2.1.2 MM_MMUFLAGS explained

In the file arm64/kernel/head.S, line 71:

    /*
     * Initial memory map attributes.
     */
    #ifndef CONFIG_SMP
    #define PTE_FLAGS   PTE_TYPE_PAGE | PTE_AF
    #define PMD_FLAGS   PMD_TYPE_SECT | PMD_SECT_AF
    #else
    #define PTE_FLAGS   PTE_TYPE_PAGE | PTE_AF | PTE_SHARED
    #define PMD_FLAGS   PMD_TYPE_SECT | PMD_SECT_AF | PMD_SECT_S
    #endif

    #ifdef CONFIG_ARM64_64K_PAGES
    #define MM_MMUFLAGS PTE_ATTRINDX(MT_NORMAL) | PTE_FLAGS
    #define IO_MMUFLAGS PTE_ATTRINDX(MT_DEVICE_nGnRE) | PTE_XN | PTE_FLAGS
    #else
    #define MM_MMUFLAGS PMD_ATTRINDX(MT_NORMAL) | PMD_FLAGS
    #define IO_MMUFLAGS PMD_ATTRINDX(MT_DEVICE_nGnRE) | PMD_SECT_XN | PMD_FLAGS
    #endif

From these definitions it is clear that MM_MMUFLAGS holds the memory attribute flags for the initial mappings, chosen according to the page size selected when the kernel was built: page (PTE) flags with 64K pages, section (PMD) flags with 4K pages.
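As a rough illustration of how the 4K-page variant of MM_MMUFLAGS is assembled, the sketch below ORs the individual fields together. The bit positions follow the ARMv8 block descriptor layout (type in bits 1:0, AttrIndx in bits 4:2, SH in bits 9:8, AF in bit 10); the MT_NORMAL index of 4 is an assumption about this kernel's MAIR setup, and mm_mmuflags is a hypothetical function.

```c
#include <stdint.h>

/* Assumed values, following the ARMv8 block descriptor layout. */
#define PMD_TYPE_SECT    (UINT64_C(1) << 0)    /* block (section) descriptor */
#define PMD_SECT_S       (UINT64_C(3) << 8)    /* SH[1:0] = inner shareable */
#define PMD_SECT_AF      (UINT64_C(1) << 10)   /* access flag */
#define PMD_ATTRINDX(t)  ((uint64_t)(t) << 2)  /* index into MAIR */
#define MT_NORMAL        4                     /* assumption: MAIR slot for normal memory */

/* Compose the 4K-page MM_MMUFLAGS value; smp selects the CONFIG_SMP branch. */
uint64_t mm_mmuflags(int smp)
{
    uint64_t pmd_flags = PMD_TYPE_SECT | PMD_SECT_AF;

    if (smp)
        pmd_flags |= PMD_SECT_S;
    return PMD_ATTRINDX(MT_NORMAL) | pmd_flags;
}
```

Under these assumptions the SMP build yields 0x711 and the uniprocessor build 0x411.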

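2.1.3 The create_pgd_entry and create_block_map macros explained

1. create_pgd_entry

    /*
     * Macro to populate the PGD for the corresponding block entry in the next
     * level (tbl) for the given virtual address.
     *
     * Preserves:   pgd, tbl, virt
     * Corrupts:    tmp1, tmp2
     */
    .macro  create_pgd_entry, pgd, tbl, virt, tmp1, tmp2
    lsr     \tmp1, \virt, #PGDIR_SHIFT
    and     \tmp1, \tmp1, #PTRS_PER_PGD - 1     // PGD index
    orr     \tmp2, \tbl, #3                     // PGD entry table type
    str     \tmp2, [\pgd, \tmp1, lsl #3]
    .endm

Given this definition, create_pgd_entry x25, x0, x3, x5, x6 expands to:

    lsr     x5, x3, #PGDIR_SHIFT        // x3 holds the address of __turn_mmu_on; shift it right by PGDIR_SHIFT (30) bits
    and     x5, x5, #PTRS_PER_PGD - 1   // keep only VA bits <38:30>, the PGD index
    orr     x6, x0, #3                  // x0 holds the next-level table address; the low two bits (0b11) mark a valid table entry
    str     x6, [x25, x5, lsl #3]       // store the entry in the TTBR0 table (x25) at offset x5 << 3 (x5 * 8, since each entry is 8 bytes)

To make this easier to follow, see the figure below:

Figure 2: Composition of a 48-bit virtual address with 4K pages

Note: the tables named in the figure above are:

PGD: page global directory

PUD: folded into the PGD; not used by Linux in this configuration

PMD: page middle directory

PTE: page table

The Linux kernel uses only 39 bits of virtual address.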
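For the 39-bit, 4K-page case, the split of a virtual address into table indices can be sketched in C as below. The shift values (30, 21, 12) and the 512-entry table size are assumptions matching that configuration; split_va and struct va_parts are hypothetical names.

```c
#include <stdint.h>

/* Assumptions: 4K pages, 39-bit VA, 3 levels, 512 entries per table. */
#define PAGE_SHIFT   12
#define PMD_SHIFT    21
#define PGDIR_SHIFT  30
#define PTRS_PER_TBL 512

struct va_parts {
    unsigned pgd;     /* VA bits 38:30 */
    unsigned pmd;     /* VA bits 29:21 */
    unsigned pte;     /* VA bits 20:12 */
    unsigned offset;  /* VA bits 11:0  */
};

/* Split a virtual address into its table indices and page offset. */
struct va_parts split_va(uint64_t va)
{
    struct va_parts p;
    p.pgd    = (unsigned)((va >> PGDIR_SHIFT) & (PTRS_PER_TBL - 1));
    p.pmd    = (unsigned)((va >> PMD_SHIFT)   & (PTRS_PER_TBL - 1));
    p.pte    = (unsigned)((va >> PAGE_SHIFT)  & (PTRS_PER_TBL - 1));
    p.offset = (unsigned)(va & ((UINT64_C(1) << PAGE_SHIFT) - 1));
    return p;
}
```

For example, the address 0xffffffc000201234 splits into PGD index 256, PMD index 1, PTE index 1 and page offset 0x234.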

Figure 3: 64-bit page table entry format

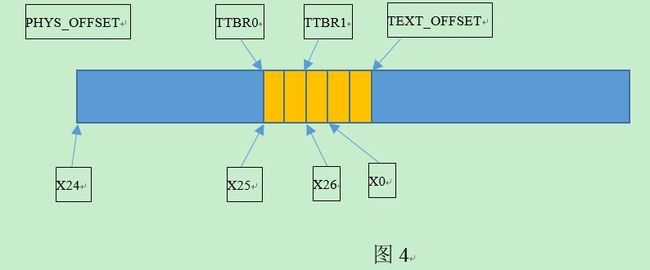

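Figure 4

Similarly, create_pgd_entry x26, x0, x5, x3, x6 expands to:

    lsr     x3, x5, #PGDIR_SHIFT        // x5 holds PAGE_OFFSET = 0xffffffc000000000; shift it right by PGDIR_SHIFT bits into x3
    and     x3, x3, #PTRS_PER_PGD - 1   // keep only VA bits <38:30>, the PGD index
    orr     x6, x0, #3                  // x0 points to the page after the one TTBR1 points to; set the low two bits (valid table entry) and put the result in x6
    str     x6, [x26, x3, lsl #3]       // store the entry in the TTBR1 table (x26) at offset x3 << 3

In other words, this fills in the entry at offset x3 * 8 (each entry is 8 bytes) in the page table that TTBR1 points to, with the value in x6 (the location x0 points to in Figure 4, plus the table type bits).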
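The effect of create_pgd_entry can be modelled in a few lines of C. This is a sketch rather than kernel code: it assumes PGDIR_SHIFT = 30 and PTRS_PER_PGD = 512 (4K pages, 39-bit addresses) and represents the PGD as a plain array.

```c
#include <stdint.h>

#define PGDIR_SHIFT  30   /* assumption: 4K pages, 39-bit VA */
#define PTRS_PER_PGD 512

/* C model of create_pgd_entry; returns the PGD index that was written. */
unsigned create_pgd_entry(uint64_t *pgd, uint64_t tbl, uint64_t virt)
{
    unsigned idx = (unsigned)((virt >> PGDIR_SHIFT) & (PTRS_PER_PGD - 1));

    pgd[idx] = tbl | 3;   /* bits[1:0] = 0b11: valid table descriptor */
    return idx;
}
```

For PAGE_OFFSET = 0xffffffc000000000 the index is 256, so the entry lands at byte offset 2048, halfway through the 4K PGD page.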

2. create_block_map

    /*
     * Macro to populate block entries in the page table for the start..end
     * virtual range (inclusive).
     *
     * Preserves:   tbl, flags
     * Corrupts:    phys, start, end, pstate
     */
    .macro  create_block_map, tbl, flags, phys, start, end, idmap=0
    lsr     \phys, \phys, #BLOCK_SHIFT
    .if     \idmap
    and     \start, \phys, #PTRS_PER_PTE - 1    // table index
    .else
    lsr     \start, \start, #BLOCK_SHIFT
    and     \start, \start, #PTRS_PER_PTE - 1   // table index
    .endif
    orr     \phys, \flags, \phys, lsl #BLOCK_SHIFT  // table entry
    .ifnc   \start,\end
    lsr     \end, \end, #BLOCK_SHIFT
    and     \end, \end, #PTRS_PER_PTE - 1       // table end index
    .endif
    9999:   str \phys, [\tbl, \start, lsl #3]   // store the entry
    .ifnc   \start,\end                         // .ifnc: assemble only if the two strings differ
    add     \start, \start, #1                  // next entry
    add     \phys, \phys, #BLOCK_SIZE           // next block
    cmp     \start, \end
    b.ls    9999b
    .endif
    .endm

Given this definition, create_block_map x0, x7, x3, x5, x5, idmap=1 expands to:

    lsr     x3, x3, #BLOCK_SHIFT
    and     x5, x3, #PTRS_PER_PTE - 1
    orr     x3, x7, x3, lsl #BLOCK_SHIFT
    9999:
    str     x3, [x0, x5, lsl #3]

Similarly, create_block_map x0, x7, x3, x5, x6 expands to:

    lsr     x3, x3, #BLOCK_SHIFT
    lsr     x5, x5, #BLOCK_SHIFT
    and     x5, x5, #PTRS_PER_PTE - 1       // table index
    orr     x3, x7, x3, lsl #BLOCK_SHIFT    // table entry
    lsr     x6, x6, #BLOCK_SHIFT
    and     x6, x6, #PTRS_PER_PTE - 1       // table end index
    9999:   str x3, [x0, x5, lsl #3]        // store the entry
    add     x5, x5, #1                      // next entry
    add     x3, x3, #BLOCK_SIZE             // next block
    cmp     x5, x6
    b.ls    9999b

The invocation create_block_map x0, x7, x3, x5, x6 creates all the mappings for the kernel image.
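The loop in create_block_map (the idmap=0 case) can be modelled in C as follows. This is a sketch under the assumption of 4K pages, where BLOCK_SHIFT is 21 (2MB blocks) and a table holds 512 entries; the table is represented as a plain array.

```c
#include <stdint.h>

#define BLOCK_SHIFT  21                           /* assumption: 2MB blocks (4K pages) */
#define BLOCK_SIZE   (UINT64_C(1) << BLOCK_SHIFT)
#define PTRS_PER_PTE 512

/* C model of create_block_map with idmap=0; end is inclusive.
 * Returns the number of entries written. */
unsigned create_block_map(uint64_t *tbl, uint64_t flags, uint64_t phys,
                          uint64_t start, uint64_t end)
{
    uint64_t entry = ((phys >> BLOCK_SHIFT) << BLOCK_SHIFT) | flags;
    unsigned i     = (unsigned)((start >> BLOCK_SHIFT) & (PTRS_PER_PTE - 1));
    unsigned last  = (unsigned)((end   >> BLOCK_SHIFT) & (PTRS_PER_PTE - 1));
    unsigned n = 0;

    do {
        tbl[i] = entry;        /* store the entry */
        i++;                   /* next entry */
        entry += BLOCK_SIZE;   /* next block */
        n++;
    } while (i <= last);       /* same condition as cmp + b.ls */
    return n;
}
```

Mapping a 6MB range that starts at PAGE_OFFSET therefore writes three consecutive 2MB block entries, each with the same flags and a physical address 2MB above the previous one.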

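3 Questions and answers

3.1 Before the TLB is enabled, how is the physical memory size communicated to the OS kernel?

The bootloader passes the start address and size of physical memory to the Linux kernel through the device tree (the .dts file). The size of physical memory must therefore be stated explicitly on the bootloader side, in the dts file. A memory declaration in the dts file looks like this:

    memory {
        device_type = "memory";
        reg = <0x00000000 0x80000000>;
    };

The reg property has the form <address size>.

The declaration above describes one memory region that starts at address 0x00000000 and is 2GB in size.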

3.2 How the PGD and PTE entries are filled in

During kernel initialization, map_mem is called to build page tables for all of memory and map it. The call chain is:

start_kernel() -> setup_arch() -> paging_init() -> map_mem() -> create_mapping()

The analysis below starts with map_mem().

3.2.1 map_mem()

arm64/mm/mmu.c, line 254

    static void __init map_mem(void)
    {
        struct memblock_region *reg;

        /* map memory block by block, covering every memory bank */
        for_each_memblock(memory, reg) {
            phys_addr_t start = reg->base;
            phys_addr_t end = start + reg->size;

            if (start >= end)   /* stop if end is not greater than start */
                break;

            create_mapping(start, __phys_to_virt(start), end - start);  /* create the mapping */
        }
    }

3.2.2 create_mapping()

arm64/mm/mmu.c, line 230

    /*
     * Create the page directory entries and any necessary page tables for the
     * mapping specified by 'md'.
     */
    static void __init create_mapping(phys_addr_t phys, unsigned long virt,
                                      phys_addr_t size)
    {
        unsigned long addr, length, end, next;
        pgd_t *pgd;

        if (virt < VMALLOC_START) {     /* sanity-check the virtual address */
            pr_warning("BUG: not creating mapping for 0x%016llx at 0x%016lx - outside kernel range\n",
                       phys, virt);
            return;
        }

        /* PAGE_MASK = ~(PAGE_SIZE - 1): mask off the in-page offset bits of the virtual address */
        addr = virt & PAGE_MASK;
        /* length to map, rounded up to the next page boundary */
        length = PAGE_ALIGN(size + (virt & ~PAGE_MASK));

        /* index of addr in init_mm->pgd, i.e. the matching entry in the kernel pgd */
        pgd = pgd_offset_k(addr);
        end = addr + length;            /* end address of the mapping */
        do {
            next = pgd_addr_end(addr, end);     /* start of the next PGD-sized range, or end */
            /* allocate and initialize this range: pgd entry, virtual start, virtual end, physical address */
            alloc_init_pud(pgd, addr, next, phys);
            phys += next - addr;        /* advance the physical address */
        } while (pgd++, addr = next, addr != end);
    }
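The address arithmetic in create_mapping (page alignment, then stepping one PGD entry at a time) can be tried out with the standalone sketch below. It assumes 4K pages and 1GB per PGD entry; count_pgd_steps is a hypothetical helper that only counts loop iterations, and the zero-length guard is an addition for safety, not part of the kernel loop.

```c
#include <stdint.h>

#define PAGE_SIZE     UINT64_C(4096)        /* assumption: 4K pages */
#define PAGE_MASK     (~(PAGE_SIZE - 1))
#define PAGE_ALIGN(x) (((x) + PAGE_SIZE - 1) & PAGE_MASK)
#define PGDIR_SIZE    (UINT64_C(1) << 30)   /* assumption: 1GB per PGD entry */
#define PGDIR_MASK    (~(PGDIR_SIZE - 1))

/* Next PGD boundary after addr, clamped to end (modelled on pgd_addr_end). */
uint64_t pgd_addr_end(uint64_t addr, uint64_t end)
{
    uint64_t boundary = (addr + PGDIR_SIZE) & PGDIR_MASK;

    return (boundary - 1 < end - 1) ? boundary : end;
}

/* Count how many PGD entries the do/while loop would visit. */
unsigned count_pgd_steps(uint64_t virt, uint64_t size)
{
    uint64_t addr = virt & PAGE_MASK;
    uint64_t length = PAGE_ALIGN(size + (virt & ~PAGE_MASK));
    uint64_t end = addr + length;
    unsigned steps = 0;

    if (length == 0)   /* guard added here: the loop assumes a non-empty range */
        return 0;
    do {
        addr = pgd_addr_end(addr, end);
        steps++;
    } while (addr != end);
    return steps;
}
```

A 2GB region starting at PAGE_OFFSET takes two iterations, and so does a single page that happens to straddle a 1GB boundary.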

3.2.3 alloc_init_pud()

arm64/mm/mmu.c, line 213

    static void __init alloc_init_pud(pgd_t *pgd, unsigned long addr,
                                      unsigned long end, unsigned long phys)
    {
        /* the kernel does not use the pud here, so it is folded into the pgd: pud == pgd */
        pud_t *pud = pud_offset(pgd, addr);
        unsigned long next;

        do {
            next = pud_addr_end(addr, end);
            alloc_init_pmd(pud, addr, next, phys);
            phys += next - addr;
        } while (pud++, addr = next, addr != end);
    }

3.2.4 alloc_init_pmd()

arm64/mm/mmu.c, line 187

    static void __init alloc_init_pmd(pud_t *pud, unsigned long addr,
                                      unsigned long end, phys_addr_t phys)
    {
        pmd_t *pmd;
        unsigned long next;

        /*
         * Check for initial section mappings in the pgd/pud and remove them.
         */
        if (pud_none(*pud) || pud_bad(*pud)) {
            pmd = early_alloc(PTRS_PER_PMD * sizeof(pmd_t));
            pud_populate(&init_mm, pud, pmd);
        }

        pmd = pmd_offset(pud, addr);
        do {
            next = pmd_addr_end(addr, end);
            /* try section mapping first */
            /*
             * If addr, next and phys are all section-aligned, map the range
             * directly as a section (this should hold in most cases);
             * otherwise the PTEs have to be filled in as well.
             * The section size depends on the page size: 2MB with 3-level
             * page tables, 512MB with 2-level page tables.
             */
            if (((addr | next | phys) & ~SECTION_MASK) == 0)
                /* store the physical address and the section attributes in the pmd */
                set_pmd(pmd, __pmd(phys | prot_sect_kernel));
            else
                alloc_init_pte(pmd, addr, next, __phys_to_pfn(phys));
            phys += next - addr;
        } while (pmd++, addr = next, addr != end);
    }
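The section-alignment test in alloc_init_pmd is a single mask operation. The sketch below isolates it, assuming 4K pages and therefore 2MB sections; can_use_section is a hypothetical name.

```c
#include <stdbool.h>
#include <stdint.h>

#define SECTION_SHIFT 21                              /* assumption: 4K pages, 2MB sections */
#define SECTION_SIZE  (UINT64_C(1) << SECTION_SHIFT)
#define SECTION_MASK  (~(SECTION_SIZE - 1))

/* True when addr, next and phys are all multiples of the section size,
 * i.e. when the range can be mapped with one section entry. */
bool can_use_section(uint64_t addr, uint64_t next, uint64_t phys)
{
    return ((addr | next | phys) & ~SECTION_MASK) == 0;
}
```

ORing the three values first means one misaligned input is enough to fail the test, which sends that range down the alloc_init_pte path.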

3.2.5 set_pmd()

arm64/include/asm, line 195

    static inline void set_pmd(pmd_t *pmdp, pmd_t pmd)
    {
        *pmdp = pmd;
        dsb();  /* data synchronization barrier: wait for the store to the entry to complete before continuing (a barrier, not a cache flush to RAM) */
    }

3.2.6 alloc_init_pte()

arm64/mm/mmu.c, line 169

    static void __init alloc_init_pte(pmd_t *pmd, unsigned long addr,
                                      unsigned long end, unsigned long pfn)
    {
        pte_t *pte;

        if (pmd_none(*pmd)) {
            pte = early_alloc(PTRS_PER_PTE * sizeof(pte_t));
            __pmd_populate(pmd, __pa(pte), PMD_TYPE_TABLE);
        }
        BUG_ON(pmd_bad(*pmd));

        pte = pte_offset_kernel(pmd, addr);
        do {
            set_pte(pte, pfn_pte(pfn, PAGE_KERNEL_EXEC));
            pfn++;
        } while (pte++, addr += PAGE_SIZE, addr != end);
    }

3.2.7 set_pte()

arm64/include/asm/pgtable.h, line 150

    static inline void set_pte(pte_t *ptep, pte_t pte)
    {
        *ptep = pte;
    }

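3.3 With three-level ARMv8 page tables, the full set of page tables (1GB in size) will not fit in memory; is part of it kept on disk?

Answer: an ARMv8 OS builds its page tables according to the amount of memory present. For example, with only 1GB of physical memory, 1GB = 2^30 = 2^18 * 4K (the page size), so 2^18 PTEs are needed. Each page table holds 512 PTEs, so 2^9 page tables are needed, for a total of 2^9 * 4K = 2MB of page tables (strictly speaking, the PGD and the PMD must be counted as well, at 4K each).

So 1GB of memory needs 2MB of page tables, and likewise:

2GB of memory: 4MB of page tables

100GB of memory: 200MB of page tables

512GB of memory: 1GB of page tables

Relative to the amount of memory, the page tables are still very small.

Note: on a 64-bit machine there is no such thing as high memory, because the address space is very large and the kernel's share of it is far more than 1GB. In AArch64 Linux the kernel space is 512GB, so the kernel can manage all memory directly.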
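The estimate above can be checked with a short calculation. The sketch assumes 4K pages and 8-byte entries (512 per table) and, like the estimate, ignores the PGD and PMD tables; pte_table_bytes is a hypothetical helper.

```c
#include <stdint.h>

#define PAGE_SIZE    UINT64_C(4096)   /* assumption: 4K pages */
#define PTRS_PER_PTE UINT64_C(512)    /* 8-byte entries in a 4K table */

/* Bytes of last-level (PTE) tables needed to map mem_bytes with 4K pages.
 * PGD and PMD tables are ignored, as in the estimate in the text. */
uint64_t pte_table_bytes(uint64_t mem_bytes)
{
    uint64_t ptes   = mem_bytes / PAGE_SIZE;                      /* one PTE per page */
    uint64_t tables = (ptes + PTRS_PER_PTE - 1) / PTRS_PER_PTE;   /* round up */

    return tables * PAGE_SIZE;                                    /* each table is one page */
}
```

The ratio is fixed at 8 bytes of PTE per 4096 bytes of memory, i.e. 1/512, which is why 512GB of memory needs 1GB of page tables.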