Dynamically Adding Nodes to a Hadoop Cluster

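1. Install and configure the node

For the detailed procedure, see "Hadoop Cluster Practice (1): Setting Up Hadoop (HDFS)" (《Hadoop集群实践 之 (1) Hadoop(HDFS)搭建》).

2. During configuration, update the following three files on every Hadoop server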

$ sudo vim /etc/hadoop/conf/slaves

$ sudo vim /etc/hosts

10.6.1.150 hadoop-master

10.6.1.151 hadoop-node-1

10.6.1.152 hadoop-node-2

10.6.1.153 hadoop-node-3
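Editing these files by hand on every server is easy to get wrong, for example by adding the same entry twice. The following is a minimal sketch of an idempotent helper, not part of the original procedure. The function name `add_line_once` is hypothetical, and the sketch assumes that the slaves file lists one worker hostname per line.

```shell
# Hypothetical helper (not from the original post): append LINE to FILE
# only if that exact line is not already present.
# Usage: add_line_once FILE LINE
add_line_once() {
    local file="$1" line="$2"
    touch "$file"
    # -q: quiet, -x: match the whole line, -F: treat LINE as a fixed string
    if ! grep -qxF -- "$line" "$file"; then
        # If the file does not end with a newline, add one first so the
        # new entry starts on its own line.
        if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
            printf '\n' >> "$file"
        fi
        printf '%s\n' "$line" >> "$file"
    fi
}

# Example (run as root on each Hadoop server):
#   add_line_once /etc/hosts "10.6.1.153 hadoop-node-3"
#   add_line_once /etc/hadoop/conf/slaves "hadoop-node-3"
```

Because the helper checks for the exact line before appending, it can be run repeatedly on every server without duplicating entries.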

$ sudo vim /etc/hadoop/conf/hdfs-site.xml

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<name>dfs.data.dir</name>

<value>/data/hdfs</value>

<name>dfs.replication</name>

<name>dfs.datanode.max.xcievers</name>

3. Start the datanode and tasktracker

dongguo@hadoop-node-3:~$ sudo /etc/init.d/hadoop-0.20-datanode start

dongguo@hadoop-node-3:~$ sudo /etc/init.d/hadoop-0.20-tasktracker start

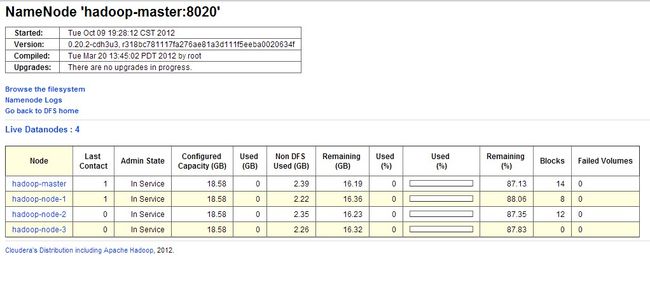

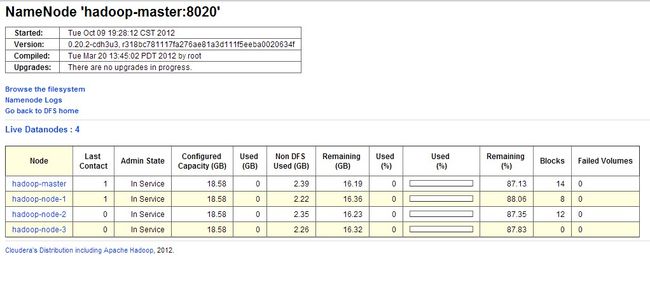

4. Check whether the new node is Live

Check through the web management interface:

http://10.6.1.150:50070/dfsnodelist.jsp?whatNodes=LIVE

hadoop-node-3 has been added to the Hadoop cluster dynamically.
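A similar check can be scripted from the command line. This is a minimal sketch under one assumption: that `hadoop dfsadmin -report` prints one `Name: host:port` line per DataNode, which is worth verifying against the output of your Hadoop version. The function names are hypothetical. The report may also list dead nodes, so the web page above remains the reference for which nodes are Live.

```shell
# list_datanodes: read `hadoop dfsadmin -report` output on stdin and print
# the address from every "Name: host:port" line (assumed report format).
list_datanodes() {
    awk '/^Name: / { print $2 }'
}

# node_in_report ADDRESS: succeed if ADDRESS (host:port) appears in the
# report read from stdin.
node_in_report() {
    local addr="$1"
    list_datanodes | grep -qxF -- "$addr"
}

# Example (on the master):
#   sudo -u hdfs hadoop dfsadmin -report | node_in_report 10.6.1.153:50010 \
#       && echo "hadoop-node-3 is known to the namenode"
```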

5. Apply the new replication factor dfs.replication

5.1 Check the current replication factor

dongguo@hadoop-master:~$ sudo -u hdfs hadoop fs -lsr /dongguo

-rw-r--r-- 2 hdfs supergroup 33 2012-10-07 22:02 /dongguo/hello.txt

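The second column of each result line is the replication factor. (Note: directory metadata is stored on the namenode and is not replicated, so a directory shows a dash "-" in this column.)

The file still has the previously configured replication factor of 2; Hadoop does not automatically adjust existing files to the new value.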
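Reading the second column by eye does not scale to a large tree. The sketch below pulls the replication factor out of `hadoop fs -lsr` output with awk. The function names are hypothetical, and the sketch assumes the eight-column layout shown above and paths without spaces.

```shell
# replication_of_files: read `hadoop fs -lsr` output on stdin and print
# "<replication> <path>" for regular files. Directory lines (permissions
# starting with "d") are skipped because their second column is "-".
replication_of_files() {
    awk '$1 !~ /^d/ && NF >= 8 { print $2, $NF }'
}

# files_not_at TARGET: print the paths of files whose replication factor
# differs from TARGET.
files_not_at() {
    awk -v want="$1" '$1 !~ /^d/ && NF >= 8 && $2 != want { print $NF }'
}

# Example (on the master):
#   sudo -u hdfs hadoop fs -lsr /dongguo | replication_of_files
#   sudo -u hdfs hadoop fs -lsr / | files_not_at 3
```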

dongguo@hadoop-master:~$ sudo -u hdfs hadoop fsck /

12/10/10 21:18:32 INFO security.UserGroupInformation: JAAS Configuration already setup for Hadoop, not re-installing.

FSCK started by hdfs (auth:SIMPLE) from /10.6.1.150 for path / at Wed Oct 10 21:18:33 CST 2012

.................Status: HEALTHY

Total blocks (validated): 17 (avg. block size 458 B)

Minimally replicated blocks: 17 (100.0 %)

Over-replicated blocks: 0 (0.0 %)

Under-replicated blocks: 0 (0.0 %)

Mis-replicated blocks: 0 (0.0 %)

Default replication factor: 2

Average block replication: 2.0

Missing replicas: 0 (0.0 %)

Number of data-nodes: 4

FSCK ended at Wed Oct 10 21:18:33 CST 2012 in 48 milliseconds

The filesystem under path '/' is HEALTHY

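hadoop fsck / also shows that Average block replication is still the old value of 2. This value can be changed manually at runtime.

Default replication factor, by contrast, can only be changed by restarting the whole Hadoop cluster. Since it is the Average block replication value that actually affects the system, changing the default is not strictly necessary.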
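To compare these two values before and after a change without scanning the whole report, a single field can be extracted from the fsck summary. This is a minimal sketch: `fsck_field` is a hypothetical helper, and it assumes the summary lines have the `Label: value` shape shown in the output above, possibly with leading whitespace.

```shell
# fsck_field LABEL: read `hadoop fsck` output on stdin and print the value
# that follows "LABEL:" on the first line starting with that label.
fsck_field() {
    local label="$1"
    awk -v label="$label" '
        {
            line = $0
            sub(/^[ \t]+/, "", line)              # drop leading whitespace
            if (index(line, label ":") == 1) {    # line starts with "LABEL:"
                value = substr(line, length(label) + 2)
                sub(/^[ \t]+/, "", value)         # drop whitespace after ":"
                print value
                exit
            }
        }'
}

# Example (on the master):
#   sudo -u hdfs hadoop fsck / | fsck_field "Average block replication"
#   sudo -u hdfs hadoop fsck / | fsck_field "Default replication factor"
```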

5.2 Change the replication factor of the HDFS files: set it to 3 for every file under /

dongguo@hadoop-master:~$ sudo -u hdfs hadoop dfs -setrep -w 3 -R /

12/10/10 21:22:35 INFO security.UserGroupInformation: JAAS Configuration already setup for Hadoop, not re-installing.

Replication 3 set: hdfs://hadoop-master/dongguo/hello.txt

Replication 3 set: hdfs://hadoop-master/hbase/-ROOT-/70236052/.oldlogs/hlog.1349695889266

Replication 3 set: hdfs://hadoop-master/hbase/-ROOT-/70236052/.regioninfo

Replication 3 set: hdfs://hadoop-master/hbase/-ROOT-/70236052/info/7670471048629837399

Replication 3 set: hdfs://hadoop-master/hbase/.META./1028785192/.oldlogs/hlog.1349695889753

Replication 3 set: hdfs://hadoop-master/hbase/.META./1028785192/.regioninfo

Replication 3 set: hdfs://hadoop-master/hbase/.META./1028785192/info/7438047560768966146

Waiting for hdfs://hadoop-master/dongguo/hello.txt .... done

Waiting for hdfs://hadoop-master/hbase/-ROOT-/70236052/.oldlogs/hlog.1349695889266... done

Waiting for hdfs://hadoop-master/hbase/-ROOT-/70236052/.regioninfo ... done

Waiting for hdfs://hadoop-master/hbase/-ROOT-/70236052/info/7670471048629837399 ...done

Waiting for hdfs://hadoop-master/hbase/.META./1028785192/.oldlogs/hlog.1349695889753... done

Waiting for hdfs://hadoop-master/hbase/.META./1028785192/.regioninfo ... done

Waiting for hdfs://hadoop-master/hbase/.META./1028785192/info/7438047560768966146 ...done

Hadoop has updated the replication factor of every file.

5.3 Check the replication factor again

dongguo@hadoop-master:~$ sudo -u hdfs hadoop fsck /

12/10/10 21:23:26 INFO security.UserGroupInformation: JAAS Configuration already setup for Hadoop, not re-installing.

FSCK started by hdfs (auth:SIMPLE) from /10.6.1.150 for path / at Wed Oct 10 21:23:27 CST 2012

.................Status: HEALTHY

Total blocks (validated): 17 (avg. block size 458 B)

Minimally replicated blocks: 17 (100.0 %)

Over-replicated blocks: 0 (0.0 %)

Under-replicated blocks: 0 (0.0 %)

Mis-replicated blocks: 0 (0.0 %)

Default replication factor: 2

Average block replication: 3.0

Missing replicas: 0 (0.0 %)

Number of data-nodes: 4

FSCK ended at Wed Oct 10 21:23:27 CST 2012 in 11 milliseconds

The filesystem under path '/' is HEALTHY

Average block replication now shows the new replication factor, 3.

5.4 Test whether newly created files pick up the new replication factor

dongguo@hadoop-master:~$ sudo -u hdfs hadoop fs -copyFromLocal mysql-connector-java-5.1.22.tar.gz /dongguo

dongguo@hadoop-master:~$ sudo -u hdfs hadoop fs -lsr /dongguo

-rw-r--r-- 3 hdfs supergroup 33 2012-10-07 22:02 /dongguo/hello.txt

-rw-r--r-- 3 hdfs supergroup 4028047 2012-10-10 21:28 /dongguo/mysql-connector-java-5.1.22.tar.gz

The newly uploaded file has a replication factor of 3.

6. Rebalance the files in HDFS

dongguo@hadoop-master:~$ sudo -u hdfs hadoop balancer

12/10/10 21:30:25 INFO net.NetworkTopology: Adding a new node: /default-rack/10.6.1.153:50010

12/10/10 21:30:25 INFO net.NetworkTopology: Adding a new node: /default-rack/10.6.1.150:50010

12/10/10 21:30:25 INFO net.NetworkTopology: Adding a new node: /default-rack/10.6.1.152:50010

12/10/10 21:30:25 INFO net.NetworkTopology: Adding a new node: /default-rack/10.6.1.151:50010

12/10/10 21:30:25 INFO balancer.Balancer: 0 over utilized nodes:

12/10/10 21:30:25 INFO balancer.Balancer: 0 under utilized nodes:

The cluster is balanced. Exiting...

Balancing took 1.006 seconds

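This completes the dynamic addition of a node to the Hadoop cluster.

Next, I dynamically remove a node from the cluster.

Dynamically Removing Nodes from a Hadoop Cluster

1. Use the newly added node

Generate as much test data in HDFS as possible and run some jobs through Hive, so that the new node also takes part in parallel MapReduce computation.

The goal is to approximate a production environment as closely as possible, because a production cluster will certainly have stored a lot of data and run many jobs before a node is removed.

2. Modify core-site.xml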

dongguo@hadoop-master:~$ sudo vim /etc/hadoop/conf/core-site.xml

<property>

<name>dfs.hosts.exclude</name>

<value>/etc/hadoop/conf/exclude</value>

<description>Names a file that contains a list of hosts that are not permitted to connect to the namenode. The full pathname of the file must be specified. If the value is empty, no hosts are excluded.</description>

</property>

3. Modify hdfs-site.xml

dongguo@hadoop-master:~$ sudo vim /etc/hadoop/conf/hdfs-site.xml

<property>

<name>dfs.hosts.exclude</name>

<value>/etc/hadoop/conf/exclude</value>

<description>Names a file that contains a list of hosts that are not permitted to connect to the namenode. The full pathname of the file must be specified. If the value is empty, no hosts are excluded.</description>

</property>

4. Create /etc/hadoop/conf/exclude

dongguo@hadoop-master:~$ sudo vim /etc/hadoop/conf/exclude

Add the nodes to be removed to this file, one per line. Here I only list the newly added hadoop-node-3, for testing.
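When the exclude list is generated by a script rather than typed in vim, it helps to replace the file in one step so the namenode never reads a half-written list. This is a minimal sketch: `write_exclude_file` is a hypothetical helper, and the `chmod 644` assumes the namenode user only needs read access to the file.

```shell
# write_exclude_file FILE HOST...: write the given hosts to FILE, one per
# line, by filling a temporary file next to FILE and renaming it into place.
# With no hosts, FILE ends up empty.
write_exclude_file() {
    local file="$1"
    shift
    local tmp
    tmp="$(mktemp "${file}.XXXXXX")" || return 1
    if [ "$#" -gt 0 ]; then
        printf '%s\n' "$@" > "$tmp"
    fi
    # mktemp creates the file readable by its owner only; make it
    # world-readable so the namenode process can read it (assumption).
    chmod 644 "$tmp"
    mv -f -- "$tmp" "$file"
}

# Example (run as root on the master):
#   write_exclude_file /etc/hadoop/conf/exclude hadoop-node-3
```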

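5. Lower the replication factor

My test environment currently has 4 nodes and a replication factor of 3. Removing one node would make the replication factor equal to the number of nodes, which, as far as I understand, Hadoop does not allow.

The replication factor usually does not need to be high; roughly one third of the total number of servers is enough. Hadoop's default is 3.

Next, we lower the replication factor from 3 to 2.

5.1 Update the following configuration on every Hadoop server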

$ sudo vim /etc/hadoop/conf/hdfs-site.xml

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<name>dfs.data.dir</name>

<value>/data/hdfs</value>

<name>dfs.replication</name>

<name>dfs.datanode.max.xcievers</name>

5.2 Change the replication factor of the HDFS files: set it to 2 for every file under /

dongguo@hadoop-master:~$ sudo -u hdfs hadoop dfs -setrep -w 2 -R /

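An open question:

When lowering the replication factor, the "Replication set" step finished quickly, but the "Waiting for" step did not complete even after a long time.

In the end I had to interrupt it with Ctrl+C. My guess is that HDFS was working on the data files during this phase, deleting one replica's worth of data.

We should therefore plan the value of dfs.replication carefully and avoid situations where it has to be lowered.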
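One possible way to avoid sitting in the "Waiting for" phase is to run `-setrep` without `-w`, which returns once the new factor is recorded, and then poll fsck until no over-replicated blocks remain. This is a sketch under assumptions, not a verified fix for the behaviour described above: it assumes the "Over-replicated blocks" count in the fsck summary drops to 0 once the excess replicas are gone, and all function names are hypothetical.

```shell
# over_replicated_count: read `hadoop fsck` output on stdin and print the
# number that follows "Over-replicated blocks:".
over_replicated_count() {
    awk -F: '/Over-replicated blocks/ { split($2, f, " "); print f[1]; exit }'
}

# wait_until_zero INTERVAL TRIES CMD...: run CMD up to TRIES times, sleeping
# INTERVAL seconds after each failed attempt. Succeeds as soon as CMD prints
# exactly "0", fails if it never does.
wait_until_zero() {
    local interval="$1" tries="$2"
    shift 2
    local i=0 value
    while [ "$i" -lt "$tries" ]; do
        value="$("$@")"
        if [ "$value" = "0" ]; then
            return 0
        fi
        sleep "$interval"
        i=$((i + 1))
    done
    return 1
}

# check_over_replicated: hypothetical wrapper around the real fsck call.
check_over_replicated() {
    sudo -u hdfs hadoop fsck / | over_replicated_count
}

# Example (on the master): set the factor without waiting, then poll every
# 30 seconds, at most 20 times.
#   sudo -u hdfs hadoop fs -setrep -R 2 /
#   wait_until_zero 30 20 check_over_replicated && echo "no over-replicated blocks left"
```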

6. Refresh the configuration dynamically

dongguo@hadoop-master:~$ sudo -u hdfs hadoop dfsadmin -refreshNodes

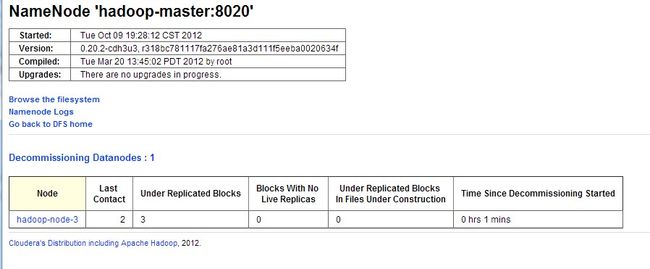

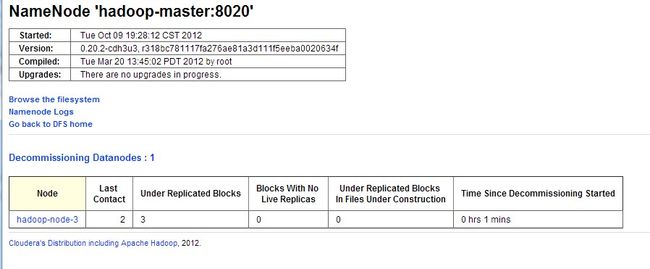

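7. Check the processing status of the node

Check through the web management interface:

Decommissioning

http://10.6.1.150:50070/dfsnodelist.jsp?whatNodes=DECOMMISSIONING

Dead (already offline)

http://10.6.1.150:50070/dfsnodelist.jsp?whatNodes=DEAD

The node has gone through decommissioning and is now offline.

Note:

Stop all Hadoop jobs before removing a node. Otherwise jobs keep writing data to the node being removed, and the decommission process may never finish.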
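The decommission state can also be read from the command line instead of the web pages. The sketch below assumes that `hadoop dfsadmin -report` prints a `Decommission Status : ...` line after each DataNode's `Name: host:port` line; check this against your version's output before relying on it. `decommission_status` is a hypothetical helper.

```shell
# decommission_status ADDRESS: read `hadoop dfsadmin -report` output on
# stdin and print the "Decommission Status" value of the DataNode whose
# "Name:" line equals ADDRESS (host:port). Prints nothing if not found.
decommission_status() {
    awk -v addr="$1" '
        /^Name: / { current = $2 }                 # remember the current node
        /^Decommission Status/ {
            if (current == addr) {
                sub(/^Decommission Status[ \t]*:[ \t]*/, "")
                print                              # only the status text is left
                exit
            }
        }'
}

# Example (on the master):
#   sudo -u hdfs hadoop dfsadmin -report | decommission_status 10.6.1.153:50010
```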

8. Check the process status

Checking the process status now shows that the datanode process has been stopped automatically:

dongguo@hadoop-node-3:~$ sudo /etc/init.d/hadoop-0.20-datanode status

hadoop-0.20-datanode is not running.

The tasktracker process is still running, however, and has to be stopped manually:

dongguo@hadoop-node-3:~$ sudo /etc/init.d/hadoop-0.20-tasktracker status

hadoop-0.20-tasktracker is running

dongguo@hadoop-node-3:~$ sudo /etc/init.d/hadoop-0.20-tasktracker stop

Stopping Hadoop tasktracker daemon: stopping tasktracker

hadoop-0.20-tasktracker.

At this point the datanode cannot be started again, even manually; the log shows an UnregisteredDatanodeException error.

dongguo@hadoop-node-3:~$ sudo /etc/init.d/hadoop-0.20-datanode start

Starting Hadoop datanode daemon: starting datanode, logging to /usr/lib/hadoop-0.20/logs/hadoop-hadoop-datanode-hadoop-node-3.out

ERROR. Could not start Hadoop datanode daemon

dongguo@hadoop-node-3:~$ tailf /var/log/hadoop/hadoop-hadoop-datanode-hadoop-node-3.log

2012-10-11 19:33:22,084 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: org.apache.hadoop.ipc.RemoteException: org.apache.hadoop.hdfs.protocol.UnregisteredDatanodeException: Data node hadoop-node-3:50010 is attempting to report storage ID DS-500645823-10.6.1.153-50010-1349941031723. Node 10.6.1.153:50010 is expected to serve this storage.

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getDatanode(FSNamesystem.java:4547)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.verifyNodeRegistration(FSNamesystem.java:4512)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.registerDatanode(FSNamesystem.java:2355)

at org.apache.hadoop.hdfs.server.namenode.NameNode.register(NameNode.java:932)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:39)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:557)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1434)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:1430)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1157)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:1428)

at org.apache.hadoop.ipc.Client.call(Client.java:1107)

at org.apache.hadoop.ipc.RPC$Invoker.invoke(RPC.java:226)

at $Proxy4.register(Unknown Source)

at org.apache.hadoop.hdfs.server.datanode.DataNode.register(DataNode.java:717)

at org.apache.hadoop.hdfs.server.datanode.DataNode.runDatanodeDaemon(DataNode.java:1519)

at org.apache.hadoop.hdfs.server.datanode.DataNode.createDataNode(DataNode.java:1586)

at org.apache.hadoop.hdfs.server.datanode.DataNode.secureMain(DataNode.java:1711)

at org.apache.hadoop.hdfs.server.datanode.DataNode.main(DataNode.java:1728)

2012-10-11 19:33:22,097 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down DataNode at hadoop-node-3/10.6.1.153

************************************************************/

This completes the dynamic removal of a node from the Hadoop cluster.