Python: character encoding issues, chardet, codecs

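1. The chardet package makes it easy to detect the character encoding of raw data, whether it comes from a file, a URL, an XML document, or elsewhere.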

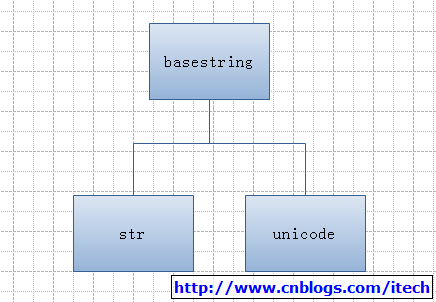
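chardet's answer is a statistical guess, reported together with a confidence value. When the set of possible encodings is small and known in advance, a cruder stdlib-only alternative (trial decoding, which is not how chardet works internally) is to try each candidate in turn. A minimal sketch; `guess_encoding` and its default candidate list are hypothetical choices for illustration:

```python
def guess_encoding(data, candidates=("ascii", "utf-8", "gb2312")):
    """Return the first candidate encoding that decodes data without error.

    This is trial decoding, not chardet: it only proves that a candidate
    *can* decode the bytes, not that it is the encoding the author used.
    """
    for name in candidates:
        try:
            data.decode(name)
        except UnicodeDecodeError:
            continue  # this candidate rejects the bytes; try the next one
        return name
    return None  # no candidate could decode the data


print(guess_encoding(b"hello"))
print(guess_encoding(u"\u4e2d\u6587".encode("utf-8")))
print(guess_encoding(u"\u4e2d\u6587".encode("gb2312")))
```

Order matters here: ASCII is a subset of UTF-8, and many byte strings are valid in more than one encoding, so the candidate list should run from the strictest encoding to the most permissive.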

2. How strings are structured in Python 2:

The Python documentation for the built-in functions basestring, str, and unicode reads as follows:

basestring()

This abstract type is the superclass for str and unicode. It cannot be called or instantiated, but it can be used to test whether an object is an instance of str or unicode. isinstance(obj, basestring) is equivalent to isinstance(obj, (str, unicode)).
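`basestring` exists only in Python 2; Python 3 removed it, because `str` is the only text type there. A small sketch of a common compatibility idiom, assuming you want one text check that works on both versions (`string_types` and `is_text` are names chosen for this example):

```python
# basestring is defined only in Python 2 (superclass of str and unicode)
try:
    string_types = basestring  # Python 2: matches both str and unicode
except NameError:
    string_types = str  # Python 3: str is the only text type


def is_text(obj):
    """Return True if obj is a text string on the running Python version."""
    return isinstance(obj, string_types)


print(is_text(u"abc"))
print(is_text(42))
```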

str([object])

Return a string containing a nicely printable representation of an object. For strings, this returns the string itself. The difference with repr(object) is that str(object) does not always attempt to return a string that is acceptable to eval(); its goal is to return a printable string. If no argument is given, returns the empty string, ''.

unicode([object[, encoding[, errors]]])

Return the Unicode string version of object using one of the following modes:

If encoding and/or errors are given, unicode() will decode the object which can either be an 8-bit string or a character buffer using the codec for encoding. The encoding parameter is a string giving the name of an encoding; if the encoding is not known, LookupError is raised. Error handling is done according to errors; this specifies the treatment of characters which are invalid in the input encoding. If errors is 'strict' (the default), a ValueError is raised on errors, while a value of 'ignore' causes errors to be silently ignored, and a value of 'replace' causes the official Unicode replacement character, U+FFFD, to be used to replace input characters which cannot be decoded. See also the codecs module.

If no optional parameters are given, unicode() will mimic the behaviour of str() except that it returns Unicode strings instead of 8-bit strings. More precisely, if object is a Unicode string or subclass it will return that Unicode string without any additional decoding applied.

For objects which provide a __unicode__() method, it will call this method without arguments to create a Unicode string. For all other objects, the 8-bit string version or representation is requested and then converted to a Unicode string using the codec for the default encoding in 'strict' mode.
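The error-handling modes described above can be tried out directly. In Python 2, `unicode(raw, "utf-8", errors)` behaves like `raw.decode("utf-8", errors)`; this sketch uses the method form so it also runs on Python 3, and the sample bytes are made up for illustration:

```python
raw = b"abc\xff"  # 0xFF can never appear in valid UTF-8

# errors='strict' (the default): invalid input raises an exception
try:
    raw.decode("utf-8")
    strict_failed = False
except ValueError:  # UnicodeDecodeError is a subclass of ValueError
    strict_failed = True

ignored = raw.decode("utf-8", "ignore")    # the invalid byte is silently dropped
replaced = raw.decode("utf-8", "replace")  # the invalid byte becomes U+FFFD

# An unknown encoding name raises LookupError
try:
    raw.decode("no-such-encoding")
    lookup_failed = False
except LookupError:
    lookup_failed = True

print(strict_failed, ignored == u"abc", replaced == u"abc\ufffd", lookup_failed)
```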

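From the description above, converting between character encodings in Python roughly works like this: first decode the bytes from the source encoding into Python's internal unicode string, then use the codec for the target encoding to encode that unicode string into the new encoding.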
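The two-step flow can be written as one small function. A minimal sketch; `transcode` is a hypothetical helper name, and it uses `bytes.decode()`/`unicode.encode()` so it runs on Python 3 (in Python 2 it is the same pattern as `unicode(src_1, "utf-16").encode("GB2312")`):

```python
def transcode(data, src_encoding, dst_encoding, errors="strict"):
    """Re-encode a byte string: decode to Unicode, then encode again."""
    text = data.decode(src_encoding)          # step 1: source bytes -> Unicode
    return text.encode(dst_encoding, errors)  # step 2: Unicode -> target bytes


utf16_bytes = u"\u4e2d\u6587".encode("utf-16")  # UTF-16 input, BOM included
gb_bytes = transcode(utf16_bytes, "utf-16", "gb2312")
print(repr(gb_bytes))
```

Going through Unicode means any pair of supported codecs can be combined without a dedicated converter for each pair.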

3. API reference for the codecs module: http://docs.python.org/library/codecs.html
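The two codecs calls used in the example code are `codecs.lookup()` and `codecs.open()`. A short sketch of what they return; the scratch file name is hypothetical and is created in a temporary directory:

```python
import codecs
import os
import tempfile

# codecs.lookup() returns a CodecInfo object for the named encoding
gb2312 = codecs.lookup("GB2312")

# CodecInfo.encode() returns a tuple: (encoded bytes, characters consumed)
encoded, consumed = gb2312.encode(u"\u4e2d\u6587")  # two CJK characters
print(repr(encoded), consumed)

# codecs.open() decodes while reading, so read() yields Unicode text directly
tmp_dir = tempfile.mkdtemp()
path = os.path.join(tmp_dir, "demo_utf16.txt")  # hypothetical scratch file
with codecs.open(path, "w", "utf-16") as f:
    f.write(u"\u4e2d\u6587")
with codecs.open(path, "r", "utf-16") as f:
    text = f.read()

# Remove the scratch file and directory
os.remove(path)
os.rmdir(tmp_dir)
```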

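4. The BOM (byte order mark) problem with UTF-8 encoded Chinese text: http://www.kgblog.net/2009/07/22/utf-8-bom-encoding.html . A UTF-8 file may start with a BOM (the bytes EF BB BF), which decodes to the character U+FEFF; re-encoding that text, for example to GB2312, can then fail. The code below shows one workaround.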
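Besides ignoring errors, Python ships a `utf-8-sig` codec that strips a leading BOM when decoding. A sketch comparing it with the plain `utf-8` codec on made-up data; unlike `'ignore'`, it removes only the BOM, so a genuinely unencodable character would still raise an error instead of disappearing silently:

```python
import codecs

# UTF-8 bytes preceded by a BOM (codecs.BOM_UTF8 is b"\xef\xbb\xbf")
data = codecs.BOM_UTF8 + u"\u4e2d\u6587".encode("utf-8")

plain = data.decode("utf-8")         # the BOM survives as U+FEFF
stripped = data.decode("utf-8-sig")  # the BOM is removed

# U+FEFF has no GB2312 mapping, so strict encoding of `plain` fails
try:
    plain.encode("gb2312")
    bom_breaks_gb2312 = False
except UnicodeEncodeError:
    bom_breaks_gb2312 = True

gb_bytes = stripped.encode("gb2312")  # succeeds once the BOM is gone
print(bom_breaks_gb2312, repr(gb_bytes))
```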

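5. Example code: it needs four input files, encoded in UTF-16, UTF-8, GB2312, and ASCII respectively (src_1.txt to src_4.txt). It demonstrates both detecting and converting character encodings.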

```python
# -*- coding: utf-8 -*-
# Python 2 code. Requires the chardet package and four input files in the
# current directory: src_1.txt (UTF-16), src_2.txt (UTF-8),
# src_3.txt (GB2312) and src_4.txt (ASCII).
import codecs

import chardet


def read_bytes(path):
    # Read in binary mode so chardet sees the raw bytes; text mode on
    # Windows would translate line endings and corrupt UTF-16 data
    with open(path, 'rb') as f:
        return f.read()


def report(label, data):
    # Print chardet's guess (encoding name and confidence) for a byte string
    guess = chardet.detect(data)
    print "%s: %s with confidence: %s" % (label, guess["encoding"], guess["confidence"])


def test():
    # import urllib
    # rawdata = urllib.urlopen('http://172.16.120.166/media_resource/').read()
    # print chardet.detect(rawdata)

    ##########################################################################
    # Part 1: chardet plus the built-in unicode() and str.encode()
    ##########################################################################

    src_1 = read_bytes("src_1.txt")
    report("src_1", src_1)                     # UTF-16 file
    report("src_2", read_bytes("src_2.txt"))  # UTF-8 file
    report("src_3", read_bytes("src_3.txt"))  # GB2312 file
    report("src_4", read_bytes("src_4.txt"))  # ASCII file

    # Convert UTF-16 to GB2312: decode into a Python unicode string first,
    # then encode that string as GB2312
    result = unicode(src_1, "utf-16").encode("GB2312")
    with open("rst_1.txt", 'wb') as result_file:  # write to rst_1.txt
        result_file.write(result)
    report("rst_1", read_bytes("rst_1.txt"))  # check that the conversion worked

    ##########################################################################
    # Part 2: the codecs module
    ##########################################################################

    look_gb2312 = codecs.lookup("GB2312")  # look up the GB2312 codec

    # Convert UTF-16 to GB2312. codecs.open() decodes while reading,
    # so read() returns a Python unicode string directly
    file_utf16 = codecs.open("src_1.txt", 'r', "UTF-16")
    str_unicode = file_utf16.read()
    file_utf16.close()
    # encode() returns a tuple: (encoded bytes, number of characters consumed)
    str_gb2312 = look_gb2312.encode(str_unicode)
    with open("rst_2.txt", 'wb') as result_file:  # write to rst_2.txt
        result_file.write(str_gb2312[0])
    report("rst_2", read_bytes("rst_2.txt"))  # check that the conversion worked

    # Convert UTF-8 to GB2312
    file_utf8 = codecs.open("src_2.txt", 'r', "UTF-8")
    str_unicode = file_utf8.read()
    file_utf8.close()
    # If the file starts with a BOM, the "UTF-8" codec keeps it as U+FEFF,
    # which GB2312 cannot encode; 'ignore' drops it (along with any other
    # character GB2312 cannot represent)
    str_gb2312 = look_gb2312.encode(str_unicode, 'ignore')
    with open("rst_3.txt", 'wb') as result_file:  # write to rst_3.txt
        result_file.write(str_gb2312[0])
    report("rst_3", read_bytes("rst_3.txt"))  # check that the conversion worked


if __name__ == '__main__':
    test()
```