Veritas Linux Cluster

Get Red Hat Enterprise Linux 3.0 from:

Your local Red Hat rep.

Get Veritas’ VCS and VM products from:

ftp://ftp.veritas.com/pub/products/fst_ha.lxrt2.2.redhatlinux.tar.gz

ftp://ftp.veritas.com/pub/products/fst_ha.lxrt2.2MP1.redhatlinux.tar.gz

You will need a temporary key to complete this install. Please contact your local Veritas account team or email me at [email protected]

Install RedHat Enterprise Linux 3.0:

Put CD1 in your CD drive and reboot.

Make sure you select the smb-server packages during the install. You need that package to run Samba for Windows file-sharing.

Follow the install for your hardware and set up the boot disk to your liking. Be sure to leave some extra room on the disk (at least an extra 2-5 GB) if you plan to encapsulate the boot volume.

Pay attention to how you set up the Ethernet cards. In Linux your cards will most likely be eth0, eth1, eth2. In my case I had two on-board Ethernet ports and one PCI-based Ethernet card. Most Linux installs will recognize the on-board NIC ports first and then any PCI slots. During the install, I configured the public interface as eth2 and left the on-board cards (eth0 and eth1) for the heartbeat network. Keep that in mind for the VCS install later.

Finally, you should try to configure X for your hardware. This will come in handy later for running the GUIs locally. Of course, you can always connect remotely from another workstation.

After both systems have rebooted, add the other node's hostname to the /etc/hosts file on both machines. linuxnode1 will look like this, and linuxnode2 should be the mirror image:

[root@linuxnode1]# cat /etc/hosts

127.0.0.1 linuxnode1 localhost.localdomain localhost

192.168.1.113 linuxnode2
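A quick way to confirm the entry is in place on each node is a small check like the one below. This is only a sketch: the function name has_host_entry is made up for illustration, and it simply looks for the name in the hostname and alias columns of a hosts-format file.

```shell
#!/bin/sh
# has_host_entry FILE NAME (hypothetical helper, not part of any package)
# Succeeds if NAME appears as a hostname or alias in a hosts-format FILE.
has_host_entry() {
    awk -v name="$2" '
        {
            sub(/#.*/, "")                    # drop comments
            for (i = 2; i <= NF; i++)         # field 1 is the address
                if ($i == name) found = 1
        }
        END { exit found ? 0 : 1 }
    ' "$1"
}
```

On linuxnode1 you would run something like: has_host_entry /etc/hosts linuxnode2 || echo "linuxnode2 is missing from /etc/hosts"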

Install VM:

Time to install the RPMs. Order is important.

cd to the rpms directory on the fst_ha.lxrt2.2 CD and run this command:

rpm -Uvh VRTSvlic-3.00-008.i686.rpm VRTSvxvm-3.2-update4_RH.i686.rpm VRTSvmdoc-3.2-update4.i686.rpm VRTSvmman-3.2-update4.i686.rpm VRTSvxfs-3.4.3-RH.i686.rpm VRTSfsdoc-3.4.3-GA.i686.rpm VRTSob-3.2.517-0.i686.rpm VRTSobgui-3.2.517-0.i686.rpm VRTSvmpro-3.2-update4.i686.rpm VRTSfspro-3.4.3-RH.i686.rpm

The above should all be on one line.

cd to the /redhat/foundation_suite/rpms on the fst_ha.lxrt2.2MP1 CD and run this command:

rpm -Uvh VRTSvlic-3.00-009.i686.rpm VRTSvxvm-3.2-update5_RH3.i686.rpm VRTSvmdoc-3.2-update5.i686.rpm VRTSvmman-3.2-update5.i686.rpm VRTSvxfs-3.4.4-RHEL3.i686.rpm VRTSfsdoc-3.4.4-GA.i686.rpm VRTSob-3.2.519-0.i686.rpm VRTSobgui-3.2.519-0.i686.rpm

The above should be on one line.

In theory you should be able to just run the second set of RPMs (adding the vmpro and fspro from the first set of RPMs) but I did it this way.

Reboot and ignore the kernel warning message about a tainted kernel and the cp module errors. Run vxinstall and grab any disks attached to the server during the vxinstall process.

Installing VCS:

Fix SSH for VCS Install (from the install guide, fixed to match my environment):

In the following procedure, it is assumed that VCS is installed on linuxnode1 and linuxnode2 from the machine linuxnode1. Both machines reside on the same public LAN.

Note: Before taking any of the following steps, make sure the /root/.ssh/ directory is present on all the cluster nodes. If that directory is missing on any node in the cluster, create it by executing the mkdir /root/.ssh/ command.
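If you want something you can run blindly on every node, a sketch like this creates the directory only when it is missing and tightens its permissions. The function name ensure_ssh_dir is my own invention; mode 700 is the conventional setting, and sshd with StrictModes enabled will ignore keys kept under a group- or world-writable directory.

```shell
#!/bin/sh
# ensure_ssh_dir [DIR] (hypothetical helper)
# Create DIR (default /root/.ssh) if it is missing and restrict it to its owner.
ensure_ssh_dir() {
    dir="${1:-/root/.ssh}"
    mkdir -p "$dir" && chmod 700 "$dir"
}
```

Run ensure_ssh_dir with no arguments as root on each node; it is safe to run more than once.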

1. Log in as root on the linuxnode1 system from which you want to install VCS.

2. Generate a DSA key pair on this system by entering the following command:

# ssh-keygen -t dsa

You will see system output that resembles the following:

Generating public/private dsa key pair.

Enter file in which to save the key (/root/.ssh/id_dsa):

3. Press Enter to accept the default location of ~/.ssh/id_dsa.

You will see system output that resembles the following:

Enter passphrase (empty for no passphrase):

4. Enter a passphrase and press Enter.

Note: It is important to enter the passphrase. If no passphrase is entered here, you will see an error message later when you try to configure the ssh-agent. Since the passphrase is a password, you will not see it as you type.

You will see system output that resembles the following:

Enter same passphrase again.

5. Reenter the passphrase.

You will see system output that resembles the following:

Your identification has been saved in /root/.ssh/id_dsa.

Your public key has been saved in /root/.ssh/id_dsa.pub.

The key fingerprint is: 60:83:86:6c:38:c7:9e:57:c6:f1:a1:f3:f0:e8:15:86

Note: Even if the local system is part of the cluster, make sure to take step 6 or step 7 (as the case might be) to complete the ssh configuration.

6. Append the file /root/.ssh/id_dsa.pub to /root/.ssh/authorized_keys2 on the first system in the cluster where VCS is to be installed by entering the following commands:

a. # sftp linuxnode1

If you are performing this step for the first time on this machine, an output similar to the following would be displayed:

Connecting to linuxnode1...

The authenticity of host 'linuxnode1 (10.182.12.3)' can't be established.

RSA key fingerprint is fb:6f:9f:61:91:9d:44:6b:87:86:ef:68:a6:fd:88:7d.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'linuxnode1,10.182.12.3' (RSA) to the list of known hosts.

root@linuxnode1's password:

b. Enter the root password:

c. Enter the following at the sftp prompt:

sftp> put /root/.ssh/id_dsa.pub

You will see the following output:

Uploading /root/.ssh/id_dsa.pub to /root/id_dsa.pub

d. Quit the sftp session by entering the following command:

sftp> quit

e. Start the ssh session with linuxnode1 with the following command:

# ssh linuxnode1

f. Enter the root password at the following prompt:

root@linuxnode1's password:

g. After log in, enter the following command:

# cat id_dsa.pub >> /root/.ssh/authorized_keys2

h. Log out of the ssh session by entering the following command:

# exit

7. Append the file /root/.ssh/id_dsa.pub to /root/.ssh/authorized_keys2 on the second system in the cluster where VCS is to be installed by entering the following commands:

a. # sftp linuxnode2

If you are performing this step for the first time on this machine, an output similar to the following would be displayed:

Connecting to linuxnode2...

The authenticity of host 'linuxnode2 (10.182.14.5)' can't be established.

RSA key fingerprint is fb:6f:9f:61:91:9d:44:6b:87:86:ef:68:a6:fd:89:8d.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'linuxnode2,10.182.14.5' (RSA) to the list of known hosts.

root@linuxnode2's password:

b. Enter the root password:

c. Enter the following at the sftp prompt:

sftp> put /root/.ssh/id_dsa.pub

You will see the following output:

Uploading /root/.ssh/id_dsa.pub to /root/id_dsa.pub

d. Quit the sftp session by entering the following command:

sftp> quit

e. Start the ssh session with linuxnode2 with the following command:

# ssh linuxnode2

f. Enter the root password at the following prompt:

root@linuxnode2's password:

g. After log in, enter the following command:

# cat id_dsa.pub >> /root/.ssh/authorized_keys2

h. Log out of the ssh session by entering the following command:

# exit

Note: When installing VCS from a node which will participate in the cluster, it is important to add the local system's id_dsa.pub key to the local ~/.ssh/authorized_keys2. Failing to do this will result in a password request during installation, causing the installation to fail.
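The append in step g of steps 6 and 7 can be wrapped so that running it twice does not leave duplicate keys behind. This is a sketch only: append_key is a made-up name, and the authorized_keys2 file name is taken from the steps above.

```shell
#!/bin/sh
# append_key PUBFILE AUTHFILE (hypothetical helper)
# Append the public key in PUBFILE to AUTHFILE unless that exact line is
# already there. AUTHFILE is created if needed and kept private (mode 600).
append_key() {
    pub="$1"
    auth="$2"
    touch "$auth" && chmod 600 "$auth" || return 1
    # -F fixed strings, -x whole-line match, -f read the pattern from PUBFILE
    grep -qxF -f "$pub" "$auth" || cat "$pub" >> "$auth"
}
```

After the sftp upload, the equivalent of step g on each node would be: append_key /root/id_dsa.pub /root/.ssh/authorized_keys2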

8. At the prompt on the linuxnode1, configure the ssh-agent to remember the passphrase.

(This removes the need to enter the passphrase for each ssh or scp invocation.) Run the following commands on the system from which the installation is taking place:

# exec /usr/bin/ssh-agent $SHELL

# ssh-add

Note: This step is shell-specific and is valid only while that shell is alive. You need to re-execute the procedure if you close the shell during the session.

9. When prompted, enter your DSA passphrase.

The output will look something like this:

Need passphrase for /root/.ssh/id_dsa

Enter passphrase for /root/.ssh/id_dsa

Identity added: /root/.ssh/id_dsa (dsa w/o comment)

Identity added: /root/.ssh/id_rsa (rsa w/o comment)

Identity added: /root/.ssh/identity (root@linuxnode1)

You are ready to install VCS on several systems by running the installvcs script on any one of them or on an independent machine outside the cluster.

To avoid running ssh-agent in each shell when using the X Window System, configure your X session so that you will not be prompted for the passphrase. Refer to the Red Hat documentation for more information.

10. To verify that you can connect to the systems on which VCS is to be installed, enter:

# ssh -l root linuxnode1 ls

# ssh -l root linuxnode2 ifconfig

The commands should execute on the remote system without having to enter a passphrase or password.

Note: You can also configure ssh in other ways. Regardless of how ssh is configured, complete step 10 in the example above to verify the configuration.
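The check in step 10 can also be looped over every node. The sketch below is an assumption-laden convenience, not something from the install guide: check_nodes and the SSH variable are names I made up, and BatchMode=yes is the ssh option that makes the client fail instead of prompting for a password or passphrase.

```shell
#!/bin/sh
# SSH can be overridden (for example, for testing); it defaults to the real client.
SSH="${SSH:-ssh}"

# check_nodes NODE... (hypothetical helper)
# Try a no-op command as root on each node without allowing any prompt.
check_nodes() {
    rc=0
    for node in "$@"; do
        if $SSH -o BatchMode=yes -l root "$node" true </dev/null; then
            echo "$node: ok"
        else
            echo "$node: FAILED"
            rc=1
        fi
    done
    return $rc
}
```

Run check_nodes linuxnode1 linuxnode2 from the shell where you ran ssh-add. Any FAILED line means installvcs would hit a password prompt on that node.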

Install VCS:

Run the installvcs script from the fst_ha.lxrt2.2MP1 CD. That script is in the /redhat/cluster_server directory. Follow along with the script.

Don’t forget to add /opt/VRTSvcs/bin and /opt/VRTSob/bin to your PATH. To do this in Linux:

vi /root/.bash_profile

Edit the PATH= line to match the below:

PATH=$PATH:$HOME/bin:/opt/VRTSvcs/bin:/opt/VRTSob/bin

If you plan on adding additional users that will need VCS access from the command line then you should also change the .bash_profile in that user’s home directory or you can just add the PATH line to:

vi /etc/skel/.bash_profile

That will add the Veritas binaries path to all users that get added going forward.
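If you would rather not hand-edit the PATH line, a small function in .bash_profile can add the directories only when they are not already there. This is a sketch; add_to_path is a made-up name, and the two directories are the ones named above.

```shell
#!/bin/sh
# add_to_path DIR... (hypothetical helper)
# Append each DIR to PATH unless it is already one of its components.
add_to_path() {
    for dir in "$@"; do
        case ":$PATH:" in
            *":$dir:"*) ;;                  # already present, nothing to do
            *) PATH="$PATH:$dir" ;;
        esac
    done
    export PATH
}
```

Calling add_to_path /opt/VRTSvcs/bin /opt/VRTSob/bin at the end of .bash_profile keeps PATH from growing each time the profile is sourced again.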

Configuring Samba:

On each node (linuxnode1 and linuxnode2):

run ntsysv and make sure the smb service is not selected for automatic startup

edit /etc/samba/smb.conf and make the following changes:

* in the [global] section change the “Server String” to a meaningful name

* the “Server String” name is the NetBIOS name

* find the [tmp] share provided in the existing smb.conf

+ uncomment the entries

+ change the path=/tmp to the path you want to share

+ you can also change [tmp] to another name

Make sure you make these changes on both nodes.
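For reference, here is roughly what the uncommented and renamed share stanza could look like once you are done. The sketch just prints the stanza; print_share, the share name, the path and the comment text are all placeholders of mine, and the public and writable settings mirror the wide-open share used later in this how-to.

```shell
#!/bin/sh
# print_share NAME PATH (hypothetical helper)
# Print a wide-open smb.conf share stanza for NAME exported from PATH.
print_share() {
    cat <<EOF
[$1]
   comment = Clustered share
   path = $2
   public = yes
   writable = yes
EOF
}
```

For example, print_share shared /export/shared shows a stanza you could compare against your edited file. Running testparm -s /etc/samba/smb.conf on each node afterwards is a cheap way to catch syntax slips before VCS tries to start Samba.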

Add user to both nodes:

Add a regular Linux user:

adduser billgates

passwd billgates

Add the Linux user as a Samba user as well:

smbpasswd -a billgates

If you do not add the user to both nodes you will not be able to login when you failover.

Now fire up the VCS GUI from a remote workstation or install the VCS GUI on one of the nodes of your cluster.

Add Samba Service Group:

Add the Samba Group and Select the Samba Group Template.

Delete the DiskReservation and the Mount resources if you are not using shared storage.

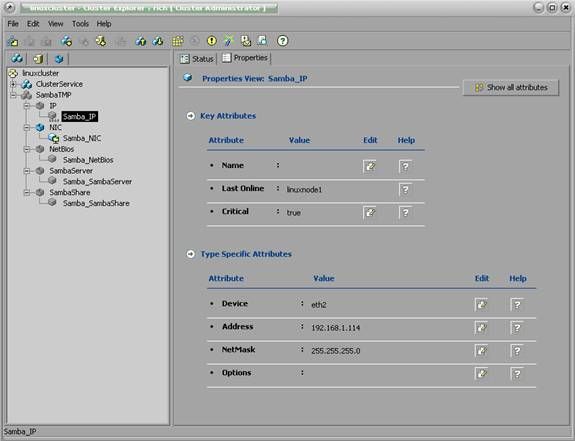

Your Samba Service Group should now look like this.

Configure and enable the Samba IP Resource. Use the device you configured as your publicly accessible NIC back during the OS install. Here I used eth2 which is the card installed in the PCI slot.

Configure the NetBIOS resource. The NetBIOS name is the “Server String” name given in the smb.conf file that we edited previously. Leave the other parameters set to their defaults.

Configure the SambaServer resource. The ConfFile is our /etc/samba/smb.conf file. If you did not edit this file on both nodes, you will have issues when you enable this resource. The LockDir is /var/run, which is the default on Red Hat. The other defaults are fine.

Finally, configure the SambaShare resource. The share name should match whatever you renamed the [tmp] share to in the smb.conf file that we edited earlier. I left mine as [tmp], so my share name is tmp. The share options are the tunable options for the Samba share, and the options for this are legion. The default wide-open share has public set to yes and writable set to yes, which is roughly the equivalent of chmod 777. So for my example the ShareOptions for VCS should be set to: path=/tmp; public=yes; writable=yes. Obviously the path= should be set to the directory you want to share. Files written to this directory will have the permissions of their owners.

All done! Failover test it and connect to your share from both nodes.
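If you prefer to drive the failover test from the command line rather than the GUI, something like the sketch below does it with hagrp. The wrapper name switch_group, the HAGRP variable and the group name SambaGroup are my own placeholders; use whatever you named your service group.

```shell
#!/bin/sh
# HAGRP can be overridden (for example, for testing); it defaults to the VCS command.
HAGRP="${HAGRP:-hagrp}"

# switch_group GROUP SYSTEM (hypothetical helper)
# Ask VCS to move GROUP to SYSTEM, then print the group's state on each system.
switch_group() {
    $HAGRP -switch "$1" -to "$2" && $HAGRP -state "$1"
}
```

For example, switch_group SambaGroup linuxnode2 and then switch_group SambaGroup linuxnode1. The switch is not instantaneous, so the state may still show the group in transition; rerun hagrp -state until it settles, then connect to the share again.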

Bonus Points-Keeping It All In Sync:

Do not rsync the /tmp directory; doing so may well hose your system.

If you choose to use a directory other than /tmp for testing, you can turn on rsyncd on both hosts by using ntsysv to turn on the rsync service. Create the file /etc/rsyncd.conf and add entries similar to the ones below:

[syncthis]

path = /thepathnameyouwanttosync

comment = Sync These Puppies Up!

Since rsync is controlled by the xinetd process on RedHat, start rsync by following the steps below:

vi /etc/xinetd.d/rsync

change the disable = yes line to read disable = no

Now xinetd will answer requests for rsync services. Make sure you do this process on both nodes.
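The same edit can be scripted if you do not feel like opening vi on both nodes. This is a sketch: enable_xinetd_service is a made-up name, and it assumes the usual xinetd layout where the service file contains a line of the form disable = yes.

```shell
#!/bin/sh
# enable_xinetd_service FILE (hypothetical helper)
# Flip "disable = yes" to "disable = no" in an xinetd service file,
# leaving a copy of the original in FILE.bak.
enable_xinetd_service() {
    conf="$1"
    cp "$conf" "$conf.bak" || return 1
    sed 's/^\([[:space:]]*disable[[:space:]]*=[[:space:]]*\)yes/\1no/' \
        "$conf.bak" > "$conf"
}
```

Usage would be enable_xinetd_service /etc/xinetd.d/rsync on each node. If rsync requests are still refused afterwards, xinetd probably has not re-read its configuration yet; reloading or restarting the xinetd service should take care of that.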

Check your work by issuing this command on each node:

On linuxnode1:

[root@linuxnode1 root]# rsync linuxnode2::

syncthis Sync These Puppies Up!

On linuxnode2:

[root@linuxnode2 root]# rsync linuxnode1::

syncthis Sync These Puppies Up!

Run this command from linuxnode1 to synchronize linuxnode2 to linuxnode1:

rsync -av --delete-after --stats --progress --ignore-errors linuxnode2::syncthis /thepathnameyouwanttosync

Run this command from linuxnode2 to synchronize linuxnode1 to linuxnode2:

rsync -av --delete-after --stats --progress --ignore-errors linuxnode1::syncthis /thepathnameyouwanttosync

Note: With the --delete-after option used in the command lines above, if you accidentally delete or otherwise remove data on one of the nodes, rsync will remove it from the other node during the next run. I use it since I keep backups in my real environment, but your appetite for risk may be different. Without a --delete* option, a file that a user deletes on one node will remain on the far node. Bottom line: use the --delete* option and keep good backups.
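Before trusting a deleting sync with real data, it is worth previewing what it would do. The sketch below wraps the same pull with rsync's --dry-run option, which reports the transfer without changing anything. preview_sync and the RSYNC variable are names I made up for illustration.

```shell
#!/bin/sh
# RSYNC can be overridden (for example, for testing); it defaults to the real binary.
RSYNC="${RSYNC:-rsync}"

# preview_sync PEER MODULE DEST (hypothetical helper)
# Show what a deleting pull of PEER::MODULE into DEST would do, without doing it.
preview_sync() {
    $RSYNC -av --delete-after --dry-run "$1::$2" "$3"
}
```

For example, on linuxnode1: preview_sync linuxnode2 syncthis /thepathnameyouwanttosync. Files that the real run would remove show up in the output as "deleting" lines.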

Add these commands to crontab on each box on whatever schedule you choose:

crontab -e

Add on linuxnode1:

9-59/10 * * * * rsync -av --delete-after --stats --progress --ignore-errors linuxnode2::syncthis /thepathnameyouwanttosync

Add on linuxnode2:

7-57/10 * * * * rsync -av --delete-after --stats --progress --ignore-errors linuxnode1::syncthis /thepathnameyouwanttosync
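A stepped range in the cron minute field, written start-end/step, fires every step minutes from start up to end. The sketch below expands such a range so you can check that the two nodes never kick off a sync in the same minute. expand_minutes is a made-up helper, not a cron tool.

```shell
#!/bin/sh
# expand_minutes START END STEP (hypothetical helper)
# Print the minutes matched by the cron minute field START-END/STEP.
expand_minutes() {
    m="$1"
    out=""
    while [ "$m" -le "$2" ]; do
        out="${out:+$out }$m"       # space-separate after the first value
        m=$((m + $3))
    done
    echo "$out"
}
```

expand_minutes 9 59 10 prints 9 19 29 39 49 59, and expand_minutes 7 57 10 prints 7 17 27 37 47 57, so with those two fields the nodes always start two minutes apart.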

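crontab -e installs the new crontab for you and cron picks up the change on its own, so a restart is normally not needed. If you want to be certain, restarting crond on each box does no harm: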

/etc/init.d/crond restart

Homework:

Make rsync highly available and don’t forget to turn it off in ntsysv and let VCS take control of it. This is harder than it sounds.
