keepalived (2)

VRRP with the LVS DR model

There are four hosts: .150, .151, .140, and .120.

Note: the clocks on all servers must be synchronized.
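One way to sanity-check the time-synchronization requirement is to compare epoch seconds between two hosts. The sketch below is only an illustration: the helper name `clock_skew_ok` and the 2-second tolerance in the usage comment are assumptions, not part of the original setup.

```bash
#!/bin/bash
# clock_skew_ok LOCAL_EPOCH REMOTE_EPOCH MAX_SKEW
# Succeeds (exit 0) when the two timestamps differ by at most MAX_SKEW seconds.
clock_skew_ok() {
    local diff=$(( $1 - $2 ))
    # take the absolute value of the difference
    [ "$diff" -lt 0 ] && diff=$(( -diff ))
    [ "$diff" -le "$3" ]
}

# Example (assumes ssh access to the peer; not run automatically):
#   clock_skew_ok "$(date +%s)" "$(ssh 172.168.254.120 date +%s)" 2 && echo "clocks OK"
```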

.140:

Configure the HTTP web page file

[root@node3 ~]# echo "<h1>node3 (node3.zye.com)</h1>" > /var/www/html/index.html

[root@node3 ~]# cat /var/www/html/index.html

<h1>node3 (node3.zye.com)</h1>

Create the script and check it

[root@node3 ~]# vim rs.sh

#!/bin/bash

#

vip="172.168.254.52"

start () {

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

ifconfig lo:0 $vip broadcast $vip netmask 255.255.255.255 up

route add -host $vip dev lo:0

}

stop () {

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

ifconfig lo:0 down

}

case $1 in

start)

start

;;

stop)

stop

;;

*)

echo "Usage:`basename $0` {start|stop}"

exit 1

esac

[root@node3 ~]# bash -n rs.sh

[root@node3 ~]# bash rs.sh

Usage:rs.sh {start|stop}

[root@node3 ~]# bash rs.sh start

You have new mail in /var/spool/mail/root

[root@node3 ~]# ifconfig lo:0

lo:0 Link encap:Local Loopback

inet addr:172.168.254.52 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:65536 Metric:1

[root@node3 ~]# cat /proc/sys/net/ipv4/conf/all/arp_ignore

1

[root@node3 ~]# cat /proc/sys/net/ipv4/conf/all/arp_announce

2

You have new mail in /var/spool/mail/root

Copy the script to .120

[root@node3 ~]# scp rs.sh 172.168.254.120:/root

The authenticity of host '172.168.254.120 (172.168.254.120)' can't be established.

RSA key fingerprint is 0e:95:8d:de:b9:2f:c4:75:8d:70:af:e2:84:65:7f:86.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '172.168.254.120' (RSA) to the list of known hosts.

root@172.168.254.120's password:

rs.sh 100% 703 0.7KB/s 00:00

You have new mail in /var/spool/mail/root

.120:

Configure the HTTP web page file

[root@node120 ~]# echo "<h1>node120 (node120.zye.com)</h1>" > /var/www/html/index.html

[root@node120 ~]# cat /var/www/html/index.html

<h1>node120 (node120.zye.com)</h1>

Run the copied script rs.sh

[root@node120 ~]# bash rs.sh

Usage:rs.sh {start|stop}

[root@node120 ~]# bash rs.sh start

[root@node120 ~]# ifconfig lo:0

lo:0 Link encap:Local Loopback

inet addr:172.168.254.52 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:65536 Metric:1

[root@node120 ~]# cat /proc/sys/net/ipv4/conf/all/{arp_ignore,arp_announce}

1

2

[root@node120 ~]# cat /proc/sys/net/ipv4/conf/lo/{arp_ignore,arp_announce}

1

2
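The manual `cat` checks above can be wrapped in a small helper and run on each real server. This is a sketch under assumptions: the function name `check_arp_params` and its optional directory argument are hypothetical, and it only checks the four values that rs.sh sets.

```bash
#!/bin/bash
# check_arp_params [CONF_DIR]
# Verifies arp_ignore=1 and arp_announce=2 for the "all" and "lo" entries.
# CONF_DIR defaults to the real kernel path; it is a parameter only so the
# function can be pointed at a test directory.
check_arp_params() {
    local base=${1:-/proc/sys/net/ipv4/conf}
    local iface rc=0
    for iface in all lo; do
        [ "$(cat "$base/$iface/arp_ignore" 2>/dev/null)" = "1" ] \
            || { echo "$iface: arp_ignore is not 1"; rc=1; }
        [ "$(cat "$base/$iface/arp_announce" 2>/dev/null)" = "2" ] \
            || { echo "$iface: arp_announce is not 2"; rc=1; }
    done
    return $rc
}

# Example: check_arp_params && echo "ARP parameters look right for DR"
```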

.150 (continuing from the previous blog post)

[root@node200 ~]# yum install ipvsadm -y

Set the interface address

[root@node200 ~]# ifconfig eth0

eth0 Link encap:Ethernet HWaddr 00:0C:29:9F:1F:E5

inet addr:172.168.254.150 Bcast:172.168.254.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe9f:1fe5/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:423297 errors:0 dropped:0 overruns:0 frame:0

TX packets:106380 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:546666043 (521.3 MiB) TX bytes:20216791 (19.2 MiB)

[root@node200 ~]# ifconfig eth0:0 172.168.254.52 broadcast 172.168.254.52 netmask 255.255.255.255 up

[root@node200 ~]# route add -host 172.168.254.52 dev eth0:0

[root@node200 ~]# ifconfig eth0:0 &&route -n

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:9F:1F:E5

inet addr:172.168.254.52 Bcast:172.168.254.52 Mask:255.255.255.255

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

172.168.254.52 0.0.0.0 255.255.255.255 UH 0 0 0 eth0

172.168.254.0 0.0.0.0 255.255.255.0 U 1 0 0 eth0

0.0.0.0 172.168.254.254 0.0.0.0 UG 0 0 0 eth0

Create the ipvsadm rules

[root@node200 ~]# ipvsadm -A -t 172.168.254.52:80 -s rr

[root@node200 ~]# ipvsadm -a -t 172.168.254.52:80 -r 172.168.254.140:80 -w 1 -g

[root@node200 ~]# ipvsadm -a -t 172.168.254.52:80 -r 172.168.254.120:80 -w 1

[root@node200 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.168.254.52:80 rr

-> 172.168.254.120:80 Route 1 0 0

-> 172.168.254.140:80 Route 1 0 0

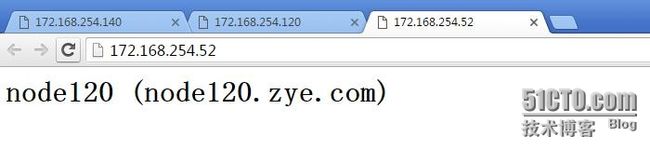

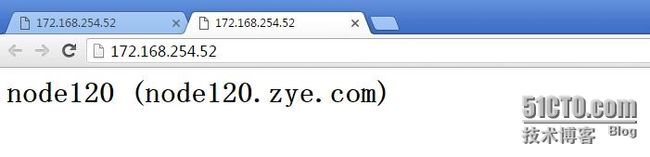
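The ipvsadm commands used on the director follow a fixed pattern: one `-A` line for the virtual service, then one `-a` line per real server. The sketch below prints that pattern for any VIP and server list without executing anything. The function name `print_ipvs_rules` is hypothetical, and the weight of 1 and the rr scheduler are simply copied from the commands in this post.

```bash
#!/bin/bash
# print_ipvs_rules VIP PORT RS...
# Prints (does not execute) the ipvsadm commands for a round-robin DR service.
print_ipvs_rules() {
    local vip=$1 port=$2 rs
    shift 2
    echo "ipvsadm -A -t $vip:$port -s rr"
    for rs in "$@"; do
        # -g selects direct routing (gatewaying), which is also ipvsadm's default
        echo "ipvsadm -a -t $vip:$port -r $rs:$port -g -w 1"
    done
}

print_ipvs_rules 172.168.254.52 80 172.168.254.140 172.168.254.120
```

Piping the output to `sh` as root would apply the rules; printing first makes it easy to review them.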

Test

Flush the rules, disable start at boot, and bring the interface down

[root@node200 ~]# ipvsadm -C

[root@node200 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

[root@node200 ~]# chkconfig ipvsadm off

[root@node200 ~]# chkconfig --list ipvsadm

ipvsadm 0:off 1:off 2:off 3:off 4:off 5:off 6:off

[root@node200 ~]# ifconfig eth0:0 down && ifconfig && route -n

eth0 Link encap:Ethernet HWaddr 00:0C:29:9F:1F:E5

inet addr:172.168.254.150 Bcast:172.168.254.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe9f:1fe5/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:437150 errors:0 dropped:0 overruns:0 frame:0

TX packets:117182 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:547591624 (522.2 MiB) TX bytes:20896753 (19.9 MiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:244 errors:0 dropped:0 overruns:0 frame:0

TX packets:244 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:35608 (34.7 KiB) TX bytes:35608 (34.7 KiB)

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

172.168.254.0 0.0.0.0 255.255.255.0 U 1 0 0 eth0

0.0.0.0 172.168.254.254 0.0.0.0 UG 0 0 0 eth0

Stop keepalived

[root@node200 ~]# service keepalived stop

Stopping keepalived: [  OK  ]

[root@node200 ~]# cd /etc/keepalived/

[root@node200 keepalived]# mv keepalived.conf keepalived.conf.dualmaster

[root@node200 keepalived]# cp keepalived.conf.bak keepalived.conf

[root@node200 keepalived]# vim keepalived.conf

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass 9999

}

virtual_ipaddress {

172.168.254.52/32 brd 172.168.254.52 dev eth0 label eth0:0

}

track_script {

chk_mt_down

}

}

Start keepalived

[root@node200 keepalived]# service keepalived start

Starting keepalived: [  OK  ]

Use TCP_CHECK for all back-end server health checks (modify one real server or both)

[root@node200 keepalived]# vim keepalived.conf

real_server 192.168.0.130 80 {

weight 1

TCP_CHECK {

connect_ip 192.168.0.130

connect_port 80

connect_timeout 3

}

}

real_server 192.168.0.120 80 {

weight 1

TCP_CHECK {

connect_ip 192.168.0.120

connect_port 80

connect_timeout 3

}

}

}

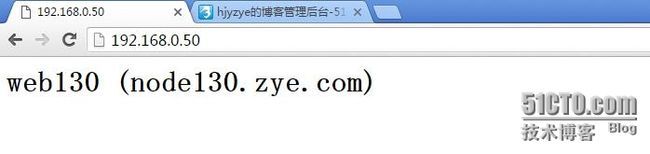
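To see by hand what a TCP-level check amounts to, a connection attempt with a timeout is enough. The sketch below is an approximation written for illustration, not keepalived's own implementation: the function name `tcp_check` is hypothetical, and it relies on bash's `/dev/tcp` pseudo-device and the coreutils `timeout` command being available.

```bash
#!/bin/bash
# tcp_check HOST PORT [TIMEOUT]
# Succeeds when a TCP connection to HOST:PORT can be opened within TIMEOUT
# seconds (default 3, matching connect_timeout in the config above).
tcp_check() {
    local host=$1 port=$2 t=${3:-3}
    # the child bash opens the socket on fd 3 and exits; a refused or
    # timed-out connection makes it exit non-zero
    timeout "$t" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Example: tcp_check 192.168.0.130 80 3 && echo up || echo down
```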

Stop httpd on .130

[root@node130 ~]# service httpd stop

Stopping httpd: [  OK  ]

Query

[root@node200 keepalived]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.50:80 rr

-> 192.168.0.120:80 Route 1 0 0

Start httpd on .130

[root@node130 ~]# service httpd start

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using node130.zye.com for ServerName

[  OK  ]

Query

[root@node200 keepalived]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.50:80 rr

-> 192.168.0.120:80 Route 1 0 0

-> 192.168.0.130:80 Route 1 0 0

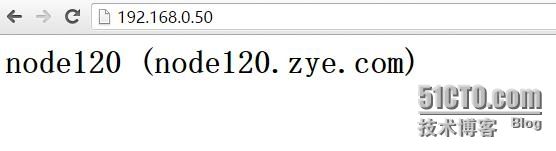

Verify

.151 (continuing from the previous blog post)

[root@node2 ~]# yum install -y ipvsadm

Add the interface

[root@node2 ~]# ifconfig eth0:0 172.168.254.52 broadcast 172.168.254.52 netmask 255.255.255.255 up

[root@node2 ~]# route add -host 172.168.254.52 dev eth0:0

[root@node2 ~]# ipvsadm -A -t 172.168.254.52:80 -s rr

[root@node2 ~]# ipvsadm -a -t 172.168.254.52:80 -r 172.168.254.140:80 -w 1

[root@node2 ~]# ipvsadm -a -t 172.168.254.52:80 -r 172.168.254.120:80 -w 1

[root@node2 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.168.254.52:80 rr

-> 172.168.254.120:80 Route 1 0 0

-> 172.168.254.140:80

[root@node2 ~]# ipvsadm -ln --stats   # (this was rather slow during the experiment)

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Conns InPkts OutPkts InBytes OutBytes

-> RemoteAddress:Port

TCP 172.168.254.52:80 5 5 0 260 0

-> 172.168.254.120:80 3 3 0 156 0

-> 172.168.254.140:80 2 2 0 104 0

Bring down all the interfaces

[root@node2 ~]# chkconfig --list ipvsadm

ipvsadm 0:off 1:off 2:on 3:on 4:on 5:on 6:off

[root@node2 ~]# chkconfig ipvsadm off

[root@node2 ~]# chkconfig --list ipvsadm

ipvsadm 0:off 1:off 2:off 3:off 4:off 5:off 6:off

[root@node2 ~]# ipvsadm -C

[root@node2 ~]# ifconfig eth0:0 down

[root@node2 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:55:35:62

inet addr:172.168.254.151 Bcast:172.168.254.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe55:3562/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:37942 errors:0 dropped:0 overruns:0 frame:0

TX packets:38519 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:2816451 (2.6 MiB) TX bytes:2629871 (2.5 MiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:193 errors:0 dropped:0 overruns:0 frame:0

TX packets:193 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:73360 (71.6 KiB) TX bytes:73360 (71.6 KiB)

[root@node2 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

172.168.254.0 0.0.0.0 255.255.255.0 U 1 0 0 eth0

0.0.0.0 172.168.254.254 0.0.0.0 UG 0 0 0 eth0

Stop keepalived

[root@node2 ~]# service keepalived stop

Stopping keepalived: [  OK  ]

[root@node2 ~]# cd /etc/keepalived/

[root@node2 keepalived]# mv keepalived.conf keepalived.conf.dualmaster

[root@node2 keepalived]# cp keepalived.conf.bak keepalived.conf

[root@node2 keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

keepadm@zye.com

}

notification_email_from asda@12.com

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script chk_mt_down {

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 9999

}

virtual_ipaddress {

172.168.254.52/32 brd 172.168.254.52 dev eth0 label eth0:0

}

track_script {

chk_mt_down

}

notify_master "/etc/keepalived/clean_arp.sh 172.168.254.52"

}

virtual_server 172.168.254.52 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

# persistence_timeout 50

protocol TCP

real_server 172.168.254.140 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 2

nb_get_retry 3

delay_before_retry 1

}

}

real_server 172.168.254.120 80 {

weight 1

HTTP_GET {

url {

path /index.html

status_code 200

}

connect_timeout 2

nb_get_retry 3

delay_before_retry 1

}

}

}
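The retry settings in the HTTP_GET blocks (`nb_get_retry 3`, `delay_before_retry 1`) can be pictured as a loop around a single check. The sketch below is a rough model for experimenting from the command line, not keepalived's actual logic: the helper name `retry_check` is hypothetical, and the curl-based usage line assumes curl is installed.

```bash
#!/bin/bash
# retry_check RETRIES DELAY CMD...
# Runs CMD up to RETRIES times, sleeping DELAY seconds between attempts.
# Succeeds as soon as one attempt succeeds; fails if every attempt fails.
retry_check() {
    local retries=$1 delay=$2 i=1
    shift 2
    while [ "$i" -le "$retries" ]; do
        "$@" && return 0
        # no need to wait after the final attempt
        [ "$i" -lt "$retries" ] && sleep "$delay"
        i=$(( i + 1 ))
    done
    return 1
}

# Example (expects HTTP 200 from /index.html, 2-second timeout per request):
#   retry_check 3 1 sh -c '[ "$(curl -s -o /dev/null -m 2 -w "%{http_code}" http://172.168.254.140/index.html)" = 200 ]'
```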

On both hosts

vim /etc/keepalived/clean_arp.sh # edit the file and add the following code

#!/bin/sh

VIP=$1

GATEWAY=172.168.254.254 # gateway address (the default gateway shown by route -n)

/sbin/arping -I eth0 -c 5 -s $VIP $GATEWAY &>/dev/null

:wq! # save and exit

chmod +x /etc/keepalived/clean_arp.sh

Copy the file to .150

[root@node2 keepalived]# scp keepalived.conf 172.168.254.150:/etc/keepalived/

root@172.168.254.150's password:

keepalived.conf 100% 1377 1.3KB/s 00:00

Start keepalived

[root@node2 keepalived]# service keepalived start

Starting keepalived: [  OK  ]

[root@node2 keepalived]# ipvsadm -ln --stats

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Conns InPkts OutPkts InBytes OutBytes

-> RemoteAddress:Port

TCP 172.168.254.52:80 2 2 0 104 0

-> 172.168.254.120:80 1 1 0 52 0

-> 172.168.254.140:80 1 1 0 52 0

Use the down file

[root@node2 keepalived]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:55:35:62 brd ff:ff:ff:ff:ff:ff

inet 172.168.254.151/24 brd 172.168.254.255 scope global eth0

inet 172.168.254.52/32 brd 172.168.254.52 scope global eth0:0

inet6 fe80::20c:29ff:fe55:3562/64 scope link

valid_lft forever preferred_lft forever

3: pan0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN

link/ether 26:a9:e4:0e:31:1a brd ff:ff:ff:ff:ff:ff

[root@node2 keepalived]# touch down

[root@node2 keepalived]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:55:35:62 brd ff:ff:ff:ff:ff:ff

inet 172.168.254.151/24 brd 172.168.254.255 scope global eth0

inet6 fe80::20c:29ff:fe55:3562/64 scope link

valid_lft forever preferred_lft forever

3: pan0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN

link/ether 26:a9:e4:0e:31:1a brd ff:ff:ff:ff:ff:ff
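The VIP leaves node2 after `touch down` because the tracked script chk_mt_down starts failing and its negative weight is applied to the VRRP priority. The arithmetic can be sketched as follows. The function name `effective_priority` is hypothetical, it models only the negative-weight case used in this config, and the peer priority of 99 is the value from the .150 configuration earlier in this post.

```bash
#!/bin/bash
# effective_priority BASE WEIGHT SCRIPT_OK
# SCRIPT_OK is 1 when the tracked script exits 0, otherwise 0.
# With a negative WEIGHT, a failing script lowers the priority by |WEIGHT|.
effective_priority() {
    local base=$1 weight=$2 ok=$3
    if [ "$ok" -eq 1 ]; then
        echo "$base"
    else
        echo $(( base + weight ))
    fi
}

effective_priority 100 -20 1   # down file absent:  node2 keeps 100, above the peer's 99
effective_priority 100 -20 0   # down file present: node2 drops to 80, below the peer's 99
```

Removing the down file restores the priority to 100, so the VIP should return to node2 as long as preemption is left at its default.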

Test .140: stop the service and check the health status

[root@node3 ~]# service httpd stop

Stopping httpd: [  OK  ]

You have new mail in /var/spool/mail/root

[root@node2 keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.168.254.52:80 rr

-> 172.168.254.120:80 Route 1 0 0

Restart httpd on .140 and check the health status

[root@node3 ~]# service httpd start

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using node3.zye.com for ServerName

[  OK  ]

You have new mail in /var/spool/mail/root

HAProxy dual-master

Install haproxy on both hosts (once testing is done, stop the service and run chkconfig haproxy off)

yum install haproxy -y

[root@node200 keepalived]# vim /etc/haproxy/haproxy.cfg

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

frontend main *:80

# acl url_static path_beg -i /static /images /javascript /stylesheets

# acl url_static path_end -i .jpg .gif .png .css .js

# use_backend static if url_static

default_backend websrvs

#---------------------------------------------------------------------

# static backend for serving up images, stylesheets and such

#---------------------------------------------------------------------

#backend static

# balance roundrobin

# server static 127.0.0.1:4331 check

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend websrvs

balance roundrobin

server web1 192.168.0.130:80 check maxconn 5000

server web2 192.168.0.120:80 check maxconn 5000

listen stats *:9103

stats enable
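With `balance roundrobin` and two servers of equal weight, requests simply alternate between web1 and web2. The sketch below illustrates only that simplified case. The function name `pick_backend` is hypothetical, and HAProxy's real algorithm also takes weights and server health into account.

```bash
#!/bin/bash
# pick_backend REQUEST_NUMBER SERVER...
# Prints the server that plain round robin would choose for the Nth request
# (0-based), assuming equal weights and all servers healthy.
pick_backend() {
    local n=$1
    shift
    # drop (n mod server-count) entries so the chosen server becomes $1
    shift $(( n % $# ))
    echo "$1"
}

for n in 0 1 2 3; do
    pick_backend "$n" web1 web2
done
```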

keepalived on the master node

[root@node200 keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

keepadm@zye.com

}

notification_email_from keepadm@zye.com

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script chk_mt_down {

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1

weight -5

}

vrrp_script chk_haproxy {

script "killall -0 haproxy &> /dev/null"

interval 1

weight -5

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 55

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass lsad

}

virtual_ipaddress {

192.168.0.50/32 brd 192.168.0.50 dev eth0 label eth0:0

}

track_script {

chk_mt_down

chk_haproxy

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

vrrp_instance VI_2 {

state BACKUP

interface eth0

virtual_router_id 56

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass lsad

}

virtual_ipaddress {

192.168.0.51/32 brd 192.168.0.51 dev eth0 label eth0:1

}

track_script {

chk_mt_down

chk_haproxy

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}
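The chk_haproxy script relies on signal 0: nothing is delivered to the process, and the exit status only says whether a matching process exists and can be signalled. `killall -0 haproxy` looks processes up by name. The sketch below shows the same idea with `kill -0` and a PID, which is easier to try out without haproxy installed. The helper name `pid_alive` is hypothetical.

```bash
#!/bin/bash
# pid_alive PID
# Signal 0 delivers nothing; kill only reports whether the process exists
# and whether we are allowed to signal it.
pid_alive() {
    kill -0 "$1" 2>/dev/null
}

pid_alive $$ && echo "current shell is alive"
```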

Backup node

[root@node3 keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

keepadm@zye.com

}

notification_email_from keepadm@zye.com

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script chk_mt_down {

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1

weight -5

}

vrrp_script chk_haproxy {

script "killall -0 haproxy &> /dev/null"

interval 1

weight -5

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 55

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass lsad

}

virtual_ipaddress {

192.168.0.50/32 brd 192.168.0.50 dev eth0 label eth0:0

}

track_script {

chk_mt_down

chk_haproxy

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

vrrp_instance VI_2 {

state MASTER

interface eth0

virtual_router_id 56

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass lsad

}

virtual_ipaddress {

192.168.0.51/32 brd 192.168.0.51 dev eth0 label eth0:1

}

track_script {

chk_mt_down

chk_haproxy

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

On both hosts

vim notify.sh

#!/bin/bash

# Author: MageEdu <linuxedu@foxmail.com>

# description: An example of notify script

#

vip=192.168.0.50

contact='keepadm@localhost'

gateway=192.168.0.1

notify() {

mailsubject="`hostname` to be $1: $vip floating"

mailbody="`date '+%F %H:%M:%S'`: vrrp transition, `hostname` changed to be $1"

echo $mailbody | mail -s "$mailsubject" $contact

}

case "$1" in

master)

notify master

/etc/rc.d/init.d/haproxy start

exit 0

;;

backup)

notify backup

/etc/rc.d/init.d/haproxy restart

exit 0

;;

fault)

notify fault

/etc/rc.d/init.d/haproxy stop

exit 0

;;

*)

echo "Usage: `basename $0` {master|backup|fault}"

exit 1

;;

esac