MATLAB assignment code for Andrew Ng's Machine Learning course

week 5

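This computes the neural network cost function; the regularized cost function adds the term regular. The main point is that y has to be expanded from a single column into one column per label (10 here).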

h1=sigmoid([ones(m,1) X]*Theta1');

h2=sigmoid([ones(m,1) h1]*Theta2');

yp=zeros(m,num_labels);

for n=1:m

yp(n,y(n))=1;

end

regular=lambda/(2*m)*(sum(sum(Theta1(:,2:size(Theta1,2)).^2))+sum(sum(Theta2(:,2:size(Theta2,2)).^2)));

J=(1/m)*(sum(sum(-yp.*log(h2)-(1-yp).*log(1-h2))))+regular;
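The loop that expands y into yp can also be written without a loop by indexing into an identity matrix. The following is a minimal sketch on hypothetical toy labels (the values of y and num_labels here are made up for illustration); it only shows that both forms produce the same matrix.

```matlab
% Hypothetical toy labels: 4 examples, 3 classes
y = [2; 1; 3; 2];
num_labels = 3;
m = numel(y);

% Loop version, as in the notes above
yp_loop = zeros(m, num_labels);
for n = 1:m
    yp_loop(n, y(n)) = 1;
end

% Vectorized version: row y(n) of the identity matrix is the one-hot row for label y(n)
I = eye(num_labels);
yp_vec = I(y, :);

disp(isequal(yp_loop, yp_vec));
```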

The sigmoid gradient is quite simple.

g=sigmoid(z); g=g.*(1-g);
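A quick way to sanity-check the sigmoid gradient is to compare it with a central finite difference. This sketch defines sigmoid as an anonymous function (an assumption; in the assignment it lives in its own file) and uses arbitrary test points.

```matlab
% Stand-in definitions for the assignment's sigmoid.m and sigmoidGradient.m
sigmoid = @(z) 1 ./ (1 + exp(-z));
sigmoidGradient = @(z) sigmoid(z) .* (1 - sigmoid(z));

% At z = 0 the sigmoid is 0.5, so the gradient is 0.5 * 0.5
g0 = sigmoidGradient(0);

% Compare against a central finite difference at a few arbitrary points
z = [-2 -0.5 0 1 3];
epsilon = 1e-6;
numeric = (sigmoid(z + epsilon) - sigmoid(z - epsilon)) / (2 * epsilon);
max_diff = max(abs(sigmoidGradient(z) - numeric));
```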

week 6 ex5

The cost function inside linearRegCostFunction, which has been written before:

J =sum((h-y).^2)/(2*m)+lambda*(sum(theta(2:length(theta),:).^2))/(2*m);

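The key point is that the first element of theta is left out of the regularization term. The first term is the ordinary regression cost; the second term guards against overfitting. lambda controls how closely the model fits, and ordinary regression is the special case lambda = 0. Note that the index over the m examples starts at 1, while the index over the n features starts at 0.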

The gradient grad is also quite simple.

theta(1,1)=0; grad = (X'*(h-y)+(lambda*theta))/m;

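The first statement deals with the fact that there are two formulas (one for theta0, one for the other elements): setting theta0 = 0 removes its regularization term, so the two formulas merge into one.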

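As for the left part, X'*(h-y): on closer inspection, multiplying corresponding elements and then summing is exactly the row-times-column step of a matrix product, (a x n) * (n x b) = (a x b), so the sum simplifies to a matrix multiplication and gives the correct result.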

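To put it in order: the cost function comes first and measures the gap between prediction and target; the partial derivatives are then computed from the cost function, and they are used to correct the output.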
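The relationship between the cost and the gradient can be checked numerically. The sketch below uses made-up data and a hypothetical helper costFn that repeats the cost formula above, and compares the one-line gradient with central finite differences. It works on a copy of theta (t0) rather than overwriting theta itself, which is a small deviation from the snippet above.

```matlab
% Hypothetical toy data: 3 examples, bias column plus one feature
X = [1 1; 1 2; 1 3];
y = [1; 2; 4];
theta = [0.5; 0.5];
lambda = 1;
m = size(X, 1);

% Regularized cost as a function of theta (theta(1) is not regularized)
costFn = @(t) sum((X*t - y).^2)/(2*m) + lambda*sum(t(2:end).^2)/(2*m);

% Analytic gradient: zero the first element of a copy so both formulas merge into one
h = X * theta;
t0 = theta;
t0(1) = 0;
grad = (X' * (h - y) + lambda * t0) / m;

% Numerical gradient by central differences
epsilon = 1e-6;
numgrad = zeros(size(theta));
for j = 1:numel(theta)
    e = zeros(size(theta));
    e(j) = epsilon;
    numgrad(j) = (costFn(theta + e) - costFn(theta - e)) / (2 * epsilon);
end

disp([grad numgrad]);
```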

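The code in learningCurve compares the errors obtained with training sets of different sizes and plots them as curves. The error is the squared-error cost without the regularization term.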

for i = 1:m

% Compute train/cross validation errors using training examples

% X(1:i, :) and y(1:i), storing the result in

% error_train(i) and error_val(i)

[theta] = trainLinearReg( X(1:i,:), y(1:i), lambda);

h = X(1:i,:)*theta;

error_train(i)=sum((h-y(1:i)).^2)/(2*i);

h = Xval*theta;

error_val(i)=sum((h-yval).^2)/(2*length(yval));

end
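To see the shape of a learning curve without the assignment's optimizer, here is a rough sketch on made-up data. It assumes lambda = 0 and uses pinv as a stand-in for trainLinearReg; that substitution is mine, not part of the assignment.

```matlab
% Hypothetical toy data with a bias column
X = [ones(4,1) (1:4)'];
y = [1; 3; 2; 5];
Xval = [ones(2,1) [1.5; 3.5]];
yval = [2; 4];
m = size(X, 1);

error_train = zeros(m, 1);
error_val = zeros(m, 1);
for i = 1:m
    % Stand-in for trainLinearReg(X(1:i,:), y(1:i), 0): least-squares fit via pinv
    theta = pinv(X(1:i,:)) * y(1:i);
    % Training error on the first i examples only
    error_train(i) = sum((X(1:i,:)*theta - y(1:i)).^2) / (2*i);
    % Validation error always on the full validation set
    error_val(i) = sum((Xval*theta - yval).^2) / (2*length(yval));
end

disp([error_train error_val]);
```

With one or two training points a line fits them exactly, so the training error starts at zero and becomes positive once more points are added.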

VALIDATIONCURVE Generate the train and validation errors needed to plot a validation curve that we can use to select lambda

Set lambda to a range of different values.

for i = 1:length(lambda_vec)

lambda = lambda_vec(i);

% Compute train / val errors when training linear

% regression with regularization parameter lambda

% You should store the result in error_train(i)

% and error_val(i)

[theta] = trainLinearReg( X, y, lambda);

h = X*theta;

error_train(i)=sum((h-y).^2)/(2*(length(y)));

h = Xval*theta;

error_val(i)=sum((h-yval).^2)/(2*length(yval));

end
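Once error_val is filled in, lambda can be chosen as the value with the smallest validation error. The sketch below uses made-up data and, as an assumption, replaces trainLinearReg with the closed-form minimizer of the regularized cost; the names L, best and best_lambda are illustrative.

```matlab
% Hypothetical toy data with a bias column
X = [ones(5,1) (1:5)'];
y = [1.2; 1.9; 3.2; 3.8; 5.1];
Xval = [ones(3,1) [1.5; 2.5; 4.5]];
yval = [1.4; 2.6; 4.4];
lambda_vec = [0 0.01 0.1 1 10];

% Regularization matrix: identity with a zero for the bias term
L = eye(size(X, 2));
L(1, 1) = 0;

error_train = zeros(length(lambda_vec), 1);
error_val = zeros(length(lambda_vec), 1);
for i = 1:length(lambda_vec)
    lambda = lambda_vec(i);
    % Stand-in for trainLinearReg: solve (X'X + lambda*L) theta = X'y
    theta = (X'*X + lambda*L) \ (X'*y);
    error_train(i) = sum((X*theta - y).^2) / (2*length(y));
    error_val(i) = sum((Xval*theta - yval).^2) / (2*length(yval));
end

% Pick the lambda with the lowest validation error
[~, best] = min(error_val);
best_lambda = lambda_vec(best);
```

The training error can only grow as lambda grows, which is why the choice is made on the validation error instead.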

week 7 SVM

Gaussian kernel function, gaussianKernel.m

sim = exp(-sum((x1-x2).^2)/(2*sigma*sigma));
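As a worked example of the kernel formula: for the sample vectors below the squared distance is 1 + 4 + 4 = 9, and with sigma = 2 the exponent is -9/8, so the similarity is exp(-1.125), roughly 0.3247. The input values are chosen only for illustration.

```matlab
% Illustrative inputs
x1 = [1 2 1];
x2 = [0 4 -1];
sigma = 2;

% Same formula as in gaussianKernel.m above
sim = exp(-sum((x1 - x2).^2) / (2 * sigma * sigma));

% Identical vectors give similarity 1
sim_same = exp(-sum((x1 - x1).^2) / (2 * sigma * sigma));

disp([sim sim_same]);
```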

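Choosing C and sigma (dataset3Params.m)

% test is assumed to be a row vector of candidate values, shared by C and sigma

% ee is a fraction in [0,1], so any starting value above 1 works

min_e = 10;

for i = 1:size(test,2)

C_temp = test(i);

for j = 1:size(test,2)

sigma_temp = test(j);

model= svmTrain(X, y, C_temp, @(x1, x2) gaussianKernel(x1, x2, sigma_temp));

predictions = svmPredict(model, Xval);

% fraction of misclassified cross-validation examples

ee = mean(double(predictions ~= yval));

if ee< min_e

% keep the best (C, sigma) pair seen so far

C=C_temp;

sigma = sigma_temp;

min_e = ee;

end

end

end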

Email Preprocessing

% look the word up in the vocabulary; inx is its position, or 0 if absent

[bool,inx]=ismember(str,vocabList);

if bool ==1

word_indices(end+1)= inx;

end

Email Feature Extraction

for i =1:length(word_indices)

x(word_indices(i))=1;

end

A simpler version seen on the forum:

x(word_indices)=1;

One line, and no loop needed.
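A small check that the loop and the one-line version agree, including when an index appears more than once. The vocabulary size n and the indices are made up.

```matlab
% Hypothetical vocabulary size and word indices (index 3 is repeated)
n = 10;
word_indices = [3 7 3 9];

% Loop version
x_loop = zeros(n, 1);
for i = 1:length(word_indices)
    x_loop(word_indices(i)) = 1;
end

% Vectorized version: assigning through an index vector sets all entries at once
x_vec = zeros(n, 1);
x_vec(word_indices) = 1;

disp(isequal(x_loop, x_vec));
```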

Dimensionality reduction

findClosestCentroids

for i = 1:size(X,1)

maxdis = Inf;

for j = 1:K

d = sum((X(i,:)-centroids(j,:)).^2);

if d < maxdis

idx(i)=j;

maxdis = d;

end

end

end
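The inner search for the nearest centroid can also be expressed with min over a distance matrix. This is one possible variant on made-up points, not the assignment's reference solution; D and diffs are illustrative names.

```matlab
% Hypothetical toy points and centroids
X = [1 1; 1.2 0.8; 5 5; 5.1 4.9];
centroids = [1 1; 5 5];
K = size(centroids, 1);

% D(i,j) = squared distance from point i to centroid j
D = zeros(size(X, 1), K);
for j = 1:K
    diffs = X - repmat(centroids(j,:), size(X, 1), 1);
    D(:, j) = sum(diffs.^2, 2);
end

% Index of the smallest distance in each row
[~, idx] = min(D, [], 2);

disp(idx');
```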

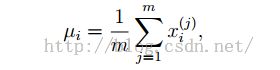

computeCentroids

for i = 1:K

cc = 0;

for j = 1:m

if idx(j) == i

centroids(i,:)=centroids(i,:)+X(j,:);

cc = cc + 1;

end

end

centroids(i,:)=centroids(i,:)/cc;

end
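The same centroid update can be written with logical indexing and mean. The sketch below, on made-up data, also skips clusters with no members, since dividing by cc = 0 in the loop above would give NaN. Leaving an empty cluster's centroid at zero is my choice for the example, not something the assignment prescribes.

```matlab
% Hypothetical toy data: 5 points, 3 clusters, cluster 3 has no members
X = [1 1; 3 1; 5 5; 7 5; 9 9];
idx = [1; 1; 2; 2; 2];
K = 3;

centroids = zeros(K, size(X, 2));
for i = 1:K
    members = (idx == i);            % logical mask of points assigned to cluster i
    if any(members)
        centroids(i, :) = mean(X(members, :), 1);
    end
end

disp(centroids);
```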

pca.m

Sigma = X'*X/m; [U,S,V]=svd(Sigma);

projectData

Ureduce = U(:, 1:K); Z = X*Ureduce;

recoverData

X_rec = Z * U(:, 1:K)';
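Putting pca, projectData and recoverData together on a tiny made-up data set shows what the round trip does: with K = 1 the component along the second principal direction is dropped, and with K equal to the number of features the data is recovered exactly.

```matlab
% Hypothetical zero-mean data: most variance lies along the first axis
X = [2 0; 0 1; -2 0; 0 -1];
m = size(X, 1);

% pca: covariance matrix and its singular vectors
Sigma = X' * X / m;
[U, S, V] = svd(Sigma);

% projectData and recoverData with K = 1
K = 1;
Z = X * U(:, 1:K);
X_rec = Z * U(:, 1:K)';

% With K = 2 (all components) nothing is lost
X_full = (X * U(:, 1:2)) * U(:, 1:2)';

disp(X_rec);
```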

ex8

Estimate Gaussian Parameters

mu = sum(X,1)'/m;

for i=1:m

sigma2 = sigma2+(X(i,:)'-mu).^2;

end

sigma2 = sigma2/m;
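The loop above computes the per-feature variance normalized by m. As a sketch on made-up data, the same values can be obtained from the built-in mean and var, where the second argument 1 asks var to divide by m instead of m - 1.

```matlab
% Hypothetical toy data: 3 examples, 2 features
X = [1 2; 3 4; 5 9];
m = size(X, 1);

% Loop version, as in the notes above
mu = sum(X, 1)' / m;
sigma2 = zeros(size(X, 2), 1);
for i = 1:m
    sigma2 = sigma2 + (X(i,:)' - mu).^2;
end
sigma2 = sigma2 / m;

% Built-in version: var(X, 1, 1) normalizes by m along dimension 1
mu_vec = mean(X, 1)';
sigma2_vec = var(X, 1, 1)';

disp([sigma2 sigma2_vec]);
```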

Select Threshold

cvPredictions = (pval<epsilon);

fp = sum((cvPredictions == 1) &(yval == 0));

tp = sum((cvPredictions == 1) & (yval == 1));

fn = sum((cvPredictions == 0) &(yval == 1));

prec = tp/(tp+fp);

rec = tp/(tp+fn);

F1=2*prec*rec/(prec+rec);
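A worked example of the F1 computation with made-up numbers: of five validation points, three fall below epsilon, two of which are real anomalies. That gives tp = 2, fp = 1, fn = 0, so precision is 2/3, recall is 1, and F1 is 0.8.

```matlab
% Hypothetical validation probabilities, labels and threshold
pval = [0.01; 0.2; 0.02; 0.3; 0.005];
yval = [1; 0; 0; 0; 1];
epsilon = 0.05;

% Flag as anomaly when the probability is below the threshold
cvPredictions = (pval < epsilon);

fp = sum((cvPredictions == 1) & (yval == 0));
tp = sum((cvPredictions == 1) & (yval == 1));
fn = sum((cvPredictions == 0) & (yval == 1));

prec = tp / (tp + fp);
rec = tp / (tp + fn);
F1 = 2 * prec * rec / (prec + rec);

disp(F1);
```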

Collaborative Filtering Cost

J = sum(sum(((X*Theta'-Y).*R).^2))/2;

Collaborative Filtering Gradient

X_grad =((X*Theta'-Y).*R)*Theta; Theta_grad = ((X*Theta'-Y).*R)'*X;

Regularized Cost

J = sum(sum(((X*Theta'-Y).*R).^2))/2+lambda*(sum(sum(Theta.^2))+sum(sum(X.^2)))/2;
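Differentiating the regularized cost adds lambda*X and lambda*Theta to the two gradients given earlier. The sketch below, on a made-up 3-movie, 2-user problem, checks those regularized gradients against central finite differences; costFn, E and the num_* variables are illustrative names.

```matlab
% Hypothetical toy problem: 3 movies, 2 users, 2 features
X = [0.5 -0.2; 0.1 0.4; -0.3 0.8];
Theta = [0.2 0.6; -0.5 0.3];
Y = [5 0; 3 1; 0 4];
R = [1 0; 1 1; 0 1];      % R(i,j) = 1 if user j rated movie i
lambda = 1.5;

% Regularized cost as a function of X and Theta
costFn = @(X, Theta) sum(sum(((X*Theta' - Y).*R).^2))/2 ...
    + lambda*(sum(sum(Theta.^2)) + sum(sum(X.^2)))/2;

% Regularized gradients: unregularized gradients plus lambda times the parameter
E = (X*Theta' - Y) .* R;
X_grad = E*Theta + lambda*X;
Theta_grad = E'*X + lambda*Theta;

% Central-difference check, one parameter at a time
epsilon = 1e-5;
num_X_grad = zeros(size(X));
for k = 1:numel(X)
    P = zeros(size(X));
    P(k) = epsilon;
    num_X_grad(k) = (costFn(X + P, Theta) - costFn(X - P, Theta)) / (2*epsilon);
end
num_Theta_grad = zeros(size(Theta));
for k = 1:numel(Theta)
    P = zeros(size(Theta));
    P(k) = epsilon;
    num_Theta_grad(k) = (costFn(X, Theta + P) - costFn(X, Theta - P)) / (2*epsilon);
end
```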