Errors when running the WordCount program in Eclipse

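Sometimes, for convenience while learning or coding, or because the Linux server is not physically at hand, we can only work with Hadoop remotely. In that case we have to connect from Windows to the Hadoop services on the server in order to run jobs.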

I ran into several problems during configuration. Here I will cover only one of them: the error that occurred when running the WordCount program.

As shown in the figure below:

Is it fairly clear? The error is raised in the checkReturnValue method of FileUtil. What exactly goes wrong? Let's look at the source:

    private static void checkReturnValue(boolean rv, File p,
                                         FsPermission permission
                                         ) throws IOException {
      if (!rv) {
        throw new IOException("Failed to set permissions of path: " + p +
                              " to " +
                              String.format("%04o", permission.toShort()));
      }
    }
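Why would `rv` be false in the first place? As far as I can tell, Hadoop 1.x `FileUtil.setPermission` falls back to the `java.io.File` permission setters when the native library is not loaded, and the `File` Javadoc notes that clearing a permission fails on file systems that do not implement it, which is typically the case on Windows. The probe below is only a sketch for checking this on your own machine. The class name `PermissionProbe` is made up for illustration and is not part of Hadoop.

```java
import java.io.File;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;

// Hypothetical helper, not part of Hadoop: reports what the java.io.File
// permission setters return on the current platform.
class PermissionProbe {

    // Returns the results of setReadable(false), setWritable(false) and
    // setExecutable(false), in that order, observed on a throwaway temp file.
    static boolean[] probe() {
        File f;
        try {
            f = Files.createTempFile("perm-probe", ".tmp").toFile();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        try {
            boolean r = f.setReadable(false, false);
            boolean w = f.setWritable(false, false);
            boolean x = f.setExecutable(false, false);
            return new boolean[] { r, w, x };
        } finally {
            // Restore write access so the file can be deleted on every platform.
            f.setWritable(true, false);
            f.delete();
        }
    }

    public static void main(String[] args) {
        boolean[] rv = probe();
        System.out.println("setReadable(false)   -> " + rv[0]);
        System.out.println("setWritable(false)   -> " + rv[1]);
        System.out.println("setExecutable(false) -> " + rv[2]);
    }
}
```

A `false` in any position corresponds to the situation in which `checkReturnValue` throws.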

Now create a new project in Eclipse and import the hadoop-core source code.

Comment out the if statement in the checkReturnValue method, then recompile and repackage.
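The change can be sketched outside Hadoop as follows. This is a stand-alone illustration, not the actual patched file: `CheckReturnValueSketch` and its method names are hypothetical, and a plain `int` stands in for `FsPermission` so that the snippet compiles without hadoop-core. Keep in mind that the patched variant silently ignores every permission failure, so it is only reasonable for a local development setup.

```java
import java.io.File;
import java.io.IOException;

// Hypothetical stand-alone sketch; an int replaces Hadoop's FsPermission.
class CheckReturnValueSketch {

    // Mirrors the original logic: a false return value becomes an IOException.
    static void checkOriginal(boolean rv, File p, int permission) throws IOException {
        if (!rv) {
            throw new IOException("Failed to set permissions of path: " + p
                    + " to " + String.format("%04o", permission));
        }
    }

    // Patched variant: the check is commented out, so failures are ignored.
    static void checkPatched(boolean rv, File p, int permission) throws IOException {
        // if (!rv) {
        //     throw new IOException("Failed to set permissions of path: " + p
        //             + " to " + String.format("%04o", permission));
        // }
    }

    // Convenience wrapper: returns the error message, or "ok" if nothing was thrown.
    static String run(boolean patched, boolean rv, String path, int permission) {
        try {
            File p = new File(path);
            if (patched) {
                checkPatched(rv, p, permission);
            } else {
                checkOriginal(rv, p, permission);
            }
            return "ok";
        } catch (IOException e) {
            return e.getMessage();
        }
    }

    public static void main(String[] args) {
        // "staging" is an arbitrary example path; 0700 is an octal literal.
        System.out.println("original: " + run(false, false, "staging", 0700));
        System.out.println("patched:  " + run(true, false, "staging", 0700));
    }
}
```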

Import the repackaged jar back into Eclipse and run the program again. This time, however, a different error appears.

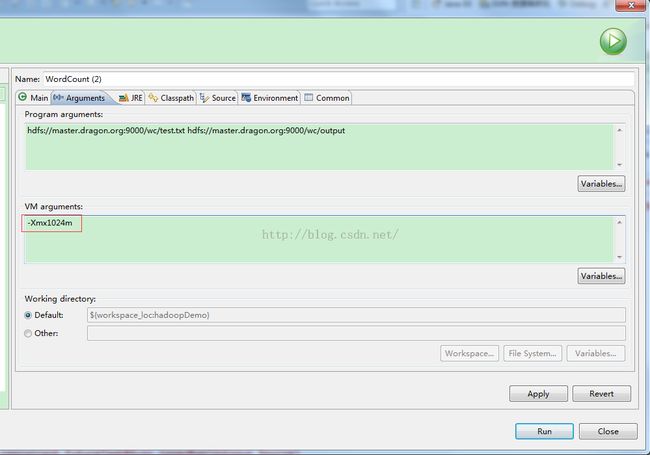

To deal with this, right-click the project and choose Run As --> Run Configurations --> Arguments.

Save and run.
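The contents of the Arguments tab are not reproduced in the text. Judging from the paths that appear in the job log, the program arguments were presumably the HDFS input and output locations. The sketch below shows how a driver might validate two such arguments. `JobArgs` is a hypothetical helper, and the two paths used in `main` are taken from the log output, not from the original dialog.

```java
// Hypothetical helper that holds the two program arguments a WordCount-style
// driver usually expects: <input path> <output path>.
class JobArgs {
    final String input;
    final String output;

    JobArgs(String input, String output) {
        this.input = input;
        this.output = output;
    }

    // Rejects anything other than exactly two arguments.
    static JobArgs parse(String[] args) {
        if (args.length != 2) {
            throw new IllegalArgumentException("Usage: wordcount <in> <out>");
        }
        return new JobArgs(args[0], args[1]);
    }

    public static void main(String[] args) {
        // Assumed example values, copied from the paths in the log. In Eclipse
        // they would go into the "Program arguments" box, separated by whitespace.
        String[] example = {
            "hdfs://master.dragon.org:9000/wc/test.txt",
            "hdfs://master.dragon.org:9000/wc/output"
        };
        JobArgs parsed = parse(args.length == 0 ? example : args);
        System.out.println("input  = " + parsed.input);
        System.out.println("output = " + parsed.output);
    }
}
```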

    16/02/23 15:02:03 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    16/02/23 15:02:03 WARN mapred.JobClient: Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.
    16/02/23 15:02:03 WARN mapred.JobClient: No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).
    16/02/23 15:02:03 INFO input.FileInputFormat: Total input paths to process : 1
    16/02/23 15:02:03 WARN snappy.LoadSnappy: Snappy native library not loaded
    16/02/23 15:02:03 INFO mapred.JobClient: Running job: job_local1337982978_0001
    16/02/23 15:02:03 INFO mapred.LocalJobRunner: Waiting for map tasks
    16/02/23 15:02:03 INFO mapred.LocalJobRunner: Starting task: attempt_local1337982978_0001_m_000000_0
    16/02/23 15:02:03 INFO mapred.Task: Using ResourceCalculatorPlugin : null
    16/02/23 15:02:03 INFO mapred.MapTask: Processing split: hdfs://master.dragon.org:9000/wc/test.txt:0+30
    16/02/23 15:02:03 INFO mapred.MapTask: io.sort.mb = 100
    16/02/23 15:02:03 INFO mapred.MapTask: data buffer = 79691776/99614720
    16/02/23 15:02:03 INFO mapred.MapTask: record buffer = 262144/327680
    16/02/23 15:02:03 INFO mapred.MapTask: Starting flush of map output
    16/02/23 15:02:03 INFO mapred.MapTask: Finished spill 0
    16/02/23 15:02:03 INFO mapred.Task: Task:attempt_local1337982978_0001_m_000000_0 is done. And is in the process of commiting
    16/02/23 15:02:03 INFO mapred.LocalJobRunner:
    16/02/23 15:02:03 INFO mapred.Task: Task 'attempt_local1337982978_0001_m_000000_0' done.
    16/02/23 15:02:03 INFO mapred.LocalJobRunner: Finishing task: attempt_local1337982978_0001_m_000000_0
    16/02/23 15:02:03 INFO mapred.LocalJobRunner: Map task executor complete.
    16/02/23 15:02:04 INFO mapred.Task: Using ResourceCalculatorPlugin : null
    16/02/23 15:02:04 INFO mapred.LocalJobRunner:
    16/02/23 15:02:04 INFO mapred.Merger: Merging 1 sorted segments
    16/02/23 15:02:04 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 61 bytes
    16/02/23 15:02:04 INFO mapred.LocalJobRunner:
    16/02/23 15:02:04 INFO mapred.Task: Task:attempt_local1337982978_0001_r_000000_0 is done. And is in the process of commiting
    16/02/23 15:02:04 INFO mapred.LocalJobRunner:
    16/02/23 15:02:04 INFO mapred.Task: Task attempt_local1337982978_0001_r_000000_0 is allowed to commit now
    16/02/23 15:02:04 INFO output.FileOutputCommitter: Saved output of task 'attempt_local1337982978_0001_r_000000_0' to hdfs://master.dragon.org:9000/wc/output
    16/02/23 15:02:04 INFO mapred.LocalJobRunner: reduce > reduce
    16/02/23 15:02:04 INFO mapred.Task: Task 'attempt_local1337982978_0001_r_000000_0' done.
    16/02/23 15:02:04 INFO mapred.JobClient: map 100% reduce 100%
    16/02/23 15:02:04 INFO mapred.JobClient: Job complete: job_local1337982978_0001
    16/02/23 15:02:04 INFO mapred.JobClient: Counters: 19
    16/02/23 15:02:04 INFO mapred.JobClient: File Output Format Counters
    16/02/23 15:02:04 INFO mapred.JobClient: Bytes Written=23
    16/02/23 15:02:04 INFO mapred.JobClient: File Input Format Counters
    16/02/23 15:02:04 INFO mapred.JobClient: Bytes Read=30
    16/02/23 15:02:04 INFO mapred.JobClient: FileSystemCounters
    16/02/23 15:02:04 INFO mapred.JobClient: FILE_BYTES_READ=401
    16/02/23 15:02:04 INFO mapred.JobClient: HDFS_BYTES_READ=60
    16/02/23 15:02:04 INFO mapred.JobClient: FILE_BYTES_WRITTEN=137384
    16/02/23 15:02:04 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=23
    16/02/23 15:02:04 INFO mapred.JobClient: Map-Reduce Framework
    16/02/23 15:02:04 INFO mapred.JobClient: Map output materialized bytes=65
    16/02/23 15:02:04 INFO mapred.JobClient: Map input records=3
    16/02/23 15:02:04 INFO mapred.JobClient: Reduce shuffle bytes=0
    16/02/23 15:02:04 INFO mapred.JobClient: Spilled Records=10
    16/02/23 15:02:04 INFO mapred.JobClient: Map output bytes=49
    16/02/23 15:02:04 INFO mapred.JobClient: Total committed heap usage (bytes)=366149632
    16/02/23 15:02:04 INFO mapred.JobClient: Combine input records=0
    16/02/23 15:02:04 INFO mapred.JobClient: SPLIT_RAW_BYTES=106
    16/02/23 15:02:04 INFO mapred.JobClient: Reduce input records=5
    16/02/23 15:02:04 INFO mapred.JobClient: Reduce input groups=3
    16/02/23 15:02:04 INFO mapred.JobClient: Combine output records=0
    16/02/23 15:02:04 INFO mapred.JobClient: Reduce output records=3
    16/02/23 15:02:04 INFO mapred.JobClient: Map output records=5
    true
    hello 2
    word 1
    world 2

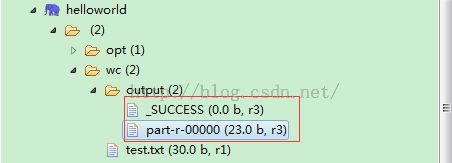

Next, look at the contents of DFS Locations in the Hadoop view in Eclipse.

The circled entry is the output generated after the program finished.

The contents of test.txt are:

word hello

hello world

world
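To sanity-check the result without a cluster, the same counting can be reproduced in plain Java. This is only a sketch of the word-count logic, not the Hadoop job itself. `LocalWordCount` is a hypothetical class, and splitting on whitespace is an assumption about how the mapper tokenizes its input.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical local stand-in for the WordCount job; no Hadoop dependency.
class LocalWordCount {

    // Counts whitespace-separated tokens. A TreeMap keeps the keys sorted,
    // which matches the key order seen in the job output.
    static Map<String, Integer> count(String text) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String token : text.trim().split("\\s+")) {
            if (!token.isEmpty()) {
                counts.merge(token, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Same three lines as test.txt above.
        String input = "word hello\nhello world\nworld\n";
        count(input).forEach((word, n) -> System.out.println(word + " " + n));
    }
}
```

For the three input lines above this yields hello 2, word 1 and world 2, in agreement with the job output.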