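Implementing a Custom Key Type for MapReduce Computations

1. In MapReduce programming, keys are typically used for grouping and sorting. Hadoop's built-in key types may not meet the needs of these operations, and a custom data type optimized for a specific use case may also perform better. In either case, you can define a custom WritableComparable type by implementing the org.apache.hadoop.io.WritableComparable interface, and use it as the key type of the MapReduce computation.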

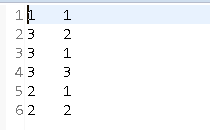

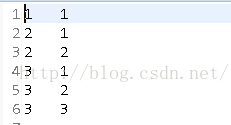


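2. Custom Hadoop key types.

1. Keys in Hadoop MapReduce are compared with one another, and these comparisons are what allow the framework to sort them.

2. Hadoop's Writable key types implement the WritableComparable<T> interface, which extends both Writable and Comparable<T> and therefore adds the compareTo() method.

compareTo() returns one of three kinds of values: a negative integer, zero, or a positive integer, meaning this object is less than, equal to, or greater than the object it is compared with.

3. Hadoop uses HashPartitioner as its default Partitioner implementation. HashPartitioner requires the key object's hashCode() method to satisfy the following two properties:

1. It returns the same hash value for the same key in different JVM instances.

2. It distributes hash values uniformly.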
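To see why these two properties matter, recall that HashPartitioner sends a record to reducer `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`. The sketch below reproduces that arithmetic in plain Java so it runs without Hadoop on the classpath; `PartitionSketch`, `partitionFor` and `PairKey` are hypothetical names used only for illustration. A key whose hashCode() depends only on its field values gets the same partition in every JVM, whereas a class that does not override hashCode() falls back to Object's identity hash, which generally differs between JVM instances.

```java
// Hypothetical stand-in for Hadoop's HashPartitioner: same arithmetic, no Hadoop dependency.
final class PartitionSketch {

    // Mask off the sign bit so a negative hashCode() still yields a non-negative partition index
    static int partitionFor(Object key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    // A key whose hashCode() depends only on its field values, so it is identical in every JVM
    static final class PairKey {
        final long first;
        final long second;

        PairKey(long first, long second) {
            this.first = first;
            this.second = second;
        }

        @Override
        public int hashCode() {
            return 31 * Long.hashCode(first) + Long.hashCode(second);
        }

        @Override
        public boolean equals(Object o) {
            if (!(o instanceof PairKey)) {
                return false;
            }
            PairKey p = (PairKey) o;
            return first == p.first && second == p.second;
        }
    }

    public static void main(String[] args) {
        int reducers = 4;
        // Two equal keys built separately land in the same partition
        System.out.println(partitionFor(new PairKey(3, 5), reducers));
        System.out.println(partitionFor(new PairKey(3, 5), reducers));
        // A negative hash code still maps into [0, reducers)
        System.out.println(partitionFor(Integer.valueOf(-7), reducers));
    }
}
```

The masking step is the reason the modulo result can never be negative, even for keys whose hashCode() is negative.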

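4. The source code of the org.apache.hadoop.io.WritableComparable interface includes an example of a concrete implementation:

```java
import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

public class MyWritableComparable implements WritableComparable<MyWritableComparable> {
    // Some data
    private int counter;
    private long timestamp;

    // Serialize the fields in a fixed order
    public void write(DataOutput out) throws IOException {
        out.writeInt(counter);
        out.writeLong(timestamp);
    }

    // Deserialize the fields in the same order they were written
    public void readFields(DataInput in) throws IOException {
        counter = in.readInt();
        timestamp = in.readLong();
    }

    // Negative, zero or positive: order by counter, then by timestamp
    public int compareTo(MyWritableComparable w) {
        int cmp = Integer.compare(this.counter, w.counter);
        return cmp != 0 ? cmp : Long.compare(this.timestamp, w.timestamp);
    }

    // Value-based hash code, stable across JVMs as HashPartitioner requires
    @Override
    public int hashCode() {
        return 31 * counter + Long.hashCode(timestamp);
    }

    @Override
    public boolean equals(Object o) {
        if (!(o instanceof MyWritableComparable)) {
            return false;
        }
        MyWritableComparable other = (MyWritableComparable) o;
        return counter == other.counter && timestamp == other.timestamp;
    }
}
```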
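write() and readFields() must handle the fields in exactly the same order, because the serialized bytes carry no field names. The following sketch checks this with a round trip through java.io.DataOutputStream and DataInputStream, using only the JDK. `CounterRecord` is a hypothetical class that mirrors the fields and serialization logic of MyWritableComparable but does not implement the Hadoop interface, so it runs without Hadoop on the classpath.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

// Hypothetical stand-in with the same write()/readFields() logic as MyWritableComparable
final class CounterRecord {
    int counter;
    long timestamp;

    void write(DataOutput out) throws IOException {
        out.writeInt(counter);    // 4 bytes
        out.writeLong(timestamp); // 8 bytes
    }

    void readFields(DataInput in) throws IOException {
        counter = in.readInt();    // read back in the order written
        timestamp = in.readLong();
    }

    // Serialize to bytes, then deserialize into a fresh object
    static CounterRecord roundTrip(CounterRecord original) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        original.write(new DataOutputStream(bytes));
        CounterRecord copy = new CounterRecord();
        copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
        return copy;
    }

    // Number of bytes produced by write()
    static int serializedSize(CounterRecord r) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        r.write(new DataOutputStream(bytes));
        return bytes.size();
    }

    public static void main(String[] args) throws IOException {
        CounterRecord r = new CounterRecord();
        r.counter = 42;
        r.timestamp = 1_000_000L;
        CounterRecord copy = roundTrip(r);
        System.out.println(copy.counter + " " + copy.timestamp + " " + serializedSize(r));
    }
}
```

Swapping the two lines in readFields() would still compile but would read garbage, which is why the order has to match write() exactly.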

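5. The following secondary-sort example shows a custom key type in use.

1. Goal of the example: given two columns of data, sort by the first column in ascending order; when two rows have the same value in the first column, sort them by the second column, also in ascending order.

2. Input data:

3. Output data: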
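The target ordering can be illustrated outside MapReduce with a few hypothetical (first, second) pairs, sorted in plain Java by a Comparator built from Comparator.comparingLong and thenComparingLong. In the MapReduce job the same ordering comes from the compareTo() method of the custom key. `SecondarySortDemo`, `sortPairs` and the sample values are made up for this sketch and are not the article's data.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class SecondarySortDemo {

    // Sort pairs ascending by column 0, breaking ties ascending by column 1
    static List<long[]> sortPairs(List<long[]> rows) {
        List<long[]> sorted = new ArrayList<>(rows);
        sorted.sort(Comparator.<long[]>comparingLong(r -> r[0])
                .thenComparingLong(r -> r[1]));
        return sorted;
    }

    public static void main(String[] args) {
        // Hypothetical sample rows
        List<long[]> rows = new ArrayList<>();
        rows.add(new long[] {3, 3});
        rows.add(new long[] {3, 1});
        rows.add(new long[] {1, 2});
        rows.add(new long[] {2, 2});
        for (long[] r : sortPairs(rows)) {
            System.out.println(r[0] + "\t" + r[1]);
        }
    }
}
```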

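6. Implementing the MapReduce program.

6.1 Custom key data type.

```java
/**
 * A custom key type should implement the WritableComparable interface.
 * v2 normally does not take part in sorting; wrapping the original k2 and v2
 * in one class and using it as the new k2 makes both columns sortable.
 */
static class NewKey implements WritableComparable<NewKey> {
    // Primitive longs, so that equals() compares values rather than Long references
    long first;
    long second;

    // Hadoop needs a no-argument constructor to instantiate keys by reflection
    public NewKey() {
    }

    public NewKey(long first, long second) {
        this.first = first;
        this.second = second;
    }

    // Deserialization: convert the binary data in the stream back into a NewKey
    @Override
    public void readFields(DataInput in) throws IOException {
        this.first = in.readLong();
        this.second = in.readLong();
    }

    // Serialization: convert the NewKey into binary data sent over the stream
    @Override
    public void write(DataOutput out) throws IOException {
        out.writeLong(first);
        out.writeLong(second);
    }

    /**
     * Override compareTo() to compare the composite key.
     * It is called when k2 is sorted: ascending by the first column, and
     * ascending by the second column when the first columns are equal.
     * Long.compare avoids the overflow of casting a long difference to int.
     */
    @Override
    public int compareTo(NewKey o) {
        final int cmp = Long.compare(this.first, o.first);
        if (cmp != 0) {
            return cmp;
        }
        return Long.compare(this.second, o.second);
    }

    /**
     * A new key class should also override hashCode() and equals().
     */
    @Override
    public int hashCode() {
        return 31 * Long.hashCode(first) + Long.hashCode(second);
    }

    @Override
    public boolean equals(Object obj) {
        if (!(obj instanceof NewKey)) {
            return false;
        }
        NewKey oK2 = (NewKey) obj;
        return this.first == oK2.first && this.second == oK2.second;
    }
}
```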
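Why not simply return `(int) (this.first - o.first)`? Casting a long difference to int keeps only its low 32 bits, so the result can be zero or have the wrong sign when the two values are far apart, and the subtraction itself can overflow near the limits of long. The JDK-only sketch below contrasts the two approaches; `CompareDemo`, `castCompare` and `safeCompare` are hypothetical names.

```java
final class CompareDemo {

    // Subtract-and-cast: loses the high 32 bits of the difference, so the sign can be wrong
    static int castCompare(long a, long b) {
        return (int) (a - b);
    }

    // Long.compare: always returns a result with the correct sign
    static int safeCompare(long a, long b) {
        return Long.compare(a, b);
    }

    public static void main(String[] args) {
        long small = 0L;
        long large = 1L << 32; // 4294967296, whose low 32 bits are all zero
        System.out.println(castCompare(large, small)); // prints 0: wrongly reports "equal"
        System.out.println(safeCompare(large, small)); // prints 1: correctly reports "greater"
    }
}
```

With castCompare, a sort could treat 4294967296 and 0 as the same key, which would also merge them into one reduce() call.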

6.2 The Mapper function.

```java
static class MyMapper extends Mapper<LongWritable, Text, NewKey, LongWritable> {
    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws java.io.IOException, InterruptedException {
        // Each input line holds two tab-separated columns
        final String[] splited = value.toString().split("\t");
        // Wrap both columns in the composite key so that both take part in sorting
        final NewKey k2 = new NewKey(Long.parseLong(splited[0]), Long.parseLong(splited[1]));
        // v2 carries the second column; the reducer reads both values from k2
        final LongWritable v2 = new LongWritable(Long.parseLong(splited[1]));
        context.write(k2, v2);
    }
}
```
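The map() method assumes every line contains two tab-separated integers. The sketch below isolates that parsing step in plain Java so it can be tried without a cluster. `LineParser.parse` is a hypothetical helper, and returning null for malformed lines is an assumption about how one might handle bad input; the Mapper above would instead throw from Long.parseLong or index past the end of the array, failing the task.

```java
final class LineParser {

    // Split one "first<TAB>second" line into its two columns; null if the line is malformed
    static long[] parse(String line) {
        String[] splited = line.split("\t");
        if (splited.length < 2) {
            return null;
        }
        try {
            return new long[] {Long.parseLong(splited[0].trim()), Long.parseLong(splited[1].trim())};
        } catch (NumberFormatException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        long[] cols = parse("3\t1");
        // In the Mapper these become k2 = NewKey(cols[0], cols[1]) and v2 = cols[1]
        System.out.println(cols[0] + " " + cols[1]);
        System.out.println(parse("not a number") == null);
    }
}
```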

6.3 The Reducer function.

```java
static class MyReducer extends Reducer<NewKey, LongWritable, LongWritable, LongWritable> {
    @Override
    protected void reduce(NewKey k2, Iterable<LongWritable> v2s, Context context)
            throws java.io.IOException, InterruptedException {
        // k2 already holds both columns in sorted order, so write them out as <k3, v3>.
        // Identical input lines share one key, so each distinct pair is written once.
        context.write(new LongWritable(k2.first), new LongWritable(k2.second));
    }
}
```
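Because k2 carries both columns, map outputs whose keys compare as equal are grouped into a single reduce() call. The JDK-only sketch below imitates that sort-and-group step with a TreeMap ordered by the same two-column comparison; it is a simplification of the real shuffle, and `ShuffleSimulation`, `run` and the sample pairs are hypothetical. It makes one consequence visible: duplicate input lines collapse into a single output line, since MyReducer writes once per key.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class ShuffleSimulation {

    // Group (first, second) pairs by the composite key in sorted order, as the shuffle does,
    // then emit one output row per group, as MyReducer does
    static List<String> run(List<long[]> mapOutput) {
        Comparator<long[]> keyOrder = Comparator.<long[]>comparingLong(r -> r[0])
                .thenComparingLong(r -> r[1]);
        TreeMap<long[], List<Long>> groups = new TreeMap<>(keyOrder);
        for (long[] kv : mapOutput) {
            // Collected values play the role of the Iterable<LongWritable> passed to reduce()
            groups.computeIfAbsent(kv, k -> new ArrayList<>()).add(kv[1]);
        }
        List<String> output = new ArrayList<>();
        for (Map.Entry<long[], List<Long>> e : groups.entrySet()) {
            output.add(e.getKey()[0] + "\t" + e.getKey()[1]);
        }
        return output;
    }

    public static void main(String[] args) {
        List<long[]> pairs = new ArrayList<>();
        pairs.add(new long[] {3, 2});
        pairs.add(new long[] {1, 1});
        pairs.add(new long[] {3, 2}); // duplicate line
        pairs.add(new long[] {3, 1});
        run(pairs).forEach(System.out::println);
    }
}
```

If duplicates must be kept, the reducer would have to write one line per element of v2s instead of one line per key.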

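6.4 The main function.

```java
import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
import org.apache.hadoop.mapreduce.lib.partition.HashPartitioner;

public class twoSort {

    final static String INPUT_PATH = "hdfs://192.168.56.171:9000/TwoSort/twoSort.txt";
    final static String OUT_PATH = "hdfs://192.168.56.171:9000/TwoSort/out";

    public static void main(String[] args) throws Exception {
        final Configuration configuration = new Configuration();
        // Delete an existing output directory; otherwise job submission fails
        final FileSystem fileSystem = FileSystem.get(new URI(INPUT_PATH), configuration);
        if (fileSystem.exists(new Path(OUT_PATH))) {
            fileSystem.delete(new Path(OUT_PATH), true);
        }

        final Job job = Job.getInstance(configuration, twoSort.class.getSimpleName());
        // Lets Hadoop locate the jar containing this class when running on a cluster
        job.setJarByClass(twoSort.class);

        // 1.1 Input file path
        FileInputFormat.setInputPaths(job, INPUT_PATH);
        // Class used to parse the input file into <k1, v1> records
        job.setInputFormatClass(TextInputFormat.class);

        // 1.2 Custom Mapper class
        job.setMapperClass(MyMapper.class);
        // Types of the map output <k2, v2>
        job.setMapOutputKeyClass(NewKey.class);
        job.setMapOutputValueClass(LongWritable.class);

        // 1.3 Partitioner class
        job.setPartitionerClass(HashPartitioner.class);
        job.setNumReduceTasks(1);

        // 1.4 Sorting and grouping: by default both use NewKey.compareTo()
        // 1.5 (Optional) combiner: not used here

        // 2.2 Custom Reducer class
        job.setReducerClass(MyReducer.class);
        // Types of the reduce output <k3, v3>
        job.setOutputKeyClass(LongWritable.class);
        job.setOutputValueClass(LongWritable.class);

        // 2.3 Output path
        FileOutputFormat.setOutputPath(job, new Path(OUT_PATH));
        // Class used to format the output files
        job.setOutputFormatClass(TextOutputFormat.class);

        // Submit the job to the cluster and wait for it to finish
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }

    // NewKey, MyMapper and MyReducer (6.1 to 6.3) are static nested classes placed here
}
```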